ASRock Rack 1U10E-ICX2 Internal Hardware Overview

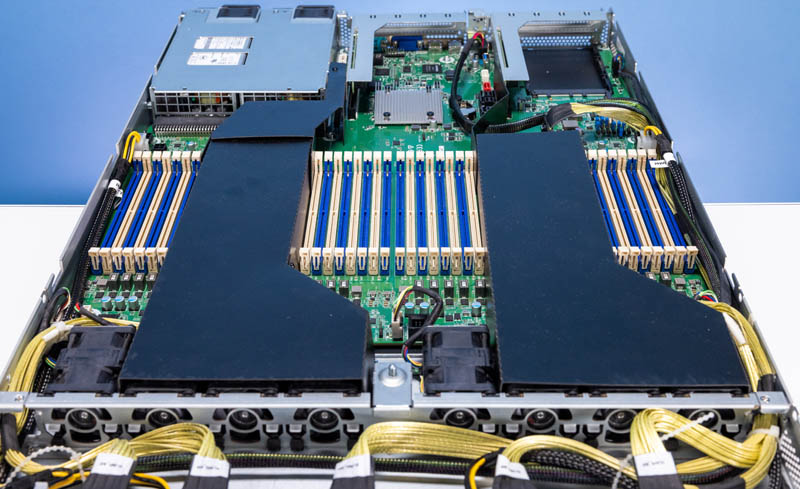

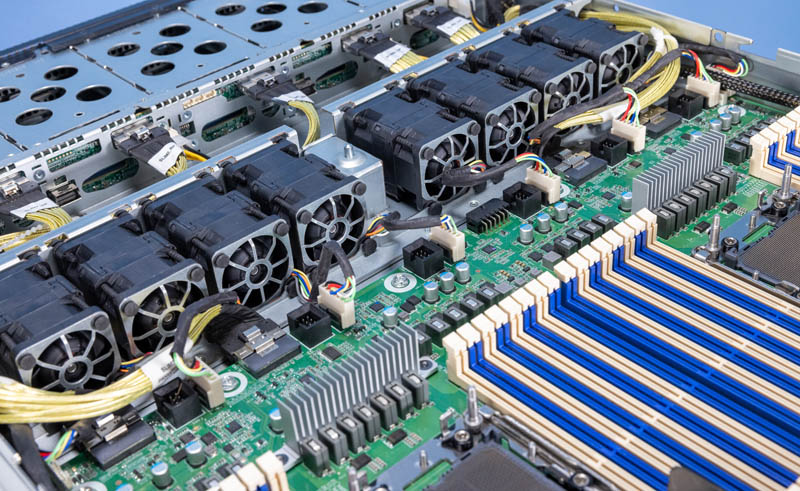

Inside the system, the most striking feature is the airflow guides. Each takes airflow from three fan modules and directs it over a CPU heatsink. There are additional rear guides to divert airflow over specific components.

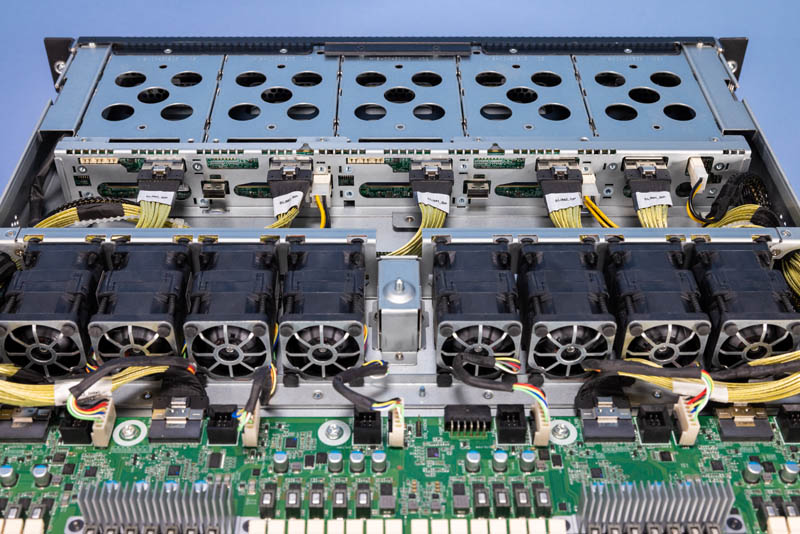

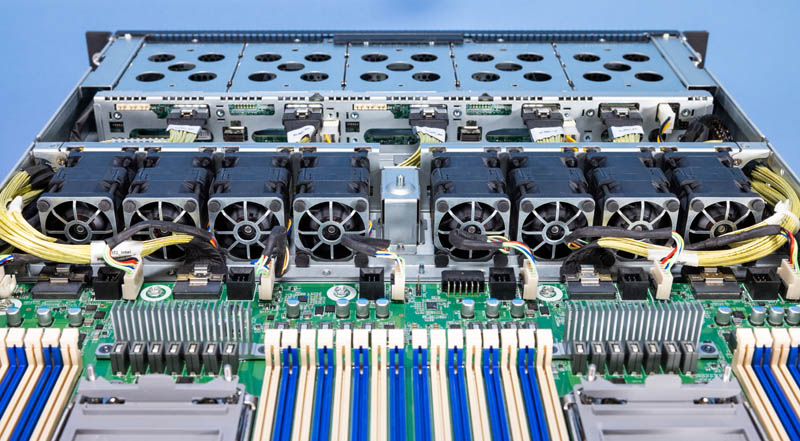

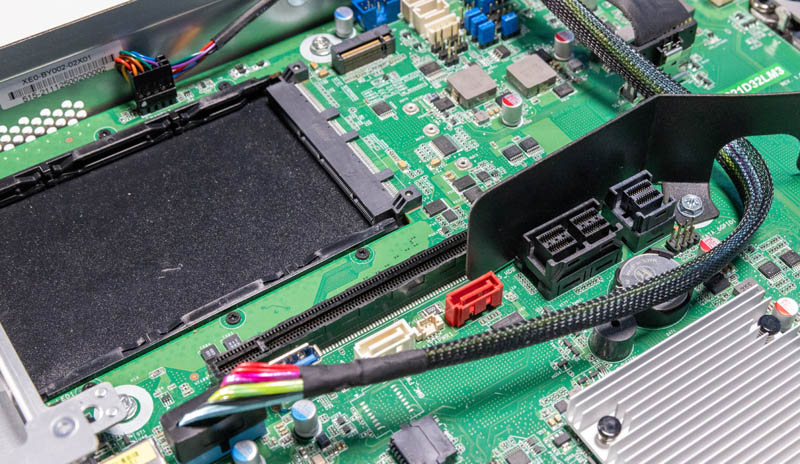

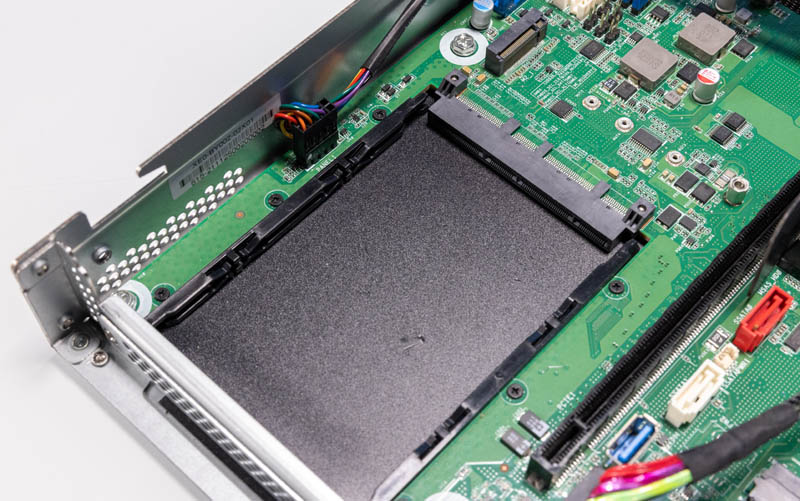

The 10x 2.5″ NVMe drives utilize PCIe Gen4 SlimLine 8i connections. This keeps cabling a bit cleaner with only five connectors and cables versus ten if they all utilized x4 cabling.
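As a quick check of that cable math, here is a minimal sketch. It assumes each NVMe drive uses a PCIe x4 link, which the text implies but does not state outright; the function name `cables_needed` is purely illustrative.

```python
import math


def cables_needed(drives: int, lanes_per_drive: int, lanes_per_cable: int) -> int:
    """Return how many cables are needed to carry every drive's PCIe lanes."""
    total_lanes = drives * lanes_per_drive
    return math.ceil(total_lanes / lanes_per_cable)


# Assumption: each of the 10 front bays is a x4 NVMe device.
x8_cables = cables_needed(drives=10, lanes_per_drive=4, lanes_per_cable=8)
x4_cables = cables_needed(drives=10, lanes_per_drive=4, lanes_per_cable=4)

print(f"x8 cabling: {x8_cables} cables, x4 cabling: {x4_cables} cables")
```

Under that assumption, the ten bays need 40 lanes in total, so x8 cabling halves the connector count compared with one x4 cable per drive.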

Cooling in the system is provided by eight 1U dual fan modules. These use more traditional connectors and are not easy hot-swap units. That is common, however, in 1U servers just due to height restrictions.

One can see that the front of the motherboard has the 1U fan headers but also spots for the 2U version of this system’s hot-swap fans. ASRock Rack also has motherboard PCIe connectors designed to service the front panel with short cable lengths. Many servers bring all PCIe to the rear of the chassis, then use long cables to work through the chassis to the front drive bays. That is a big difference here.

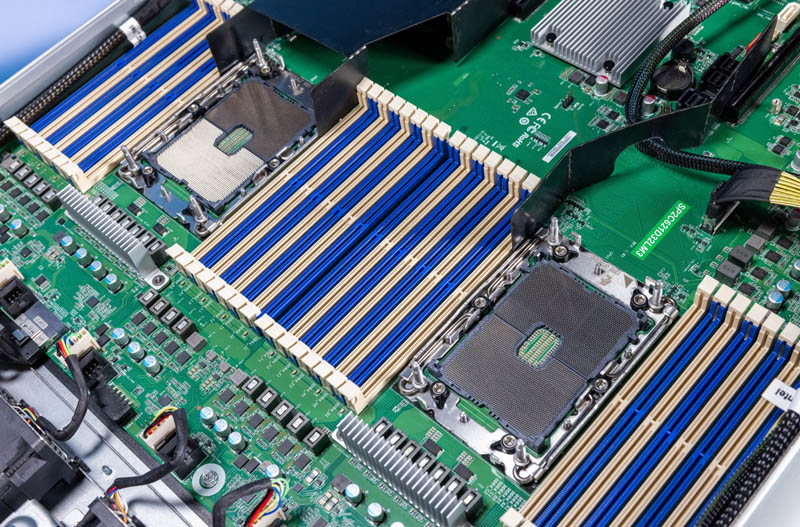

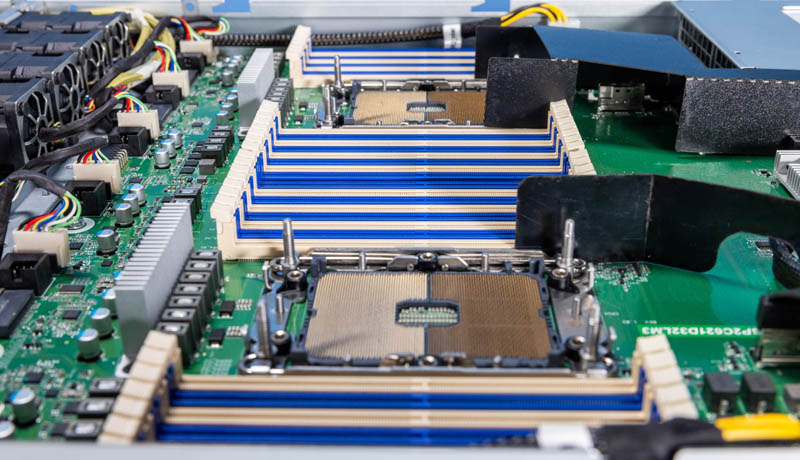

There are two CPU sockets. Each supports a 3rd Gen Intel Xeon Scalable processor, codenamed Ice Lake.

Each CPU gets a total of 16x DIMM slots for 32 total. That means we have 8-channel memory and two DIMMs per channel (2DPC). One can also use Intel Optane PMem 200 series DIMMs if desired for more capacity or fast storage. See our Glorious Complexity of Intel Optane DIMMs for more on that technology.
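The slot count follows directly from the channel layout. A small sketch of that arithmetic, using only the figures quoted above (two sockets, eight channels per CPU, two DIMMs per channel); the helper name `dimm_slots` is hypothetical:

```python
def dimm_slots(sockets: int, channels_per_cpu: int, dimms_per_channel: int) -> tuple[int, int]:
    """Return (slots per CPU, slots in the whole system)."""
    per_cpu = channels_per_cpu * dimms_per_channel
    return per_cpu, per_cpu * sockets


per_cpu, total = dimm_slots(sockets=2, channels_per_cpu=8, dimms_per_channel=2)
print(f"{per_cpu} DIMM slots per CPU, {total} in the system")
```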

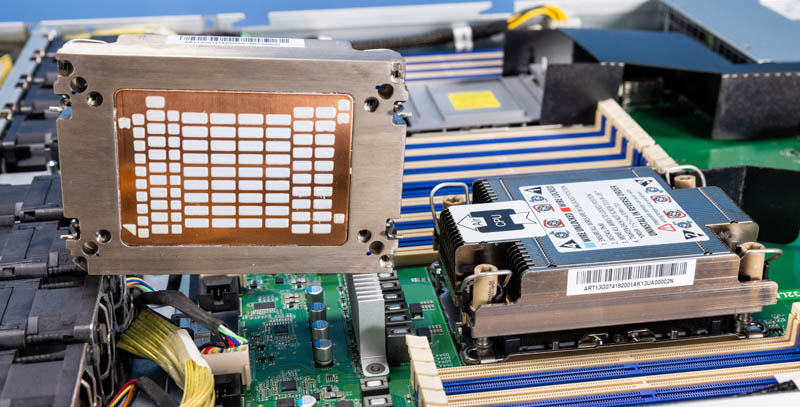

Here is a shot of the 1U heatsinks. It is amazing to see how much smaller these are than the newer generations that are cooling 350W+ of TDP per CPU. Also, this has to be one of the more interesting thermal paste screening patterns we have seen.

Behind the CPUs, we have the Lewisburg PCH. One item we wanted to show quickly is that there is a hidden PCIe x16 slot on the motherboard next to the x24 slot that the x16 riser is plugged into. We wish ASRock Rack had an option with an extra M.2 drive or two for this unused slot.

Next to the PCH is an ASPEED AST2500 BMC.

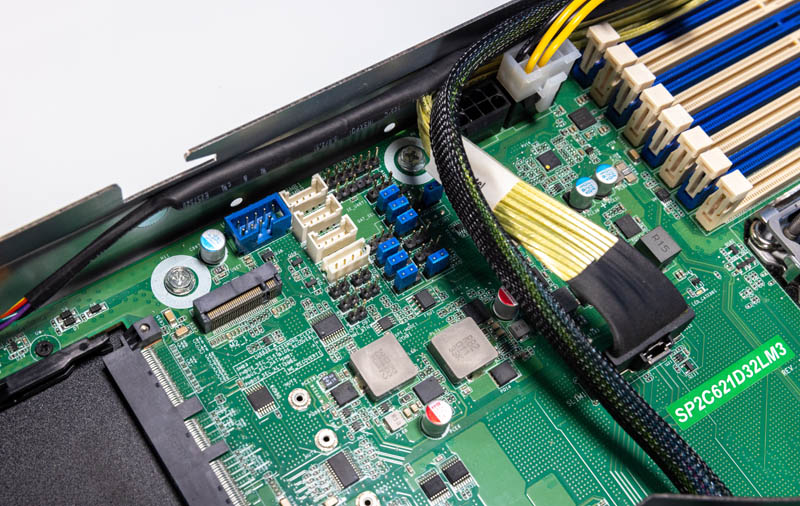

Above that we have a USB 3 Type-A header, two SATA ports, and then additional SFF-8643 SATA connectivity from the chipset. These SATA ports are not being used in this system.

Above that, we have the OCP NIC 3.0 slot that is PCIe Gen4 x16 as well as a Gen3 x4 M.2 slot. It would have been nice to have dual M.2 for mirrored boot.

As a fun little feature of the motherboard, we have a group of jumpers and headers between the memory and the M.2 slot. It is unlikely you will need these, but they are neatly grouped in one spot instead of strewn about the motherboard, as we have seen on other systems.

We did not use this feature, but ASRock Rack also has GPU power connectors onboard that can be used for GPU power or to power storage.

Something that is really interesting here is that the motherboard is the ASRock Rack SP2C621D32LM3. This is shared between this 1U server as well as the company’s 2U platform. ASRock Rack has made a lot of standard form factor motherboards (mITX, mATX, ATX, EATX, and so forth), but this is a newer proprietary form factor. These days, to fully utilize a platform in a rackmount server, old consumer motherboard standards no longer have enough space. That is great to see from the company.

As one can tell by the caption above, the least favorite part was the pin that held the rear airflow guide in place. It was difficult enough to remove that we did not want to break it taking photos before we got the system running.

Next, we are going to get to the topology, management, and performance.

Regarding the extra PCIe x16 slot:

Maybe one of these fits:

https://www.asrockrack.com/general/productdetail.asp?Model=RB1U2M2_G4

https://www.asrockrack.com/general/productdetail.asp?Model=RB1U4M2_G4

If you aren’t going to throw out your servers/workstations every 8 to 12 months to buy new ones, stay far away from ASRock. Their support is awful. It is almost like they are advised against providing any form of service for products older than a year. A huge part of buying these products at their high prices is being able to use them as long as possible. We have had so many ASRock servers, and now server boards, die over the past 2 years, especially their EPYC line. God-awful company.