Getting a Sense of Relative Performance

The Arm world is, well, interesting. We were asked to use GeekBench to compare ThunderX2 VMs against Raspberry Pi 4s, combining the single-core and multi-core scores into a geometric mean. Things got a little strange when we fulfilled this request, since we could not get a direct Raspberry Pi 4 Android score, but we could get a Raspberry Pi 4 Linux score.
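To make the geomean step concrete, here is a minimal sketch of how a single-core and a multi-core score can be collapsed into one number and then compared between two systems. The function names and the scores used are hypothetical and for illustration only; they are not the GeekBench results behind this article.

```python
from statistics import geometric_mean


def combined_score(single_core: float, multi_core: float) -> float:
    """Collapse single- and multi-core GeekBench scores into one geomean."""
    return geometric_mean([single_core, multi_core])


def relative_performance(system_a: tuple[float, float],
                         system_b: tuple[float, float]) -> float:
    """Speed of system A relative to system B, using the geomean of both scores."""
    return combined_score(*system_a) / combined_score(*system_b)


# Hypothetical (single-core, multi-core) scores, NOT measured results.
vm = (400.0, 1600.0)
pi = (200.0, 800.0)
print(round(combined_score(*vm)))              # 800
print(round(relative_performance(vm, pi), 3))  # 2.0
```

A geometric mean keeps one very large multi-core number from dominating the combined figure the way an arithmetic mean would.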

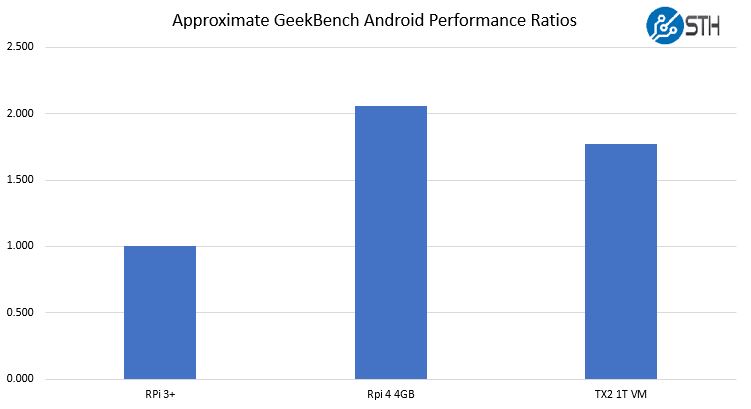

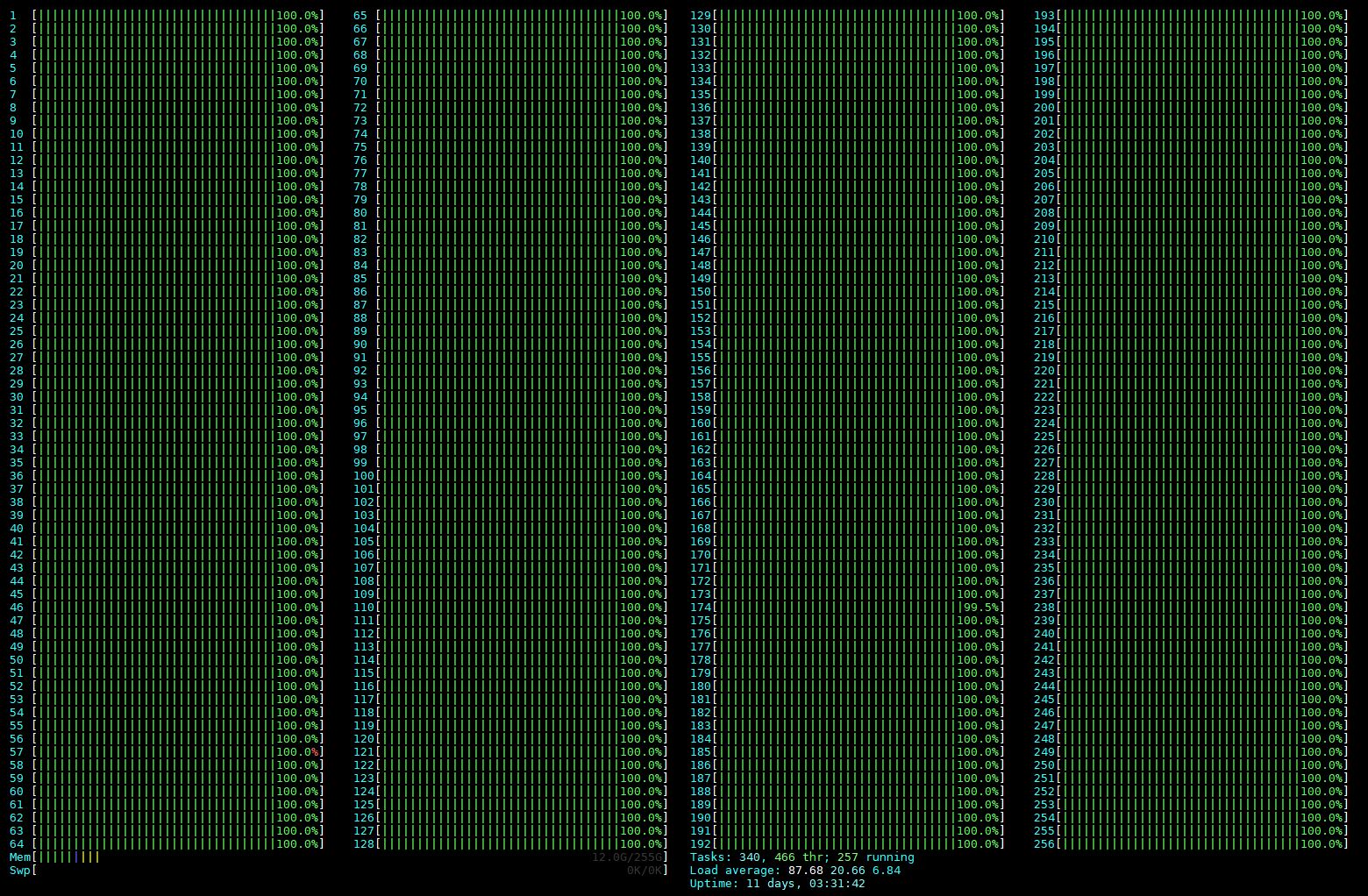

What we essentially did, and the company we did this for thought it was close enough to be valid, was to take the Raspberry Pi 3+ and Raspberry Pi 4 4GB Linux scores. We found that the Raspberry Pi 4 4GB was over twice the speed of its predecessor. We then ran GeekBench 2 and 3 on the Raspberry Pi 3+ and on single-thread ThunderX2 VMs hosted on a server with dual 32-core / 128-thread parts. This meant we could have up to 256 of these VMs per machine; however, one generally does not want to fully load a server with VMs, so that some room is left for local tasks. The single-thread ThunderX2 VM was just under twice the speed of a Raspberry Pi 3+ in Android. The ratios looked something like this:

The net impact is that a single ThunderX2 thread was running about 86% as fast as a Raspberry Pi 4 4GB. That came out to just over 220 RPi 4’s worth of performance per dual 32-core Marvell ThunderX2 server.
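The 220 figure is simple multiplication: two sockets of 128 threads give 256 possible single-thread VMs, each worth about 0.86 of a Raspberry Pi 4 4GB. The sketch below reproduces that arithmetic using only the numbers quoted above; the function name is our own.

```python
# Figures quoted in the text (not re-measured here).
SOCKETS = 2
THREADS_PER_SOCKET = 128    # 32 cores x 4 SMT threads on each ThunderX2
TX2_THREAD_VS_PI4 = 0.86    # one ThunderX2 thread ~ 86% of a Pi 4 4GB


def pi4_equivalents(sockets: int, threads_per_socket: int, ratio: float) -> float:
    """Pi 4 equivalents if every hardware thread backs one single-vCPU VM."""
    max_vms = sockets * threads_per_socket  # 256 single-thread VMs at most
    return max_vms * ratio


print(f"{pi4_equivalents(SOCKETS, THREADS_PER_SOCKET, TX2_THREAD_VS_PI4):.1f}")  # 220.2
```

The 0.86 ratio is itself derived by chaining the two Pi 3+ comparisons (ThunderX2 thread vs. Pi 3+ divided by Pi 4 vs. Pi 3+), which is why it is only an approximation.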

Using 220 felt wrong in both directions. First, a key principle of virtualization is overprovisioning CPU resources: not all VMs will be busy at all times. Larger virtual machine hosts help administrators balance workloads and oversubscribe vCPUs. That is Virtualization 101, and by that logic we should be using more than 220. The counterpoint is that if all of the VMs run at full speed simultaneously, 220 is too many. The VM host needs some resources, and running a machine at 100% is, also per Virtualization 101, a bad idea.

We are going to use 190 VMs as our number. We first took 10% off the number of VMs, which got us to 198. At 4GB of memory per VM, 198 VMs would need 792GB of total RAM. That was close to the 768GB of memory we could get in a system (192x 4GB), and we then left 8GB for the host. That got us to 190 4GB VMs to replace 4GB Raspberry Pi 4s. This number also fits nicely into 8x 24-port or 4x 48-port PoE switches.
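The sizing logic above takes the smaller of a CPU-based limit and a RAM-based limit. Here is a minimal sketch of that calculation with the figures from the text; the function names are hypothetical, and the switch count assumes one PoE port per Raspberry Pi.

```python
import math


def size_vms(pi4_equivalents: int, headroom_pct: int, system_ram_gb: int,
             host_reserve_gb: int, vm_ram_gb: int) -> int:
    """VM count after CPU headroom, capped by RAM left after the host reserve."""
    cpu_limited = pi4_equivalents * (100 - headroom_pct) // 100   # 220 -> 198
    ram_limited = (system_ram_gb - host_reserve_gb) // vm_ram_gb  # (768 - 8) // 4 -> 190
    return min(cpu_limited, ram_limited)


def switches_needed(ports: int, ports_per_switch: int) -> int:
    """PoE switches needed so that every Pi gets its own port."""
    return math.ceil(ports / ports_per_switch)


vms = size_vms(220, 10, 768, 8, 4)
print(vms)                       # 190
print(switches_needed(vms, 24))  # 8
print(switches_needed(vms, 48))  # 4
```

Memory ends up as the binding constraint: 198 VMs at 4GB each would need 792GB, more than the 768GB system can hold.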

One could argue that we could over-provision memory, but since we are not doing that on the CPU side, it felt like we should not be doing that on the memory side. Now that we have performance and RAM capacity targets for the ThunderX2 server, all that is left is coming up with the ThunderX2 system cost and doing a comparison to our Raspberry Pi node cost.

Marvell ThunderX2 System Pricing

We are told that the equivalent of the Gigabyte server we used in our Cavium ThunderX2 Review, loaded with RAM and bought from a lower-margin reseller, prices out at around $9000. We are going to add another $2200 for four 10TB hard drives and a 100GbE NIC.

One could argue that we do not need the 100GbE NIC if we are strictly comparing to the 190 Raspberry Pi 4’s, but we should have this capability. The 10TB hard drives give us more raw and usable capacity than the 24TB FreeNAS NAS we priced into our nodes. If we were building our own system, we would use SSDs, but we are using hard drives here.

We are also adding $300 for “extra bits.” That includes a management network port, a provisioning port, an upstream 100GbE port, and any other needed parts.

We are putting the total cost at $11500. That will vary based on the discount you can get, but it seems reasonable.
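As a quick check, the line items above sum to the quoted total. The per-VM division below is our own arithmetic on those figures, covering hardware acquisition only; it is a sketch, not a number from the original comparison.

```python
# Line items as quoted in the text (USD); real prices vary with discounts.
COSTS = {
    "Gigabyte ThunderX2 server with RAM": 9000,
    "4x 10TB HDD + 100GbE NIC": 2200,
    "extra bits (network ports, misc.)": 300,
}
VMS = 190  # VM count from the sizing step

total = sum(COSTS.values())
print(total)                          # 11500
print(f"${total / VMS:.2f} per VM")   # $60.53 per VM
```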

Now that we have all of this, it is time to put it together and compare the costs.

We’ve done something similar to you using Packet’s Arm instances and AWS Graviton instances. We were closer to 175-180, but our per-RPi4 costs were higher because we did not use el cheapo switches like you did, and we assumed a higher-end case and heatsinks.

What about HiSilicon and eMAG?

Well, I guess using a single-OS-instance server also simplifies a lot of CI/CD workflows, since you do not need to work out all the clustering trickiness; you just run on one single server, like you would on a single RPi4. There is no need to cut a beautiful server into hundreds of small VMs only to turn them back into a cluster later. Sure, this is needed for the price calculation, but in real life it just adds more trouble. Your TCO calculation would therefore add even more points in favor of the single server, since you save on the effort of keeping a cluster of RPis up and running.

That’s a Pi 3 B+ in the picture with the PoE HAT, not a Pi 4.

Maybe a better cluster option: BitScope delivers power through the header pins and can use busbars to power a row of 10 blades. For the Pi 3 clusters I built, it was $50 per blade (4 Pis) and $200 for a 10-blade rack chassis.

Hi Patrick,

Thank you for the interesting article. In my opinion, to compare single-box vs. scale-out alternatives, one needs to consider the proper scaling unit. I would try not the RPi 4 but the OdroidH2+, as it offers more RAM and an SSD with much higher IO throughput (even with virtualization to get the thread count up).

By my rough count, the following two alternatives should be at least comparable in TCO to the ThunderX2 option:

A) 24x OdroidH2+ with 32GB RAM and a 1TB NVMe SSD (360 USD per SBC) + 1x 24-port switch (no PoE) + 400W DC power supply

B) 48x OdroidH2+ with 16GB RAM and a 500GB NVMe SSD (240 USD per SBC) + 2x 24-port switches (no PoE) + 800W DC power supply

Regards,

Milos

Hi Milos – the reason we did not use that OdroidH2+ is that it is x86. We were specifically looking at Arm on Arm here.