This morning, Ampere is announcing an update to its roadmap. The company now has a 512-core Ampere AmpereOne Aurora processor coming, which Ampere says is designed for cloud-native AI computing. As a quick note, we are going to have three pieces today. First, this one covers the higher-level roadmap items. Second, we will have an update on pricing and OEMs for the AmpereOne lineup of up to 192 cores. Finally, we will have a deeper dive into the AmpereOne architecture and projected performance.

Ampere AmpereOne Aurora 512 Core AI CPU Announced

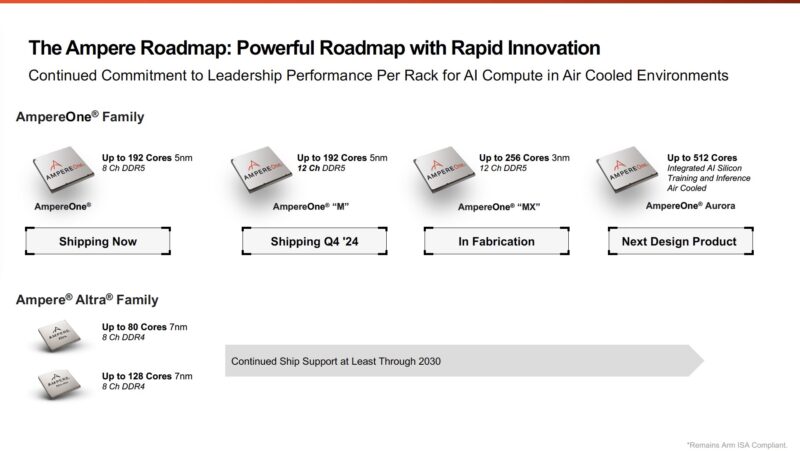

Ampere AmpereOne is currently shipping with up to 192 cores on 5nm. In Q4 2024, we will have the AmpereOne M series that will still have up to 192 cores but be built for the company’s 12-channel DDR5 platform that STH first saw at Computex 2023. That 12-channel platform will hit 256 cores sometime in 2025.

Just to put that into perspective, the 12-channel AmpereOne M and AmpereOne MX will likely be in the market around the same time as the AMD EPYC “Turin Dense” 192-core/384-thread part and the 288-core/288-thread Intel Sierra Forest-AP. Notably, AMD and Intel list prices are much higher than Ampere's, since AMD and Intel both quote enterprise pricing. Ampere's pricing appears to be closer, at least, to Intel and AMD's cloud price lists, which are small fractions of what is quoted on their websites for the enterprise market. The next piece today will go into AmpereOne pricing.

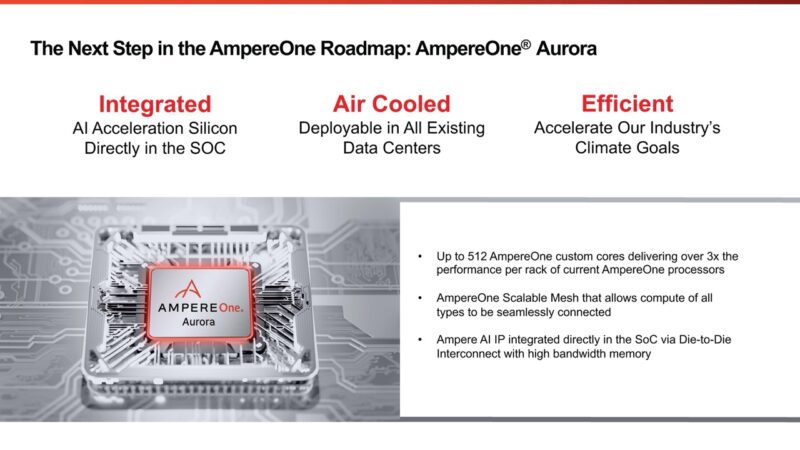

The big one on the roadmap is AmpereOne Aurora, a 512-core part. Ampere says it will have 3x the performance per rack of current AmpereOne processors, but that is hard to translate into per-chip gains, since performance per rack also depends on power consumption.
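To see why a per-rack figure does not map cleanly onto per-chip gains, here is a minimal sketch that assumes a rack limited purely by its power budget. The rack budget, wattages, and normalized performance numbers are all hypothetical, not Ampere figures, and `perf_per_rack` is an illustrative helper. The point is only that 1.5x, 3x, or 6x per-chip performance can all land at the same 3x per rack once power per chip changes how many chips fit.

```python
import math


def perf_per_rack(perf_per_chip: float, watts_per_chip: float, rack_budget_w: float) -> float:
    """Throughput of a power-limited rack: chips that fit times per-chip performance.

    Simplifying assumption: only CPU power counts against the rack budget.
    """
    chips = math.floor(rack_budget_w / watts_per_chip)
    return chips * perf_per_chip


RACK_BUDGET_W = 12_000  # hypothetical rack power budget, in watts

# Hypothetical baseline: normalized per-chip performance of 1.0 at 400 W per chip
baseline = perf_per_rack(1.0, 400, RACK_BUDGET_W)

# Three hypothetical chips that all reach 3x performance per rack
scenarios = {
    "1.5x per chip at 200 W": perf_per_rack(1.5, 200, RACK_BUDGET_W),
    "3x per chip at 400 W": perf_per_rack(3.0, 400, RACK_BUDGET_W),
    "6x per chip at 800 W": perf_per_rack(6.0, 800, RACK_BUDGET_W),
}

for label, rack_perf in scenarios.items():
    print(f"{label}: {rack_perf / baseline:.1f}x per rack")
```

A real rack also spends power on memory, networking, storage, and cooling, so actual numbers will not divide this neatly.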

The built-in AI acceleration and HBM integration on the SoC are important. Ampere described this to us as analogous to integrating floating point units: decades ago, those were separate chips. AI has become a big enough domain that it will simply be integrated. Notably, Ampere is targeting both AI training and inference with this future chip.

Ampere did not give a process node for Aurora. At the same time, Aurora appears to integrate multiple chiplets onto Ampere's scalable mesh, and the company was on stage at the Intel Foundry event earlier this year. It would be interesting if Aurora were built on Intel 18A or packaged by IFS. If Intel executes with Clearwater Forest on 18A, it is going to be more challenging for the Arm folks if they are using older process nodes. Historically, even a small Intel process node advantage has been enough to put a lot of pressure on Arm chips.

Final Words

This is the first of a few announcements that Ampere is making today, but it is a big one. In the coming years, we are going to see the company come out with a 512-core part with heavy AI integration and integrated off-compute-die memory.

Hopefully, we see these parts sooner rather than later. Next up, however, we want to see AmpereOne in the lab.

:/

More and more AI bullshit.

Please make it stop. (not you Patrick, but the industry!)

I hope this AI bubble bursts sooner rather than later. It is going off the rails.

Can't see them succeeding broadly. Each hyperscaler has their own Graviton/Maia/Axion. Ampere overpromised and delivered late in the past. And e-core Intel/small-core AMD parts are likely not much more expensive in volume pricing, with comparable memory speeds and inference perf.

Checking the Top500 list, the highest-ranked ARM supercomputer has 7,630,848 cores, compared with ~180K x86 cores in its neighbor in the ranking, so about 42x as many cores. Divide by two cuz you don't like the math, and that's 512/21 = 24 x86 cores of performance.
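Spelled out, the back-of-envelope arithmetic in the comment above runs as follows. It compares raw core counts only, so it implicitly assumes the two neighboring systems deliver similar total performance and ignores per-core throughput and any accelerators. The ~180K figure is the commenter's approximation.

```python
# Figures as quoted in the comment (Top500 core counts)
arm_cores = 7_630_848         # highest-ranked Arm system
x86_neighbor_cores = 180_000  # approximate core count of the neighboring x86 system

core_ratio = arm_cores / x86_neighbor_cores  # about 42.4x as many cores
halved = round(core_ratio / 2)               # the "divide by two" step, rounded to 21
x86_equivalent = 512 / halved                # 512 Arm cores / 21, about 24.4

print(f"ratio={core_ratio:.1f}, halved={halved}, 512-core equivalent={x86_equivalent:.1f}")
```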

I don't know how Oracle or Amazon are able to claim ~40% better price/performance using ARM versus x86, when AmpereOne CPUs cost way more than x86 CPUs on a price/performance basis.

@Name

What's more, Graviton 4 is a full ARMv9.x design with SVE2 operating on 4×128-bit FP per core. Ampere's core is ARMv8.x without any SVE, just NEON, with 2×128-bit FP per core. For any HPC/AI use, Ampere's performance will be way lower; that's why they are planning on introducing dedicated AI hardware.
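To put the pipe counts in the comment above into numbers, here is a minimal sketch of theoretical peak FP64 throughput per core per clock. `peak_fp64_flops_per_cycle` is an illustrative helper, and it assumes every 128-bit pipe can issue one fused multiply-add (counted as 2 FLOPs) per FP64 lane each cycle. It ignores clock speed, memory bandwidth, and whether real code keeps all pipes busy.

```python
def peak_fp64_flops_per_cycle(pipes: int, vector_bits: int) -> int:
    """Theoretical peak FP64 FLOPs per cycle per core.

    Assumes each pipe issues one fused multiply-add (2 FLOPs) per FP64 lane per cycle.
    """
    lanes = vector_bits // 64  # FP64 elements per vector
    return pipes * lanes * 2


# Pipe counts and widths as described in the comment
graviton4 = peak_fp64_flops_per_cycle(pipes=4, vector_bits=128)  # SVE2, 4x128-bit
ampereone = peak_fp64_flops_per_cycle(pipes=2, vector_bits=128)  # NEON, 2x128-bit

print(graviton4, ampereone, graviton4 / ampereone)
```

Under those assumptions, that is a 2x per-core, per-clock gap in peak vector FP64.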

However, it's not as simple as that, since a lot of software enablement and support has to happen before custom AI hardware is usable with the most popular frameworks. That's a lot of work ahead of them.

@Rob

The Fugaku supercomputer uses a 2019 ARM design, and a very customized one at that: it uses SVE operating on 2x 512-bit FP with predicate support. It's not really representative of 2024 ARM.

Phoronix provides benchmarks of new server ARM designs, and on Chips and Cheese you can find analysis of the cores themselves, most of them anyway.

@Rob Fugaku is a pure-CPU supercomputer, whereas the other supercomputers make heavy use of GPUs. Add the GPU cores to the count, and the numbers are not so different.