A Word on Power Consumption

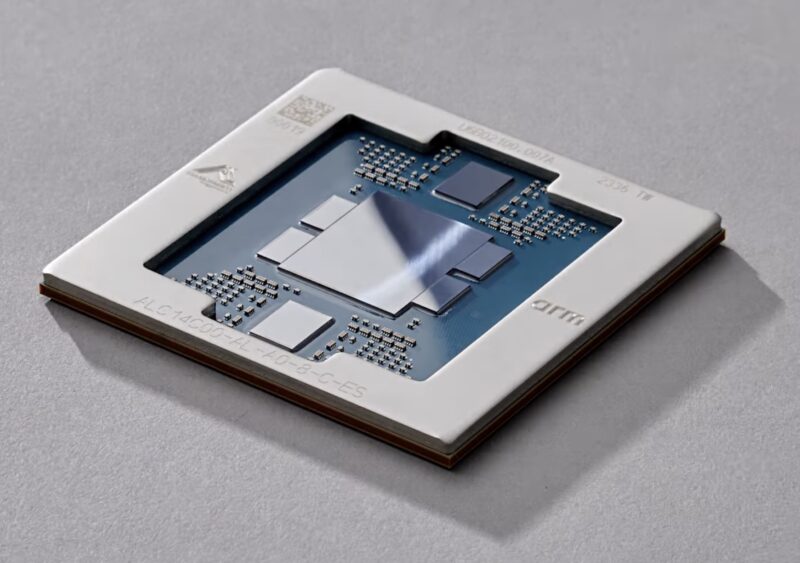

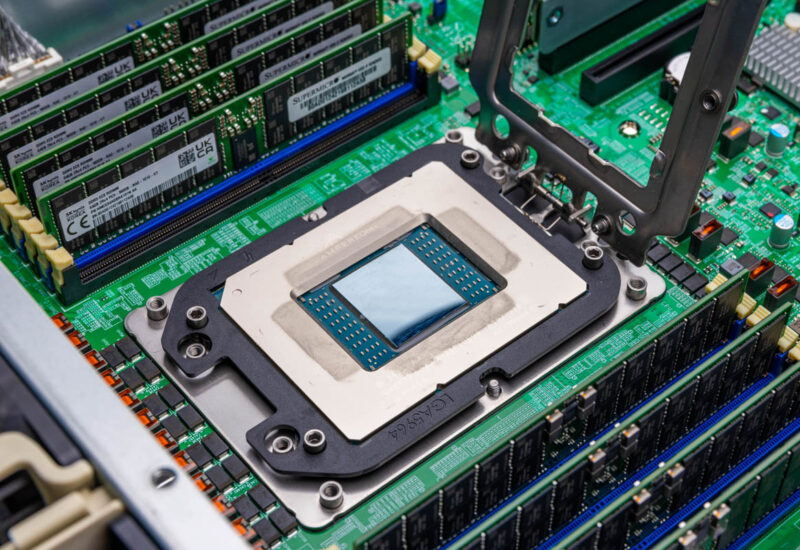

We went into detail on the power consumption of the AmpereOne platform we are using in the Supermicro MegaDC ARS-211M-NR Review. The big takeaway is that idle power consumption was fairly high compared to a Xeon 6700E platform or an AMD EPYC 9005 platform. It was not 10-20W more, but more like 70W+ higher, which is very notable on a single-socket system.

Under full load with the 400W AmpereOne A192-32A, the AMD EPYC Turin 9965 would use more power, but less than 100W more. The Intel Xeon 6780E is simply a lower-power platform at 330W TDP. There are probably two ways to look at this. First, AMD and Intel have largely closed the performance per watt gap with Ampere. On the other hand, AmpereOne as a 2022-2023 part would have been way ahead. Its big challenge is that it hit general availability outside cloud providers in 2024, so it faces a different set of competitors. If you want a bit more detail on the power consumption, check the system review.

Key Lessons Learned: Competition

At this point, I think we should talk about competition for our Key Lessons Learned.

Key Lessons Learned: Intel Competition

First off, the Intel Xeon 6700E is looking very good. Intel is competitive on a performance basis: its E-cores are at least in the same ballpark as the AmpereOne cores. We might give the edge to AmpereOne, but that would be shortsighted. For now, the big win for Ampere is that it has 192 cores, whereas the Intel Xeon 6700E is limited to 144 cores. Remember, these chips are about placing as many small customer instances (<8 vCPUs) per socket as possible. Ampere has more cores, so it wins there. Still, Intel has largely closed the gap.
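To make the core-count argument concrete, here is a minimal sketch of how many fixed-size instances fit on one socket. It assumes one vCPU maps to one physical core (no SMT) and ignores any cores a host or hypervisor would reserve; `instances_per_socket` is a hypothetical helper, not any cloud provider's scheduler.

```python
# Back-of-envelope instance packing, assuming one vCPU per physical core
# and no cores held back for the host/hypervisor (both simplifications).

def instances_per_socket(cores: int, vcpus_per_instance: int) -> int:
    """Whole instances that fit when each vCPU is pinned to one core."""
    return cores // vcpus_per_instance

# Core counts from the text above.
CORE_COUNTS = {
    "AmpereOne A192-32A": 192,
    "Intel Xeon 6780E": 144,
}

for vcpus in (2, 4, 8):
    for name, cores in CORE_COUNTS.items():
        print(f"{vcpus}-vCPU instances on {name}: {instances_per_socket(cores, vcpus)}")
```

Under these assumptions, the 48-core difference works out to six more 8-vCPU instances per socket (24 versus 18).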

On the flip side, the Intel Xeon 6766E is fascinating. That 250W TDP part has a SPEC CPU2017 int_rate score of around 1320 in dual-socket configurations, or around 660 per CPU, versus 702 for AmpereOne at 400W. Again, these results use different compilers. Still, giving up 6% performance for a 150W lower socket TDP is going to be worth it for many folks. Intel has done a good job of closing the power/performance gap.
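The arithmetic behind that trade-off can be sketched using only the figures quoted above. This treats TDP as the power figure, which is a design limit rather than measured wall power, and inherits the caveat that the two scores come from different compilers, so it is a rough estimate rather than a benchmark result.

```python
# Rough SPECrate2017_int comparison using only the figures quoted in the text.
# TDP is a design limit, not measured power, and the scores use different
# compilers, so treat these as back-of-envelope numbers.

XEON_6766E_2P_SCORE = 1320   # "around 1320" in dual-socket configurations
XEON_6766E_TDP_W = 250       # per-socket TDP
AMPEREONE_1P_SCORE = 702     # AmpereOne, single socket
AMPEREONE_TDP_W = 400        # per-socket TDP

xeon_per_socket = XEON_6766E_2P_SCORE / 2  # ~660 per CPU
perf_shed_pct = (1 - xeon_per_socket / AMPEREONE_1P_SCORE) * 100
tdp_saved_w = AMPEREONE_TDP_W - XEON_6766E_TDP_W

# Score per watt of TDP for each socket.
xeon_score_per_w = xeon_per_socket / XEON_6766E_TDP_W
ampere_score_per_w = AMPEREONE_1P_SCORE / AMPEREONE_TDP_W

print(f"Per-socket score: {xeon_per_socket:.0f} vs {AMPEREONE_1P_SCORE}")
print(f"Performance given up: {perf_shed_pct:.1f}% for {tdp_saved_w} W less TDP")
print(f"Score per TDP watt: {xeon_score_per_w:.2f} vs {ampere_score_per_w:.3f}")
```

On these inputs, the Xeon 6766E delivers roughly 50% more score per TDP watt (about 2.64 versus 1.755).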

Perhaps the biggest factor is cost. AmpereOne at 192 cores has less than half the list price of the Intel Xeon 6780E. Intel needs to redo its pricing and discounting strategy because it now looks odd.

We know that AmpereOne M is coming with 256 cores and 12-channel DDR5. We also know Intel will have Sierra Forest-AP at 288 cores with 12-channel DDR5. Intel should end up very competitive here, but at a higher cost. Perhaps the strangest part is that Clearwater Forest is the generation where we would expect Intel to see more traction with its cloud-native processor line.

Key Lessons Learned: AMD Competition

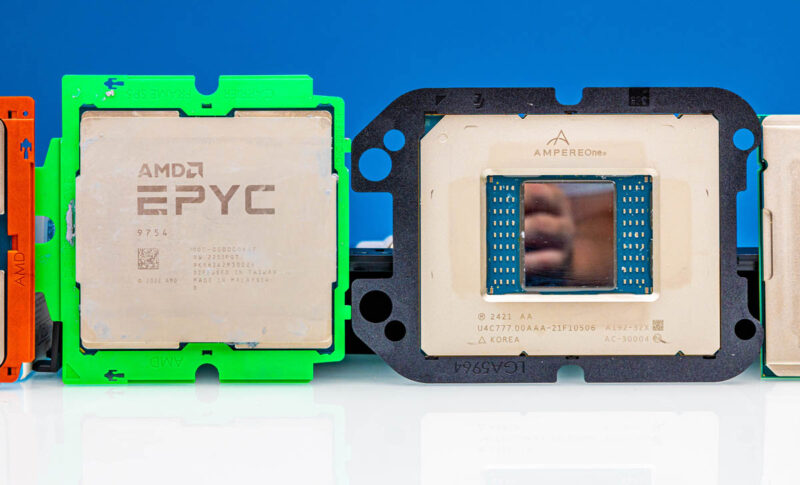

AMD’s big chips have higher list prices, but the AMD EPYC 9005 “Turin” series is very good. Perhaps there is a good reason AmpereOne looks behind here. Our sense is that AmpereOne was really supposed to be an AMD EPYC 9754 “Bergamo” generation competitor rather than a Turin Dense competitor. If we remember that Ampere was shipping AmpereOne to customers like Oracle Cloud in 2023, then that makes a lot more sense. The 8-channel AmpereOne was not designed to compete with the 192-core/384-thread Turin Dense design.

As with Intel, AMD’s Turin list prices are much higher than AmpereOne’s. Still, it would be hard to stand behind an assertion that AMD or Intel is not competitive in this space at this point. That is likely because the real comparison for this generation will be AmpereOne M.

Key Lessons Learned: NVIDIA Competition

NVIDIA is the wildcard here. We did a piece called The Most Important Server of 2022: The Gigabyte Ampere Altra Max and NVIDIA A100, which also got its own GTC session. Now, if you want to attach an NVIDIA GPU to an Arm CPU, it is most likely going to be an NVIDIA Arm CPU.

One could argue that this is bad for Ampere, but it is probably a good thing. NVIDIA has the AI product that is hot in the market right now, and it will use that leverage to push folks to Arm. The Grace architecture is a decent alternative to P-core x86 CPUs, especially lower core count parts. NVIDIA is not playing in the high core count cloud-native space, even with its 144-core Grace Superchip.

We do not see a market for AmpereOne in high-end HGX B100/HGX B200 training or inferencing systems. At the same time, as NVIDIA pushes Arm on its customers and ecosystem, some of the best-optimized applications for Arm right now are things like the web servers that AmpereOne is targeting.

The fact is that if you want on-prem Arm, you are buying either NVIDIA or Ampere, and both vendors target opposite ends of the performance per core spectrum.

Key Lessons Learned: Cloud Competition

The cloud is nothing short of a battleground for Ampere. Ampere’s key issue is that the big hyperscalers are building their own chips. Companies like Microsoft, with the Azure Cobalt 100, can use Arm Neoverse CSS to build their own designs. AWS is going upmarket with Graviton.

Four years ago, Ampere was winning at hyperscalers with the Altra/Altra Max. Where it likely needs to pivot now is toward offering an on-prem migration path for repatriation. To put this in perspective, if you have an Arm-based instance type running on Microsoft Azure, AWS, GCP, or even Oracle Cloud, and you want to repatriate that workload on-prem or into a colocation facility, then you need an Arm server. NVIDIA is focused on selling GPUs for AI, and its CPUs serve that goal. That makes the on-prem path for repatriating a cloud workload somewhat strange. Most vendors have an NVIDIA MGX platform for Grace, but that is a higher-performance design. If you want to repatriate something like a web server, then the real option is Ampere. Companies like Gigabyte and Supermicro have Ampere Altra and AmpereOne platforms. HPE has Altra (Max) in the HPE ProLiant RL300 Gen11. If you are a Dell shop, or a Lenovo shop (in the US), then it is harder to get a non-NVIDIA Arm server.

AmpereOne effectively has that market in front of it. Winning deals for a few CPUs up to a few thousand CPUs is much harder than winning deals denominated in increments of 25,000 CPUs. The question now is whether Ampere will start focusing on giving folks an off-ramp from cloud Arm instances.

Final Words

Is AmpereOne the fastest CPU you can buy in Q4 2024? No, and it is not trying to be. Instead, it is trying to be an Arm-based design that offers 192 cores at just over 2W/core. One of the big challenges is that we always look at the raw performance of entire chips. Realistically, these chips get deployed as cloud instances, mostly of 8 vCPUs or fewer. Those instances likely run at low CPU utilization, where a bigger, faster core would just be a waste.
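The “just over 2W/core” figure is simply TDP divided by core count. A minimal sketch, using the 400W/192-core AmpereOne and the 330W/144-core Xeon 6780E figures from earlier in this piece, assuming TDP as the power number (a spec-sheet ratio, not measured per-core power):

```python
# TDP divided by core count: a spec-sheet ratio, not measured power per core.

def watts_per_core(tdp_w: float, cores: int) -> float:
    """Average TDP budget per core."""
    return tdp_w / cores

print(f"AmpereOne A192-32A: {watts_per_core(400, 192):.2f} W/core")  # 400 W, 192 cores
print(f"Intel Xeon 6780E:   {watts_per_core(330, 144):.2f} W/core")  # 330 W, 144 cores
```

That works out to about 2.08 W/core for AmpereOne and about 2.29 W/core for the Xeon 6780E on a TDP basis.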

To get the 1P Ampere Altra Max results for this piece, we used an ASRock Rack 1U server based on the ASRock Rack ALTRAD8UD-1L2T that we bought for a storage project. It is an older, less expensive generation. Overall, it is easy to use Arm CPUs these days, but switching is not free. There is a cost; it is just a lot less than it used to be. NVIDIA and the cloud providers pushing Arm CPUs will only help drive that switching cost down over time.

All told, in the context of this being a 2022-2023 CPU that we are reviewing in 2024, AmpereOne is good. Perhaps the bigger takeaway, however, is that if you want a cloud-native Arm design but do not work at a hyperscaler that can build its own chips, AmpereOne is the only game in town. Sometimes, being in a class of one is a great place to be.

How significant do you think the 8 vs. 12 channel memory controller will be for the target audience?

Lots of vCPUs for cloud-scale virtualization is all well and good, as long as you don’t end up running out of RAM before you run out of vCPUs. Otherwise, you either offer really awkward ‘salvage’ configs (VMs that give people more vCPUs than they actually need because you still have cores to allocate after the RAM is gone, or compute-only VMs with barely any RAM and whatever extra cores you have on hand), or you pay a premium for the densest DIMMs going.

Is actual customer demand in terms of VM configuration/best per-GB DIMM pricing reasonably well aligned for 192 core/8 channel; or is this a case where potentially a lot of otherwise interested customers are going to go with Intel or AMD for many-cores parts just because their memory controllers are bigger?
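The RAM-versus-vCPU concern can be framed as a quick sizing calculation. This is a hypothetical sketch: the 64 GB DIMM size, one DIMM per channel, the 4 GB/vCPU target, and the 12-channel 192-core comparison point are all illustrative assumptions, not validated AmpereOne configurations.

```python
# Hypothetical memory-per-vCPU sizing. DIMM sizes, 1 DIMM per channel, and the
# 4 GB/vCPU target are illustrative assumptions, not vendor-validated configs.

def gb_per_vcpu(channels: int, dimm_gb: int, vcpus: int, dimms_per_channel: int = 1) -> float:
    """Memory available per vCPU if every core is sold as one vCPU."""
    return channels * dimms_per_channel * dimm_gb / vcpus

def dimm_gb_needed(vcpus: int, target_gb_per_vcpu: float, channels: int,
                   dimms_per_channel: int = 1) -> float:
    """Smallest DIMM size that reaches a target GB/vCPU ratio."""
    return vcpus * target_gb_per_vcpu / (channels * dimms_per_channel)

VCPUS = 192  # one vCPU per core on a 192-core part

for channels in (8, 12):  # 12 channels here is a hypothetical comparison point
    print(f"{channels} channels x 64 GB: {gb_per_vcpu(channels, 64, VCPUS):.2f} GB/vCPU")
    print(f"  DIMM size needed for 4 GB/vCPU: {dimm_gb_needed(VCPUS, 4, channels):.0f} GB")
```

Under these assumptions, hitting 4 GB per vCPU takes 96 GB DIMMs on 8 channels but only 64 GB DIMMs on 12 channels, which is the “densest DIMMs” premium described above.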

You’ve gotta love the STH review. It’s very fair and balanced taking into account real market forces. I’m so sick of consumer sites just saying moar cores fast brrr brrr. Thank you STH team for knowing it isn’t just about core counts.

I’m curious what real pricing is on AMD and Intel now. I don’t think their published list prices are useful.

We might finally pick up an Arm server with one of these. You’re right they’re much cheaper than a $50K GH200 to get into.

“We are using the official results here so that means optimized compilers. Ampere would suggest using all gcc and shows its numbers for de-rating AMD and Intel to gcc figures for this benchmark. That discussion is like debating religion.”

The question to ask is: “Do any real server chip customers actually use AOCC or ICC compilers for production software?”

Also, using CUDA in the argument is suspect, IMO, given its GPU-centric, not CPU-centric, optimizations.

It’s a great review.

JayBEE, I don’t see it that way. It’s like a race with rules. They’re showing the results based on the race and the rules of the race.

I’d argue it hurts Ampere and other ARM CPUs that they’re constantly having to say, “well, we’re going to use unofficial numbers and handicap our competition.” It’s like listening to sniveling excuses for why they can’t compete under the race rules. I’d rather just see them say, this is what we’ve got. This whole message of “we can’t use ICC or AOCC” just makes customers think: if they can’t use ICC or AOCC, what else can’t these chips do? I can’t just spin up my x86 VMs as-is on ARM, and I can forget any hope of live migration. Arm’s marketing message falls flat because it keeps reinforcing what the chips can’t do. Cloud providers that own their software stacks don’t care. It’s also why the HPE RL300 G11 failed so hard that HPE doesn’t have an AmpereOne version.

That’s something I think STH could have harped on more. Moving x86 instances between AMD and Intel, even when it isn’t a live migration, is just turn off, then turn back on. For ARM, you’re rebuilding. Even if the software works great, there are extra steps.

I can tell you that my company does not use specialized compilers, namely AOCC or ICC, when evaluating AMD, Intel, and Ampere products. We want comparisons that are as close to “apples to apples” as possible when evaluating performance across different server offerings. Results generated by special compilers, which my company will never use, are of no interest for our performance evaluations.

And let’s not forget that some of the specialty compiler optimizations were deemed invalid by SPEC.

https://www.servethehome.com/impact-of-intel-compiler-optimizations-on-spec-cpu2017-example-hpe-dell/

I don’t think most enterprises run their own apples-to-apples tests on this kind of thing. How would they know they’ve tuned properly for each platform? The server vendor tells them? In this case, that isn’t Dell, Lenovo, or HPE. That’s why most orgs just put SPEC CPU2017 in their RFPs.

SJones, that was three generations ago and stopped being relevant with Emerald Rapids, right? It’s only Intel, not AMD too, right?

xander1977

SPEC ruled that an AOCC and ICC optimization for 505.mcf_r was a violation, but so many scores had already been published with it that they withdrew it. I can’t find the link at the moment. This was an optimization that GCC did not implement. Because 505.mcf_r is one of the lower-scoring tests, the huge improvement from this optimization had a large impact on the overall SIR score, since the overall score is the geometric mean of the 10 individual tests.
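To see how much a single sub-test can move a geometric-mean score, here is a minimal sketch. The benchmark names are the ten SPECrate2017 integer tests, but every ratio below is a hypothetical placeholder, and the 2x boost to 505.mcf_r is an illustrative assumption rather than the actual effect of any compiler optimization.

```python
from statistics import geometric_mean

# The ten SPECrate2017 integer benchmarks; all ratios are hypothetical placeholders.
INTRATE_BENCHMARKS = [
    "500.perlbench_r", "502.gcc_r", "505.mcf_r", "520.omnetpp_r",
    "523.xalancbmk_r", "525.x264_r", "531.deepsjeng_r", "541.leela_r",
    "548.exchange2_r", "557.xz_r",
]

baseline = {name: 10.0 for name in INTRATE_BENCHMARKS}  # hypothetical ratios
boosted = dict(baseline)
boosted["505.mcf_r"] *= 2  # hypothetical: one optimization doubles one sub-score

base_score = geometric_mean(list(baseline.values()))
boosted_score = geometric_mean(list(boosted.values()))
uplift = boosted_score / base_score - 1

print(f"Overall uplift from doubling one of ten sub-scores: {uplift:.1%}")
```

Because the geometric mean is multiplicative, doubling any one of the ten sub-scores multiplies the overall score by 2^(1/10), roughly a 7% uplift, regardless of that sub-score's starting value.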

While “apples to apples” is difficult to achieve, a critical part of that work for us is in fact using common GCC versions across architectures. This also helps us identify areas of potential code/compiler improvements to pursue.

JayBEE asked “Do any real server chip customers actually use AOCC or ICC compilers for production software?”

From my perspective the kind of customers who run the kind of software focused on by SPEC CPU are likely to employ experts whose main job is helping others tune the compiler and application to the hardware. If you are not that customer, then making a hardware decision based on SPEC is similar to choosing the family car based on the success of a racing team sponsored by the same manufacturer.

On the other hand, Intel has been donating much of its proprietary compiler technology to GCC and LLVM. That lets Intel focus on x86 performance optimisations while others handle language standards and conformance. Something similar needs to happen for ARM, and I suspect it does.