Ampere Altra Wiwynn Mt. Jade Server Performance

For this exercise, we had to deviate quite a bit from our norm. We are specifically using CentOS 8 with the gcc 10 toolchain instead of our standard Ubuntu 20.04 LTS base. Given that CentOS 8 is effectively discontinued, Oracle Cloud Linux would perhaps be the more relevant choice. With Oracle as a major Ampere customer, we would expect Oracle Cloud Linux support to be great on these servers, but we did not get a chance to test it.

Ubuntu gave us a bit of a challenge because the server would not hit turbo frequencies with the standard installation. Fixing that simply required running:

echo 1 > /sys/devices/system/cpu/cpufreq/boost

That enabled turbo for us. Still, we are not using our normal Linux-Bench2 tests here for two reasons. First, we are on a different platform (soon-to-be-discontinued CentOS vs. Ubuntu). Second, we had a number of containers/VMs that needed to be updated for Arm support. Many of our newer workloads, like our KVM-based virtualization testing, require VMs to be rebuilt for Arm. That process takes a long time because we have to go through and validate that we have apples-to-apples components in the VMs.
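For anyone who wants to script that check rather than type the command by hand, here is a minimal sketch. It assumes the same sysfs path as the command above, which only exists when the active cpufreq driver exposes a global boost switch. The function names `boost_enabled` and `enable_boost` are our own illustrative helpers, not part of any tool, and writing to the real file requires root.

```python
from pathlib import Path

# Path taken from the command above. It is only present when the active
# cpufreq driver exposes a global boost switch.
BOOST_PATH = Path("/sys/devices/system/cpu/cpufreq/boost")


def boost_enabled(path: Path = BOOST_PATH) -> bool:
    """Return True if the boost file currently contains 1."""
    return path.read_text().strip() == "1"


def enable_boost(path: Path = BOOST_PATH) -> bool:
    """Write 1 to the boost file if it is not already set.

    Returns True if a write happened, False if boost was already on.
    Writing to the real sysfs file needs root privileges.
    """
    if boost_enabled(path):
        return False
    path.write_text("1\n")
    return True
```

Calling `enable_boost()` as root has the same effect as the `echo` above, with the added benefit that it reports whether anything actually changed. Like the `echo`, the setting does not persist across reboots.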

As a result, we wanted to give some sense of performance here, but we will follow up in January 2021 with a more extensive performance piece. Still, we wanted to focus on some server-level metrics, not just CPU-focused ones. Modern workloads depend a lot on network and storage performance, so we wanted basic server I/O testing to be done first. Having a great CPU paired with poor NVMe or networking performance is far from ideal, so that was a focus of our Wiwynn server testing in this piece.

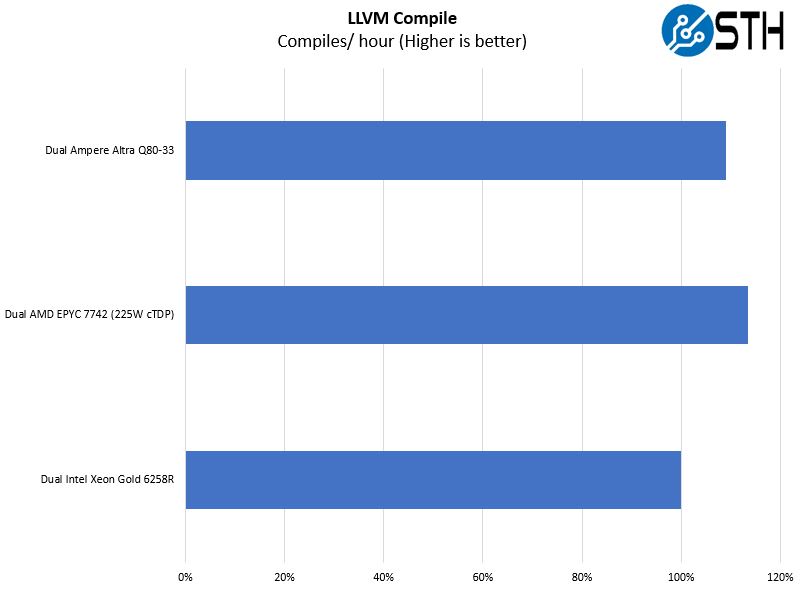

LLVM Compile

With our x86 reviews, we have a very consistent Linux kernel compile benchmark. With Arm, that is not a good comparison point. Instead, we are using LLVM project compilation times to see how the Ampere Altra solution fares.

There are a few big notes here. The Ampere Altra does very well and is not far from the dual AMD EPYC 7742 setup. Both put distance between themselves and the Intel Xeon Gold 6258R solution.

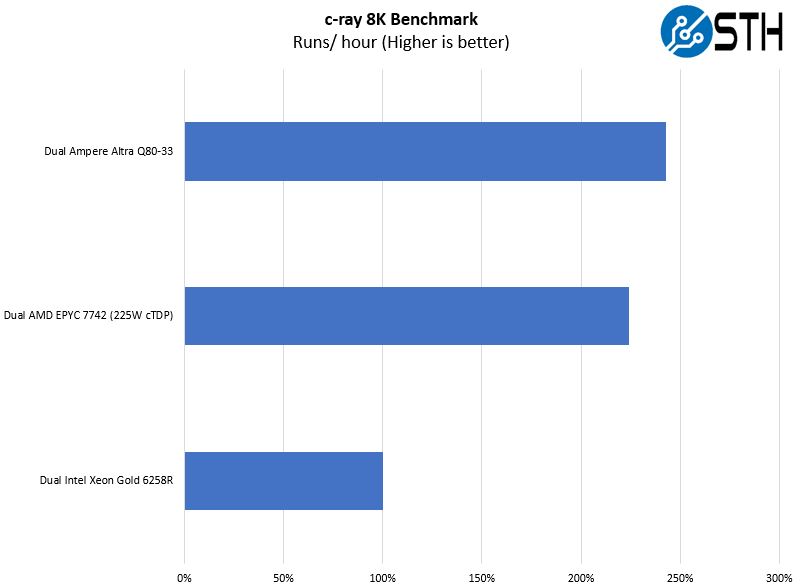

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular for showing differences between processors under multi-threaded workloads. We are going to use our 8K results, which work well at this end of the performance spectrum.

Here, the cores make a big difference. For those wondering, we tried the AMD EPYC 7742 setup at 240W here, and that closes half of the gap between it and the Ampere Altra Q80-33. One may see a 250W TDP for the Q80-33 and assume that it is using more power than a 225W EPYC 7742, but that is only sometimes the case. Likewise, one may assume that the Intel Xeon Gold 6258R is a lower-power CPU at 205W. That is not always the case either. Often, the CPU monitoring shows the Ampere using less. At the same time, the wall power numbers are skewed because the pre-production firmware on our Mt. Jade platform does not optimize the fans well.

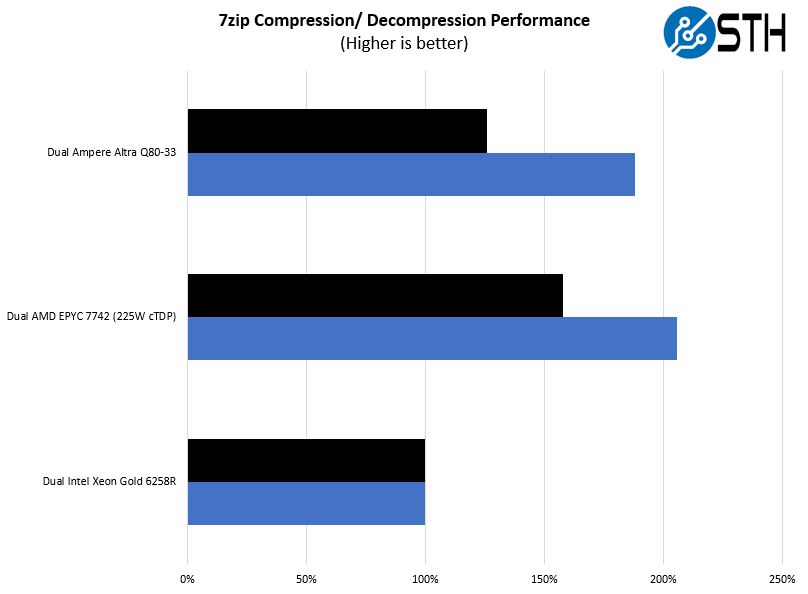

7-zip Compression Performance

7-zip is a widely used compression/decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

Here we see the AMD EPYC 7742 and the Altra Q80-33 trade places in terms of performance. For those wondering, we are using the dual Intel Xeon Gold 6258R as our base level of performance since it is usually far enough behind to show differentiation between the AMD and Ampere CPUs. That speaks to just how far behind Intel is as it effectively stretches its 2017 14nm CPU design into 2021. At some point, Intel is just limited on core count.
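To make the "base level of performance" idea concrete, here is a minimal sketch of how results can be normalized against a baseline system. The function name `relative_to_baseline`, the system labels, and the numbers in the example are all placeholders we made up for illustration. They are not measurements from this review.

```python
def relative_to_baseline(scores, baseline, higher_is_better=True):
    """Express each system's result as a multiple of the baseline system.

    scores: dict mapping a system label to its raw benchmark result.
    baseline: the label in `scores` to treat as 1.0.
    higher_is_better: True for throughput-style scores, False for
        time-to-complete results where a smaller number is faster.
    """
    base = scores[baseline]
    if higher_is_better:
        return {name: value / base for name, value in scores.items()}
    return {name: base / value for name, value in scores.items()}


# Placeholder numbers only, NOT results from this review.
example = {"baseline": 100.0, "system_a": 150.0, "system_b": 160.0}
for name, ratio in relative_to_baseline(example, "baseline").items():
    print(f"{name}: {ratio:.2f}x")
```

Picking the slowest system as the baseline keeps every other ratio above 1.0, which makes the gap between the faster systems easier to read on a chart.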

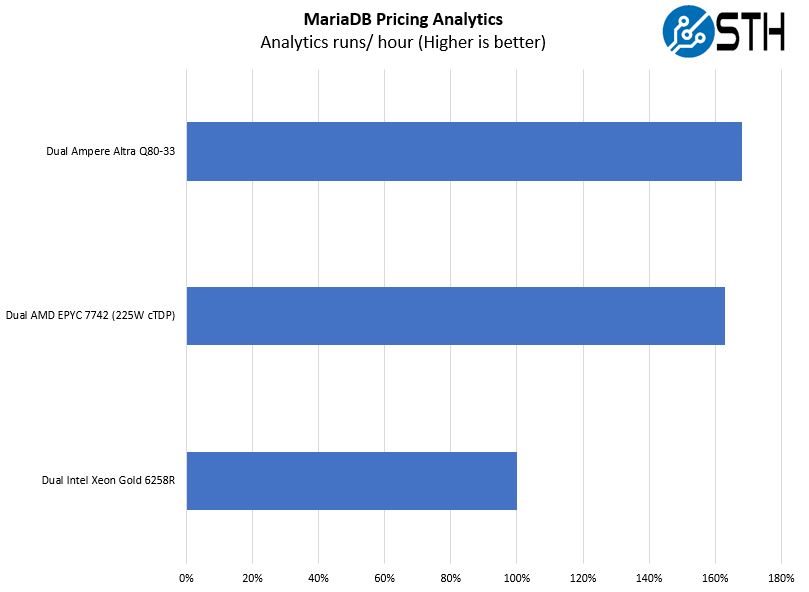

MariaDB Pricing Analytics

This one is personally very interesting to me. The origin of this test is that we have a workload that runs deal management pricing analytics on a set of data that has been anonymized from a major data center OEM. The application is effectively looking for pricing trends across product lines, regions, and channels to determine good deal/bad deal guidance based on market trends to inform real-time BOM configurations. If this seems very specific, the big difference between this and something deployed at a major vendor is the data we are using. This is the kind of application that has moved to AI inference methodologies, but it is a great real-world example of something a business may run in the cloud.

Here the Ampere Altra is able to slightly edge out the EPYC 7742. It takes a 240W TDP on the EPYC SKU to bring them closer to the same level of performance.

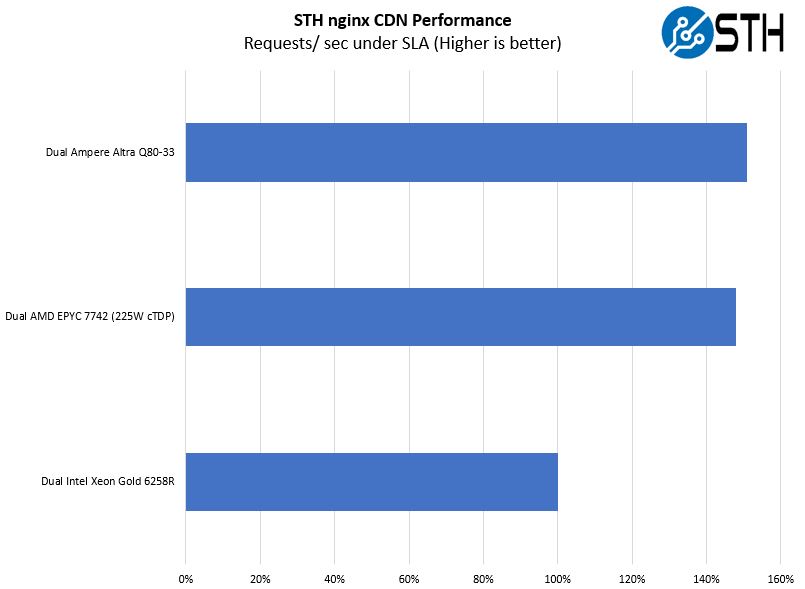

STH nginx CDN Performance

On the nginx CDN test, we are using an old snapshot and access patterns from the STH website, with DRAM caching disabled, to show what the performance looks like fetching data from disks. This requires low latency nginx operation but an additional step of low-latency I/O access which makes it interesting at a server-level. Here is a quick look at the distribution:

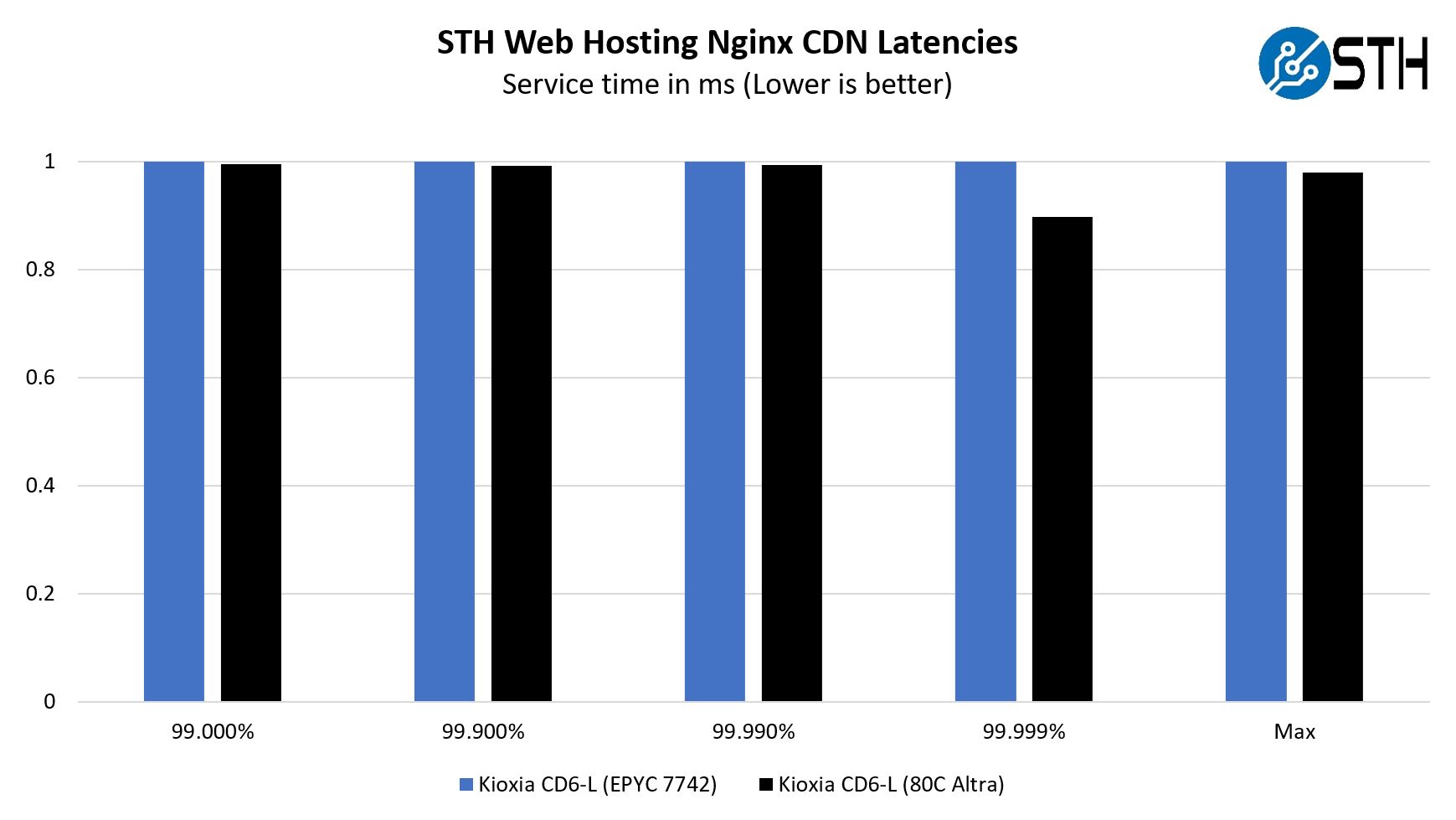

As you can see, the Ampere Altra slightly outperforms the dual AMD EPYC 7742 setup here. Here is a view of the latency distribution:

As you can see, the Ampere Altra Q80-33 performs very well in this test. It seems to be a combination of monolithic die vs. chiplet I/O performance plus the fact that nginx is extremely well optimized for Arm at this point, as it has been a leading application for optimization.

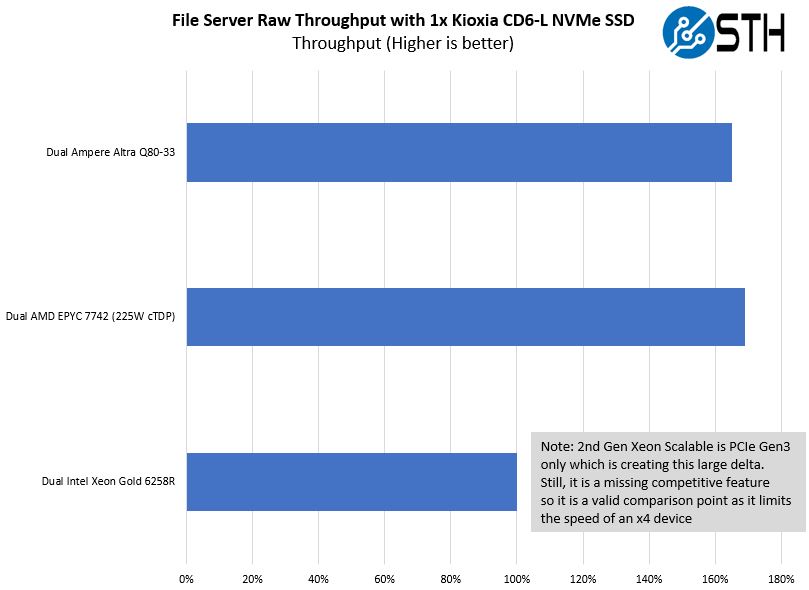

File Server Performance

Another common use case where one may want a PCIe Gen4 SSD is file server performance:

We focused on the single Kioxia CD6-L drive here. Since Intel still does not have a PCIe Gen4 server CPU in the market, it simply gets hard-limited performance-wise with PCIe Gen3 speeds. The Kioxia CD6-L we are using is not the fastest NVMe SSD, so there may be some variation here.

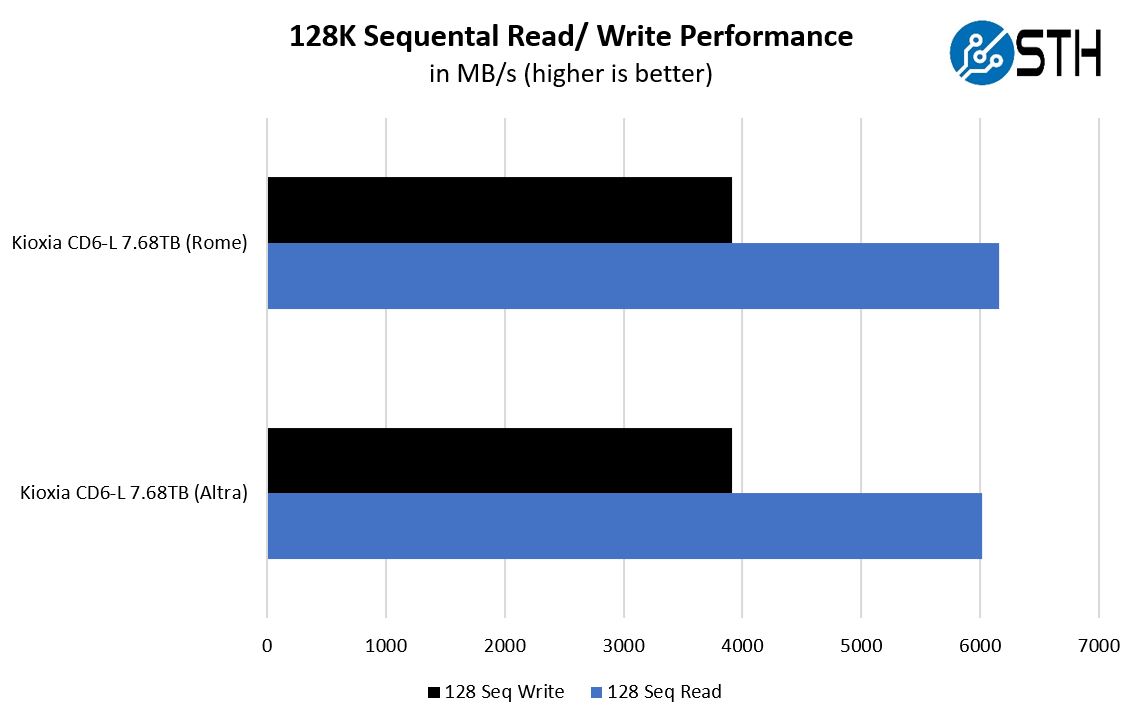

NVMe Storage “4-Corners” Testing

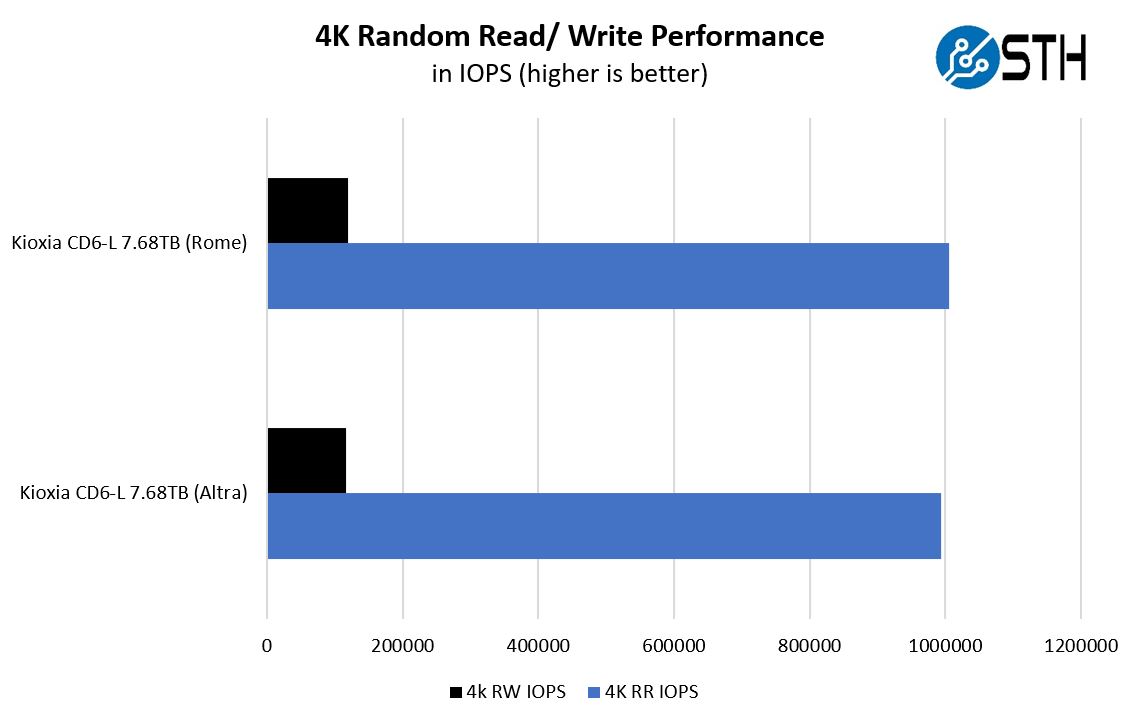

Since we already published these figures over a week ago in the CD6-L review, we just wanted to show storage performance using traditional sequential then random 4K IOPS testing. You will notice the Xeon Gold 6258R is not here, simply because it is limited to PCIe Gen3.

Sequential speeds are very close. AMD has a slight advantage here. Looking at the random 4K results we see something similar:

For many, this may not seem impactful, but in older generations of Arm server CPUs, pre-ThunderX2, it was common that these tests would yield a large delta. Now, this is in the range of what we would expect just by moving to a different architecture.

Networking Performance

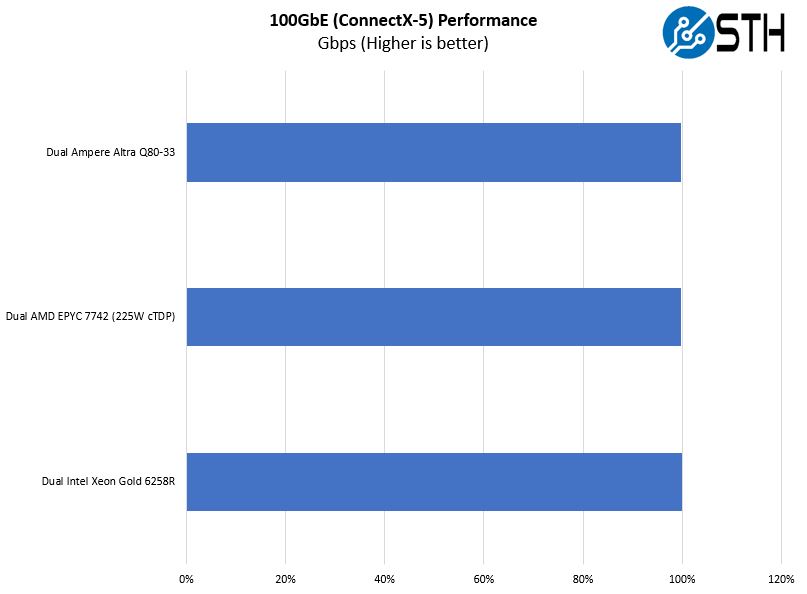

We tested the Wiwynn server’s networking speed using a ConnectX-5 100GbE adapter that we already had in our AMD and Intel testbeds since we wanted to see networking performance:

If we zoom in a lot, we could see small variations. Instead of declaring a winner here, it is perhaps more appropriate to say that the Wiwynn Mt. Jade platform can deliver equivalent 100Gbps network performance to what we see on the x86 side. Software will get ported and optimized, but having the basics like good network and storage performance is essential as Arm servers move from development platforms to mainstream deployment.

Next, we are going to get to power consumption, the STH Server Spider, and our closing thoughts.

Wiwynn Mt. Jade Power Consumption

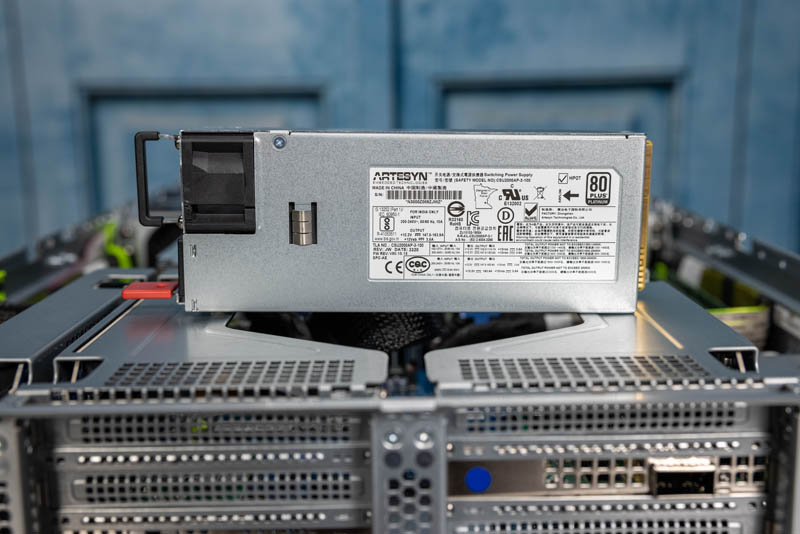

Ampere asked us not to publish power consumption figures for the Mt. Jade platform. The pre-production firmware in Wiwynn’s server was not managing fans as we would expect from a normal server. In the industry, this is very typical for early firmware, as fan curves and responsiveness tend to be a later item.

We will make a few general observations. First, do not let the 2kW 80Plus Platinum PSUs deceive you. The server uses nothing near that mark. It is actually very close to what we would see in a modern AMD EPYC server under load. On the Intel Xeon side, it is similar, save for what we see with Intel on heavy AVX-512 workloads.

What is happening, and we have more on this in our Ampere Altra full review, is that the Q80-33 is staying at higher clock speeds longer than its x86 counterparts and power is varying from there. It is somewhat strange to see Intel and AMD frequencies vary a lot more under load while Ampere stays more consistent on frequency with its power varying more. That is a key value proposition of the Ampere platform, but our standard data center PDU measured power consumption is lost in the early firmware fan curves. Ultimately, we are more interested in system power consumption since that is what drives actual TCO. It is why we invest so heavily in having data center PDUs that log power consumption per-outlet so we can effectively monitor power like a colocation/data center provider.
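As a rough illustration of why per-outlet logging matters for TCO, here is a minimal sketch that turns a series of timestamped power readings into energy used and average draw. It assumes the log is simply a list of `(seconds, watts)` pairs sorted by time. The function names are hypothetical, the readings are placeholders rather than Mt. Jade measurements, and this does not represent any particular PDU's export format.

```python
def energy_joules(samples):
    """Integrate power over time with the trapezoidal rule.

    samples: list of (seconds, watts) tuples sorted by time.
    Returns energy in joules (watt-seconds).
    """
    total = 0.0
    for (t0, w0), (t1, w1) in zip(samples, samples[1:]):
        total += (w0 + w1) / 2.0 * (t1 - t0)
    return total


def energy_wh(samples):
    """Energy in watt-hours over the logged window."""
    return energy_joules(samples) / 3600.0


def average_watts(samples):
    """Time-weighted average power over the logged window."""
    duration = samples[-1][0] - samples[0][0]
    if duration <= 0:
        raise ValueError("need at least two samples spanning a positive duration")
    return energy_joules(samples) / duration


# Placeholder readings only, NOT measurements from this server.
log = [(0, 300.0), (60, 320.0), (120, 310.0)]
print(f"{energy_wh(log):.2f} Wh, {average_watts(log):.1f} W average")
```

Integrating over time rather than averaging the raw readings keeps the result correct even when the logging interval is uneven.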

I love the tech reviews in server. There’s like 3 covering this:

AT – crazy weeds

Phoronix – useful application and tracks linux like a hawk

STH – real market context and analysis and higher-level applications

It’s funny. I read AT and loved pingpong there. Phoronix has many numbers. STH has why someone would buy a server with Altra. Do you all discuss who’s doing what?

It’s so much better than consumer chip reviews

I’m not using Arm yet, but this looks like it’ll be a generation before the generation we use

I read the AT article too and it’s like “THIS IS BEST CPU EVA!” yet where was the ISV support discussion.

It’s really good STH is covering this too. There’s value everywhere, but I like Patrick’s market pundit view. I don’t always agree with his messages, but I know he’s done research and isn’t just spouting marketing junk.

The day cannot come soon enough when cabled PCIe replaces PCIe slots! Neat machine, thanks Patrick!

STH: When can we expect to see actual power numbers for the whole system?

Thanks Patrick really cool video, very exciting news for the future of computing especially providing options out of the current establishment (cough.. Intel)

Now I don’t know how the adoption of this will play but IME (of 40 years) it could take a while to be validated by:

1. Security of code embedded in the system (server components and CPU), all sort of backdoors and vulnerabilities included (not that the common enterprise players don’t include their own)

2. Integration within the used OS (drivers etc), Ubuntu and other Linux / BSD flavors will be fine, Windows, VMware etc might take a while to work if ever.

But overall very exciting for cloud providers. Thanks

I see AWS as a possible customer aside of the Oracle Cloud but that’s about it

Hello STH!

Could you guys run some monero benchmarks on these arm systems for us would be a real nice new years gift :)

This is the best server that i have ever seen

Is this the same Altra that Oracle Cloud (OCI) is offering? Confirmation would be appreciated!

Yes it is