Ampere Altra Wiwynn Mt. Jade Server Internal Hardware Overview

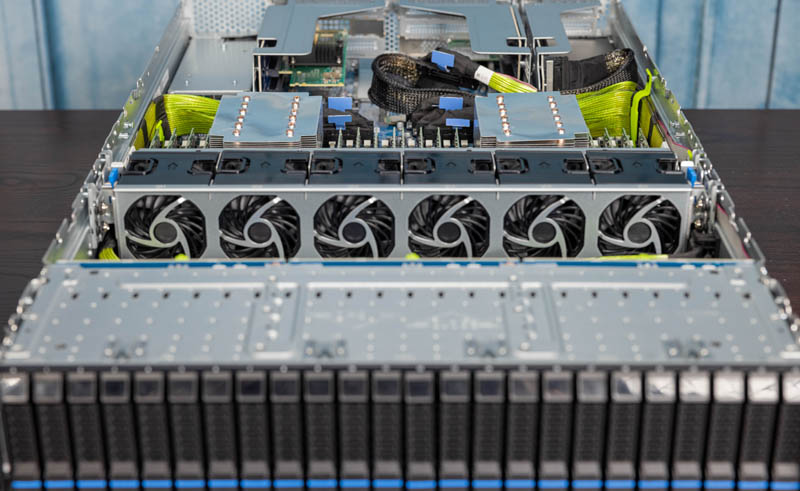

Starting our internal overview, we are going to work from the front and move to the rear. Looking behind the front storage partition, we can see a standard 2U 6-fan layout. There is normally an airflow guide over the heatsinks, but we removed it to show more views of the system.

As we would expect, the fans are hot-swappable. Wiwynn has a nice fan design with small touches such as the ability to remove the entire fan partition. Some in the market see OEM/ODM systems that service hyper-scalers as being only cost-optimized and therefore inferior to traditional server OEM systems. This is a great example showing how that is not the case. There are white box server vendors with less well-made fan housings, or with fan partitions that are not removable the way they are on systems from the major name-brand server vendors. Here, Wiwynn is catering to serviceability and therefore we have a very nice design. Even if there is a slight cost increase for hardware, the trade-off is being made to optimize for serviceability.

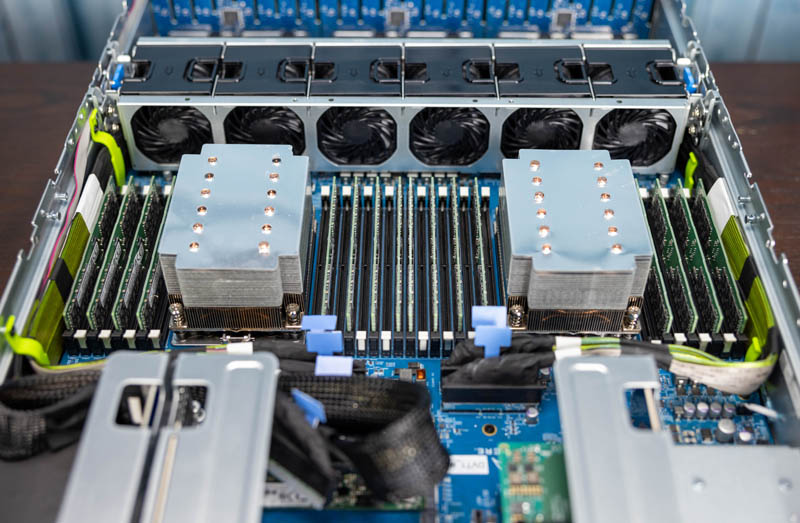

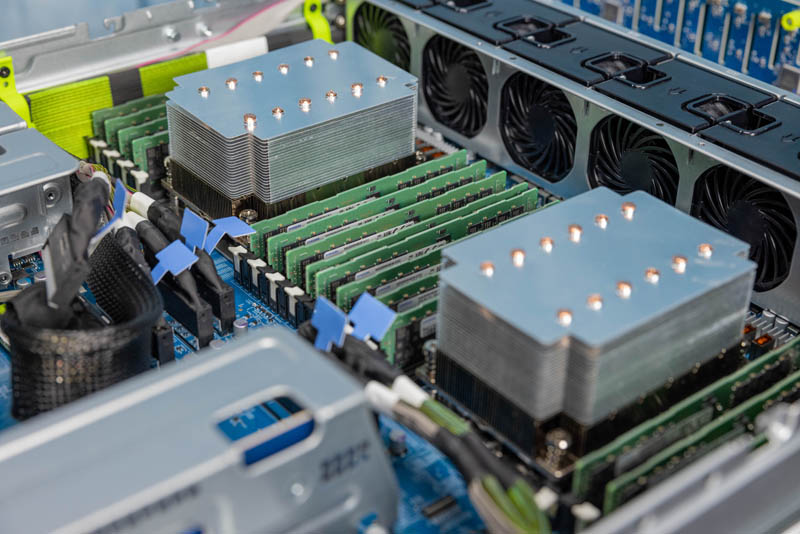

Looking at what is perhaps the most interesting section, we have the dual Ampere Altra CPUs and very nice custom heatsinks. Although we are not picturing the airflow guide here, one can see its impact. The slight bend in the top sections of the heatsink is due to the server’s design. Specifically, Wiwynn is manufacturing with precision tolerances beyond what we see from traditional vendors such as Dell, HPE, and Lenovo. As a result, these heatsink fins sit directly against the airflow guide. These tight tolerances, combined with our not being accustomed to this level of precision manufacturing in this area, meant that we actually bent the top fins slightly during removal. It may seem like a trivial detail, but for someone who reviews servers, it was immediately noticeable and impactful.

We are going to get into the impact of the dual 80-core Ampere Altra Q80-33 CPUs later but here is a great shot to see just how much larger the package is than the AMD EPYC 7002 Rome (right) and a 2nd generation Intel Xeon Scalable CPU (left).

In the video accompanying this review, we have a quick segment showing the installation of the CPU in this platform. One puts the CPU directly into the socket. By contrast, both AMD and Intel moved to placing the CPU into a carrier that guides socket insertion as chips grew larger. The direct approach is a slightly less refined method for getting a large CPU into a socket.

Aside from having two sockets connected via CCIX and having 80 cores each, there is another big feature of the Altra platform. Each CPU gets 8 channels of DDR4-3200 memory with 2DPC support. Across both sockets, that means the Wiwynn Mt. Jade platform has a total of 32 DDR4 DIMM sockets. This provides both bandwidth and capacity, allowing the solution to align well with AMD EPYC 7002/7003 and next-gen Intel Xeon Ice Lake SKUs.
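The DIMM count and the bandwidth implied by DDR4-3200 can be checked with a little arithmetic. This is a minimal sketch, assuming 8 channels per socket across two sockets and a 64-bit (8-byte) data path per DDR4 channel. The function names are ours for illustration, and the bandwidth figure is a theoretical peak, not a measured result.

```python
# Back-of-the-envelope memory math for a dual-socket platform.
# Assumptions (labeled, not measured): 8 channels per socket,
# 2 DIMMs per channel (2DPC), DDR4-3200, 8-byte data bus per channel.

SOCKETS = 2
CHANNELS_PER_SOCKET = 8
DIMMS_PER_CHANNEL = 2
TRANSFER_RATE_MT_S = 3200   # DDR4-3200 = 3200 mega-transfers per second
BUS_WIDTH_BYTES = 8         # 64-bit data path per channel, ECC bits excluded


def dimm_slots(sockets: int, channels: int, dimms_per_channel: int) -> int:
    """Total DIMM sockets on the motherboard."""
    return sockets * channels * dimms_per_channel


def peak_bandwidth_gb_s(sockets: int, channels: int,
                        mt_s: int, bus_bytes: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes)."""
    return sockets * channels * mt_s * bus_bytes / 1000


if __name__ == "__main__":
    slots = dimm_slots(SOCKETS, CHANNELS_PER_SOCKET, DIMMS_PER_CHANNEL)
    per_socket = peak_bandwidth_gb_s(1, CHANNELS_PER_SOCKET,
                                     TRANSFER_RATE_MT_S, BUS_WIDTH_BYTES)
    system = peak_bandwidth_gb_s(SOCKETS, CHANNELS_PER_SOCKET,
                                 TRANSFER_RATE_MT_S, BUS_WIDTH_BYTES)
    print(f"DIMM slots: {slots}")
    print(f"Peak bandwidth per socket: {per_socket:.1f} GB/s")
    print(f"Peak bandwidth, both sockets: {system:.1f} GB/s")
```

Real-world throughput will land below these peaks, and populating two DIMMs per channel can lower the supported memory speed on some platforms.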

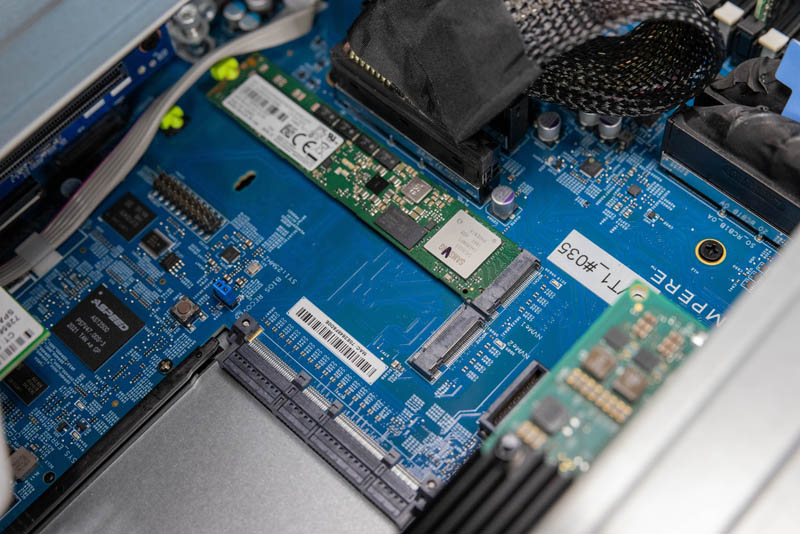

Behind the CPUs, we wanted to focus on expansion next. There are two M.2 slots for NVMe. These can take up to M.2 22110 SSDs. In this view, one can also see the ASPEED AST2500 BMC which is an industry-standard management solution.

On the bottom of the above photo, we can see an OCP NIC 3.0 slot. We are already starting to see the OCP NIC 3.0 form factor take over servers even from traditional OEMs, and in the PCIe Gen4 era, this will become the standard form factor.

There is a series of three PCIe risers that provide the PCIe connectivity for the server. These are all PCIe Gen4 and are either PCIe Gen4 x8 or x16. One can see the first riser here with an NVIDIA-Mellanox ConnectX-6 200GbE card installed in the middle PCIe Gen4 x16 slot. The Ampere Altra and AMD EPYC 7002/7003 with PCIe Gen4 support can handle 200Gbps NICs in a single PCIe Gen4 x16 slot. Until Ice Lake Xeons are launched, Intel needs something like a multi-host adapter that has an extension cable to two PCIe Gen3 x16 slots to handle a 200Gbps port.
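To see why a 200Gbps port fits in one PCIe Gen4 x16 slot but needs two Gen3 x16 slots, here is a rough sketch of the link math. It uses the published per-lane signaling rates (8 GT/s for Gen3, 16 GT/s for Gen4) and 128b/130b line encoding. It ignores packet and protocol overhead, so treat the results as upper bounds. The helper names are hypothetical.

```python
# Rough PCIe link bandwidth check for a 200Gbps NIC.
# Only line encoding is accounted for; TLP/DLLP protocol overhead is
# ignored, so real usable bandwidth is somewhat lower than shown here.

GT_PER_LANE = {3: 8.0, 4: 16.0}   # raw giga-transfers per second per lane
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b encoding for Gen3 and Gen4


def link_gbps(gen: int, lanes: int) -> float:
    """Post-encoding bandwidth of one PCIe link, per direction, in Gbps."""
    return GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY


def port_fits(port_gbps: float, gen: int, lanes: int, slots: int = 1) -> bool:
    """True if `slots` links of the given width can carry the port's line rate."""
    return link_gbps(gen, lanes) * slots >= port_gbps


if __name__ == "__main__":
    print(f"Gen4 x16: {link_gbps(4, 16):.1f} Gbps")
    print(f"Gen3 x16: {link_gbps(3, 16):.1f} Gbps")
    print("200GbE in one Gen4 x16 slot:", port_fits(200, 4, 16))
    print("200GbE in one Gen3 x16 slot:", port_fits(200, 3, 16))
    print("200GbE in two Gen3 x16 slots:", port_fits(200, 3, 16, slots=2))
```

A single Gen3 x16 link tops out at roughly 126 Gbps by this estimate, which is why a 200Gbps port on a Gen3 platform has to be split across two slots.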

On the middle riser, we have three slots with a 1GbE NIC in the bottom slot. Something that will become a challenge in the future is that using a high-speed slot for low-speed networking seems less exciting as we move to PCIe Gen4.

The final riser sits over the power supply. This riser is a low-profile riser. One can also see it has a cabled connection. One can optionally connect this riser via PCIe cable, or use the lanes for the front 4x NVMe SSDs mentioned earlier.

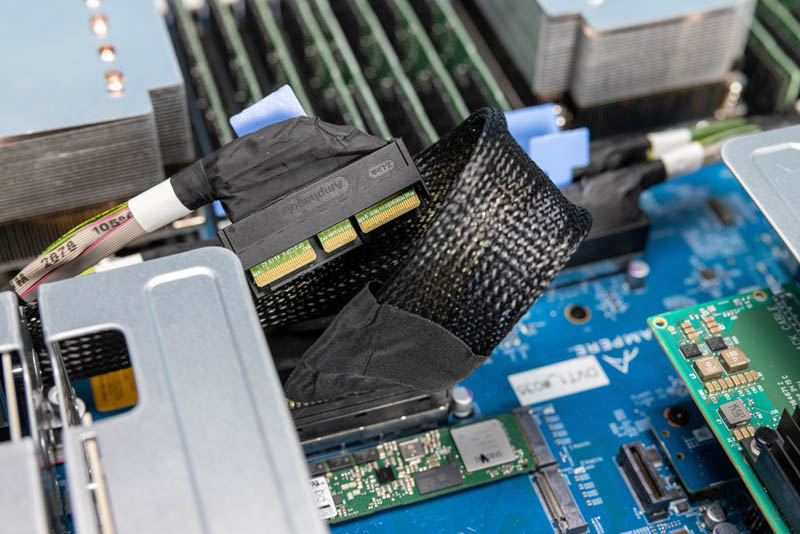

This is the PCIe Gen4 x16 cable to the front four NVMe SSD bays. For our readers, this small connector effectively can handle the speeds of a PCIe Gen4 x16 GPU. As we move forward to newer generations of servers, this flexibility allows for highly configurable servers. As an aside, one can imagine the day in a CXL future where devices could be directly cabled off of a motherboard and placed in a chassis where they make optimal thermal and airflow sense. Again, this is an incredibly forward-looking server design from Wiwynn that is being used here because of the massive PCIe Gen4 2P connectivity on the Ampere Altra.

With all of these large cables, cable management is a challenge. Wiwynn does a great job of bundling cables and routing them through the chassis, but they are large. The PCIe cables are neatly routed along the side of the chassis and held in place by straps. More than once we have wished that these straps were held in place by a thumb screw rather than a traditional screw, but that is a fairly minor point overall.

Hopefully, this hardware overview showed how Wiwynn is building a next-generation platform based on the Ampere Altra’s capability set.

Next, we are going to look at the topology, test configuration, and management before getting to performance.

I love the tech reviews in server. There’s like 3 covering this:

AT – crazy weeds

Phoronix – useful application and tracks linux like a hawk

STH – real market context and analysis and higher-level applications

It’s funny. I read AT and loved pingpong there. Phoronix has many numbers. STH has why someone would buy a server with Altra. Do you all discuss who’s doing what?

It’s so much better than consumer chip reviews

I’m not using Arm yet, but this looks like it’ll be a generation before the generation we use

I read the AT article too and it’s like “THIS IS BEST CPU EVA!” yet where was the ISV support discussion.

It’s really good STH is covering this too. There’s value everywhere, but I like Patrick’s market pundit view. I don’t always agree with his messages, but I know he’s done research and isn’t just spouting marketing junk.

The day cannot come soon enough when cabled PCIe replaces PCIe slots! Neat machine, thanks Patrick!

STH: When can we expect to see actual power numbers for the whole system?

Thanks Patrick really cool video, very exciting news for the future of computing especially providing options out of the current establishment (cough.. Intel)

Now I don’t know how the adoption of this will play but IME (of 40 years) it could take a while to be validated by:

1. Security of code embedded in the system (server components and CPU), all sort of backdoors and vulnerabilities included (not that the common enterprise players don’t include their own)

2. Integration within the used OS (drivers etc), Ubuntu and other Linux / BSD flavors will be fine, Windows, VMware etc might take a while to work if ever.

But overall very exciting for cloud providers. Thanks

I see AWS as a possible customer aside of the Oracle Cloud but that’s about it

Hello STH!

Could you guys run some monero benchmarks on these arm systems for us would be a real nice new years gift :)

This is the best server that i have ever seen

Is this the same Altra that Oracle Cloud (OCI) is offering? Confirmation would be appreciated!

Yes it is