At Hot Chips 2024, we have a talk about the AMD Versal AI Edge Series Gen 2. We first covered the Xilinx Versal AI Edge in 2021, and now we have the Gen 2 product.

Please note that we are doing these articles live during the presentations. Please excuse typos. I did two weeks of STH content just yesterday!

AMD Versal AI Edge Series Gen 2 for Vision and Autos

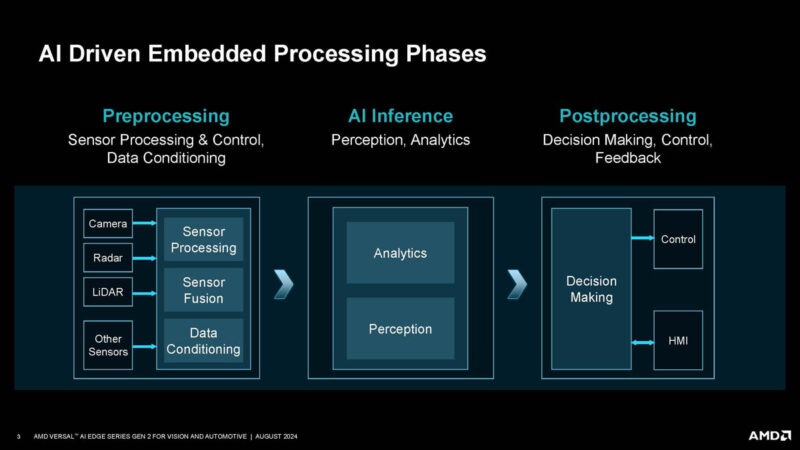

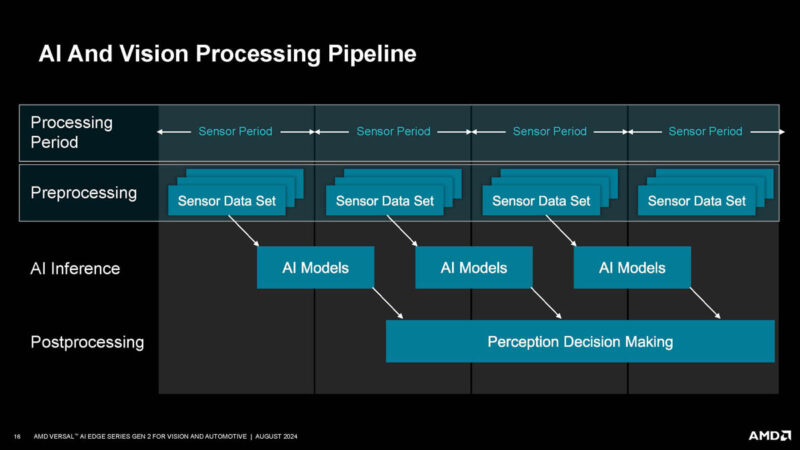

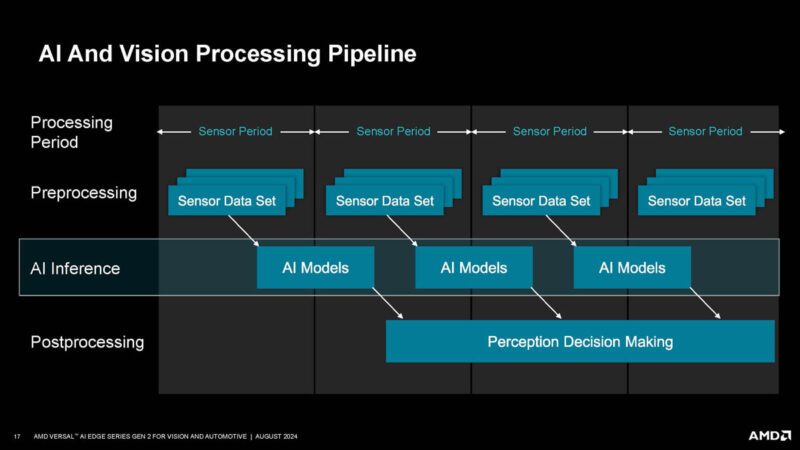

A big part of the AMD FPGA/SoC value proposition is the ability to run the full workflow, from preprocessing the data to running inference to post-processing the results, and to do so at low latency.

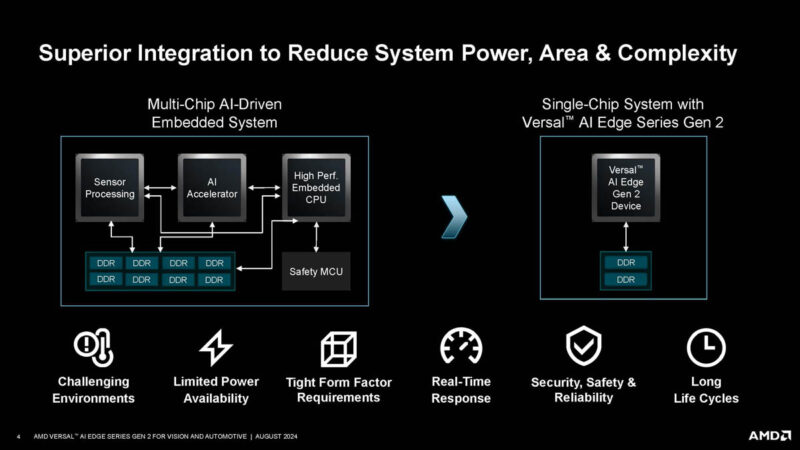

Versal AI Edge Series Gen 2 has a similar value proposition to the previous generation, which is to replace multiple chips with a single chip.

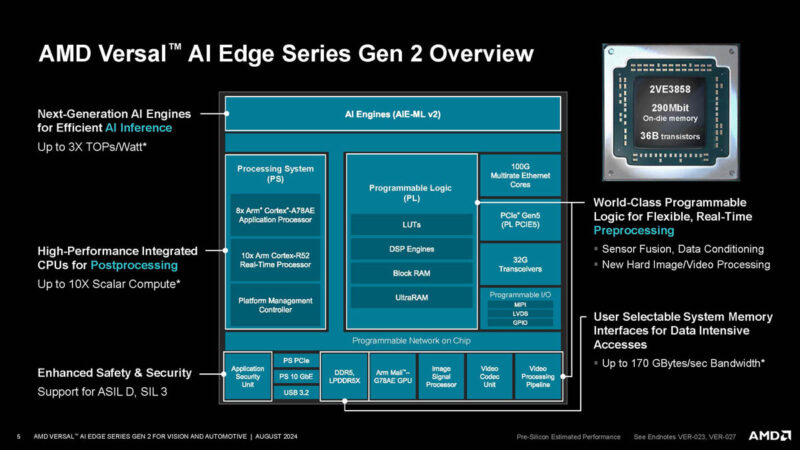

Here is the block diagram. AMD is upgrading the Xilinx-derived AI Engines to provide better performance per watt.

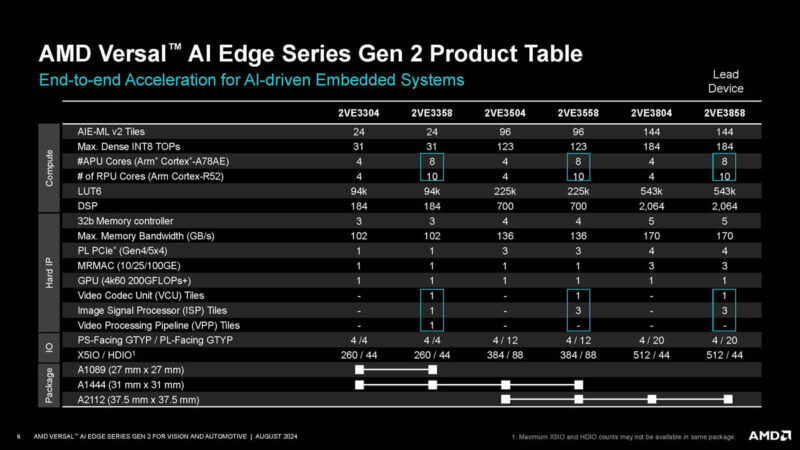

Here is the product table for the AI embedded systems. Some SKUs have more Arm Cortex-A78AE cores and more video/image processing tiles.

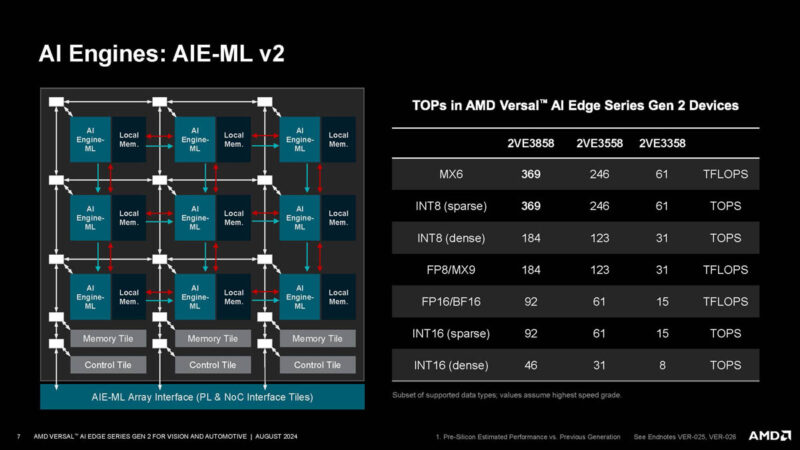

This generation brings a new version of the AI Engines, the AIE-ML v2.

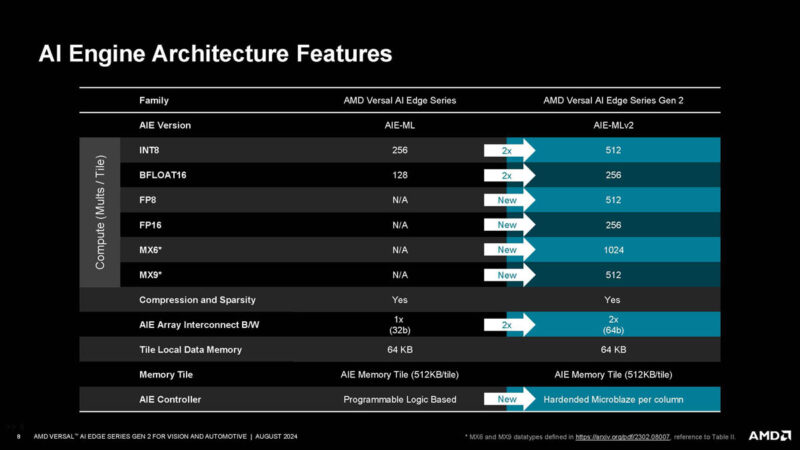

Here is the AI Engine compute upgrade. For formats like INT8 and bfloat16, AMD is doubling performance, and it is also adding support for new data formats.
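For readers less familiar with bfloat16, here is a minimal Python sketch of how a float32 value maps to bfloat16 using round-to-nearest-even. This is a generic illustration of the number format, assuming standard IEEE float32 input; it is not how AMD's AI Engines implement the conversion, and NaN handling is omitted.

```python
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern for x, rounding to nearest even.

    bfloat16 keeps float32's sign bit and 8-bit exponent but only the top
    7 mantissa bits, so conversion is a rounded 16-bit right shift.
    NaN inputs are not special-cased in this sketch.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # raw float32 bits
    lsb = (bits >> 16) & 1  # lowest bit that survives the shift
    rounded = bits + 0x7FFF + lsb  # ties round toward an even result
    return (rounded >> 16) & 0xFFFF


def bfloat16_bits_to_float(b: int) -> float:
    """Widen a bfloat16 bit pattern back to float32 by zero-filling the low mantissa bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


pi_bf16 = float32_to_bfloat16_bits(3.14159265)
print(hex(pi_bf16), bfloat16_bits_to_float(pi_bf16))
```

Because bfloat16 keeps the full float32 exponent, it covers the same dynamic range as float32 with only 8 bits of significand precision, which is a large part of why it is popular for AI workloads.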

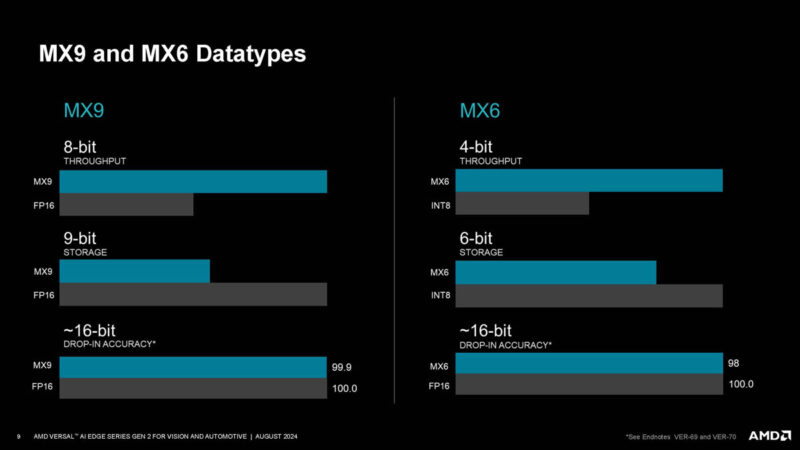

AMD also supports the MX9 and MX6 data types.
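MX9 and MX6 belong to the family of block-scaled formats, where a group of values shares exponent bits instead of each value carrying its own. The Python sketch below shows the basic idea with a simplified, single-level block floating point scheme. The `block_size` and `mantissa_bits` parameters are illustrative assumptions; the actual MX9/MX6 formats also add a second-level shared micro-exponent, which this sketch deliberately omits.

```python
import math
from typing import List, Sequence


def block_quantize(values: Sequence[float], block_size: int = 16,
                   mantissa_bits: int = 7) -> List[float]:
    """Quantize values with one shared power-of-two scale per block.

    Simplified single-level block floating point: each block of
    `block_size` values shares one exponent, and each value keeps a signed
    integer mantissa with `mantissa_bits` magnitude bits. Real MX9/MX6
    formats add a second-level micro-exponent that is not modeled here.
    """
    max_mag = (1 << mantissa_bits) - 1  # largest representable mantissa magnitude
    out: List[float] = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        peak = max(abs(v) for v in block)
        if peak == 0:
            out.extend(0.0 for _ in block)  # all-zero block needs no scale
            continue
        # Shared exponent: smallest power of two that keeps the peak in range.
        scale = 2.0 ** math.ceil(math.log2(peak / max_mag))
        for v in block:
            m = max(-max_mag, min(max_mag, round(v / scale)))  # clamp to mantissa range
            out.append(m * scale)
    return out


print(block_quantize([1.0, 0.3], block_size=2, mantissa_bits=2))
```

With only 2 mantissa bits, the 0.3 in the example above lands on 0.5 because it has to share a scale with the larger 1.0. That precision-versus-storage trade-off is exactly what block-scaled formats tune by amortizing exponent bits across many elements.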

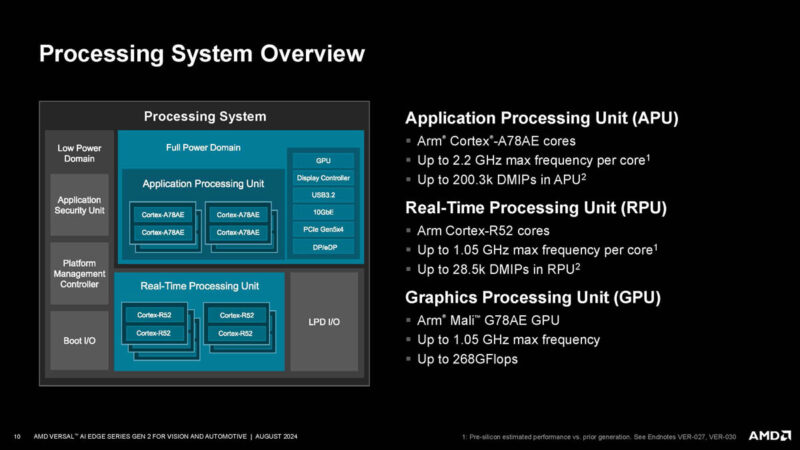

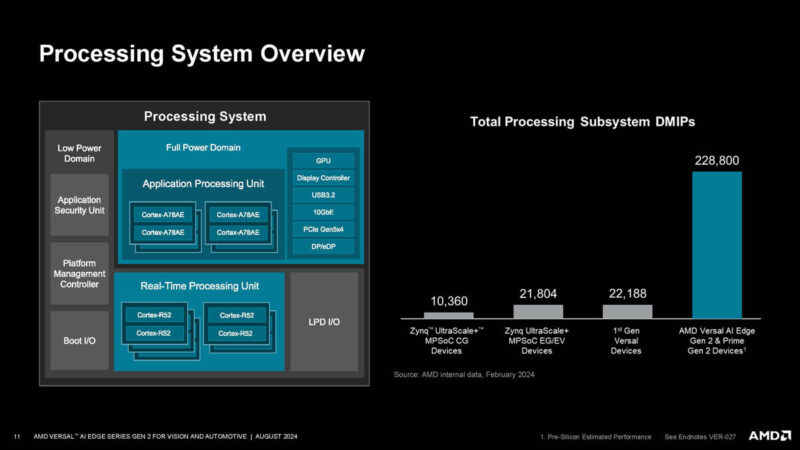

On the processing side, AMD has higher-performance application CPU cores, real-time cores, and an Arm GPU. If you hear people say AMD does not make Arm CPUs, remember this slide: AMD sells Arm-based processors for embedded markets.

The new processing system delivers more performance than the previous generation.

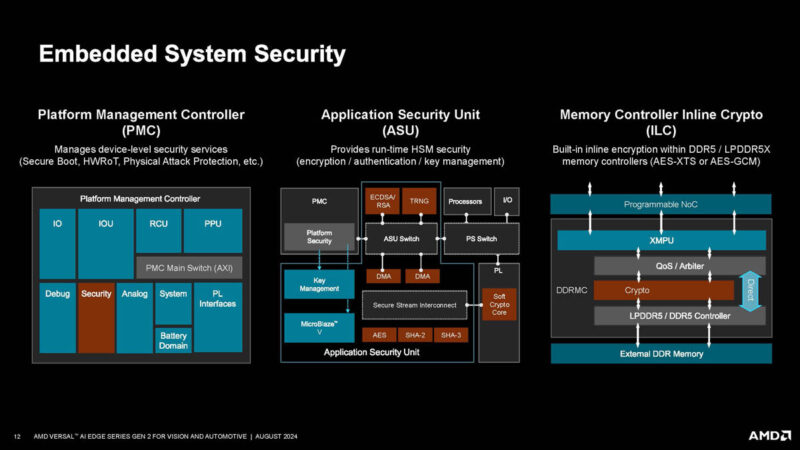

Embedded devices have long lifespans and are often deployed in places less secure than a data center, so security is a big deal for embedded systems.

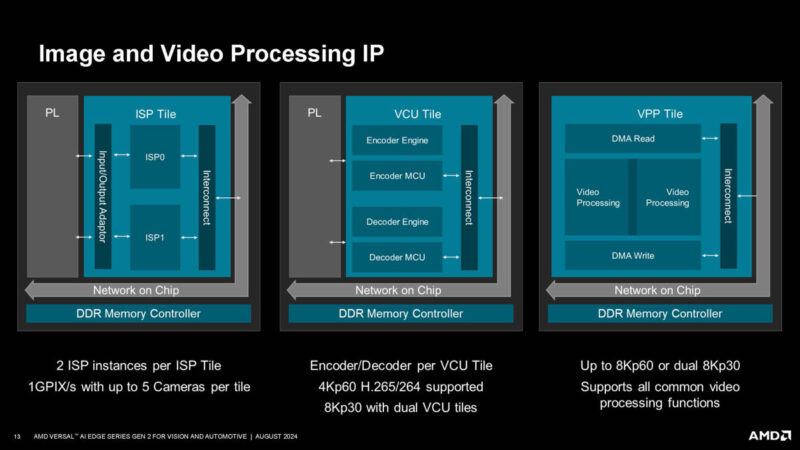

Here is the new video processing IP. These blocks matter if, for example, you have camera feeds that you want to run edge inference on.

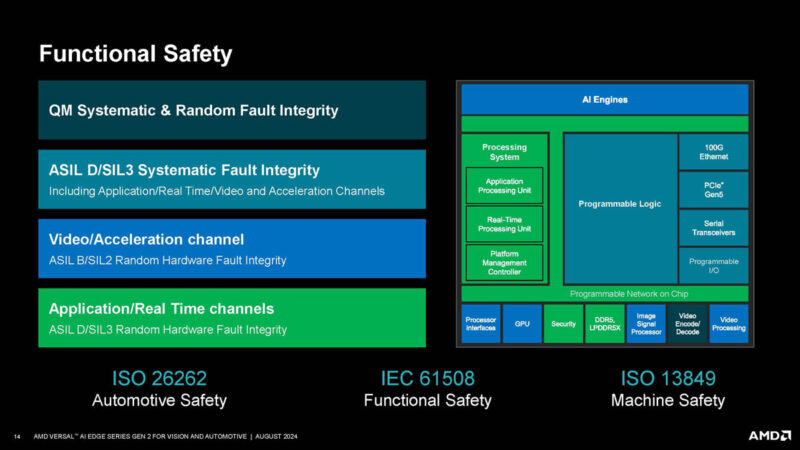

Functional safety is needed in some embedded applications, such as automotive, where chips must not fail, or must at least fail in predictable, safe ways.

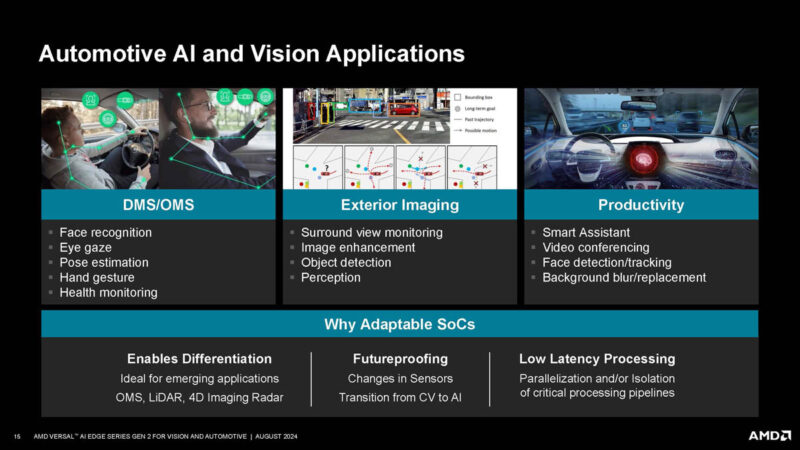

While folks often focus on automotive AI for exterior functions like autonomous driving, some newer vehicles also have cabin cameras that run AI inference to monitor the state of the occupants.

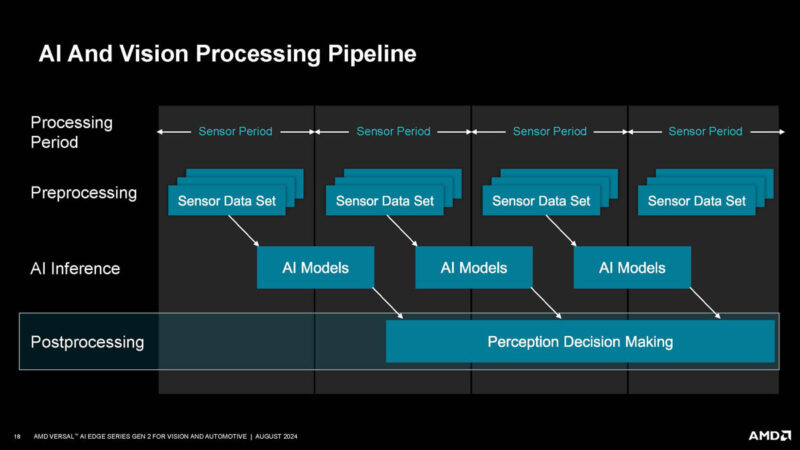

AMD's AI and vision processing pipeline starts with acquiring sensor data and preprocessing it.

Next in the pipeline is AI inference.

Once the sensor data has been fed through the AI models, the output drives a decision. For example, a cabin camera sees the driver, AI inference determines that the video feed shows the driver falling asleep, and the system decides to prompt the driver to take a break.
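To make the three stages concrete, here is a minimal Python sketch of the sense, infer, decide loop using the drowsy-driver example. Everything here is hypothetical: the model is a stand-in callable that returns a drowsiness score, and the `threshold` and `window` values are made up. On the actual chip, these stages would be spread across programmable logic, AI Engines, and CPU cores rather than running as plain Python.

```python
from typing import Callable, Iterable, List, Sequence


def preprocess(pixels: Sequence[int]) -> List[float]:
    """Hypothetical preprocessing: scale 8-bit pixel values to [0.0, 1.0]."""
    return [p / 255.0 for p in pixels]


def should_prompt_break(scores: Sequence[float], threshold: float = 0.8,
                        window: int = 3) -> bool:
    """Decision step: prompt only if the last `window` scores all exceed `threshold`.

    Requiring several consecutive drowsy frames avoids alerting on a single blink.
    """
    recent = scores[-window:]
    return len(recent) == window and all(s > threshold for s in recent)


def run_driver_monitor(frames: Iterable[Sequence[int]],
                       model: Callable[[List[float]], float],
                       threshold: float = 0.8, window: int = 3) -> List[bool]:
    """Run sense -> infer -> decide per frame and return the per-frame decisions."""
    scores: List[float] = []
    decisions: List[bool] = []
    for pixels in frames:
        features = preprocess(pixels)   # 1. sensor data -> model input
        scores.append(model(features))  # 2. AI inference (drowsiness score)
        decisions.append(should_prompt_break(scores, threshold, window))  # 3. decision
    return decisions


# Stand-in "model" for illustration only: uses the first normalized pixel as the score.
fake_model = lambda features: features[0]
print(run_driver_monitor([[255], [255], [255], [0], [255]], fake_model))
```

The decision stage keeps a short history rather than reacting to each frame independently, which is one simple way to turn noisy per-frame inference into a stable action.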

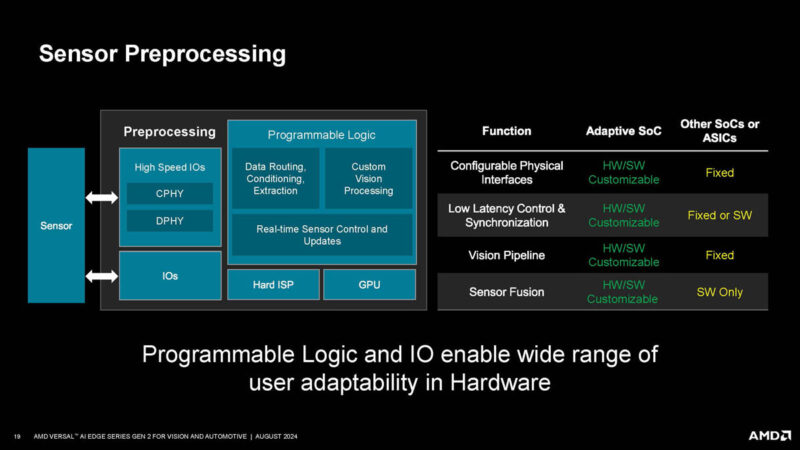

Since this is also FPGA-based, one can create a custom sensor preprocessing pipeline.
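One reason programmable logic suits sensor preprocessing is that it can process pixels as they stream in, keeping only a few rows in on-chip line buffers instead of a whole frame. Below is a Python model of that pattern for a 3x3 window. The box sum is a stand-in for whatever filter a designer might build (denoise, edge detection, and so on); this is a generic illustration, not an AMD IP block.

```python
from collections import deque
from typing import Iterable, List, Sequence


def streaming_box3x3(rows: Iterable[Sequence[int]], width: int) -> List[List[int]]:
    """Sum each 3x3 neighborhood of an image that arrives one row at a time.

    Only the three most recent rows are buffered, mirroring FPGA line
    buffers. Output covers 'valid' positions only (no border padding).
    """
    lines = deque(maxlen=3)  # line buffers: two previous rows plus the current row
    out: List[List[int]] = []
    for row in rows:
        if len(row) != width:
            raise ValueError("every row must have exactly `width` pixels")
        lines.append(row)  # oldest row falls out automatically
        if len(lines) < 3:
            continue  # pipeline is still filling
        out.append([
            sum(line[x + dx] for line in lines for dx in (-1, 0, 1))
            for x in range(1, width - 1)
        ])
    return out


print(streaming_box3x3([[1] * 5] * 5, 5))
```

The memory needed grows with image width rather than image size, which is what makes this style of pipeline a good fit for on-chip block RAM.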

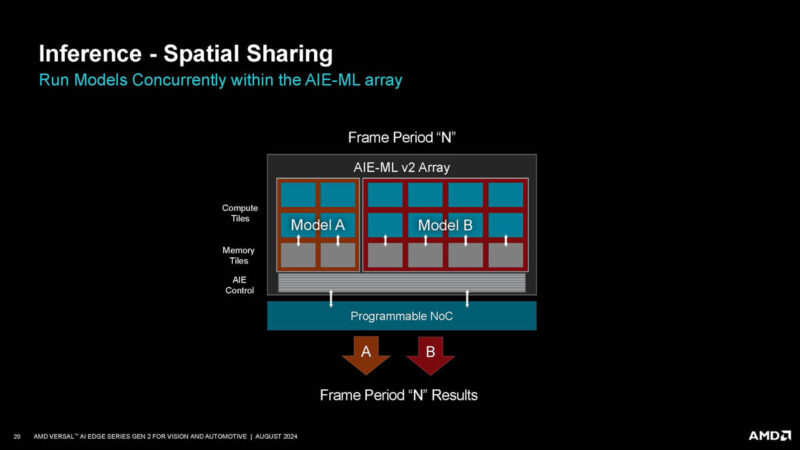

AMD also says that you can run multiple models on its AI Engines at the same time.
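As a rough illustration of running several models side by side, the Python sketch below splits an AI Engine array among models in proportion to a demand weight. It assumes, purely for illustration, that the array is carved into whole columns and that each model needs at least one column. AMD's actual partitioning granularity and tooling are not described here, and the column count and model names are hypothetical.

```python
import math
from typing import Dict, Tuple


def partition_columns(total_columns: int,
                      demands: Dict[str, float]) -> Dict[str, Tuple[int, int]]:
    """Assign each model a contiguous [start, end) range of array columns.

    Hypothetical scheme: every model gets one column, then the spare columns
    are split in proportion to demand using largest-remainder rounding, so
    the allocations always add up to total_columns.
    """
    names = list(demands)
    if not names or len(names) > total_columns:
        raise ValueError("need between 1 and total_columns models")
    if any(d <= 0 for d in demands.values()):
        raise ValueError("demands must be positive")
    spare = total_columns - len(names)  # columns left after one per model
    total_demand = sum(demands.values())
    shares = {n: spare * demands[n] / total_demand for n in names}
    counts = {n: 1 + math.floor(shares[n]) for n in names}
    # Hand any leftover columns to the models with the largest fractional shares.
    leftover = total_columns - sum(counts.values())
    by_remainder = sorted(names, key=lambda n: shares[n] - math.floor(shares[n]), reverse=True)
    for n in by_remainder[:leftover]:
        counts[n] += 1
    # Lay the models out left to right as contiguous column ranges.
    ranges: Dict[str, Tuple[int, int]] = {}
    start = 0
    for n in names:
        ranges[n] = (start, start + counts[n])
        start += counts[n]
    return ranges


print(partition_columns(10, {"cabin_monitor": 1, "lane_detect": 1, "parking": 2}))
```

Spatial sharing like this lets every model run continuously, at the cost of each model getting only part of the array's compute.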

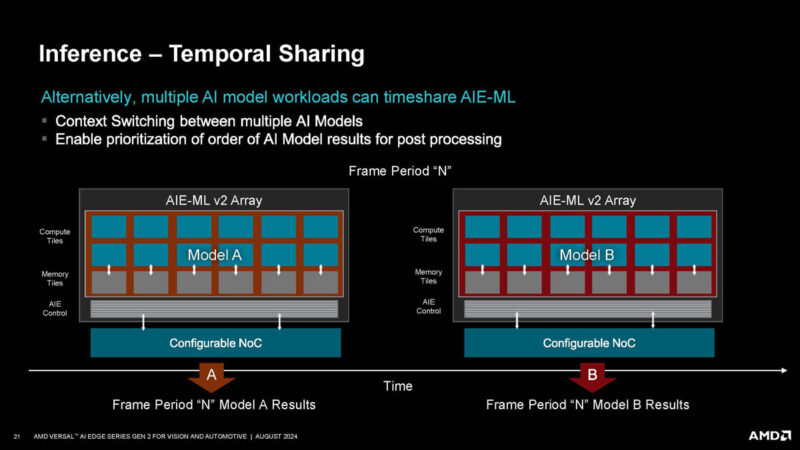

Alternatively, the entire array can context switch between models.
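The alternative is time sharing, where each model gets the whole array for a slice of time. The Python sketch below is a generic round-robin model with an assumed fixed cost per context switch. It is not AMD's scheduler, and `slice_us`, `switch_us`, and `horizon_us` are hypothetical parameters rather than AMD figures.

```python
from typing import List, Optional, Tuple


def time_slice_schedule(models: List[str], slice_us: float, switch_us: float,
                        horizon_us: float) -> Tuple[List[Tuple[float, str]], float]:
    """Round-robin the whole array between models within a time horizon.

    Returns (start_time_us, model) for each compute slice, plus the fraction
    of the horizon spent computing rather than context switching.
    """
    if not models or slice_us <= 0 or switch_us < 0:
        raise ValueError("need models, a positive slice, and a non-negative switch cost")
    schedule: List[Tuple[float, str]] = []
    t = 0.0
    busy = 0.0
    current: Optional[str] = None
    i = 0
    while True:
        model = models[i % len(models)]
        needs_switch = model != current
        cost = slice_us + (switch_us if needs_switch else 0.0)
        if t + cost > horizon_us:
            break  # the next slice would not finish inside the horizon
        if needs_switch:
            t += switch_us  # context switch: reconfigure the array for the next model
            current = model
        schedule.append((t, model))
        t += slice_us
        busy += slice_us
        i += 1
    return schedule, busy / horizon_us


print(time_slice_schedule(["cabin_monitor", "parking"], slice_us=10, switch_us=2, horizon_us=50))
```

The trade-off is the usual one for time slicing: longer slices amortize the switch overhead better, but each model waits longer before it gets the array again.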

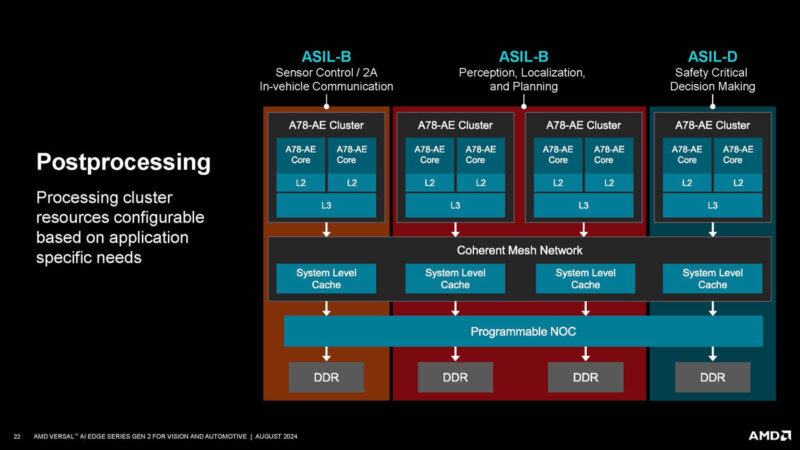

Post-processing can then run on the CPU cores.
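A typical example of CPU-side post-processing for vision models is non-maximum suppression (NMS), which removes duplicate overlapping detections, for example several boxes around the same parking space. AMD did not specify which post-processing steps it runs, so treat this as a generic greedy NMS sketch in Python. The box format and the 0.5 IoU threshold are assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring boxes, dropping heavy overlaps.

    Returns indices of kept boxes in descending score order.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any box already kept.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]
print(nms(boxes, [0.9, 0.8, 0.7]))
```

This kind of branchy, data-dependent logic is a reasonable fit for general-purpose CPU cores, while the dense tensor math stays on the AI Engines.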

One example of putting this all together is automated parking.

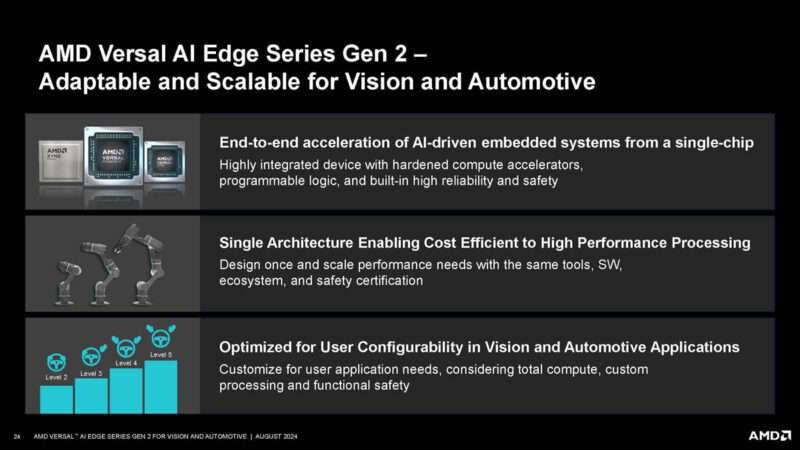

Here is the summary slide.

For those thinking of applications in other areas, AMD also has other Versal lines.

Final Words

Overall, it is cool to see this advance. The edge is perhaps the place most up for grabs in the AI race. There are so many applications and use cases that the likely outcome is a much more heterogeneous mix of chips than in the data center, PC, and phone markets combined.