ASRock Rack 1U4LW-B650/2L2T External Hardware Overview

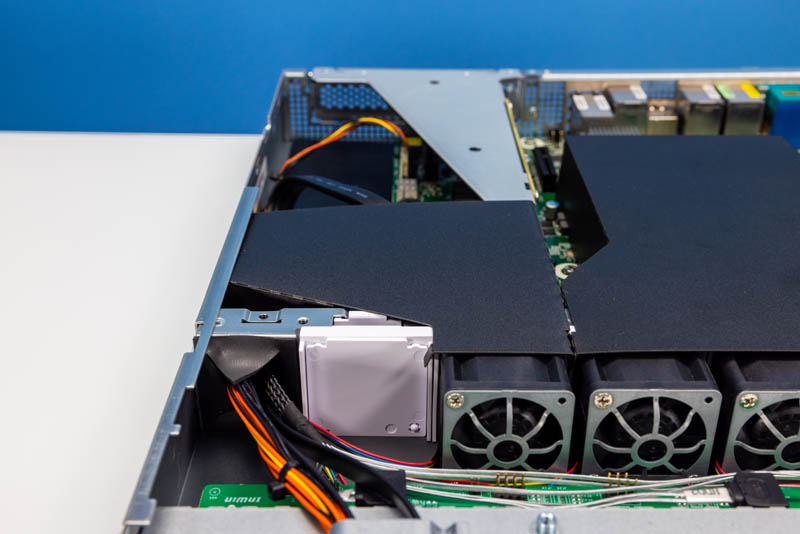

The server itself is a 1U rackmount chassis about 21″ (533.4mm) deep. While it is not the shortest-depth server, it is designed to fit even the shallow racks often found in low-cost hosting/colocation.

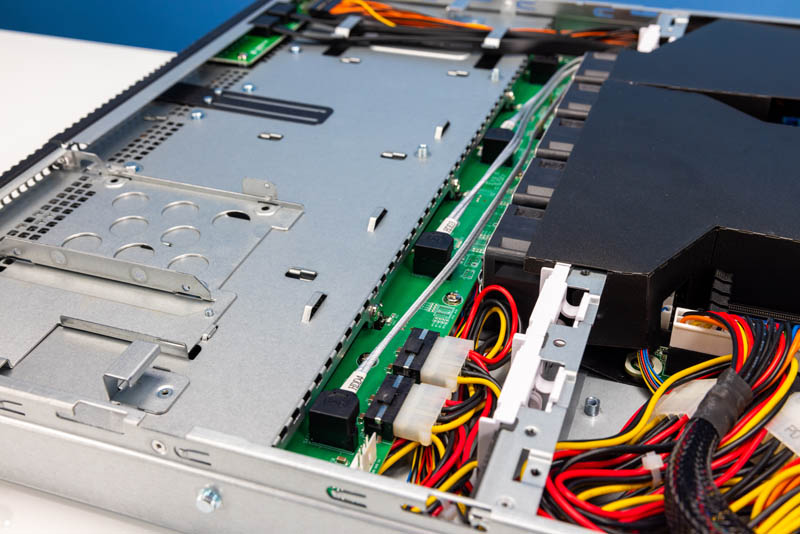

The front of the chassis has the usual USB ports, power and reset buttons, and status LEDs, but the biggest feature is the four 3.5″ bays. 3.5″ SATA bays are still popular in this market. The 3.5″ trays are tool-less for large hard drives, and 2.5″ SSDs can be mounted with screws.

These are hot-swap bays, thanks to the backplane just behind the storage. Some servers in this segment do not offer hot-swappable bays.

Just above these 3.5″ bays is an optical drive bay slot. In our system, that space had a 2.5″ mounting point for an extra SSD, although power and SATA cables were not routed there. Still, it is another option for adding a drive to the server.

Moving to the rear of the server, this is the single-PSU option; ASRock Rack has a redundant PSU variant as well. Our system came with an 80Plus Gold power supply. The spec page says it is supposed to be an 80Plus Platinum PSU, although most 400W PSUs top out at Gold levels. (Update 2023-09-03: ASRock Rack has updated its website after this article was published to say the Gold PSU we reviewed is correct.) Our unit was also slightly pre-production: the “preliminary” tag was removed from the server after we had tested and reviewed it, while the video was being edited.
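To put the Gold versus Platinum question in perspective, here is a minimal sketch of the wall-power arithmetic. It assumes the 80 Plus 115V non-redundant thresholds at 50% load (90% for Gold, 92% for Platinum) and a hypothetical 200W DC load, roughly half of a 400W unit. Real PSUs have their own efficiency curves, so treat the numbers as illustrative only.

```python
# Minimal sketch: AC (wall) draw needed to deliver a DC load at a fixed PSU efficiency.
# Assumption: efficiencies are the 80 Plus 115V non-redundant thresholds at 50% load.
GOLD_50PCT = 0.90
PLATINUM_50PCT = 0.92


def wall_power(dc_load_w: float, efficiency: float) -> float:
    """Return AC input power in watts for a DC load at a given efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return dc_load_w / efficiency


if __name__ == "__main__":
    load = 200.0  # hypothetical DC load, about 50% of a 400W PSU
    gold = wall_power(load, GOLD_50PCT)
    plat = wall_power(load, PLATINUM_50PCT)
    print(f"Gold: {gold:.1f} W, Platinum: {plat:.1f} W, delta: {gold - plat:.1f} W")
```

At this assumed load the gap is only a few watts at the wall, which is why the Gold versus Platinum label matters less than the total system draw.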

The rear I/O is super interesting. Here we get a serial port and a VGA port, plus four USB ports and an out-of-band management port. What is different is that we also get DisplayPort and HDMI ports, because the new AMD Ryzen 7000 series has an integrated GPU. We saw this feature on the Intel side as well in our recent ASRock Rack W680D4U-2L2T/G5 Motherboard Review.

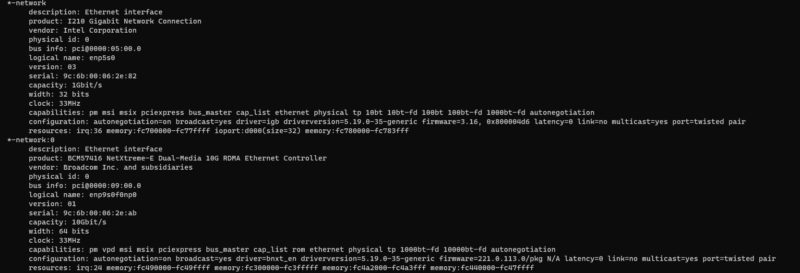

Aside from having two more USB ports, the networking is also a bit different. We have two Intel i210 NICs for 1GbE. Instead of Intel X710 10GBASE-T ports, the two 10GbE ports here are handled by a Broadcom BCM57416 NetXtreme-E NIC.

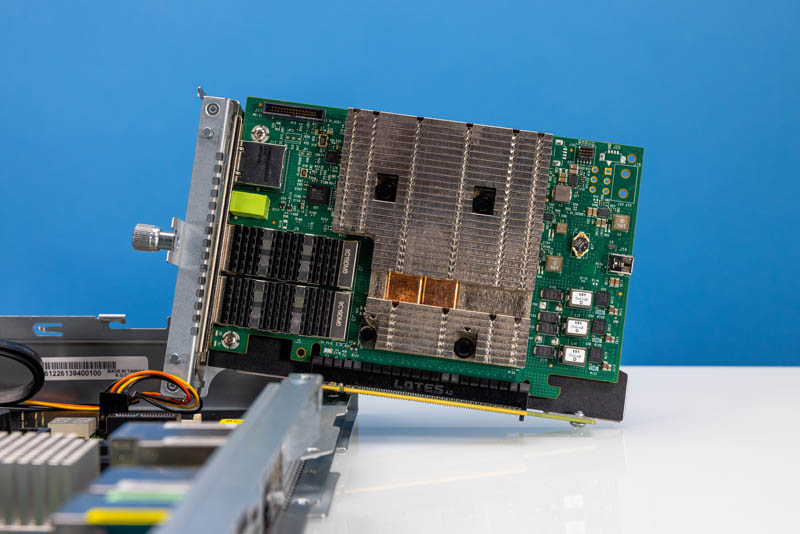

On the side of the chassis, there is a lone PCIe Gen4 x16 riser slot, but at least it takes full-height cards.

With a fast slot like this, one can even add things like dual 100GbE networking on an NVIDIA BlueField-2 DPU, which also has its own Arm system. Those cards cost about as much as the entire ASRock Rack barebones, but it is possible.

The PCIe slot supports a maximum of 75W, and there is airflow directed to the riser. That means that, aside from NICs, one could also install lower-power GPUs, FPGAs, or even HBA/RAID controllers.
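When picking a card for the riser, a simple budget check against the 75W slot limit is a reasonable first filter. The sketch below is illustrative only: the card names and wattages are hypothetical placeholders, not measurements of specific products, and it ignores cooling headroom.

```python
# Hypothetical budget check of add-in cards against the 75W slot limit.
SLOT_BUDGET_W = 75.0  # slot power limit stated in the review


def fits_slot(card_power_w: float, budget_w: float = SLOT_BUDGET_W) -> bool:
    """True if a card's (assumed) maximum draw fits within the slot budget."""
    if card_power_w < 0:
        raise ValueError("card power cannot be negative")
    return card_power_w <= budget_w


# Hypothetical cards and power figures, for illustration only.
CANDIDATES = {
    "dual-port NIC": 25.0,
    "low-profile GPU": 70.0,
    "full-size GPU": 250.0,
}

if __name__ == "__main__":
    for name, watts in CANDIDATES.items():
        verdict = "fits" if fits_slot(watts) else "exceeds"
        print(f"{name}: {watts:.0f} W {verdict} the {SLOT_BUDGET_W:.0f} W budget")
```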

Clearly the motherboard and CPU are the stars of this system, so let us get to the topology, management, and performance.

Patrick, you say the CPU matters. Does this mean that the motherboard does not have the usual options to set a custom (lower) power limit? If it does have that option, it might be even better in terms of the flexibility it offers. Particularly for home lab setups that would be a nice feature.

Jorik – Great question. Just added a screenshot of the AMD Overclocking menu in the BIOS so there are levers to tweak.

Hopefully there is an “Eco Mode” option in the BIOS, or a way to recreate it manually, so you can run the X-series chips at a reasonable power limit but still have the option to let them run hot if needed?

Any word on availability and pricing?

Regarding Jorik's question:

Is ECO mode available in BIOS like on all desktop boards?

That would allow anyone to bring TDP down to low levels on -X CPUs (105W for the 7950X) in a very safe and easy way, even simpler than setting TDP manually.

Exciting! Patrick, I pulled the mainboard user manual but it only says “frequency and voltage” are in the overclock section (no details given). Can you advise if one can dial in a maximum power (watts) or amps (e.g. via PBO or Curve Optimizer)?

A 7950X power-limited to ~95-105W actual maximum socket power would be killer in this system if one needs the cores.
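Note that the Eco Mode figure (105W for the 7950X) is a TDP rating, not actual socket power. AMD's socket power limit, PPT, is commonly cited as about 1.35× TDP (the stock 170W TDP 7950X has a 230W PPT). The sketch below assumes that ratio holds. Under that assumption, a 105W Eco Mode setting allows roughly 142W of socket power, and hitting ~95-105W of actual socket power would need a TDP-equivalent setting in the 70-78W range.

```python
# Sketch assuming AMD's commonly cited desktop ratio PPT = 1.35 x TDP.
# Integer math avoids floating-point surprises on .5 boundaries.
PPT_NUM, PPT_DEN = 135, 100


def ppt_from_tdp(tdp_w: int) -> int:
    """Approximate socket power limit (PPT) for a TDP setting, rounded half up."""
    return (tdp_w * PPT_NUM + PPT_DEN // 2) // PPT_DEN


def tdp_for_target_ppt(ppt_w: float) -> float:
    """TDP-equivalent setting that would yield roughly the requested PPT."""
    return ppt_w * PPT_DEN / PPT_NUM


if __name__ == "__main__":
    for tdp in (170, 105, 65):
        print(f"TDP {tdp} W -> PPT about {ppt_from_tdp(tdp)} W")
    for target in (95, 105):
        print(f"PPT {target} W needs a TDP setting of about {tdp_for_target_ppt(target):.0f} W")
```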

7950X3D now

If the motherboard allows you to set TjMAX, that could also be a very practical lever to pull. On the 7000 series, the boost algorithm seems quite adept at taking the programmed thermal constraint into account; it is not the hard thermal throttling of yesteryear.

Setting TjMAX to, say, 75 °C should greatly reduce power consumption in a way that actually adapts to changes in ambient temperature.
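Why a temperature cap adapts to ambient conditions can be illustrated with a deliberately simplified steady-state thermal model, T_die = T_ambient + P × R_th. Solving for power gives the sustained draw at which the die reaches TjMAX. This is not AMD's actual boost algorithm, and the thermal resistance value below is a hypothetical placeholder for a 1U cooler.

```python
# Simplified steady-state model: T_die = T_ambient + P * R_th.
# This is an illustration, not AMD's boost algorithm.


def max_sustained_power(tjmax_c: float, ambient_c: float, r_th_c_per_w: float) -> float:
    """Power (W) at which the die settles exactly at TjMAX under this linear model."""
    if r_th_c_per_w <= 0:
        raise ValueError("thermal resistance must be positive")
    return max(0.0, (tjmax_c - ambient_c) / r_th_c_per_w)


R_TH = 0.3  # hypothetical cooler + heatsink thermal resistance, degC per W

if __name__ == "__main__":
    for tjmax, ambient in [(95, 25), (75, 25), (75, 35)]:
        p = max_sustained_power(tjmax, ambient, R_TH)
        print(f"TjMAX {tjmax} C, ambient {ambient} C -> about {p:.0f} W")
```

In this model, lowering the cap from 95 °C to 75 °C cuts the sustainable power, and a hotter inlet lowers it further without any manual change, which matches the adaptive behavior described above.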

Platforms like this are very appealing for their price/performance/power figures. Let me get right to my question: 32GB DDR5 UDIMM sticks are a known quantity, and 48GB sticks were apparently released recently as well. What does the future hold for 64GB (or 72/96GB) modules? What do STH (and its readers) think the limit for ECC UDIMM module size will be, and when? A related question: DDR4 UDIMMs have topped out at 32GB and we will never see larger ones, correct?

The product page for the motherboard alone says the x16 slot is PCIe 5.0, but it seems to be 4.0 on this server. I wonder if that is just because the riser is 4.0?

@AustinP

I think 64 GB DDR5 UDIMMs will surely be made, but it will take a while. Much like SRAM, DRAM density is also pushing up against the walls of physics and silicon harder than ever. You can only make the capacitors so thin and the electrons so few before your DRAM turns into a hardware RNG device. We are almost certainly in the diminishing returns era of DRAM manufacturing.

AFAIK DDR4 is slowly being phased out; there is a negligible chance that anyone will make higher-density DDR4 DRAM chips now.

Doesn’t the Xeon E series lack RDIMM support anyways?

When compared against that, I don't think there's anything missing from the actual spec sheets anymore vs. the Xeon E series.

As it was not mentioned, should one assume this suffers from the same issues as other AM5 mobos if you put 128GB of RAM in the slots?

It looks interesting, but having only one M.2 slot and a maximum of 32GB per memory module is a big mistake :-/

Now I'm interested (and sold). This server could be a perfect fit for a development environment, or even a homelab.

Only four SATA and one M.2 slot. Meh..

The problem with Ryzen 7000 (and Intel 13000) is that enormous single x16 PCIe 5 slot. Nobody outside the hyperscalers needs that slot. The solution is to split it into x16/x0 or x8/x8 depending on what is populated. This ‘server’ does not fix the problem.

I hope to see more Ryzen server motherboards like the one in this server. Like other commenters, I’d like to see more PCIe slots. Also would like to accommodate 110mm M.2 and large low noise heatsink/fan for use at home. uATX / ATX layout would be great. I’d prefer to skip the integrated 10G, I’d want SFP+ anyway. Give me an extra PCIe slot to install what I want instead.

I have been using a 5950X server for some time… very fast.

I am very curious to see how this generation performs.

This really is a pedestal board, not a 1U board. You can find boards (for other CPU sockets) where much of the PCIe is brought out in x8 cabled sockets. SFF8654, SlimSAS, not sure I remember the name correctly. These boards are good for 1U: you can wire up nvme backplanes, or run a load of SATA if the board is made right.

Anyway the PCIe lanes on this thing are mostly wasted by the case form factor. People who want a home Ryzen 7000 server: build a pedestal or 2U or 4U server using this board, you will have a much more useful machine.

ASRock Rack does have some interesting M.2 risers for 1U platforms, like the RB1U2M2_G4 and RB1U4M2_G4. I would be curious to see multiple RB1U4M2_G4 risers tested in a 1U. That could be nice for space-starved homelabs.

Wish the 2-drive model was x8 instead of x16.

Tested the non-10G version of this board (B650D4U) yesterday with a Ryzen 7950 CPU and Dynatron 1U watercooling. Performance is impressive (61500 CPU marks), but power draw peaks at 325 watts for the whole server during Geekbench 6.

With upgraded fans, the Dynatron handles it quite well (83–89°), but 325 watts is still a lot in a datacenter environment.

Will order a 7900X CPU to compare power draw and benchmark results.

https://browser.geekbench.com/v6/cpu/468424

https://www.passmark.com/baselines/V10/display.php?id=503406055051
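To see what a 325W peak means in a colocation context, here is a rough worst-case sketch. It treats the reported peak as if it were drawn continuously, which overstates real consumption. Circuits are often provisioned in amps, so it also converts watts to current, assuming a power factor of 1.0. The 120V and 230V figures are example supply voltages, not values from the comment.

```python
# Worst-case energy and current estimates from a reported peak wall draw.
HOURS_PER_YEAR = 24 * 365


def annual_kwh(avg_watts: float) -> float:
    """Energy in kWh if the server drew avg_watts continuously for a year."""
    return avg_watts * HOURS_PER_YEAR / 1000


def amps_at(watts: float, volts: float, power_factor: float = 1.0) -> float:
    """Current for a given real power, voltage, and (assumed) power factor."""
    return watts / (volts * power_factor)


if __name__ == "__main__":
    peak_w = 325  # peak whole-server draw reported in the comment above
    print(f"Upper bound: {annual_kwh(peak_w):.0f} kWh/year at a constant {peak_w} W")
    for volts in (120, 230):  # example supply voltages
        print(f"{peak_w} W at {volts} V -> {amps_at(peak_w, volts):.2f} A")
```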

Does anyone know if the x16 slot supports bifurcation? I didn’t see anything in the manual about it but figured I’d ask. Getting one of these is mighty tempting, I just wish it had a little more PCIe.

Does anyone have confirmation on whether the Broadcom BCM57416 supports NBase-T speeds? From what I’ve seen it does not, but I’m hoping someone with experience can confirm one way or another.

Patrick (or anyone with hands on experience), you reference the noise of the unit briefly, but don’t show it in the video. Just how bad is it?

We currently use Dell Precision 3930 Rack workstations for field deployments, and I think this with a 7900 would make an excellent next step up in CPU performance. But we operate in close proximity to the servers, and the Dells are practically silent below 60% load.

Hi Patrick,

I would be interested in the total power consumption using the 7950@105W (ECO). Would this be possible?

Where can I buy this?

@Pete

https://www.asrockrack.com/general/buy.asp

My only problem with your reviews is that you do not mention which “server” OS you test these with, or whether you had any issues installing it. Please expand on which OSes, specifically server OSes, run on these hardware platforms. Thank you.

Package idle is pretty uninteresting. What draws the system from the wall in idle?

This is really an impressive piece! We got one from a local disti in Australia (wisp.net.au) and are thinking of ordering another. Apparently more units are coming at the end of July, if anyone is from down under.

@netswitch

What mounting bracket and upgraded fans are you using?

I can't seem to find a compatible AM5 bracket for this cooler, only AM4.

Could someone post IOMMU groups?
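On Linux, IOMMU groups can be listed without digging through the boot log, because the kernel exposes them under /sys/kernel/iommu_groups/<group>/devices/. Here is a minimal sketch that reads that sysfs tree. It assumes a Linux host with the IOMMU enabled and returns an empty result otherwise.

```python
# Minimal sketch: list IOMMU groups from sysfs on a Linux host.
from pathlib import Path


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> dict[int, list[str]]:
    """Map IOMMU group number -> sorted PCI addresses; empty if sysfs tree is absent."""
    groups: dict[int, list[str]] = {}
    base = Path(root)
    if not base.is_dir():
        return groups
    for group_dir in base.iterdir():
        devices_dir = group_dir / "devices"
        if group_dir.name.isdigit() and devices_dir.is_dir():
            groups[int(group_dir.name)] = sorted(d.name for d in devices_dir.iterdir())
    return dict(sorted(groups.items()))


if __name__ == "__main__":
    for group, devices in iommu_groups().items():
        print(f"IOMMU group {group}: {', '.join(devices)}")
```

Pairing each address with `lspci -s <address>` output then shows which device sits in which group.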

IOMMU groups

```
[ 0.405364] iommu: Default domain type: Translated
[ 0.405364] iommu: DMA domain TLB invalidation policy: lazy mode
[ 0.423218] pci 0000:00:01.0: Adding to iommu group 0
[ 0.423235] pci 0000:00:02.0: Adding to iommu group 1
[ 0.423246] pci 0000:00:02.1: Adding to iommu group 2
[ 0.423257] pci 0000:00:02.2: Adding to iommu group 3
[ 0.423272] pci 0000:00:03.0: Adding to iommu group 4
[ 0.423286] pci 0000:00:04.0: Adding to iommu group 5
[ 0.423303] pci 0000:00:08.0: Adding to iommu group 6
[ 0.423313] pci 0000:00:08.1: Adding to iommu group 7
[ 0.423323] pci 0000:00:08.3: Adding to iommu group 8
[ 0.423343] pci 0000:00:14.0: Adding to iommu group 9
[ 0.423356] pci 0000:00:14.3: Adding to iommu group 9
[ 0.423408] pci 0000:00:18.0: Adding to iommu group 10
[ 0.423418] pci 0000:00:18.1: Adding to iommu group 10
[ 0.423428] pci 0000:00:18.2: Adding to iommu group 10
[ 0.423445] pci 0000:00:18.3: Adding to iommu group 10
[ 0.423455] pci 0000:00:18.4: Adding to iommu group 10
[ 0.423471] pci 0000:00:18.5: Adding to iommu group 10
[ 0.423482] pci 0000:00:18.6: Adding to iommu group 10
[ 0.423491] pci 0000:00:18.7: Adding to iommu group 10
[ 0.423502] pci 0000:01:00.0: Adding to iommu group 11
[ 0.423513] pci 0000:02:00.0: Adding to iommu group 12
[ 0.423523] pci 0000:02:01.0: Adding to iommu group 13
[ 0.423534] pci 0000:02:02.0: Adding to iommu group 14
[ 0.423544] pci 0000:02:03.0: Adding to iommu group 15
[ 0.423555] pci 0000:02:04.0: Adding to iommu group 16
[ 0.423565] pci 0000:02:08.0: Adding to iommu group 17
[ 0.423577] pci 0000:02:0c.0: Adding to iommu group 18
[ 0.423587] pci 0000:02:0d.0: Adding to iommu group 19
[ 0.423590] pci 0000:04:00.0: Adding to iommu group 13
[ 0.423593] pci 0000:05:00.0: Adding to iommu group 14
[ 0.423596] pci 0000:06:00.0: Adding to iommu group 15
[ 0.423599] pci 0000:07:00.0: Adding to iommu group 15
[ 0.423603] pci 0000:09:00.0: Adding to iommu group 17
[ 0.423606] pci 0000:09:00.1: Adding to iommu group 17
[ 0.423608] pci 0000:0a:00.0: Adding to iommu group 18
[ 0.423611] pci 0000:0b:00.0: Adding to iommu group 19
[ 0.423622] pci 0000:0c:00.0: Adding to iommu group 20
[ 0.423645] pci 0000:0d:00.0: Adding to iommu group 21
[ 0.423658] pci 0000:0d:00.1: Adding to iommu group 22
[ 0.423669] pci 0000:0d:00.2: Adding to iommu group 23
[ 0.423679] pci 0000:0d:00.3: Adding to iommu group 24
[ 0.423690] pci 0000:0d:00.4: Adding to iommu group 25
[ 0.423701] pci 0000:0d:00.5: Adding to iommu group 26
[ 0.423712] pci 0000:0d:00.6: Adding to iommu group 27
[ 0.423722] pci 0000:0e:00.0: Adding to iommu group 28
[ 0.649911] perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).
```
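For PCIe passthrough, the IOMMU group is the unit of assignment, so groups containing more than one device are the ones to check. Here is a minimal sketch that parses dmesg lines like those posted above and flags the multi-device groups. The sample is an excerpt copied from that log. The parser only interprets the "Adding to iommu group" lines and does not identify what the devices are.

```python
# Minimal sketch: parse "Adding to iommu group" dmesg lines and find shared groups.
import re
from collections import defaultdict

# Matches e.g. "pci 0000:00:14.0: Adding to iommu group 9"
LINE_RE = re.compile(
    r"pci ([0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]): Adding to iommu group (\d+)"
)


def parse_iommu_log(text: str) -> dict[int, list[str]]:
    """Map IOMMU group number -> PCI addresses, in log order."""
    groups: dict[int, list[str]] = defaultdict(list)
    for match in LINE_RE.finditer(text):
        groups[int(match.group(2))].append(match.group(1))
    return dict(groups)


def shared_groups(groups: dict[int, list[str]]) -> dict[int, list[str]]:
    """Groups with more than one device (these are passed through together)."""
    return {g: devs for g, devs in groups.items() if len(devs) > 1}


SAMPLE = """\
[ 0.423343] pci 0000:00:14.0: Adding to iommu group 9
[ 0.423356] pci 0000:00:14.3: Adding to iommu group 9
[ 0.423502] pci 0000:01:00.0: Adding to iommu group 11
[ 0.423544] pci 0000:02:03.0: Adding to iommu group 15
[ 0.423596] pci 0000:06:00.0: Adding to iommu group 15
[ 0.423599] pci 0000:07:00.0: Adding to iommu group 15
"""

if __name__ == "__main__":
    for group, devices in sorted(shared_groups(parse_iommu_log(SAMPLE)).items()):
        print(f"group {group}: {', '.join(devices)}")
```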

What about TPM 2.0 support in VMware? We tried the TPM-SPI 2.0 module, but VMware did not accept it and showed an internal error message. Does anyone know the proper BIOS configuration? We are running BIOS 10.18.

At least here, the firmware displays the fTPM 2.0 device from the CPU itself.