AMD Radeon RX 6800 Compute Related Benchmarks

We are going to compare the Radeon RX 6800 to our growing GPU data set. Note that benchmarks that use CUDA acceleration will not run on these cards, since CUDA is NVIDIA-only.

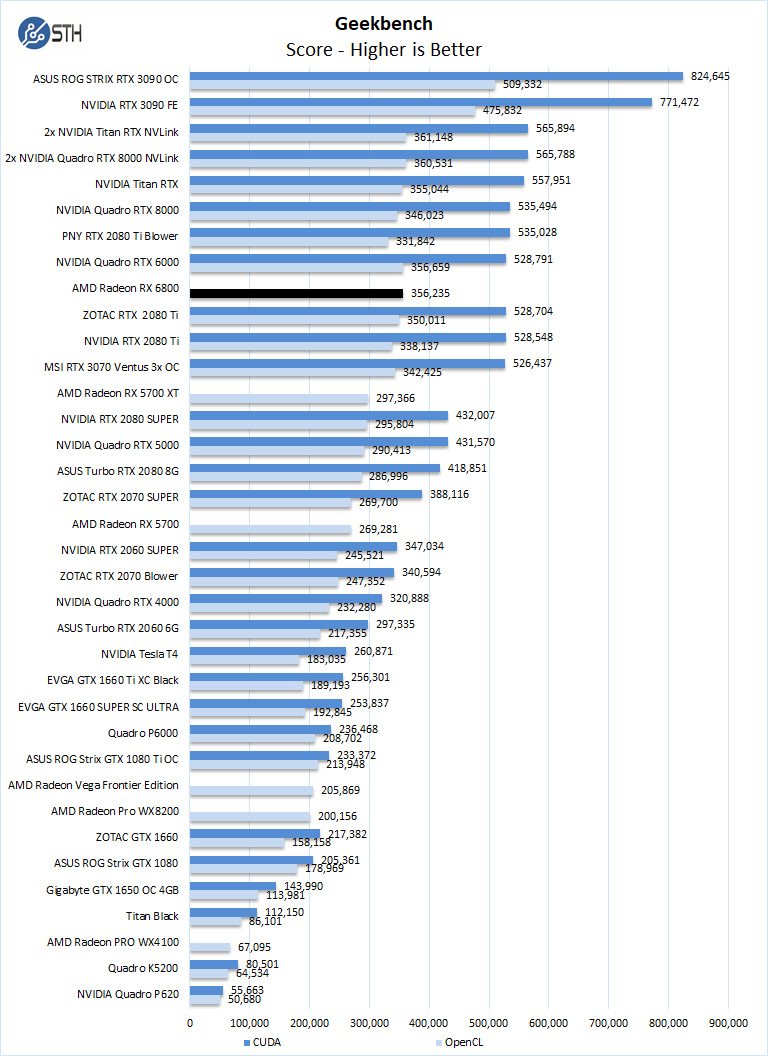

Geekbench 4

Geekbench 4 measures the compute performance of your GPU using workloads ranging from image processing to computer vision to number crunching.

In our first compute benchmark, the AMD Radeon RX 6800's OpenCL performance competes well with the NVIDIA GeForce RTX 2080 Ti, making it the strongest AMD graphics card we have tested to date. Hopefully, the Radeon RX 6900 (XT) will be even faster.

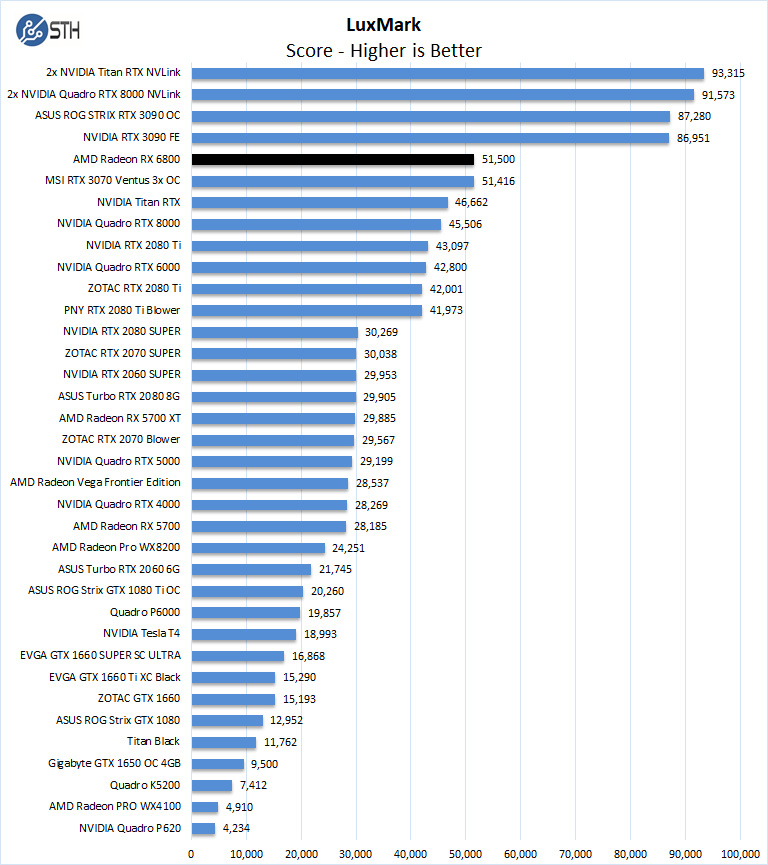

LuxMark

LuxMark is an OpenCL benchmark tool based on LuxRender.

In LuxMark, a single AMD Radeon RX 6800 matches up well with the GeForce RTX 3070 series.

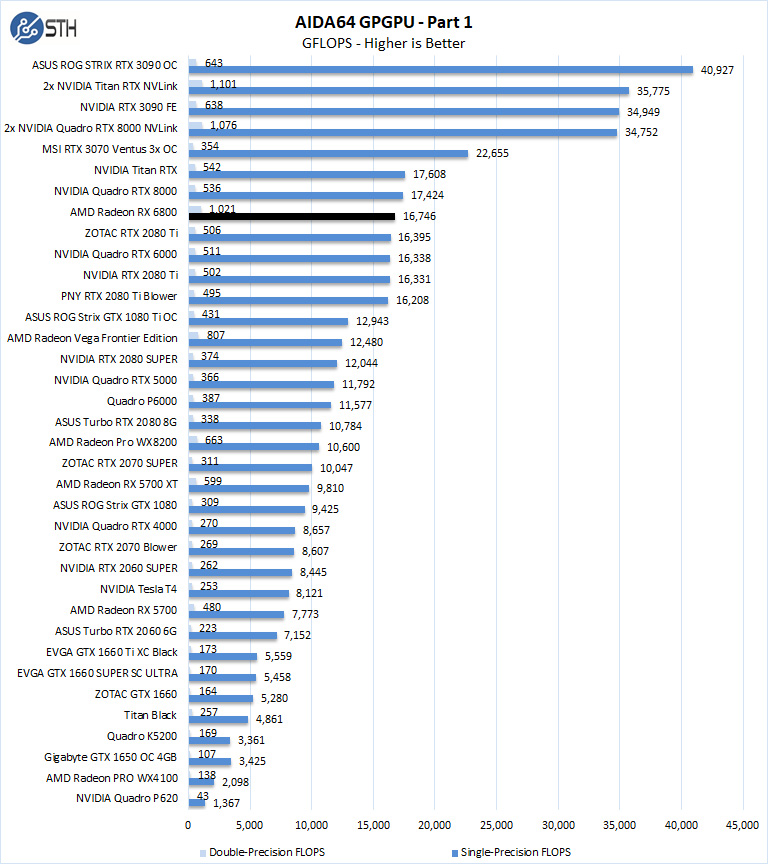

AIDA64 GPGPU

These benchmarks are designed to measure GPGPU computing performance via different OpenCL workloads.

- Single-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with single-precision (32-bit, “float”) floating-point data.

- Double-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with double-precision (64-bit, “double”) floating-point data.
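To make the FLOPS definition above concrete: a MAD (a * b + c) is conventionally counted as two floating-point operations, so a theoretical peak is lanes × clock × 2, and a measured figure is MADs executed × 2 ÷ elapsed time. The sketch below is a minimal illustration under those assumptions. It is not AIDA64's kernel, and the lane count and clock in the example are hypothetical. Python floats are 64-bit, so the timed loop is a double-precision MAD running on one CPU core.

```python
import time

# Each MAD (a * b + c) is counted as two floating-point operations:
# one multiply and one add.
FLOPS_PER_MAD = 2


def theoretical_peak_flops(lanes: int, clock_hz: float) -> float:
    """Peak FLOPS if every lane retires one MAD per clock cycle."""
    return lanes * clock_hz * FLOPS_PER_MAD


def measure_mad_flops(iterations: int = 1_000_000) -> float:
    """Time a scalar MAD loop and convert the result to FLOPS.

    Pure Python on a CPU core: this only illustrates the arithmetic,
    it says nothing about GPU throughput.
    """
    a, b, c = 1.0000001, 0.9999999, 0.0
    start = time.perf_counter()
    for _ in range(iterations):
        c = a * b + c  # one MAD per iteration
    elapsed = time.perf_counter() - start
    return iterations * FLOPS_PER_MAD / elapsed


if __name__ == "__main__":
    # Hypothetical GPU: 4096 lanes at 2.0 GHz.
    print(f"peak: {theoretical_peak_flops(4096, 2.0e9) / 1e12:.2f} TFLOPS")
    print(f"measured (CPU, Python): {measure_mad_flops() / 1e6:.1f} MFLOPS")
```

A real GPU benchmark keeps thousands of lanes busy with many independent MADs each, which is why measured GPU figures land in the TFLOPS range while this single-threaded loop does not.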

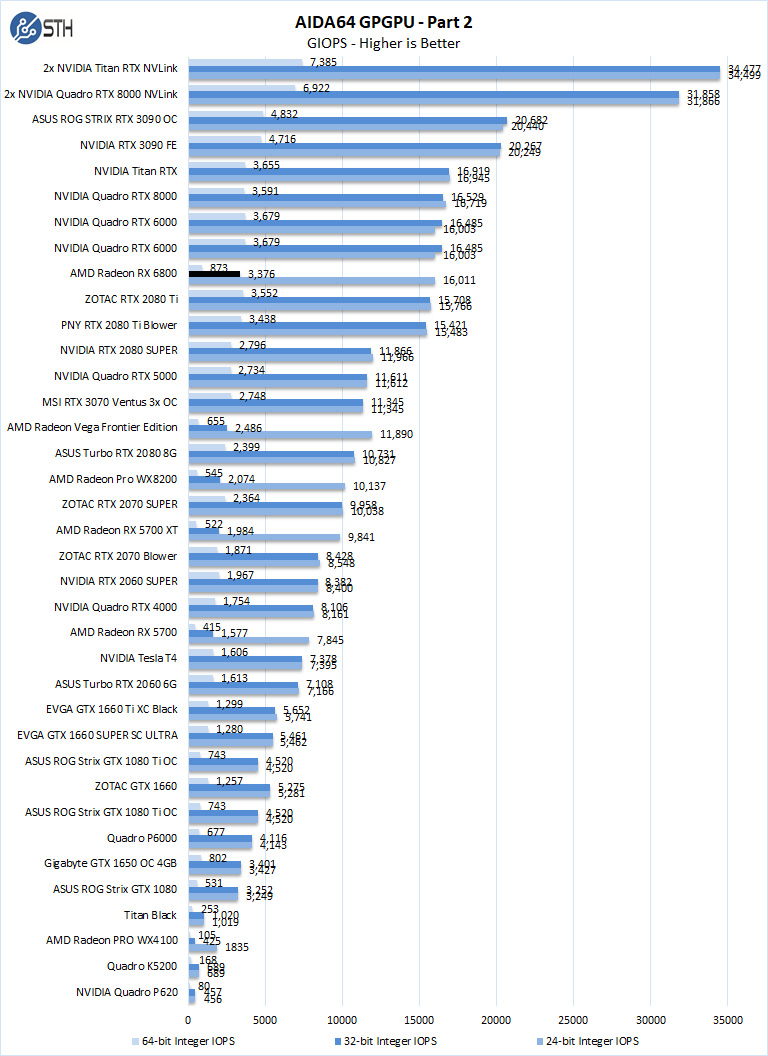

The next set of benchmarks from AIDA64 are:

- 24-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 24-bit integer (“int24”) data. This particular data type is defined in OpenCL because many GPUs are capable of executing int24 operations via their floating-point units.

- 32-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 32-bit integer (“int”) data.

- 64-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 64-bit integer (“long”) data. Most GPUs do not have dedicated execution resources for 64-bit integer operations, so instead, they emulate the 64-bit integer operations via existing 32-bit integer execution units.
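Two of the data-type notes above can be illustrated in a few lines. A float32 has a 24-bit significand (23 stored bits plus an implicit leading bit), so every integer up to 2^24 in magnitude is exactly representable, which helps explain why int24 math can be routed through floating-point units. The second part shows one generic way a 64-bit MAD can be built from 32-bit multiply and add-with-carry steps. This is a sketch under our own assumptions, not AIDA64's kernel or any vendor's actual instruction sequence, and the helper names are hypothetical.

```python
import struct

MASK32 = 0xFFFFFFFF
MASK64 = 0xFFFFFFFFFFFFFFFF


def fits_float32_exactly(n: int) -> bool:
    """True if integer n survives a round trip through an IEEE 754 float32."""
    return int(struct.unpack("<f", struct.pack("<f", float(n)))[0]) == n


def mul32_wide(a: int, b: int) -> tuple[int, int]:
    """Hypothetical model of a 32x32-bit multiply returning (low, high)
    32-bit halves, similar to separate mul_lo / mul_hi instructions."""
    product = (a & MASK32) * (b & MASK32)
    return product & MASK32, (product >> 32) & MASK32


def add32_carry(a: int, b: int, carry_in: int = 0) -> tuple[int, int]:
    """32-bit add with carry-in; returns (sum, carry_out)."""
    total = a + b + carry_in
    return total & MASK32, total >> 32


def mad64_emulated(a: int, b: int, c: int) -> int:
    """Compute (a * b + c) mod 2**64 using only 32-bit operations."""
    a_lo, a_hi = a & MASK32, (a >> 32) & MASK32
    b_lo, b_hi = b & MASK32, (b >> 32) & MASK32
    c_lo, c_hi = c & MASK32, (c >> 32) & MASK32

    # a_lo * b_lo contributes both halves of the 64-bit product.
    p_lo, p_hi = mul32_wide(a_lo, b_lo)
    # Cross terms are shifted left by 32, so only their low halves stay
    # below bit 64; a_hi * b_hi is shifted out entirely.
    cross1, _ = mul32_wide(a_lo, b_hi)
    cross2, _ = mul32_wide(a_hi, b_lo)
    p_hi, _ = add32_carry(p_hi, cross1)  # carries past bit 63 are dropped
    p_hi, _ = add32_carry(p_hi, cross2)

    # Add c, propagating the carry from the low word into the high word.
    r_lo, carry = add32_carry(p_lo, c_lo)
    r_hi, _ = add32_carry(p_hi, c_hi, carry)
    return (r_hi << 32) | r_lo


if __name__ == "__main__":
    print(fits_float32_exactly(2**24), fits_float32_exactly(2**24 + 1))
    print(hex(mad64_emulated(0x123456789ABCDEF0, 0x0FEDCBA987654321, 42)))
```

One 64-bit MAD expands into several 32-bit multiplies and adds here, which is consistent with 64-bit integer IOPS trailing the 32-bit figures on hardware that emulates them.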

Here the single-precision FLOPS and 24/32-bit integer IOPS results are close to NVIDIA's. Double-precision FLOPS are significantly better than NVIDIA's, but 64-bit integer performance is significantly slower.

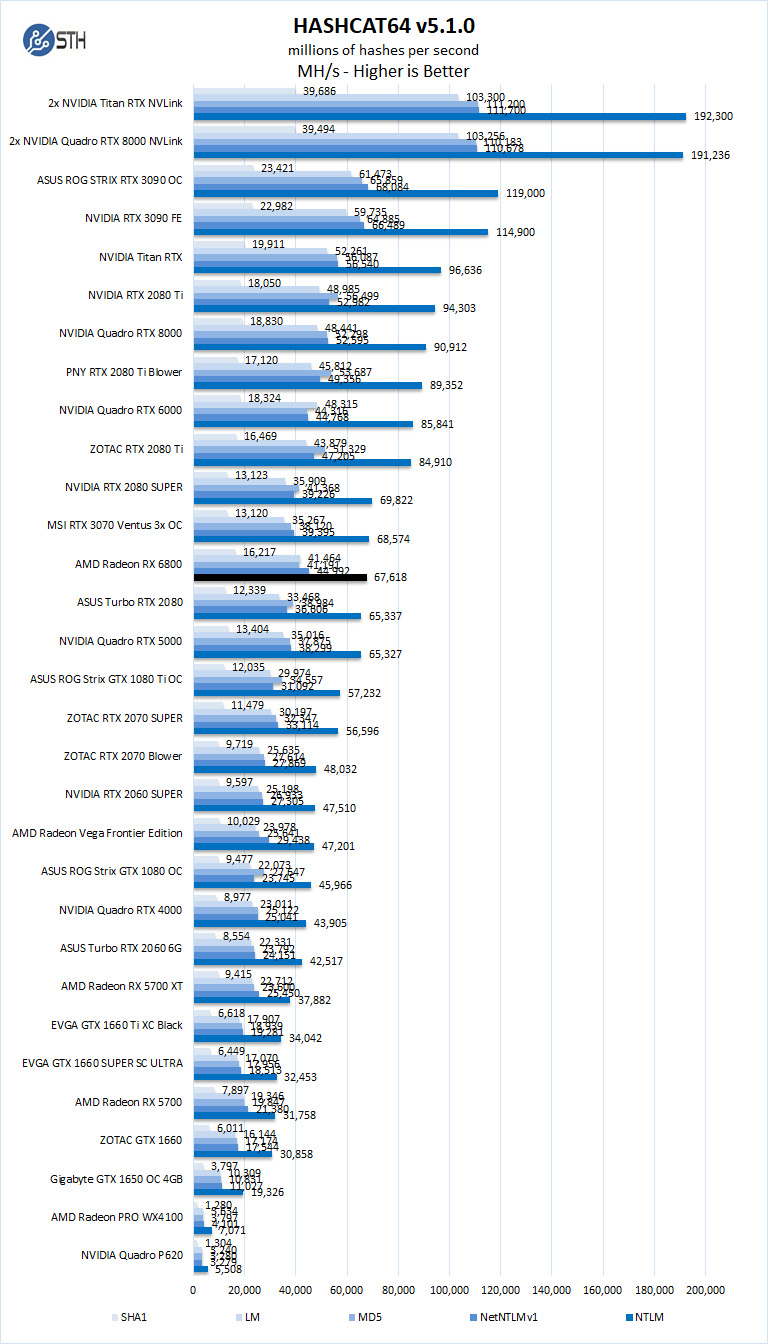

hashcat64

hashcat64 is a password-cracking benchmark that can run an impressive number of different algorithms. We used the Windows version and the simple command hashcat64 -b. Of these results, we used five in the graph. Users who are interested in hashcat can find the download here.
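The benchmark mode prints a speed for each hash mode it runs, scaled with unit prefixes, so the numbers need a common unit before a handful of them can be charted side by side. The sketch below assumes result lines shaped like `Speed.#1.........: 47823.6 MH/s (...)` and decimal (×1000) unit prefixes; both are assumptions to check against the output of your hashcat version, and the function name is hypothetical.

```python
import re

# Assumed decimal multipliers for hashcat's speed units.
UNIT_SCALE = {
    "H/s": 1.0,
    "kH/s": 1e3,
    "MH/s": 1e6,
    "GH/s": 1e9,
    "TH/s": 1e12,
}

# Assumed line format, e.g. "Speed.#1.........: 47823.6 MH/s (62.87ms) ...".
# The device field is captured lazily so "#*" (multi-device total) also matches.
SPEED_RE = re.compile(r"^Speed\.#(\S+?)\.+:\s+([\d.]+)\s+([kMGT]?H/s)")


def parse_speeds(output: str) -> list[tuple[str, float]]:
    """Return (device, hashes per second) for every speed line, in order."""
    speeds = []
    for line in output.splitlines():
        match = SPEED_RE.match(line.strip())
        if match:
            device, value, unit = match.groups()
            speeds.append((device, float(value) * UNIT_SCALE[unit]))
    return speeds
```

Feeding the captured stdout of the benchmark run into parse_speeds yields plain hashes-per-second values, which makes picking and comparing a few algorithms across cards straightforward.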

Here we can see that hashcat performance is generally at the NVIDIA GeForce RTX 2080 Ti level, except, of course, in the one test we sort our chart by.

Next, we will look at SPECviewperf 13 performance tests.
