AMD Radeon Pro WX 8200 Performance

We are going to run through a few benchmarks to give a sense of where this card performs. This is the start of a new series, so we are adding these results to some of our legacy results before filling in newer cards in subsequent reviews.

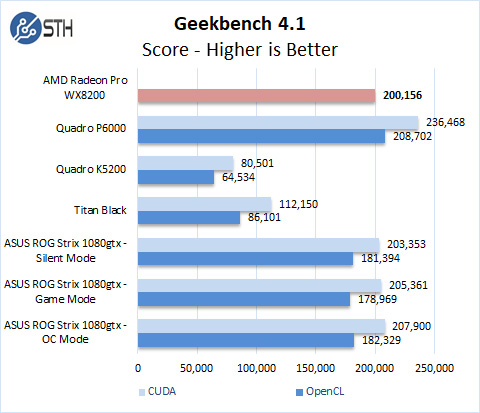

Geekbench 4

Geekbench 4 measures the compute performance of your GPU using workloads ranging from image processing to computer vision to number crunching.

The WX 8200 has no CUDA score, since CUDA runs only on NVIDIA GPUs, but it posts a strong OpenCL score that ranks alongside higher-end GPUs. OpenCL is the WX 8200's strong point, as we will also see in other benchmarks.

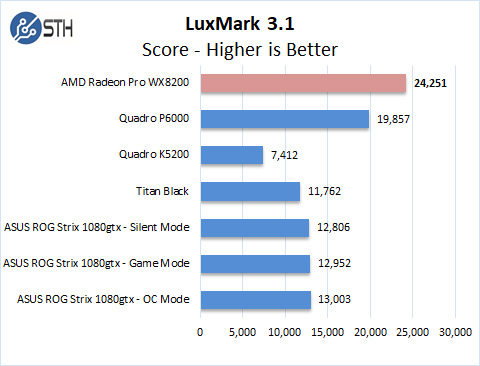

LuxMark

LuxMark is an OpenCL benchmark tool based on LuxRender.

In this OpenCL-based benchmark, the WX 8200 easily takes the top LuxMark score against the Pascal and earlier-generation NVIDIA cards in our results.

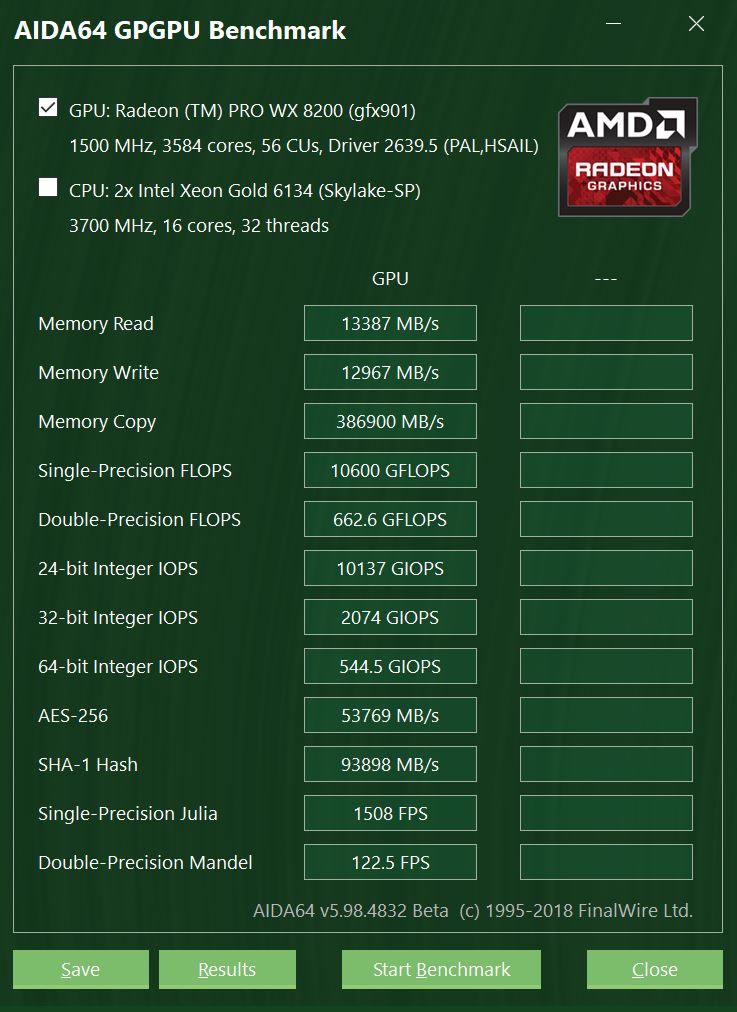

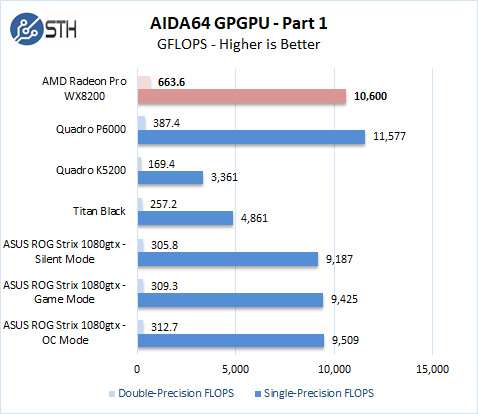

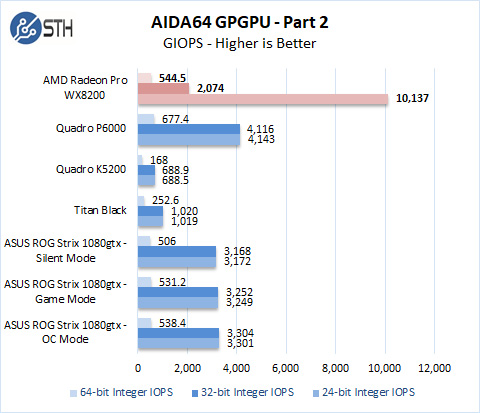

AIDA64 GPGPU

These benchmarks are designed to measure GPGPU computing performance via different OpenCL workloads.

Single-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with single-precision (32-bit, “float”) floating-point data.

Double-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with double-precision (64-bit, “double”) floating-point data.
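AIDA64's actual OpenCL kernels are not reproduced here. The following is only a minimal Python sketch, with hypothetical function names, of the arithmetic behind a MAD-based FLOPS figure: each multiply-add (a * b + c) is counted as two floating-point operations, so a theoretical peak is the ALU count times the clock times two. The ALU count and clock used below are illustrative values we picked, not WX 8200 specifications.

```python
# Hypothetical sketch: how a MAD-based FLOPS number is derived.
# Convention assumed here: one MAD (a * b + c) = 2 floating-point operations.
FLOPS_PER_MAD = 2


def mad_flops(mad_count: int, seconds: float) -> float:
    """Measured throughput in FLOPS for `mad_count` MADs completed in `seconds`."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return mad_count * FLOPS_PER_MAD / seconds


def theoretical_peak_flops(alus: int, clock_hz: float) -> float:
    """Peak FLOPS if every ALU retires one MAD per clock cycle."""
    return alus * clock_hz * FLOPS_PER_MAD


# Illustrative inputs only (not a real card's specs).
peak = theoretical_peak_flops(alus=4096, clock_hz=1.5e9)
print(f"{peak / 1e12:.2f} TFLOPS")  # 12.29 TFLOPS
```

The same formula applies to the double-precision test; the difference is simply how many double-precision MADs the hardware can retire per clock.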

The next set of AIDA64 benchmarks measures integer throughput.

24-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 24-bit integer (“int24”) data. This particular data type is defined in OpenCL because many GPUs are capable of executing int24 operations via their floating-point units.
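The reason 24 bits is the magic number: an IEEE 754 single-precision float has a 24-bit significand (23 stored bits plus an implicit leading 1), so every integer with magnitude up to 2^24 is exactly representable in a float32. The short Python sketch below demonstrates that boundary by round-tripping values through float32 with the standard `struct` module; the helper names are our own and are not part of OpenCL or AIDA64.

```python
import struct

# float32 significand width: 23 stored bits + 1 implicit leading bit.
FLOAT32_SIGNIFICAND_BITS = 24


def to_float32(value: float) -> float:
    """Round a Python float (a double) to IEEE 754 single precision and back."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


def exact_in_float32(n: int) -> bool:
    """True if the integer n survives a round trip through float32 unchanged."""
    return int(to_float32(float(n))) == n


limit = 1 << FLOAT32_SIGNIFICAND_BITS  # 2**24 = 16,777,216
print(exact_in_float32(limit - 1))  # True
print(exact_in_float32(limit))      # True
print(exact_in_float32(limit + 1))  # False: rounds to 16,777,216
```

Integers inside this range can ride on the float hardware without losing precision, which is what makes a dedicated int24 type attractive on GPUs.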

32-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 32-bit integer (“int”) data.

64-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 64-bit integer (“long”) data. Most GPUs do not have dedicated execution resources for 64-bit integer operations, so instead, they emulate the 64-bit integer operations via existing 32-bit integer execution units.
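To make the emulation point concrete, here is a hypothetical Python sketch of a 64-bit integer MAD built only from 32-bit pieces: each 64-bit operand is split into high and low 32-bit words, the add propagates a carry between words, and the multiply uses a 32-bit "multiply low" and "multiply high" pair. `mul_lo32` and `mul_hi32` are our own stand-ins for 32-bit hardware multiply instructions (OpenCL C does expose a similar `mul_hi` built-in); this is not how any particular GPU or driver actually schedules the work. It shows why a single 64-bit MAD costs several 32-bit operations.

```python
MASK32 = 0xFFFFFFFF


def mul_lo32(a: int, b: int) -> int:
    """Model of a 32-bit multiply that keeps the low 32 bits of the product."""
    return (a * b) & MASK32


def mul_hi32(a: int, b: int) -> int:
    """Model of a 32-bit multiply-high: upper 32 bits of the 64-bit product."""
    return ((a * b) >> 32) & MASK32


def split64(x: int) -> tuple[int, int]:
    """Split an unsigned 64-bit value into (high, low) 32-bit words."""
    return (x >> 32) & MASK32, x & MASK32


def join64(hi: int, lo: int) -> int:
    """Recombine two 32-bit words into one 64-bit value."""
    return (hi << 32) | lo


def add64(a: int, b: int) -> int:
    """64-bit add built from two 32-bit adds plus a carry (wraps mod 2**64)."""
    a_hi, a_lo = split64(a)
    b_hi, b_lo = split64(b)
    lo_sum = a_lo + b_lo
    carry = lo_sum >> 32  # 1 if the low-word add overflowed 32 bits
    lo = lo_sum & MASK32
    hi = (a_hi + b_hi + carry) & MASK32
    return join64(hi, lo)


def mul64(a: int, b: int) -> int:
    """Low 64 bits of a * b using only 32-bit multiplies and adds."""
    a_hi, a_lo = split64(a)
    b_hi, b_lo = split64(b)
    lo = mul_lo32(a_lo, b_lo)
    # Cross terms only touch the high word; a_hi * b_hi lands above bit 63.
    hi = (mul_hi32(a_lo, b_lo) + mul_lo32(a_hi, b_lo) + mul_lo32(a_lo, b_hi)) & MASK32
    return join64(hi, lo)


def mad64(a: int, b: int, c: int) -> int:
    """Emulated 64-bit MAD: (a * b + c) mod 2**64."""
    return add64(mul64(a, b), c)
```

Counting the calls, one emulated 64-bit MAD here needs four 32-bit multiplies and several 32-bit adds, which is consistent with 64-bit integer IOPS being far lower than 32-bit results on most GPUs.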

The WX 8200 crushes the 24-bit integer IOPS results. NVIDIA uses math throughput at different precision levels to differentiate its product tiers.

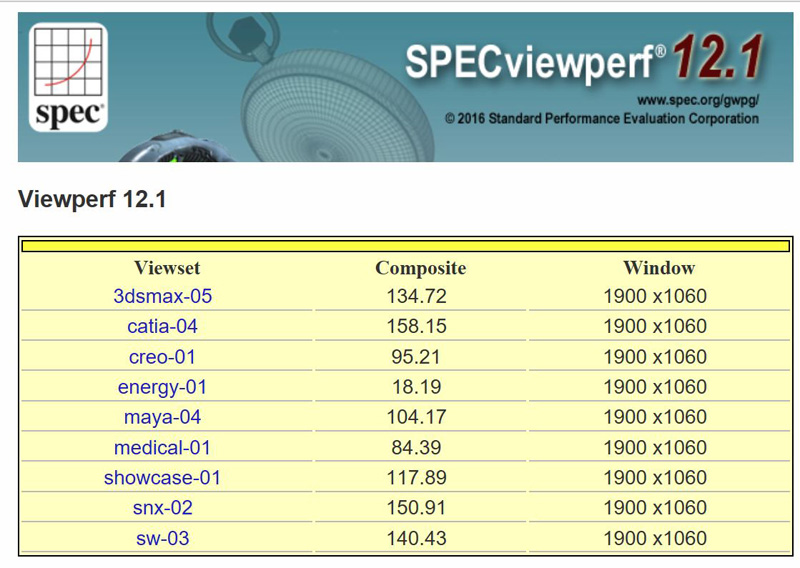

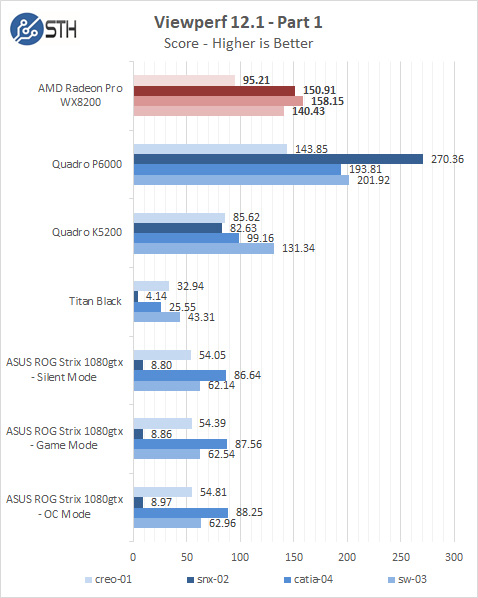

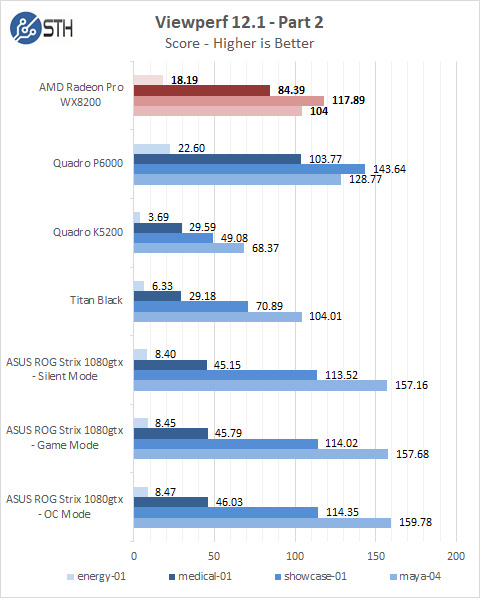

SPECviewperf 12.1

SPECviewperf 12 measures the 3D graphics performance of systems running under the OpenGL and DirectX application programming interfaces.

In the Part 1 tests, the WX 8200 comes in second place in some tests against much more expensive graphics cards, and it also gives a good showing in the Part 2 benchmarks.

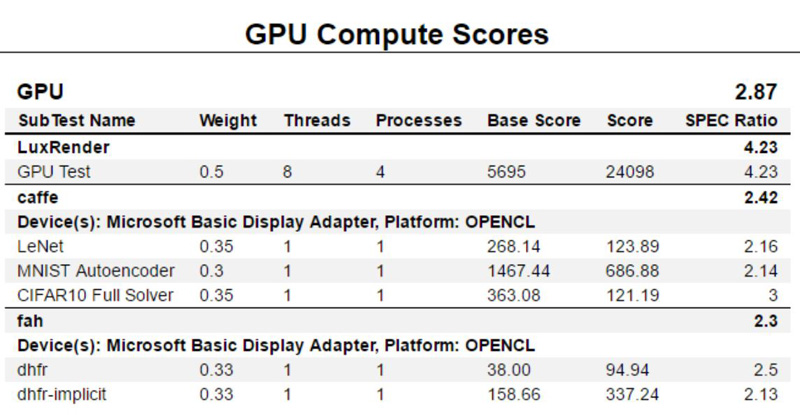

SPECworkstation 3

SPECworkstation 3 is the new workstation benchmark from SPEC. Since this is a new benchmark and we have not yet run many systems on it, we are posting the GPU compute scores as a reference.

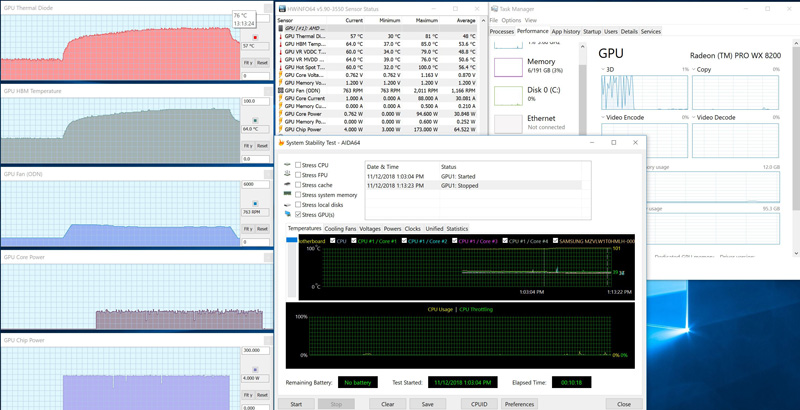

Temperature and Fan Tests

For our thermal testing, we used AIDA64 to stress the WX 8200 and HWiNFO to monitor temperatures and fan speed.

After the stress test has loaded the WX 8200 for a sustained period, the Vega 10 GPU reaches 81°C and the HBM memory reaches 85°C. The single blower fan spins up to 2,011 RPM to cool these components without making much noise.

Conclusion

For OpenCL applications, the WX 8200 is a beast and can outperform many higher-end GPUs. Most users will focus on applications such as Radeon ProRender, Blender, Maya, Adobe Premiere Pro, and other Vega-certified apps.

The price point of the WX 8200 is the exciting part: a quick scan of Amazon and other retailers shows MSRP and street pricing under $1,000 at the time of writing. Many compare the WX 8200 to the Quadro P5000, which performs relatively close in the benchmarks we have seen but at a much higher price, listed at $1,758.85. Again, application-specific requirements will dictate which GPU you choose. Overall, performance is outstanding and close to a P5000, at a substantially lower cost.
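As a quick check on "substantially less," the small Python helper below (`percent_savings` is our own hypothetical name) computes the price gap from the two figures quoted in the text. Because the WX 8200 price is a ceiling ("under $1,000"), the result is a lower bound on the savings, roughly 43%.

```python
def percent_savings(price: float, reference_price: float) -> float:
    """How much cheaper `price` is than `reference_price`, as a percentage."""
    if reference_price <= 0:
        raise ValueError("reference_price must be positive")
    return (reference_price - price) / reference_price * 100


# Prices from the review: WX 8200 under $1,000; Quadro P5000 listed at $1,758.85.
savings = percent_savings(1000.00, 1758.85)
print(f"{savings:.1f}%")  # 43.1%
```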

The WX 8200 runs relatively cool and generates little noise at full load, which makes it a good fit for small, compact cases in low-noise environments. You may have seen it in our recent BOXX APEXX T3 Flagship AMD Ryzen Threadripper Workstation Review as the GPU of choice for a powerful and compact system. While NVIDIA may hold the absolute performance crown with some of its Volta and Turing generation GPUs, AMD has a GPU that is extremely competitively priced given its feature set.

WX9100 at $1,350 and WX8200 at $1,000 are both pretty good value compared to their NVIDIA counterparts when you need driver support for pro apps and 10-bit color overlay in OpenGL.

Power consumption comparison?

When the WX9100 is running professional applications, power consumption ranges from ~140W for 2D drawing and 3D wireframes to almost 250W under OpenGL, and it jumps all the way to 265-275W for rendering.

What driver support for pro apps do you mean? They all support consumer cards anyway.

Is it possible to share the WX8200 BIOS with the GPU-Z BIOS database? Thank you!

@Luke SolidWorks, Creo, Siemens NX, Adobe with 10-bit color, etc.

I suppose if one needs driver support for pro apps and 10-bit color in OpenGL, a used Vega Frontier Edition at ~$500 is a much better deal. The only thing these cards seem to have that the FE doesn't is ECC memory?

I did some googling, and there seem to be more differences between the WX 9100 and the Vega FE. The former has six Mini DisplayPort outputs and might actually have better driver support. It should also have more pro-oriented features than the FE (most of which I couldn't even understand, lol, something about syncing virtual desktops, etc.). So for someone who can use some of those extras, it's probably worth going with a proper workstation card (WX 9100/8200). For amateurs, though, the Vega FE does look very attractive, considering how many have become available since mining profits dropped so much; they're popping up on sites like eBay every day.