AMD has a new DPU. The AMD Pensando Salina is a 400G-capable DPU designed to take on the NVIDIA BlueField-3 DPU for front-end networks. The DPU itself has 16 Arm Neoverse N1 cores, up to 128GB of onboard DDR5 memory, and the company’s P4 packet processing engine. STH found it at the AMD Advancing AI event alongside the AMD EPYC 9005 “Turin” launch, and we thought we would share.

AMD Pensando Salina 400 DPU

Here is the quick spec slide. We can see the 232 P4 MPUs, which are the heart of the card’s packet processing. Those, along with the 16 Arm Neoverse N1 cores, give the card a lot of flexibility and processing power. Something worth noting is that there are dual 400GbE connections, but the card only has a PCIe Gen5 x16 host interface. That is enough to drive a single 400GbE link from the host system, but not two simultaneously.
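To put rough numbers on why one PCIe Gen5 x16 link can feed one 400GbE port but not two, here is a back-of-the-envelope sketch. It assumes 32 GT/s per lane and 128b/130b line encoding, and it ignores TLP/DLLP protocol overhead, so real usable throughput will be somewhat lower than what it prints.

```python
# Rough per-direction bandwidth of a PCIe Gen5 x16 link versus 400GbE ports.
# Assumptions: 32 GT/s per lane, 128b/130b encoding; TLP/DLLP packet
# overhead is ignored, so real-world throughput is lower than this.

GEN5_GT_PER_LANE = 32.0          # gigatransfers per second per lane
LANES = 16
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line code (used since PCIe Gen3)


def pcie_gen5_gbps(lanes: int = LANES) -> float:
    """Approximate Gb/s in one direction, after line encoding only."""
    return GEN5_GT_PER_LANE * lanes * ENCODING_EFFICIENCY


def ports_supported(link_gbps: float, port_gbps: float = 400.0) -> int:
    """How many ports can run at full line rate over the host link at once."""
    return int(link_gbps // port_gbps)


if __name__ == "__main__":
    link = pcie_gen5_gbps()
    print(f"PCIe Gen5 x16: ~{link:.0f} Gb/s per direction")
    print(f"400GbE ports at full rate: {ports_supported(link)}")
    print(f"2x 400GbE demand: {2 * 400} Gb/s")
```

With these assumptions the link lands at roughly 504 Gb/s per direction: comfortably above one 400GbE port, well short of the 800 Gb/s two ports would need.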

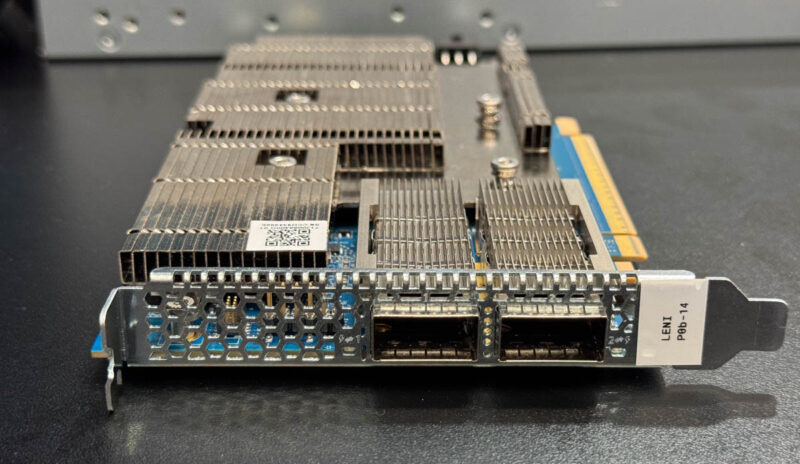

Here is the card. It is a full-height card with two optical cages and a lot of heatsink across it. Something quite notable is that there is no out-of-band (OOB) management NIC. Typically, on NVIDIA BlueField DPUs, and even the newer BlueField SuperNICs, we see an OOB management port alongside the main data ports. At the same time, when we had some hands-on time with an AMD Pensando DSC2-100G Elba DPU in a Secret Lab, we found that VMware ESXio was not using the OOB management port.

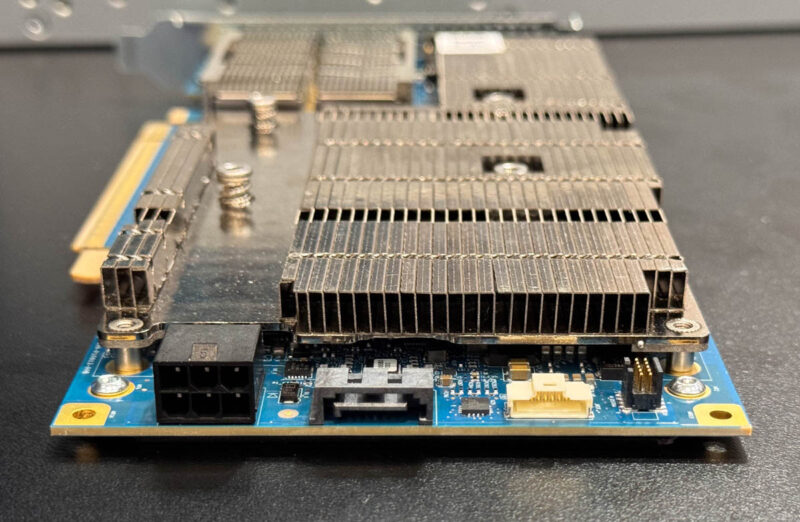

On the back, we can see a power input. That is fairly common since these DPUs tend to use a good amount of power. Then there is perhaps the coolest feature: a SATA port. Let us just say that in 2024 that is unexpected.

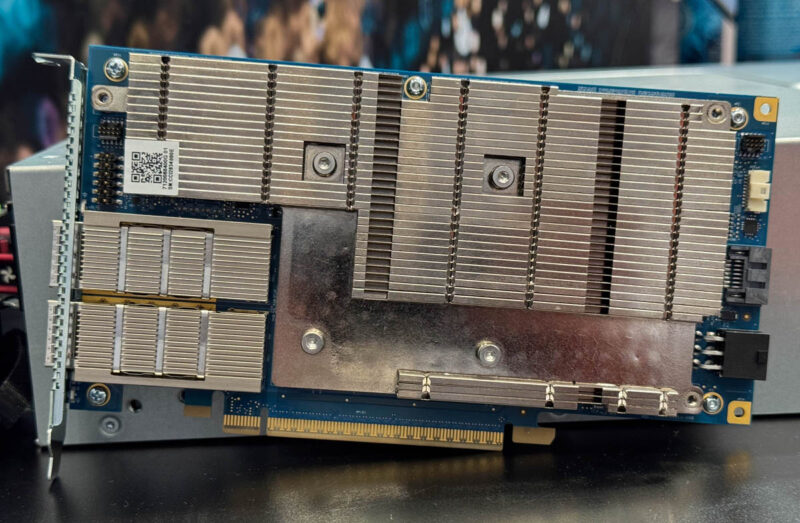

Here is the view of the card covered in a heatsink.

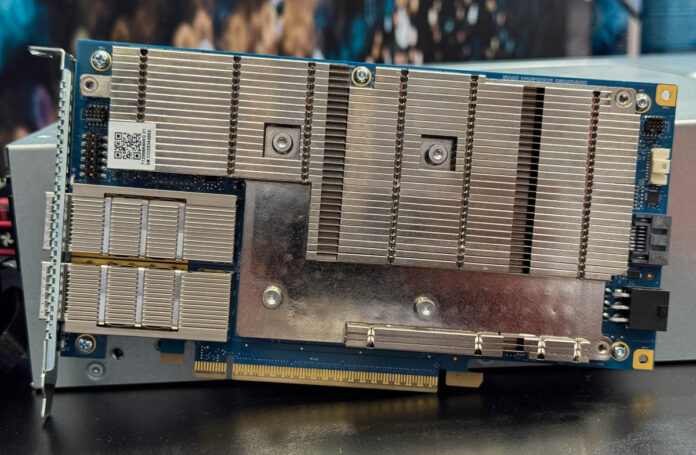

Here is the back of the card with a nice heatspreader.

AMD told us the card can handle a lot of traffic, such as 5 million connections per second, thanks to its programmable P4 engines.

Final Words

We shall see how this fares. The NVIDIA BlueField-3 DPU has been making cameos in STH pieces for several months now. Likewise, almost two years ago we tested the Marvell Octeon 10 DPU, which uses Arm Neoverse N2 cores. Hopefully, we can get some hands-on time with the new Salina-based cards, on both the DPU and NIC side, over the next few quarters.

Without OOB, how do you manage the DPU?

jasonlu, you map the DPU to a VM, and you manage it through that VM.

As for the SATA port: IIRC, I heard that it is also used for power on devices that don’t have PCIe 6-pin cables.

I think the time frame for discrete DPU products is coming to an end as high-speed networking moves on-socket as part of the chiplet approach. They will still exist in the sense that there will be some dedicated IP to supplement NIC functionality, but the idea of fully dedicated CPU cores for this will just migrate back to the main CPU complex once it is on package.

What is the purpose of putting two 400GbE links on this card when the PCIe Gen5 x16 interface can only handle one?

My guess is that the reason for the 2x 400GbE links is perhaps that the card is being qualified for, or already supports, PCIe Gen6 x16?
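As a rough sanity check on that guess, the sketch below compares raw per-direction x16 signaling rates with the 800 Gb/s that two 400GbE ports would need. It assumes 32 GT/s per lane for Gen5 and 64 GT/s for Gen6, and it deliberately ignores encoding, FLIT framing, and FEC overhead, so it only shows whether a generation is in the right ballpark, not exact usable throughput.

```python
# Compare raw per-direction PCIe x16 signaling rates with dual-400GbE demand.
# Assumption: raw GT/s per lane only; encoding, FLIT framing, and FEC overhead
# are ignored, so these figures are upper bounds rather than usable throughput.

RAW_GT_PER_LANE = {"Gen5": 32, "Gen6": 64}


def raw_x16_gbps(gen: str) -> int:
    """Raw Gb/s in one direction for an x16 link of the given generation."""
    return RAW_GT_PER_LANE[gen] * 16


def can_feed_both_ports(gen: str, ports: int = 2, port_gbps: int = 400) -> bool:
    """True if the raw link rate meets the combined line rate of all ports."""
    return raw_x16_gbps(gen) >= ports * port_gbps


for gen in RAW_GT_PER_LANE:
    print(gen, raw_x16_gbps(gen), "Gb/s raw;", "dual 400GbE OK:", can_feed_both_ports(gen))
```

Even on raw rates, Gen5 x16 (512 Gb/s) falls short of 800 Gb/s, while Gen6 x16 (1024 Gb/s) leaves headroom, which is why the guess is at least plausible on bandwidth grounds.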

As a DSC with 800Gb/s of port capacity but only about 400Gb/s of bus capacity, it is meant for applications where it is not transferring all the bits over the bus, unless it can always obtain better compression than expected.
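That argument can be expressed as a simple oversubscription ratio. This sketch assumes both ports run at full line rate and models the host link as a flat 400 Gb/s per direction (a simplification that ignores PCIe overhead); it computes how oversubscribed the host bus is and the largest share of port traffic that could be handed to the host. Everything else would have to be forwarded, filtered, or dropped on the card itself.

```python
# Oversubscription between front-panel ports and the host bus.
# Assumptions: all ports at full line rate; host bandwidth modeled as a
# flat per-direction figure, ignoring PCIe protocol overhead.


def oversubscription(port_gbps: float, ports: int, host_gbps: float) -> float:
    """Ratio of total port capacity to host-link capacity."""
    return (port_gbps * ports) / host_gbps


def max_host_fraction(port_gbps: float, ports: int, host_gbps: float) -> float:
    """Largest fraction of aggregate port traffic that can reach the host."""
    return min(1.0, host_gbps / (port_gbps * ports))


ratio = oversubscription(400, 2, 400)
share = max_host_fraction(400, 2, 400)
print(f"{ratio:.1f}:1 oversubscribed; at most {share:.0%} of traffic can go to the host")
```

Under these assumptions the card is 2:1 oversubscribed toward the host, so at most half of the combined port traffic could ever cross the bus.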

It is more likely that what comes in one port will mostly go out the other. You would probably be able to grab all the headers and do intelligent routing or firewall security, with the P4 programs updated over the PCIe Gen5 bus.
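A rough illustration of that bump-in-the-wire idea follows. It is a Python analogy of a P4-style match-action table, not real P4 and not any AMD Pensando SDK API; the header fields, table entries, and action names are all hypothetical. Packets arriving on one port are matched on parsed header fields and then forwarded out the other port, dropped, or punted to the host, so only the punted subset would need to cross the PCIe bus.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


# Hypothetical parsed header; a real P4 parser would extract many more fields.
@dataclass(frozen=True)
class Header:
    src_ip: str
    dst_ip: str
    dst_port: int
    ingress: int  # front-panel port the packet arrived on (0 or 1)


# Hypothetical exact-match table keyed on (dst_ip, dst_port) -> action name.
# In the commenter's scenario, entries like these would be pushed from the
# host over PCIe, while the packets themselves stay on the card.
FirewallTable = Dict[Tuple[str, int], str]


def apply_table(hdr: Header, table: FirewallTable) -> Tuple[str, int]:
    """Return (action, egress); egress -1 means no front-panel port is used."""
    action = table.get((hdr.dst_ip, hdr.dst_port), "forward")  # default action
    if action == "drop":
        return ("drop", -1)
    if action == "punt":
        return ("punt_to_host", -1)  # only this path crosses the host bus
    return ("forward", 1 - hdr.ingress)  # bump in the wire: port 0 <-> port 1


table: FirewallTable = {
    ("10.0.0.5", 23): "drop",   # e.g. block telnet to one server
    ("10.0.0.5", 179): "punt",  # e.g. hand BGP to host software
}

print(apply_table(Header("192.0.2.1", "10.0.0.5", 443, ingress=0), table))  # ('forward', 1)
print(apply_table(Header("192.0.2.1", "10.0.0.5", 23, ingress=0), table))   # ('drop', -1)
```

The default action here is "forward", which models the commenter's assumption that most traffic simply passes from one port to the other; a stricter firewall would default to "drop" instead.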

It could implement an Ultra Ethernet router, supporting UET (Ultra Ethernet Transport) over Ethernet.

Etc.

It could be that the second 400GbE link is for redundancy.
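If the second port were used for redundancy, one common pattern is active-backup: traffic uses the primary link while it is up and fails over to the standby link otherwise. The sketch below is a generic illustration with hypothetical port names and a hypothetical link-state dictionary; it is not a description of how Salina actually uses its ports.

```python
from typing import Dict, Optional


def select_active(link_up: Dict[str, bool], primary: str, backup: str) -> Optional[str]:
    """Active-backup selection: prefer the primary, fall back to the backup.

    Returns None when neither link is up.
    """
    if link_up.get(primary, False):
        return primary
    if link_up.get(backup, False):
        return backup
    return None


# Hypothetical port names; link state would come from the NIC in practice.
print(select_active({"port0": True, "port1": True}, "port0", "port1"))   # port0
print(select_active({"port0": False, "port1": True}, "port0", "port1"))  # port1
```

In an active-backup design only one 400GbE link carries traffic at a time, so it would fit within what a single PCIe Gen5 x16 host link can feed.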

#Iwork4Dell

I’d like to see network security products with this DPU: Suricata, Snort, WAFs, etc.

Several of those internal headers/ports are suitable for use as a 100M or 1G management NIC.

I don’t know why they would do it that way instead of an externally connected one, but it would still work.

To those saying the SATA port is for more power…no?

That is a SATA data port. It isn’t rated for much power, and it would be really weird to shoehorn power into that kind of connection instead of just using a header (which would be cheaper).

Maybe it is for a SATA drive for external logging or some other file storage that shouldn’t be churning through the internal flash?

As for the two external ports being faster than the x16 internal connection…sure?

You don’t use one of these DPUs purely as a NIC. There are much cheaper options for that.

You do it because you want the DPU to pre-process and/or mirror/route traffic.