Today we are in San Francisco, California at the AMD Next Horizon event, and we are going to update our coverage live. We expect to hear more about AMD EPYC “Rome” generation CPUs and the next generation AMD Radeon Instinct GPUs. We had a hint that both will be 7nm products that we expect to launch in the coming months.

Opening Comments From Dr. Lisa Su

Today’s focus is going to be on the data center and how AMD will shape the data center.

AMD is pushing all-new design approaches to CPU and GPU architectures, not competing head-on with legacy Intel monolithic designs. Decisions made years ago were bets on where the company saw the market going. Today’s design choices are being made to impact the company’s roadmap for years in the future.

AMD EPYC has been in the market for about 15 months. The company has said that AMD EPYC is doing well in the market. We hope to hear about the next generation today. AMD confirmed we are getting a Rome preview today.

Radeon Instinct has been in the market since 2017, but the company knows that it needs to push software to compete. AMD believes that its key value going forward will be in open source software.

Announcing AMD EPYC on AWS

Today AWS and AMD are announcing that there are immediately available AMD EPYC instances in AWS. This news is huge.

R5a, M5a, and T3a instances are available today on Amazon AWS EC2. Changing from R5 to R5a or from M5 to M5a gets you instances that cost 10% less than their Intel counterparts. R5 instances are for big memory workloads. M5 is for mainstream applications such as databases and enterprise applications. T3 instances are for blogs and other lower-end applications.
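To put the 10% figure in perspective, here is a minimal sketch of the arithmetic. Only the 10% discount comes from the announcement. The hourly price, fleet size, and hours per month below are hypothetical placeholders, not AWS list prices, and the function names are ours.

```python
# Rough savings estimate for moving from an Intel-based instance (e.g. R5)
# to its AMD EPYC-based counterpart (e.g. R5a).
# ASSUMPTION: the hourly price, instance count, and hours per month are
# made-up placeholders for illustration, not real AWS pricing.

AMD_DISCOUNT = 0.10  # "10% less" per the announcement


def epyc_hourly_price(intel_hourly_price: float) -> float:
    """Hourly price of the 'a' instance given its Intel counterpart's price."""
    return intel_hourly_price * (1.0 - AMD_DISCOUNT)


def monthly_savings(intel_hourly_price: float, instances: int,
                    hours: float = 730.0) -> float:
    """Monthly savings for a fleet of always-on instances."""
    delta = intel_hourly_price - epyc_hourly_price(intel_hourly_price)
    return delta * instances * hours


if __name__ == "__main__":
    hypothetical_price = 1.00  # USD per hour, placeholder only
    print(f"EPYC price: ${epyc_hourly_price(hypothetical_price):.2f}/hour")
    print(f"Fleet savings: ${monthly_savings(hypothetical_price, 100):.2f}/month")
```

The savings scale linearly with fleet size and runtime, which is why a one-letter instance type change matters more to large always-on deployments than to occasional users.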

Again, this is a big deal. Lower cost instances from AWS based on AMD EPYC and a simple change from R5 to R5a.

Mark Papermaster on the new 7nm AMD Technology

Mark is now talking about Zen 2. More importantly, the company is showing that it can deliver on its roadmap. Zen 2 7nm is sampling, and Zen 3 is on track via 7nm+ in 2020.

There is a big discussion on why the roadmap is important and how AMD is committed to delivering on it.

AMD believes that it made a big bet with 7nm. The company made a bet that it could produce chips on 7nm. It saw 7nm as a long and sustaining node in the industry.

The company saw the jump to 7nm as a difficult one, but felt that if it could execute, it could leapfrog on performance and power consumption. AMD is saying that its TSMC 7nm product is doing very well.

The company was aiming to achieve parity with Intel using 7nm versus Intel 10nm when it made the original bet. Instead, it is going to be first to market on the new node.

AMD is not just aiming for IPC improvements or lower power. AMD is aiming for 2x performance per compute node.

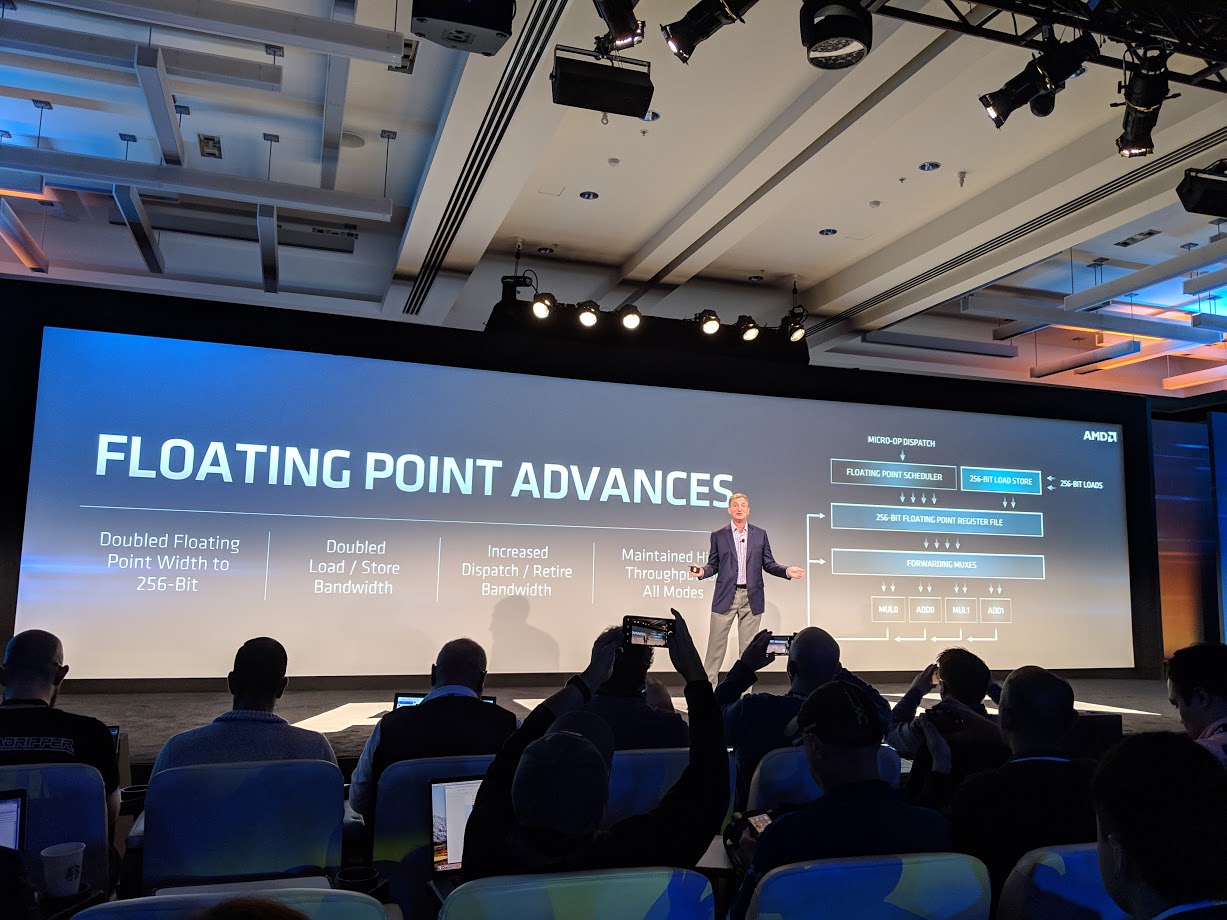

Here are the new front end advances helping to deliver twice the throughput.

Zen 2 is doubling floating point performance through width (256-bit), doubling load/store bandwidth, adding faster dispatch and retire bandwidth, and maintaining high throughput for all processing modes.
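The width point is easy to sanity check. This is a minimal sketch, assuming the first-generation Zen floating point datapath is 128 bits wide and that clocks and the number of floating point pipes stay the same. The function name is ours for illustration, and this is not a performance model.

```python
# How many elements fit through the floating point datapath per operation.
# ASSUMPTION: Zen 1 uses a 128-bit wide FP datapath; the 256-bit figure for
# Zen 2 is from AMD's slide. Clock speed and pipe count are held constant.

ZEN1_FP_BITS = 128  # assumption about the prior generation
ZEN2_FP_BITS = 256  # from the presentation


def lanes_per_op(datapath_bits: int, element_bits: int) -> int:
    """Number of elements processed by one full-width vector operation."""
    return datapath_bits // element_bits


if __name__ == "__main__":
    for name, element_bits in (("FP64", 64), ("FP32", 32)):
        old = lanes_per_op(ZEN1_FP_BITS, element_bits)
        new = lanes_per_op(ZEN2_FP_BITS, element_bits)
        print(f"{name}: {old} -> {new} lanes per op ({new / old:.0f}x)")
```

Under these assumptions, both single and double precision see twice the elements per operation, which is where a "doubling through width" claim would come from.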

New security features are coming with Zen 2, including hardware enhanced Spectre mitigations.

We are now getting into interconnects. You can read about the current AMD EPYC interconnect versus Intel in: AMD EPYC Infinity Fabric v. Intel Broadwell-EP QPI Architecture Explained.

AMD is using a 14nm I/O die and then optimizing on CPU performance by using 7nm CPU chiplets. This is a “revolutionary” approach. As a note here, Intel, Xilinx and others have multi-chip package designs using different process nodes.

AMD is delivering Zen 2. Zen 3 is on track and Zen 4 is nearing completion. AMD wants to show it is delivering on its roadmap.

AMD Radeon Technologies Group David Wang Presentation

AMD is making a push into the data center with AMD Radeon. This is where we expect AMD will start a renewed push against NVIDIA. AMD 7nm Vega on track to ship “later this year.”

AMD is talking about double precision being important in the data center. This is an area where NVIDIA has been strong.

AMD Radeon Instinct MI60 is the world’s first 7nm GPU for the data center.

New enhanced AMD Vega architecture. The new architecture supports FP64 down to 4-bit Integer operations for flexible compute.

32GB of memory and 1TB/s of memory bandwidth with ECC.

AMD EPYC Rome to MI60 PCIe Gen4!

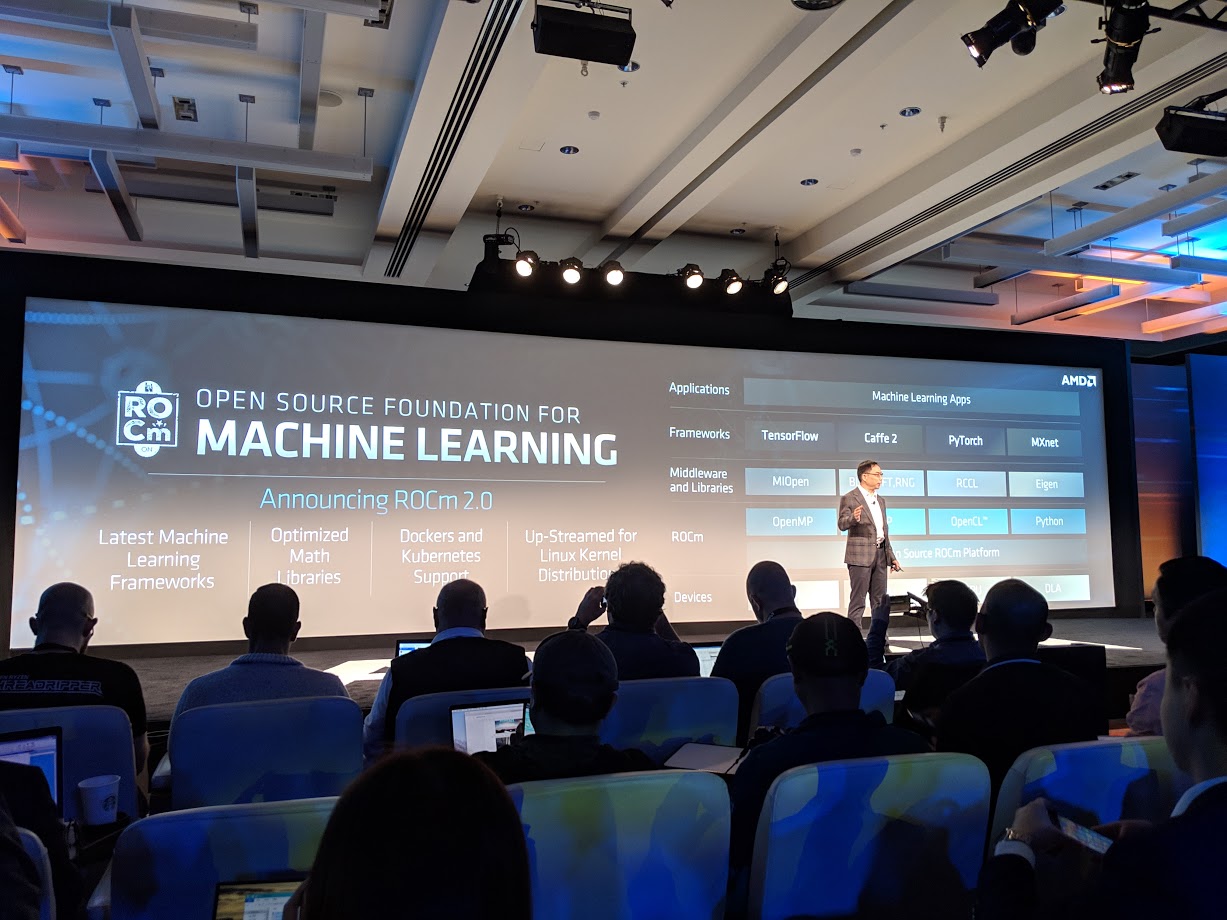

On the software side, AMD ROCm 2.0 is being announced and upstreamed in Linux. AMD also says it is embracing containers with ROCm 2.0 (finally!!!!)

AMD is claiming 8.8x the double precision performance for the MI60 over the previous generation MI25, and 2.8x on ResNet-50.

AMD is claiming similar or better single and double precision performance compared to the NVIDIA Tesla V100 PCIe.

It is great to see AMD getting competitive with NVIDIA here. At the same time, the NVIDIA Tesla V100 has been out for a long time. Running close to a 2017 era GPU that has enormous adoption with a GPU launching in late 2018 is good, but we need to see pricing information. If it is on price parity with a Tesla V100, AMD will still have work to do. Also, using the PCIe version means that the company is not using the DGX-1 SXM2 modules or the DGX-2 modules which have additional thermal headroom. AMD is comparing against the “low end” NVIDIA Tesla V100. You can read more about the NVIDIA DGX-1 class SXM2 modules in our How to Install NVIDIA Tesla SXM2 GPUs in DeepLearning12 article.

Here is the roadmap for the AMD Radeon Instinct GPU.

This section is done. We expect AMD EPYC 2 after the break!

AMD EPYC Rome with Forrest Norrod

We are going to get more details on the AMD EPYC Rome 7nm Zen 2 product today. Rome is sampling with a roadmap to Zen 3 thereafter. We already have a preview.

As we get ready for the next part of the session to start we know a few details about Rome:

- Several x86 compute chiplets on 7nm that are going to see big performance improvements over Zen 1 “Naples” EPYC

- There is a 14nm I/O chip with fabric

- DDR4, Infinity Fabric, PCIe and other I/O all are on the I/O chip

- PCIe Gen4 support

- Ability to connect GPUs and do inter-GPU communication over the I/O chip and Infinity Fabric protocol so that one does not need PCIe switches or NVLink switches for chips on the same CPU. We covered the current challenges in: How Intel Xeon Changes Impacted Single Root Deep Learning Servers

- 64 cores confirmed. See: AMD EPYC Rome Details Trickle Out 64 Cores 128 Threads Per Socket

Forrest Norrod is going into a recap of how AMD EPYC gen 1 has gone since its inception.

Here is an interesting slide. Today, the top seven server customers account for about 40% of servers.

Forrest is talking about how the company is doing with AMD EPYC in the market today. This is important because the company needs to show the adoption of AMD EPYC. Cloud service providers are offering differentiated instances and services. Forrest is talking about how Microsoft and AWS have different types of uses for AMD EPYC. Packet, Hivelocity, and Baidu are using single socket platforms for hosting and services. Tencent is using AMD EPYC both for cloud and internal operations.

A VMware video is rolling, saying that the company will accommodate AMD EPYC’s high core count, memory, and single socket benefits within its existing licensing structure.

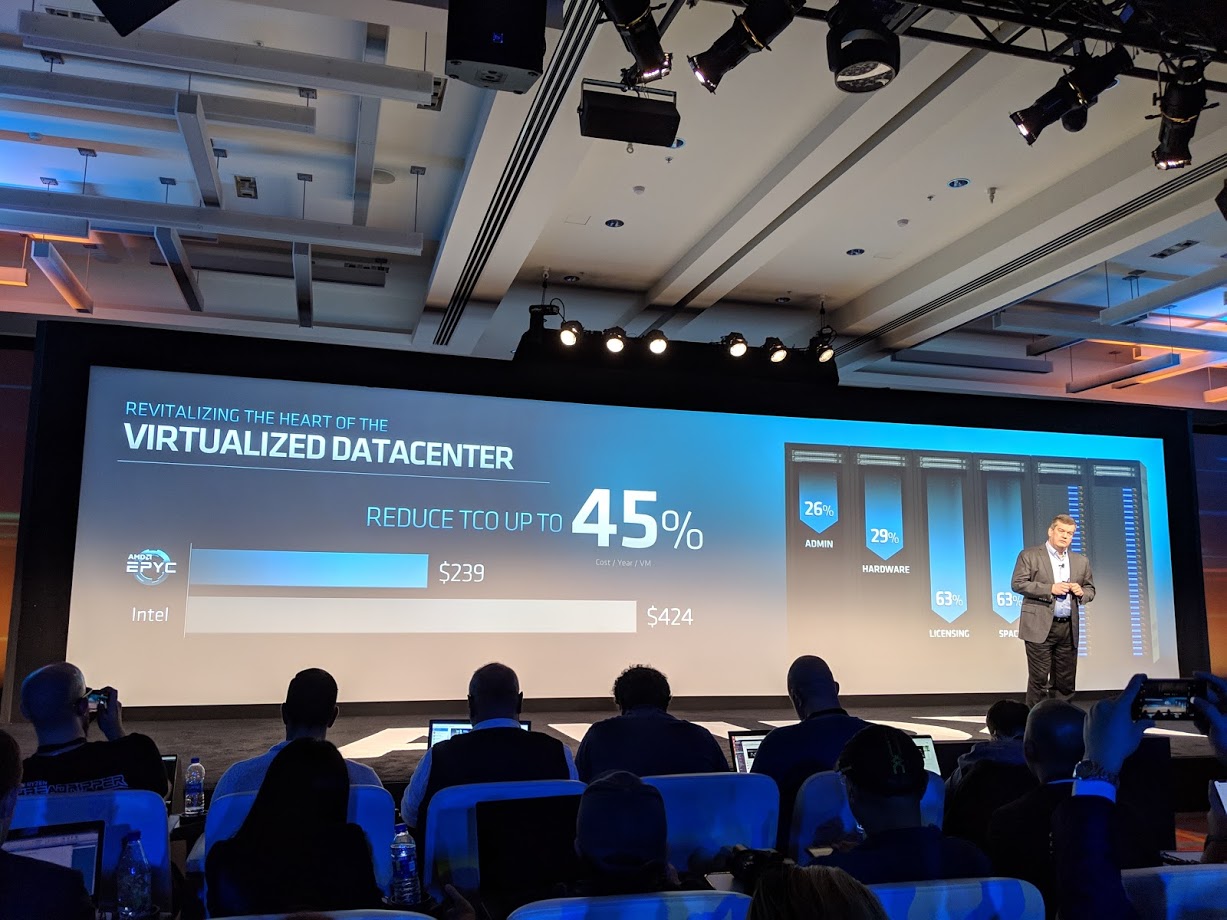

AMD says that most buyers will just buy the cost equivalent next generation. The example is replacing dual Intel Xeon E5-2660 V3 servers with dual Intel Xeon Gold 5118 servers.

Forrest says that you can instead use a single AMD EPYC 7551P. Between administrative, hardware, licensing, and space costs, AMD is claiming one can get a 45% lower per-VM TCO.

Next up is high-performance computing, something we will see more of at SC18 next week. The company is talking about the Cray CS500 supercomputer platform. Next, they are talking about the Shasta supercomputer with the Slingshot interconnect.

AMD EPYC 2 Rome details are coming next. AMD “Naples” is just the beginning.

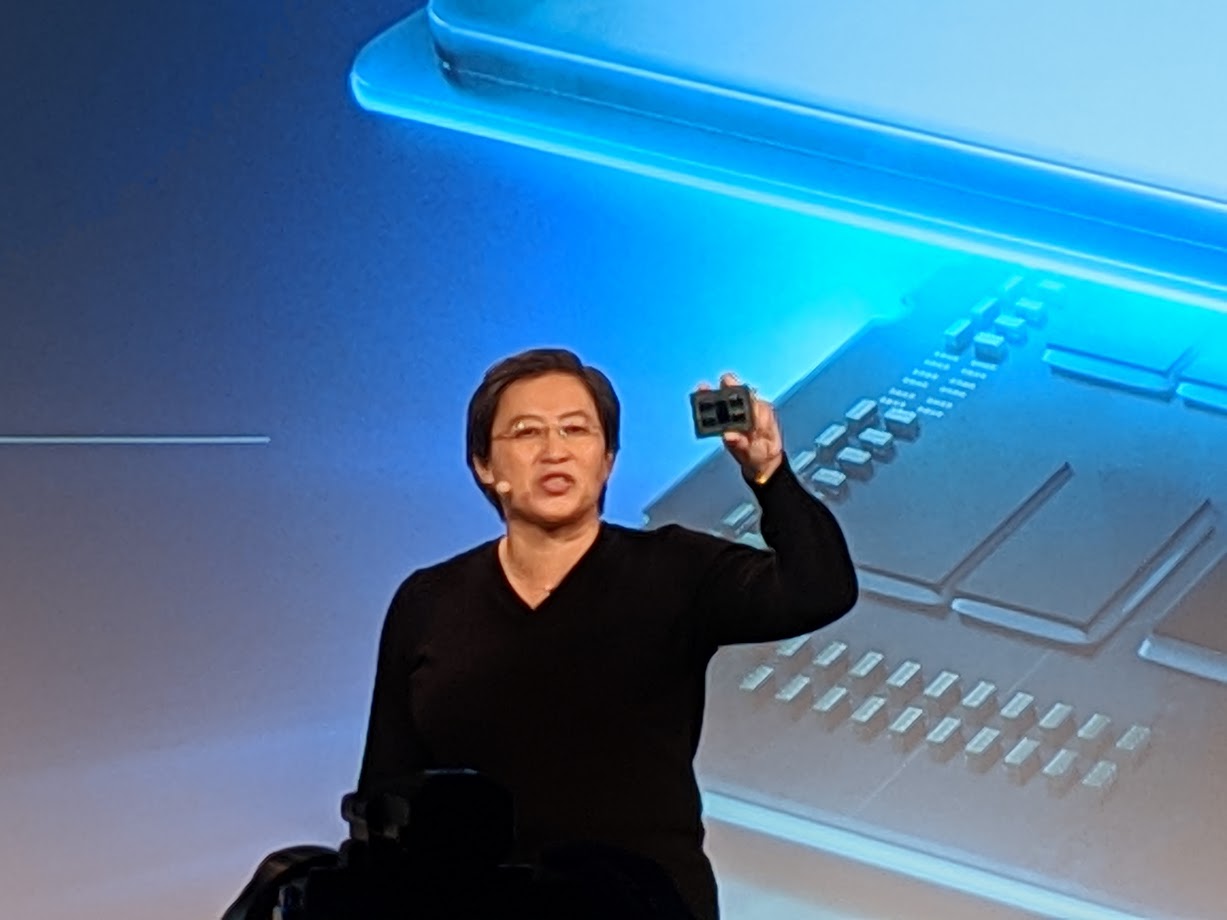

Dr. Lisa Su is Back on Stage for AMD EPYC 2 “Rome”

Here we go on the AMD EPYC 2 “Rome” generation.

Up to 64 Zen 2 cores per socket. Faster IPC. Higher I/O and memory bandwidth. This is double the Naples generation.

AMD EPYC will be the first PCIe 4.0 x86 CPU.

Existing Naples platforms will run Rome (with PCIe 3.0) and Milan after that.

Rome will deliver 2x the per socket performance and 4x the floating point performance.

There are 8 core dies on 7nm plus a 14nm I/O die. Each of the 8 core dies has 8 cores.
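The numbers above fit together with simple arithmetic. Here is a back-of-the-envelope sketch, assuming 2 threads per core, 32 cores per socket for Naples, and a doubling of floating point width from 128-bit to 256-bit. Reading the 4x floating point claim as twice the cores times twice the width is our interpretation, not a breakdown AMD gave on stage.

```python
# Back-of-the-envelope check of the Rome core count and scaling claims.
# ASSUMPTIONS: 2 threads per core (SMT), Naples at 32 cores per socket,
# and a 128-bit -> 256-bit floating point width change. Equal clocks are
# assumed, so this is an idealized upper-level view, not a benchmark.

CORE_DIES = 8          # 7nm core dies per package (from the presentation)
CORES_PER_DIE = 8      # cores on each core die (from the presentation)
THREADS_PER_CORE = 2   # assumption: SMT as on current EPYC
NAPLES_CORES = 32      # assumption: top-end first-generation EPYC

rome_cores = CORE_DIES * CORES_PER_DIE
rome_threads = rome_cores * THREADS_PER_CORE

core_scaling = rome_cores / NAPLES_CORES
fp_width_scaling = 256 / 128
fp_scaling = core_scaling * fp_width_scaling

if __name__ == "__main__":
    print(f"Rome: {rome_cores} cores, {rome_threads} threads per socket")
    print(f"Core count scaling vs Naples: {core_scaling:.0f}x")
    print(f"Idealized floating point scaling: {fp_scaling:.0f}x")
```

Real workloads will land below these idealized figures whenever memory bandwidth, clocks, or power limits become the bottleneck.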

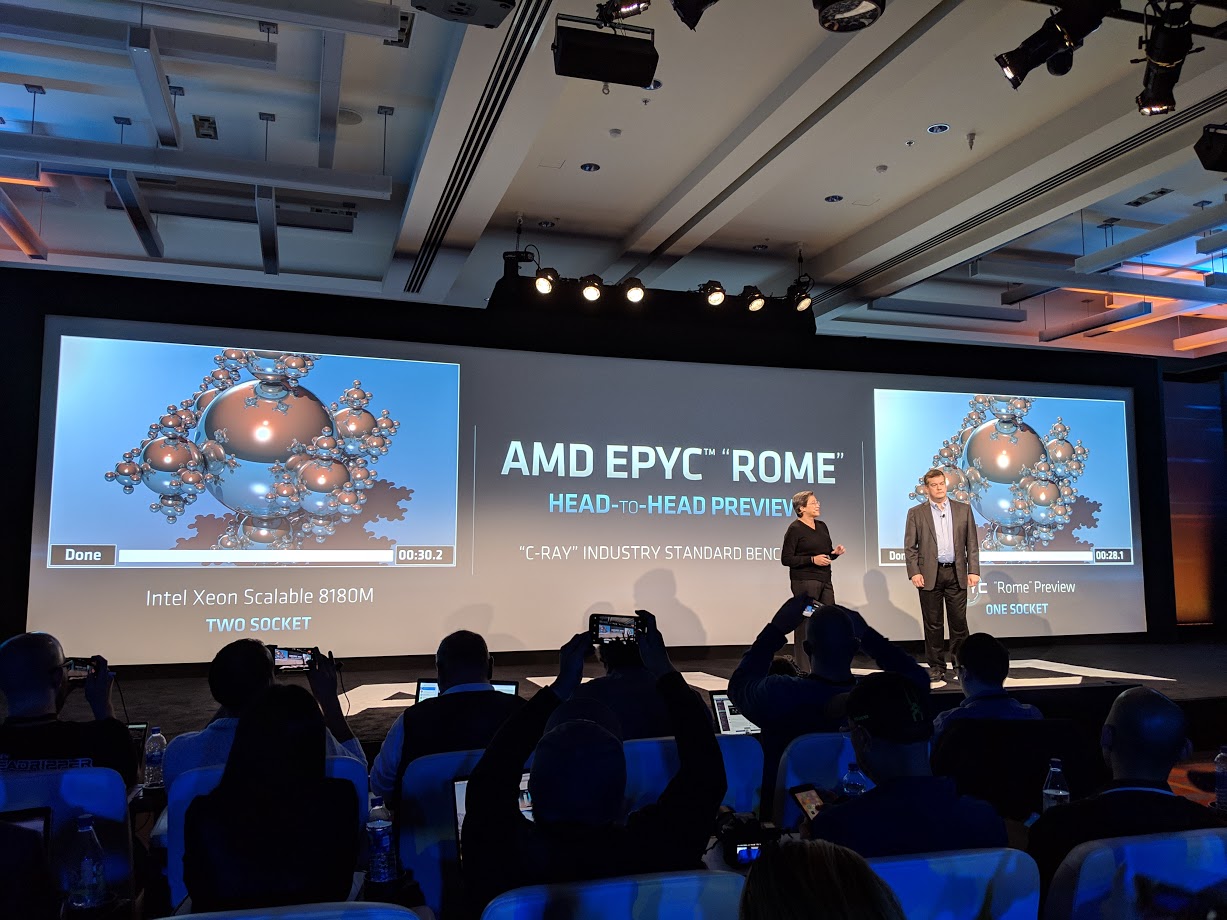

Rome v. Intel head to head with best in class Skylake. One prototype AMD Rome v. dual Intel Xeon 8180M CPUs.

If you read our AMD EPYC or Intel Xeon benchmarks, we often use c-ray, and have been doing so for years. C-ray is an extremely favorable benchmark for AMD’s Zen architectures over Intel Xeon architectures. Please keep that in mind. Still, this is an impressive result!

Wrapping Up

We will be heading to breakout sessions soon and will summarize the live notes above later.

Thanks for the comprehensive coverage.

Why does C-ray favor Zen architecture over Xeon?

“Rome will deliver 2x the per socket performance”

Deliver 1x the per core performance?

@David

At the same TDP per socket.

@Misha

With 7nm, 0.5x the power and 2x the density (core count), so 1x the power per package.

2x the performance per watt (from 7nm), but 1x the IPC performance?

The NorthBridge functionality has been migrated to the CPU over the years, now AMD is taking all that out of the CPU again. Interesting.