AMD Instinct MI300X and MI300A Market Impact

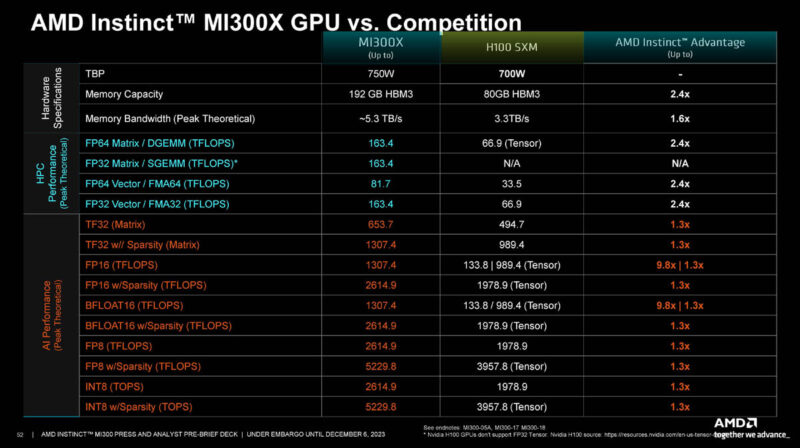

Today, the AI market is really NVIDIA, specifically the NVIDIA H100, and everyone else. NVIDIA is also pushing parts like the NVIDIA L40S for inference, but the H100 is the hot item. AMD built a giant GPU using chiplets and advanced packaging, and the result can do more than go toe-to-toe with NVIDIA: it can actually win on performance. Again, the 750W versus 700W comparison does not take NVSwitch power into account, so these are effectively very close numbers.

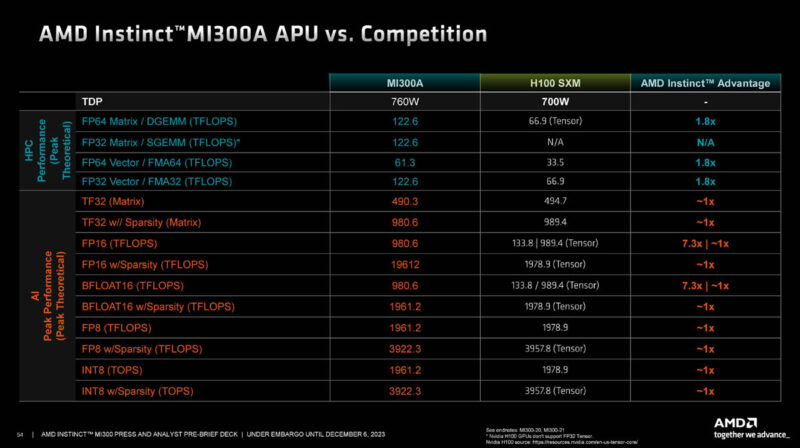

The MI300A is something new. Work with El Capitan will help the HPC software stack mature, and perhaps the MI300A will be big. On the other hand, we sense that the MI300X is going to be a much larger opportunity for AMD over the next 12-24 months.

Traditionally, AMD’s Achilles heel has been software. It sounds like AMD has come a long way, and it is starting to win at hyperscalers like Meta and Microsoft. From folks at the event, it sounds like NVIDIA is still better on the software side, but AMD now has a serviceable solution.

To us, the big question is how AMD’s performance scales and what kind of gains it can achieve over time on its hardware. Scaling to an 8x GPU node is good. The question is how it scales to 10,000+ GPUs.

One of the non-MI300 announcements is that AMD is sharing Infinity Fabric with other ecosystem partners. For example, Broadcom was on stage saying that next-gen Broadcom switches will support Infinity Fabric and XGMI as an alternative to NVLink. Still, that is in the future. Today, AMD’s system-to-cluster interconnect is Ethernet (and InfiniBand).

To us, AMD can probably deliver these at a high margin while also undercutting NVIDIA pricing. The difference between what AMD has and what many upstart competitors have is that it is not half the performance at less than half the price. Instead, AMD offers equal or better performance, potentially at a lower price. The bigger question is how many MI300Xs AMD can ship, as the H100 is still on allocation and folks have been lining up for the NVIDIA H200.

Final Words

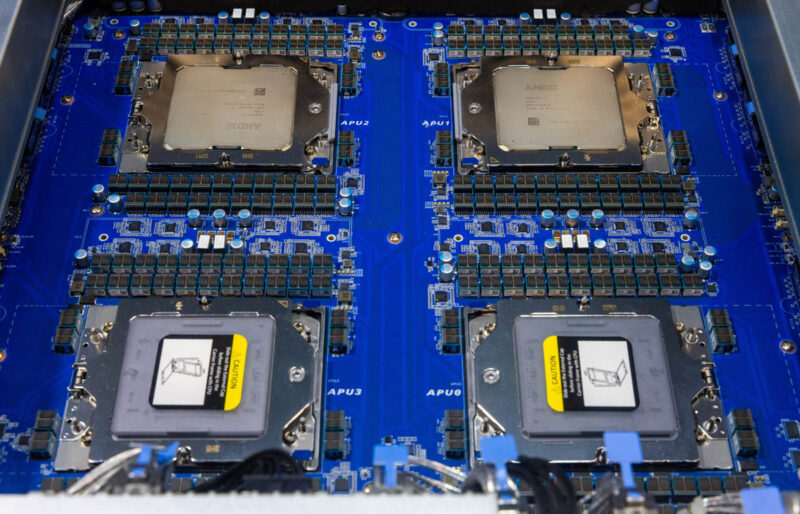

Now the big question is around availability. We have already seen these systems, such as this one from Gigabyte, so what remains is how long it will take until the MI300X gets to market. The other real question is whether the MI300A will be adopted outside of HPC applications.

Luckily, there is a lot of time to find out the answers to these questions. For now, at least, it looks like AMD brought its tech to the table and has declared its intent to take NVIDIA head-on with more advanced chip packaging and bigger chips. Unlike many AI startups that have failed, AMD can leverage its other lines for chiplet technology and has the scale to win customers. Now we get to see. Hopefully, we get hands-on with the chips soon.

STH testing when?

Great article as always Patrick and Team STH

It looks like a couple of MI300A systems are available: https://www.gigabyte.com/Enterprise/GPU-Server/G383-R80-rev-AAM1 and https://www.amax.com/ai-optimized-solutions/acelemax-dgs-214a/

Couldn’t find prices but if it’s supposed to compete with GH then it’ll be around U$30K.

It’s a good question which of the MI300A or MI300X is going to be more popular. As a GPU could the MI300X be paired with Intel or even IBM Power CPUs?

I personally find the APU more interesting. Not because the design is new so much as the fact that real problems are often solved using a mixture of algorithms some of which work well on GPUs and others better suited to CPUs.

Do you know if the MI300A supports CXL memory?

I hope to see some uniprocessor MI300A systems hit the market. As of today only quad and octo.

Maybe a sort of cube form factor, PSU on the bottom, then mobo and gigantic cooler on the top. A SOC compute monster.

In the spirit of all the small Ryzen 7940HS tiny desktops. Just, you know, more.