AMD EPYC 9004 Genoa 12-channel DDR5-4800

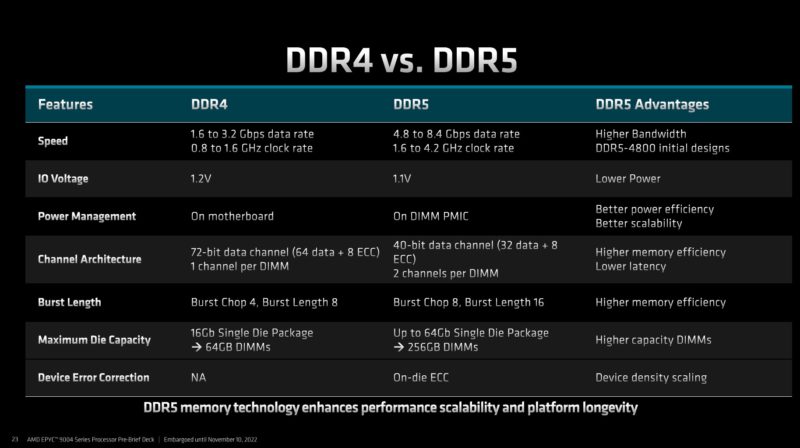

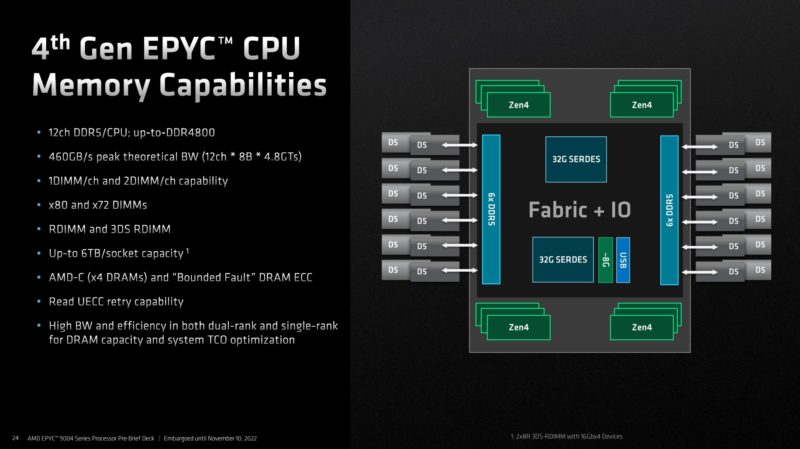

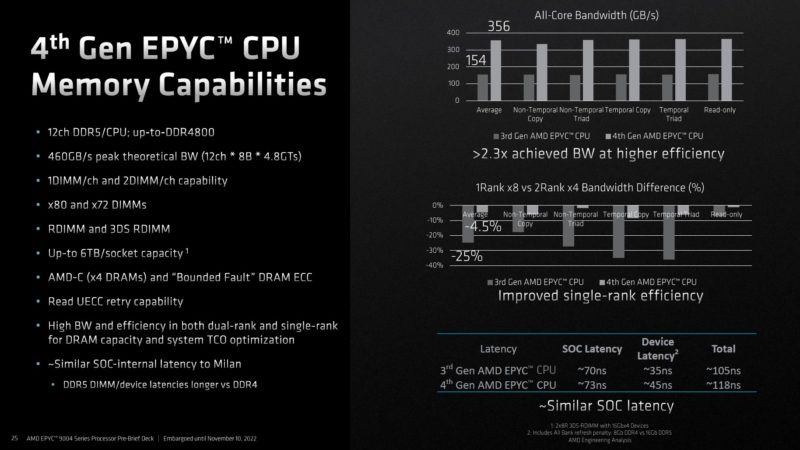

On the memory side, this is the DDR5 generation, which is extremely important for understanding Genoa. A 64-core Genoa chip, for example, gets 12 channels of memory, a 50% increase over Milan's eight. Beyond that, AMD is also going from DDR4-3200 to DDR5-4800 speeds, a 50% jump in per-channel bandwidth. Intel’s Sapphire Rapids will be 8x DDR5-4800, as we have seen, so AMD has 50% more memory channels. CXL, of course, is the other side of memory bandwidth in this generation, so let us get to that later.
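To put numbers on the channel and speed changes, here is a minimal sketch of the theoretical peak bandwidth per socket. It assumes a 64-bit (8-byte) data path per channel and ignores ECC bits and real-world efficiency, so these are upper bounds rather than measured figures. The function name is only for illustration.

```python
# Theoretical peak memory bandwidth per socket.
# Assumption: 8 bytes of data per transfer per channel (ECC bits excluded).
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Return the theoretical peak in GB/s (decimal) for one socket."""
    return channels * mega_transfers_per_s * BYTES_PER_TRANSFER / 1000


milan = peak_bandwidth_gbs(8, 3200)            # 8x DDR4-3200  -> 204.8 GB/s
genoa = peak_bandwidth_gbs(12, 4800)           # 12x DDR5-4800 -> 460.8 GB/s
sapphire_rapids = peak_bandwidth_gbs(8, 4800)  # 8x DDR5-4800  -> 307.2 GB/s

print(f"Milan:           {milan:.1f} GB/s")
print(f"Genoa:           {genoa:.1f} GB/s ({genoa / milan:.2f}x Milan)")
print(f"Sapphire Rapids: {sapphire_rapids:.1f} GB/s")
```

The 2.25x socket-level gain is simply the two 50% increases multiplied together (1.5 x 1.5).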

While we get more performance, there is another side to this. DDR5 moves more components, such as the PMIC for power management, onto the DIMM itself. That, plus moving to a new production generation, means that DDR5 prices are much higher; we have been paying around 50% more. For Genoa, that means each DIMM costs 50%+ more than the DDR4 that Milan uses, and there are 50% more channels to fill. That has led to some interesting things like non-binary memory to reduce costs and match AMD’s 48 and 96-core offerings on a GB/core basis.
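As a rough sketch of that cost and capacity math: the 1.5x price factor below is the lower bound of the "50%+" figure above, and the DIMM sizes (including the non-binary 24GB, 48GB, and 96GB modules) are illustrative assumptions rather than a list of validated configurations.

```python
# Relative cost to fill every channel on Genoa vs Milan, at 1 DIMM per channel.
DIMM_PRICE_FACTOR = 1.5   # assumption: lower bound of "50%+ more" per DIMM
CHANNEL_FACTOR = 12 / 8   # 12 channels on Genoa vs 8 on Milan

relative_cost = DIMM_PRICE_FACTOR * CHANNEL_FACTOR  # 2.25x


def gb_per_core(dimm_gb: int, cores: int, dimms: int = 12) -> float:
    """Memory capacity per core with every channel populated."""
    return dimm_gb * dimms / cores


print(f"Relative cost to populate all channels: {relative_cost:.2f}x")
for dimm_gb in (16, 24, 32, 48, 64, 96):  # illustrative DIMM sizes
    print(
        f"{dimm_gb:>2} GB DIMMs: "
        f"{gb_per_core(dimm_gb, 48):5.1f} GB/core on 48 cores, "
        f"{gb_per_core(dimm_gb, 96):5.1f} GB/core on 96 cores"
    )
```

The non-binary sizes fill the gaps between the binary steps, for example 3 GB/core on a 96-core part with 24GB DIMMs, instead of forcing a jump from 2 GB/core to 4 GB/core.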

AMD is not supporting 2DPC on its dual-socket platforms at launch, nor is it supporting features like LRDIMMs. Here is a look at what AMD is supporting with Genoa.

All of this leads to >2x the theoretical memory bandwidth per socket, and therefore per core when comparing parts with the same core count. Of course, a lot of the 12 memory channels are to keep the same ratio on top-end parts. AMD had one memory channel per 8 cores on the previous generations of Rome and Milan 64-core parts. It now has the same ratio on the 50% larger 96-core parts.
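The ratio argument can be checked with a few lines of arithmetic. This is a sketch using theoretical peak numbers only (8 bytes per transfer per channel assumed), not measured bandwidth.

```python
# Cores per channel and theoretical per-core bandwidth for top-end parts.
BYTES_PER_TRANSFER = 8  # assumption: 64-bit data path per channel


def per_core_gbs(channels: int, mega_transfers_per_s: int, cores: int) -> float:
    """Theoretical peak GB/s available per core with all cores active."""
    return channels * mega_transfers_per_s * BYTES_PER_TRANSFER / 1000 / cores


parts = {
    "Milan 64-core (8x DDR4-3200)": (8, 3200, 64),
    "Genoa 64-core (12x DDR5-4800)": (12, 4800, 64),
    "Genoa 96-core (12x DDR5-4800)": (12, 4800, 96),
}

baseline = per_core_gbs(*parts["Milan 64-core (8x DDR4-3200)"])
for name, (channels, speed, cores) in parts.items():
    bandwidth = per_core_gbs(channels, speed, cores)
    print(
        f"{name}: {cores / channels:.1f} cores/channel, "
        f"{bandwidth:.1f} GB/s per core ({bandwidth / baseline:.2f}x Milan)"
    )
```

At 64 cores the per-core gain is 2.25x. At 96 cores the cores-per-channel ratio is back to 8, so the per-core gain comes only from the faster DDR5-4800 channels and works out to 1.5x.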

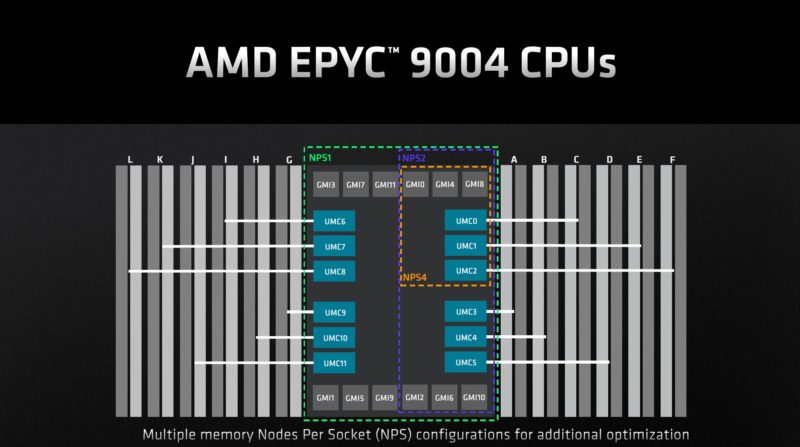

With the bigger chip, AMD has modes to partition chips into smaller segments of up to three dies and three memory channels per partition (times four for the entire processor). Intel just disclosed its SNC4 and UNC modes for Intel Xeon Max this week as well. These are of a similar feature class, although not exactly the same.

All of this discussion of memory bandwidth is not complete without CXL.

AMD EPYC 9004 Genoa CXL Overview

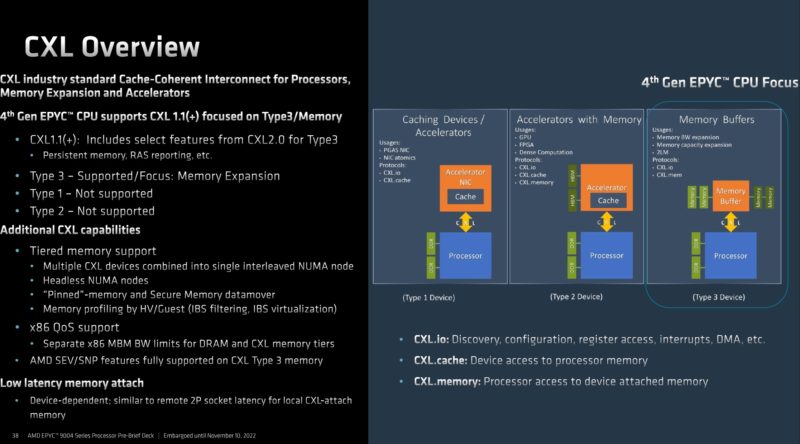

The new chips support CXL 1.1 with some forward features. AMD is only supporting Type 3 memory buffers that one can think of as memory expansion devices. These generally show up in operating systems as new NUMA nodes with attached memory capacity, but without CPUs.
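On Linux, one way to spot such nodes is to look for NUMA nodes whose CPU list is empty. The sketch below reads the standard sysfs layout under `/sys/devices/system/node`; the function name and the `sysfs_root` parameter are only for illustration. Note that a CPU-less node is not necessarily CXL memory, since other memory types can also appear this way, so treat this as a hint rather than a definitive CXL detector.

```python
from pathlib import Path


def memory_only_nodes(sysfs_root: str = "/sys/devices/system/node") -> list[int]:
    """Return the IDs of NUMA nodes that have no CPUs attached."""
    nodes = []
    for node_dir in Path(sysfs_root).glob("node[0-9]*"):
        node_id = node_dir.name[len("node"):]
        cpulist_file = node_dir / "cpulist"
        if not node_id.isdigit() or not cpulist_file.is_file():
            continue
        # An empty cpulist means the node has memory but no CPUs.
        if cpulist_file.read_text().strip() == "":
            nodes.append(int(node_id))
    return sorted(nodes)


# On a system without this sysfs tree, the result is simply an empty list.
print("CPU-less NUMA nodes:", memory_only_nodes())
```

Tools such as `numactl --hardware` present the same information, listing these nodes with an empty `cpus:` line.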

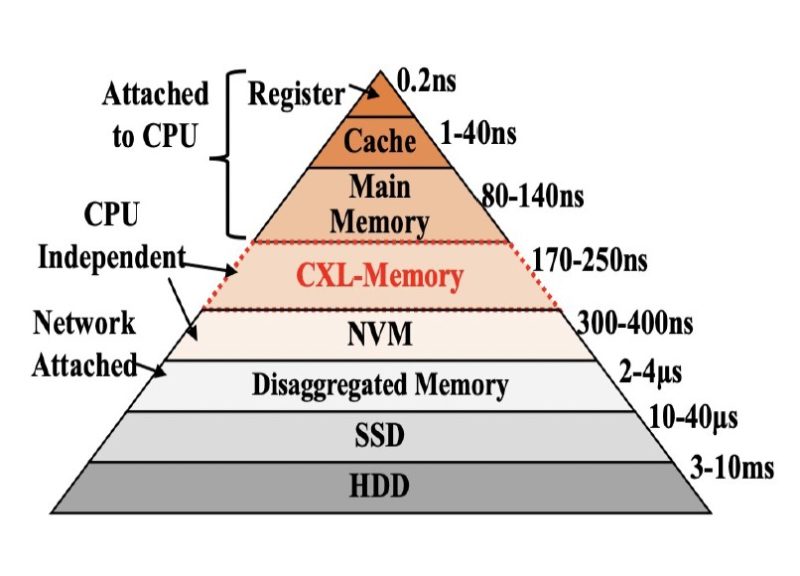

Latency is on the order of accessing memory connected to the remote socket’s CPU in a dual-socket server. Here is the latency hierarchy that we saw in "Compute Express Link CXL Latency How Much is Added at HC34".

The key here is that with up to 64 lanes that can be used for CXL devices, and a CXL 1.1 x16 connection offering roughly as much bandwidth as two DDR5 channels, AMD can, in theory, get not just more memory capacity with CXL 1.1 devices, but also more available bandwidth (whether it can use that bandwidth is another story). If it could use all of those lanes for memory, then a 1P Genoa system would, in theory, have 12 local DDR5 channels plus the equivalent of ~8 more via CXL 1.1 devices that will look like memory sitting on other non-processor-attached NUMA nodes. That is why the next generation of systems with CXL are going to start getting crazy.
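Here is a back-of-the-envelope sketch of that comparison. It assumes CXL 1.1 runs over PCIe Gen5 signaling at 32 GT/s per lane with 128b/130b encoding, counts one direction of the link only, and ignores CXL protocol overhead, so it is an upper bound rather than what a real device will deliver.

```python
# Raw link bandwidth of a CXL 1.1 x16 connection vs one DDR5-4800 channel.
GT_PER_LANE = 32          # PCIe Gen5 signaling rate in GT/s per lane
ENCODING = 128 / 130      # 128b/130b line encoding efficiency
LANES_PER_LINK = 16
CXL_LANES = 64            # lanes usable for CXL devices, from the text

ddr5_channel_gbs = 4800 * 8 / 1000                              # 38.4 GB/s
x16_gbs = GT_PER_LANE * LANES_PER_LINK * ENCODING / 8           # per direction
links = CXL_LANES // LANES_PER_LINK                             # 4 x16 links

channels_per_link = x16_gbs / ddr5_channel_gbs
print(f"x16 link:     {x16_gbs:.1f} GB/s per direction")
print(f"DDR5-4800:    {ddr5_channel_gbs:.1f} GB/s per channel")
print(f"One x16 link: ~{channels_per_link:.2f} DDR5-4800 channels")
print(f"{links} x16 links:  ~{links * channels_per_link:.1f} channel equivalents")

# Using the article's rounding of "roughly two channels" per x16 link:
article_total = 12 + links * 2
print(f"Local + CXL channel equivalents (article rounding): {article_total}")
```

Counting one direction only, each x16 link lands somewhat under two DDR5-4800 channels. The link is full duplex while a DDR channel shares its bandwidth between reads and writes, so the "roughly two" figure is a reasonable rounding for mixed traffic.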

Talking about the parts is great, but next, let us get to the SKUs themselves.

Any chance of letting us know what the idle power consumption is?

$131 for the cheapest DDR5 DIMM (16GB) from Supermicro’s online store

That’s $3,144 just for memory in a basic two-socket server with all DIMMs populated.

Combined with the huge jump in pricing, I get the feeling that this generation is going to eat us alive if we’re not getting those sweet hyperscaler discounts.

I like that the inter CPU PCIe5 links can be user configured, retargeted at peripherals instead. Takes flexibility to a new level.

Hmm… Looks like Intel’s about to get forked again by the AMD monster. AMD’s been killing it ever since Zen 1. So cool to see the fierce competitive dynamic between these two companies. So Intel, YOU have a choice to make. Better choose wisely. I’m betting they already have their decisions made. :-)

2 hrs later I’ve finished. These look amazing. Great work explaining STH

Do we know whether Sienna will effectively eliminate the niche for threadripper parts; or are they sufficiently distinct in some ways as to remain as separate lines?

In a similar vein, has there been any talk (whether from AMD or system vendors) about doing ryzen designs with ECC that’s actually a feature rather than just not-explicitly-disabled, to answer some of the smaller xeons and server-flavored atom derivatives?

This generation of epyc looks properly mean; but not exactly ready to chase xeon-d or the atom-derivatives down to their respective size and price.

I look at the 360W TDP and think “TDPs are up so much.” Then I realize that divided over 96 cores that’s only 3.75W per core. And then my mind is blown when I think that servers of the mid 2000s had single core processors that used 130-150W for that single core.

Why is the “Sienna” product stack even designed for 2P configurations?

It seems like the lower-end market would be better served by “Sienna” being 1P only, and anything that would have been served by a 2P “Sienna” system instead use a 1P “Genoa” system.

Dunno, AMD has the tech, why not support single and dual sockets? With single and dual socket Sienna you should be able to be competitive on price *AND* price/perf compared to the Intel 8 channel memory boards for uses that aren’t memory bandwidth intensive. For those looking for max performance and bandwidth/core AMD will beat Intel with the 12 channel (actually 24 channel x 32 bit) Epyc. So basically Intel will be sandwiched by the cheaper 6 channel from below and the more expensive 12 channel from above.

With PCIe 5 support apparently being so expensive on the board level, wouldn’t it be possible to only support PCIe 4 (or even 3) on some boards to save costs?

All the other benchmarks are amazing, but I saw a molecular dynamics test on another website and Houston, we have a problem! Why?

Olaf Nov 11 I think that’s why they’ll just keep selling Milan

@Chris S

Siena is a 1p only platform.

Looks great for anyone that can use all that capacity, but for those of us with more modest infrastructure needs there seems to be a bit of a gap developing where you are paying a large proportion of the cost of a server platform to support all those PCIE 5 lanes and DDR5 chips that you simply don’t need.

Flip side to this is that Ryzen platforms don’t give enough PCIE capacity (and questions about the ECC support), and Intel W680 platforms seem almost impossible to actually get hold of.

Hopefully Milan systems will be around for a good while yet.

You are jumping around WAY too much.

How about stating how many levels there are in CPUS. But keep it at 5 or less “levels” of CPU and then compare them side by side without jumping around all over the place. It’s like you’ve had five cups of coffee too many.

You obviously know what you are talking about. But I want to focus on specific types of chips because I’m not interested in all of them. So if you broke it down in levels and I could skip to the level I’m interested in with how AMD is vs Intel then things would be a lot more interesting.

You could have sections where you say that they are the same no matter what or how they are different. But be consistent from section to section where you start off with the lowest level of CPUs and go up from there to the top.

There may have been a hint on pages 3-4 but I’m missing what those 2000 extra pins do, 50% more memory channels, CXL, PCIe lanes (already 160 on previous generation), and …

Does anyone know of any benchmarking for the 9174F?

On your EPYC 9004 series SKU comparison the 24 cores 9224 is listed with 64MB of L3.

As a chiplet has a maximum of 8 cores, one needs a minimum of 3 chiplets to get 24 cores.

So unless AMD disables part of the L3 cache of those chiplets, a minimum of 96 MB of L3 should be shown.

I will venture the 9224 is a 4-chiplet SKU with 6 cores per chiplet, which should give a total of 128MB of L3.

EricT – I just looked up the spec, it says 64MB https://www.amd.com/en/products/cpu/amd-epyc-9224

Patrick, I know, but it must be a clerical error, or they have decided to reduce the L3 on each of the 4 chiplets to 16MB, which I very much doubt.

3 chiplets are not an option either as 64 is not divisible by 3 ;-)

Maybe you can ask AMD what the real spec is, because 64MB seems weird?

@EricT I got to use one of these machines (9224) and it is indeed 4 chiplets, with 64MB L3 cache total. Evidently a result of parts binning and with a small bonus of some power saving.