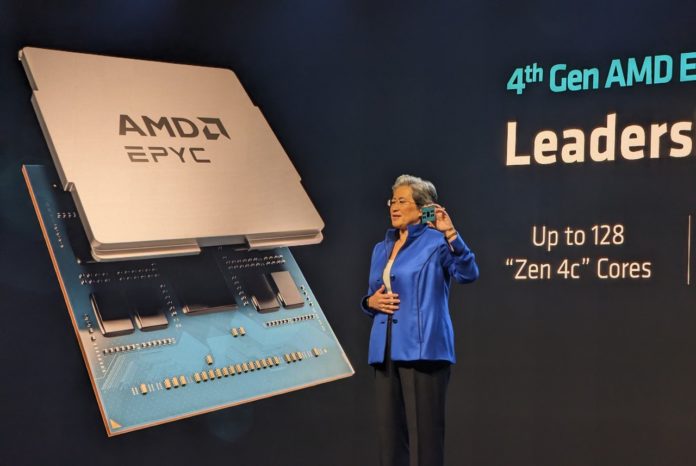

Today AMD launched a new series of processors, the AMD EPYC Bergamo. In many ways, this is something new, with up to 128 cores per socket and 256 threads. Given the popularity of 2U 4 node chassis on the market, this will make a 2U4N platform a 2048 thread machine or one with 1024 threads per U. AMD’s approach is straightforward. It is trading cache size for core density in this generation. This is one of AMD’s biggest launches ever.

We are doing these live during AMD’s event, so please excuse typos here.

AMD EPYC Bergamo Launched 128 Cores Per Socket

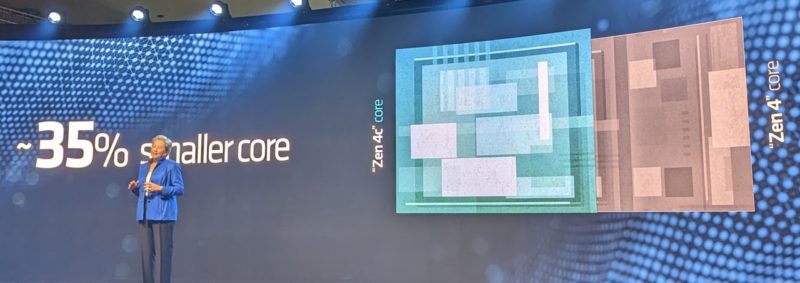

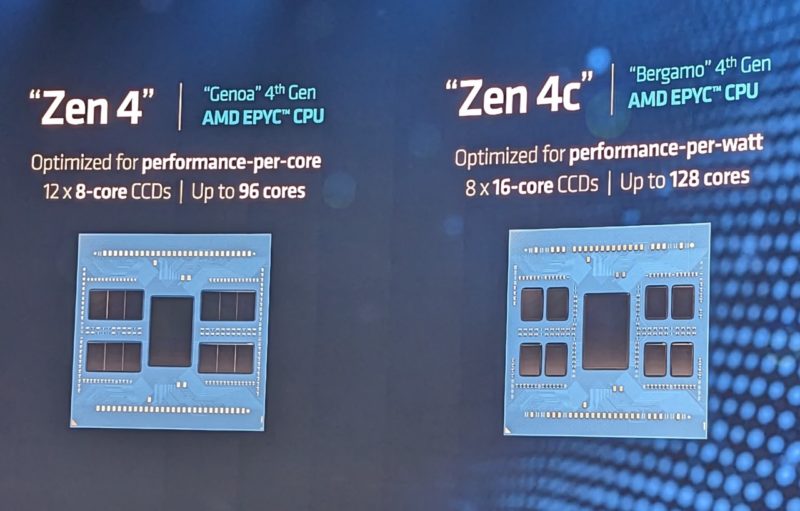

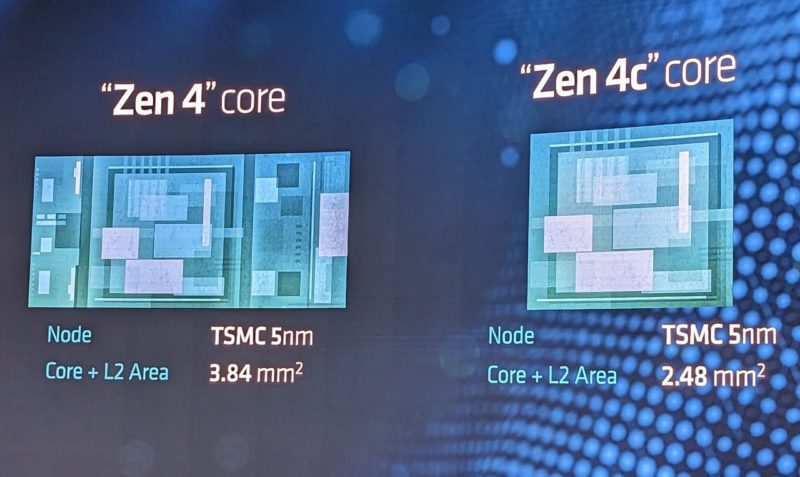

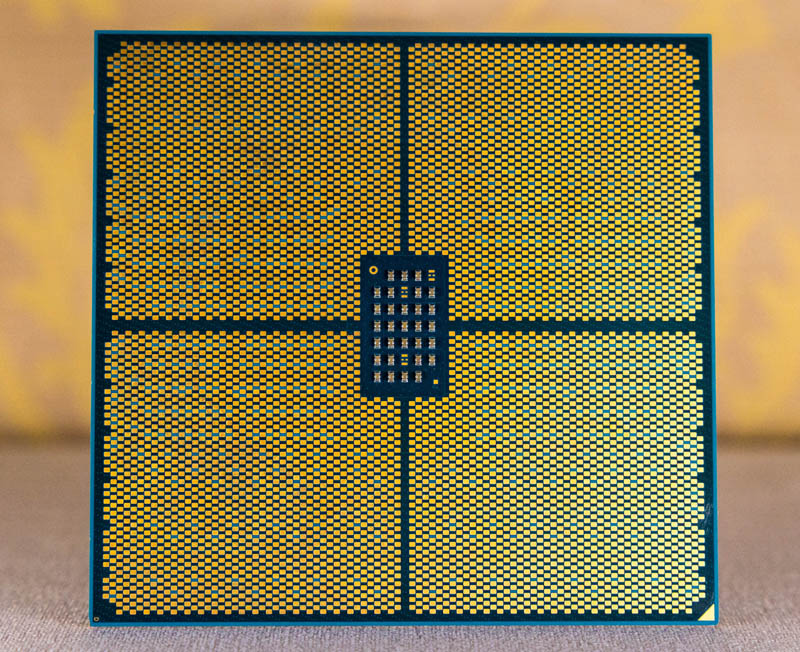

Setting the stage, the AMD EPYC “Bergamo” or the EPYC 97×4 series uses a core called “Zen 4c”. The simple idea of this core is that AMD is reducing the L3 cache so it can fit more cores onto each CCD. AMD says its Zen 4c core is 35% smaller than the Zen 4 core, largely from reducing cache sizes and optimizing the footprint around that.

For a SP5, that means up to 128 cores.

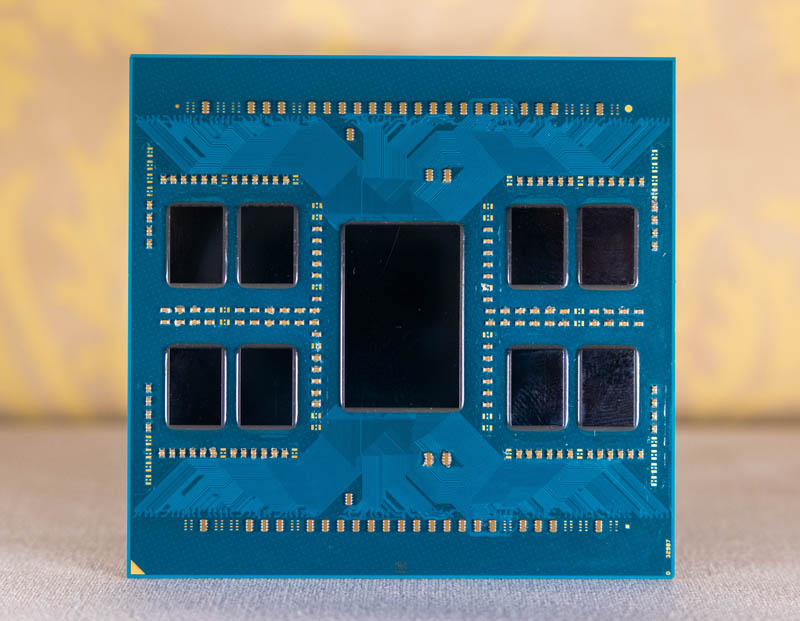

The construction of Bergamo should be fairly familiar. We have the same IO die as we have with the AMD EPYC 9004 Genoa family, this is mostly a CCD change. That means we have the same memory, PCIe Gen5, and dual socket capabilities. If you want to learn more about the platform, see AMD EPYC Genoa Gaps Intel Xeon in Stunning Fashion.

In terms of performance, initial benchmarks put this part within striking distance of 1000 SPECrate2017_int_base per socket. That is awesome, and it is one of the biggest differentiators of this part. These are designed for scale-out cloud instances, not performance-per-core applications. Zen 4c, being a cache-reduced Zen 4, still is a very capable core and has features like AVX-512 and VNNI for AI inference.

Beyond that, the real goal is to provide a 256 vCPU, per socket platform. That spec is one where AMD and Ampere are going to each claim leadership. Ampere AmpereOne has up to 192 Arm cores per socket. Since it is not realistic for most cloud providers to do a single vCPU instance size splitting physical and SMT threads into separate vCPUs, Ampere will claim it has leadership in odd-numbered vCPU instance sizes with 192 physical cores to AMD’s 128. If one has an even number of vCPUs, say 2 vCPUs, then AmpereOne will have a maximum of 96 instances per socket while AMD will be at 128 still. For its part, Intel has a maximum of 60 cores/ 120 threads in this generation. Intel is counting on Sierra Forest to play in this space using its rapidly evolving E-cores.

Final Words

We will be testing Bergamo soon enough, today is just the launch (again.) Make no mistake though, this is a HUGE launch. When we discuss chips with hyper-scale vendors and major chip companies, the 5-year projections vary on how much will be these scale-out cores. Some say the answer is 25% of the market. Others are as high as 75%. What we know is that this is a huge new emerging segment and AMD has a plan to capture the market.

AMD is also announcing Genoa-X, the 3D V-Cache version of its EPYC Genoa today. Looking at AMD’s tools now, they can reduce the core footprint by reducing cache sizes on CCDs. AMD has another technology, however, to add a cache on a different plane using 3D V-Cache.

One of the cool announcements is that Meta has deployed “hundreds of thousands” of AMD servers since it started deploying AMD in 2019. Meta says that Bergamo is showing a 2.5x performance uplift over Milan, so that is why Meta will be deploying Bergamo as a next-generation platform.

Making a clear call today: AMD is going to put these two technologies together and create higher core count processors with 3D V-Cache added to pump core counts much higher in the future.

“… this will make a 2U4N platform a 2048 thread machine or one with 1024 cores per U.”

2048 threads/2U is correct but

4 nodes/2U * 2 sockets/node * 128 cores/socket = 1024 cores/2U = 512 cores/U

Am I missing something?

“AMD says its Zen 4c core is 35% smaller than the Zen 4 core, largely from reducing cache sizes and optimizing the footprint around that.”

I don’t think this statement is true. The cache part of the core is where they’ve reduced the footprint the least. The reduction in cache is halving of L3 cache per core, but that’s not part of the core.

The 35% reduction is probably due to a different frequency optimization point, and through having traditional Zen 4 verified structures which probably means you can make stricter assumptions.