Key Lessons Learned: Competition with Intel

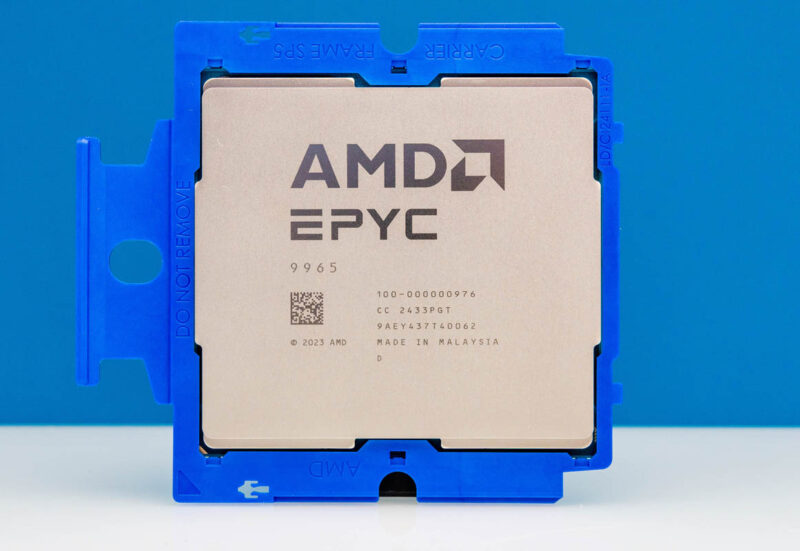

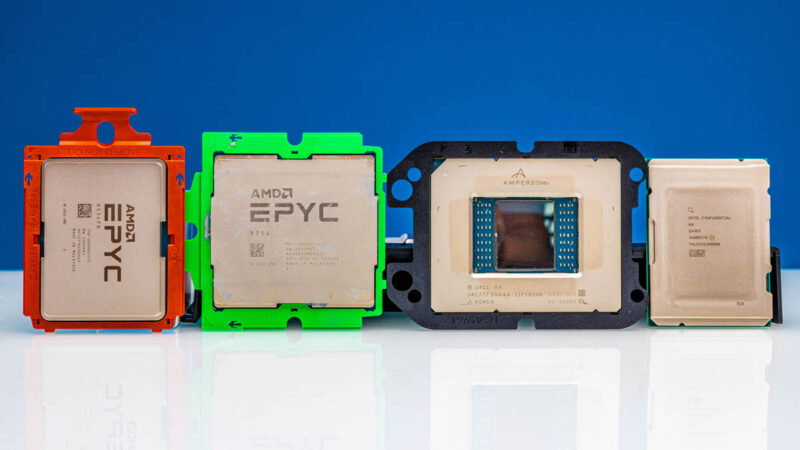

In terms of competing directly with Intel for the top-end sockets in the top AI nodes, the situation is more nuanced than one might think. At 192 cores, the Zen 5c-based AMD EPYC 9965 is great for throughput and excellent for virtualization and cloud workloads. Still, when we compare the 128-core Intel Granite Rapids-AP against the 128-core AMD EPYC 9755, AMD does not have the outright leadership it had before. Put another way, AMD is no longer competing only with itself at the top end.

Intel has more PCIe Gen5 lanes (192 vs. 160), faster memory (DDR5-6400 vs. DDR5-6000), and the MCRDIMM/MRDIMM 8000MT/s option. Intel also has accelerators like AMX for AI and QAT. In raw CPU performance, AMD is still doing great. At the whole-system level, though, Intel is once again showing up with something competitive at the top end.
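To put the memory speed difference in perspective, here is a rough sketch of theoretical peak DRAM bandwidth per socket. It assumes 12 DDR5 channels per socket on both platforms and an 8-byte (64-bit) data path per channel; neither figure is stated in the text above, so treat them as assumptions. Real sustained bandwidth, for example as measured by STREAM, will be lower than these peaks.

```python
# Theoretical peak DRAM bandwidth per socket.
# Assumption: 12 DDR5 channels per socket on both platforms (not stated above).
CHANNELS_PER_SOCKET = 12
# Assumption: 64-bit data path per channel, ECC bits excluded.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(mt_per_s: int, channels: int = CHANNELS_PER_SOCKET) -> float:
    """Return theoretical peak bandwidth in decimal GB/s."""
    return mt_per_s * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


configs = {
    "Intel DDR5-6400": 6400,
    "AMD DDR5-6000": 6000,
    "Intel MCRDIMM/MRDIMM 8000": 8000,
}

for name, speed in configs.items():
    print(f"{name}: {peak_bandwidth_gbs(speed):.1f} GB/s per socket")

# Relative speed-up of DDR5-6400 over DDR5-6000 at equal channel counts.
print(f"DDR5-6400 vs DDR5-6000: +{(6400 / 6000 - 1):.1%}")
```

At equal channel counts, the gap between DDR5-6400 and DDR5-6000 is modest; the MCRDIMM/MRDIMM option is where the larger bandwidth difference would come from.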

The AMD EPYC 9965 is truly spectacular. It may not beat the full-L3-cache Zen 5 parts or the full P-core Granite Rapids-AP parts on per-core performance, but its competition is different. One could rightly argue that the AMD EPYC 9965, with 192 cores and 384 threads, competes against the Intel Xeon 6780E and Xeon 6766E, which scale to 144 cores at only 250-330W TDP. Realistically, though, AMD is competing against the unreleased 288-core Sierra Forest-AP, which lacks SMT. Our best guess is that AMD will have more raw performance than a 288 E-core Sierra Forest-AP. For a sense of scale, a 2P system with two 144-core Intel Xeon 6780E Sierra Forest CPUs has a SPECrate2017_int_base score of around 1410. A single 288-core Sierra Forest-AP (6900E series) has the same number of cores as two of those parts, albeit with a different I/O ratio, so our best guess is that a 2P Sierra Forest-AP system should achieve a SPECrate2017_int_base of 2820 +/- 10%. That is not too far off from the AMD EPYC 9965 at around 3000. The wildcard, of course, is that if a cloud provider wants to offer 1 vCPU VMs, Sierra Forest-AP will be denser because each vCPU maps to a full physical core rather than an SMT thread.
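The projection above is simple arithmetic, sketched below using the approximate scores quoted in this section. The vCPU density helper, `one_vcpu_vms`, is a hypothetical model rather than any cloud API, and it assumes a provider will not place two different tenants on the SMT siblings of one core. Under that assumption each 1 vCPU VM consumes a whole core, which is why the 288-core part comes out ahead.

```python
# Approximate 2P SPECrate2017_int_base figures quoted in the text.
XEON_6780E_2P_SCORE = 1410  # 2x 144-core Sierra Forest
EPYC_9965_2P_SCORE = 3000   # 2x 192-core Turin (Zen 5c)

# One 288-core Sierra Forest-AP has as many cores as two 144-core parts,
# so a 2P system is projected at twice the 2P 6780E score (I/O differences ignored).
projected = 2 * XEON_6780E_2P_SCORE
low, high = projected * 0.9, projected * 1.1  # the +/- 10% band

print(f"Sierra Forest-AP 2P projection: {projected} (range {low:.0f}-{high:.0f})")
print(f"EPYC 9965 2P vs projection: {EPYC_9965_2P_SCORE / projected:.2f}x")


def one_vcpu_vms(cores: int, threads_per_core: int, share_cores: bool) -> int:
    """Hypothetical count of 1 vCPU VMs per socket.

    If a provider refuses to put two tenants on SMT siblings of the same core,
    every 1 vCPU VM occupies a full core regardless of SMT.
    """
    return cores * threads_per_core if share_cores else cores


print("EPYC 9965, no core sharing:", one_vcpu_vms(192, 2, share_cores=False))
print("Sierra Forest-AP:", one_vcpu_vms(288, 1, share_cores=False))
```

If the provider did allow tenants on SMT siblings, the EPYC 9965 would expose 384 vCPUs per socket, so the density argument hinges entirely on that placement policy.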

In 2019, when we published our AMD EPYC 7002 Series Rome Delivers a Knockout piece, that is exactly what it was. Intel has spent the last four years climbing back. It can now compete in the 128-core, full P-core part of the stack, and the 144-core Intel Xeon 6766E is a really neat part, but Intel does not have a direct answer for the EPYC 9965, at least until the 6900E series launches.

Let us quickly talk about list pricing. Intel must drop its Sierra Forest Xeon 6700E list prices significantly. At $11,350, the Intel Xeon 6780E is priced too high against the $14,813 AMD EPYC 9965, given that it offers around half the performance of its AMD competition.
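A quick way to see the mismatch is performance per list dollar. The sketch below halves the approximate 2P SPECrate2017_int_base scores quoted earlier to get per-socket figures and divides by list price; it ignores discounts, platform cost, and power, so it is only a rough illustration.

```python
# name: (approx. 2P SPECrate2017_int_base, list price in USD), from the text
parts = {
    "Intel Xeon 6780E": (1410, 11_350),
    "AMD EPYC 9965": (3000, 14_813),
}


def score_per_kusd(two_socket_score: float, list_price: float) -> float:
    """Per-socket score per $1,000 of list price."""
    per_socket = two_socket_score / 2
    return per_socket / (list_price / 1000)


for name, (score, price) in parts.items():
    print(f"{name}: {score / 2:.0f} per socket, {score_per_kusd(score, price):.1f} per $1k")

intel = score_per_kusd(*parts["Intel Xeon 6780E"])
amd = score_per_kusd(*parts["AMD EPYC 9965"])
print(f"Intel/AMD performance: {1410 / 3000:.2f}")
print(f"AMD performance-per-dollar advantage: {amd / intel:.2f}x")
```

Because both scores are halved, the per-socket step does not change the ratio; it just makes the per-chip numbers easier to read.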

At $17,800, the Intel Xeon 6980P is competitive against the $12,984 AMD EPYC 9755, but it is hard to look at the numbers and justify a 37% list price premium on the Intel side.
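The 37% figure follows directly from the two list prices. The sketch below also shows the flip side: to match the EPYC 9755 on performance per list dollar, the Xeon 6980P would need to deliver the same multiple of its throughput. No benchmark scores are assumed here, only the prices from the text.

```python
INTEL_6980P_LIST = 17_800
AMD_9755_LIST = 12_984

# Premium of the Intel list price over the AMD list price.
premium = INTEL_6980P_LIST / AMD_9755_LIST - 1
print(f"Intel list price premium: {premium:.1%}")

# Equal performance per list dollar requires perf_intel / perf_amd == price ratio.
parity_ratio = INTEL_6980P_LIST / AMD_9755_LIST
print(f"Throughput needed for parity: {parity_ratio:.2f}x the EPYC 9755")
```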

After discounting, we expect pricing to be better aligned. Still, Intel needs to reset its list pricing methodology for the non-hyperscale market since it is hard to justify the current pricing structure. It just looks strange optically to have list prices this far off.

Competition is good for the industry, and we have some again.

Key Lessons Learned: Competition with Arm

Here is the wildcard: Arm. If you are an enterprise buying hardware, you effectively have your choice of the Ampere Altra (Max) and AmpereOne CPUs for E-cores, as well as the NVIDIA Grace Superchip/Grace Hopper for P-cores.

AMD made major strides against Ampere. We just showed the AmpereOne A192-32X in our Supermicro MegaDC ARS-211M-NR Review. AMD is now very competitive with the A192-32X on both performance and performance per watt. Ampere’s list pricing is much lower, but between the Intel Xeon 6780E/6766E and the AMD EPYC 9965, x86 has closed the gap on energy efficiency. Ampere Altra Max is on a different curve: it is a lower-cost, lower-power, lower-feature part. For some applications, like virtualizing Arm-based workloads, Ampere makes a lot of sense. Ampere can play, but at this point it needs to play at a discount to AMD and Intel, which is part of the company’s intent.

NVIDIA is really interesting. We reviewed the NVIDIA GH200 platform, and from a raw CPU performance perspective, EPYC is faster, while the new DDR5-6000 speeds help narrow Grace’s memory bandwidth advantage. The 144-core NVIDIA Grace Superchip is really two CPUs in a single module. From a scalability standpoint, AMD can get much higher performance, core count, and memory capacity per system than NVIDIA. It is fairly hard to argue for NVIDIA Grace over x86 right now unless you really want Arm, or your GPU allotment is tied to a Grace deployment.

The bigger question is on the hyperscale side. Hyperscalers are the ones driving Arm adoption in the cloud. 192 cores/384 threads of a solid Zen 5c CPU is going to put folks on notice. At the same time, if a hyperscale customer is religious about delivering custom Arm CPUs, then the big question is whether this is enough to change religion.

Final Words

Underlying this entire review is a key change AMD made. Previously, there were Zen 4 and Zen 4c, as Genoa and Bergamo respectively. Now, we have a single “Turin” generation with both Zen 5 and Zen 5c variants. One has higher frequencies and more cache but fewer cores; the other has many more cores and is tuned for throughput workloads. That works. Intel has a very different message with P-cores and E-cores.

What is somewhat wild is how much these 768-thread dual-socket servers push boundaries. We saw appreciable benefits from using faster SSDs and networking, especially on the EPYC 9965. The higher-end 500W SKUs also push power to the point that those still using 120V power supplies are going to need to reconsider. Running into three fundamental scaling limits (storage, networking, and power) when testing a new CPU was really cool to see. It also means we need to tell folks to think about the entire system’s performance, not just the CPUs, when they put this many cores into a system.
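To make the 120V point concrete, here is a rough sketch of wall current using I = P / V. Only the 500W CPU TDP and the 2P/192-core/SMT configuration come from the text; the platform overhead, PSU efficiency, and the 15 A circuit limited to 80% for continuous load (common North American practice) are assumptions for illustration. Real server PSUs may also derate their output at low-line input, which varies by vendor.

```python
# Thread count for a 2P EPYC 9965 system, from the figures in the text.
SOCKETS = 2
CORES_PER_SOCKET = 192
THREADS_PER_CORE = 2  # SMT enabled
print("Hardware threads:", SOCKETS * CORES_PER_SOCKET * THREADS_PER_CORE)

CPU_TDP_W = 500        # higher-end Turin SKUs mentioned above
PLATFORM_W = 400       # hypothetical: memory, NICs, SSDs, fans
PSU_EFFICIENCY = 0.94  # hypothetical AC-to-DC efficiency


def wall_current_amps(dc_watts: float, volts: float, efficiency: float) -> float:
    """Current drawn at the wall for a given DC load (I = P / V)."""
    return dc_watts / efficiency / volts


dc_load = SOCKETS * CPU_TDP_W + PLATFORM_W
for volts in (120, 240):
    print(f"{volts} V: {wall_current_amps(dc_load, volts, PSU_EFFICIENCY):.1f} A")

# Assumption: 15 A / 120 V branch circuit, 80% limit for continuous load.
budget_120v_amps = 15 * 0.8
fits = wall_current_amps(dc_load, 120, PSU_EFFICIENCY) <= budget_120v_amps
print("Fits one 15 A 120 V circuit:", fits)
```

Doubling the voltage halves the current for the same load, which is why higher-power servers are usually deployed on 200-240V feeds.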

I’ve come to rely on your cross-generational SKU stacks — hope you get that updated with these new CPUs!

Smart Data Cache Injection (SDCI), which allows direct insertion of data from I/O devices into the L3 cache, could be a huge gain for low-latency network I/O workloads. It’s similar to Intel’s Data Direct I/O (DDIO).

There are great chips here! AMD engineers doing great things.

9965 what a time to be alive

Fascinating to finally see something that hits the limits of x4 PCIe4 SSDs in practice.

768 threads in a server & benchmarks… for a fun test you could run a CPU-rendered 3D FPS game. IIRC there is a CPU version of Crysis out there somewhere.

I have a few questions:

Why does the client need so many VMs to run a workload instead of using containers and drastically reducing overhead?

Second question: can you go buy a 9175F and test that one with gaming?

I’m surprised you perform benchmarks on such a 2P system with NPS=1 and “L3 as NUMA Domain” turned off.

Such a processor deserves NPS=4 + L3_LLC=On to let the Linux kernel do proper scheduling.