A Note on DDR5 Memory Speeds

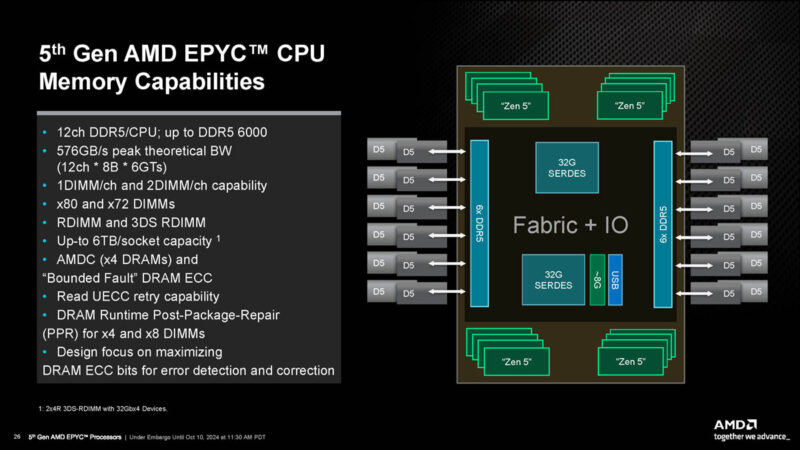

In our initial briefings, AMD said it would support DDR5-6000 on the platform.

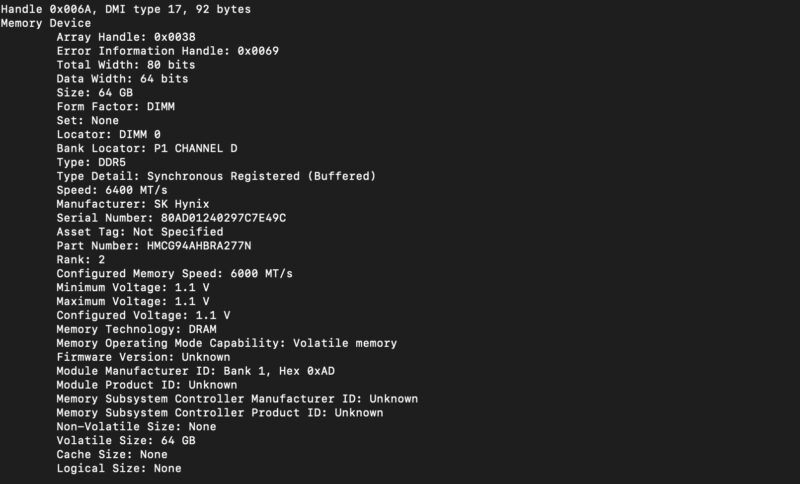

Indeed, when we looked at our AMD Volcano platform, we found DDR5-6400 RDIMMs running at 6000MT/s.

Later, AMD said that it would validate DDR5-6400 in customer platforms. AMD seems to have emphasized this much more since Intel’s Xeon 6900P launch. The best guidance we can give is to check your specific platform: DDR5-6000 support should be standard, with DDR5-6400 available on some validated platforms.
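To put the DDR5-6000 versus DDR5-6400 difference in perspective, here is a minimal sketch of theoretical peak memory bandwidth per socket. It assumes 12 DDR5 channels per socket and 8 bytes of data per transfer per channel (ECC bits excluded). These are ceilings, and measured STREAM results land below them.

```python
# Theoretical peak DRAM bandwidth per socket (a sketch).
# Assumptions: 12 DDR5 channels per socket, 8 bytes of data per
# transfer per channel, ECC bits excluded. Real bandwidth is lower.
CHANNELS_PER_SOCKET = 12  # assumed channel count
BYTES_PER_TRANSFER = 8    # 64-bit data path per channel


def peak_bandwidth_gbs(mt_per_s: int, channels: int = CHANNELS_PER_SOCKET) -> float:
    """Peak bandwidth in GB/s (10^9 bytes/s) for a DDR speed in MT/s.

    MT/s * bytes per transfer gives MB/s per channel; dividing by 1000 gives GB/s.
    """
    return mt_per_s * BYTES_PER_TRANSFER * channels / 1000


for speed in (6000, 6400):
    print(f"DDR5-{speed}: {peak_bandwidth_gbs(speed):.1f} GB/s per socket")
```

Under these assumptions, moving from DDR5-6000 to DDR5-6400 raises the per-socket ceiling from 576 GB/s to 614.4 GB/s, about 6.7%.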

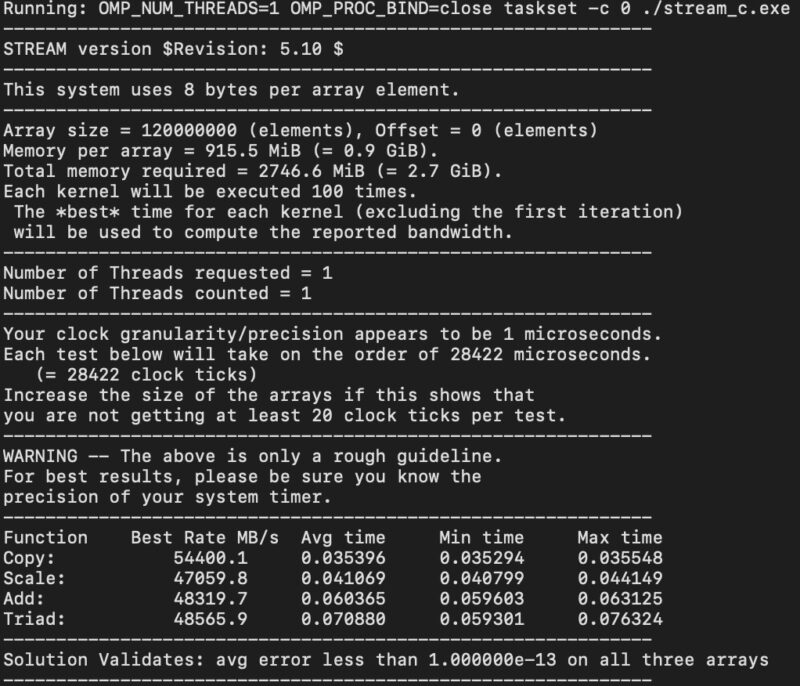

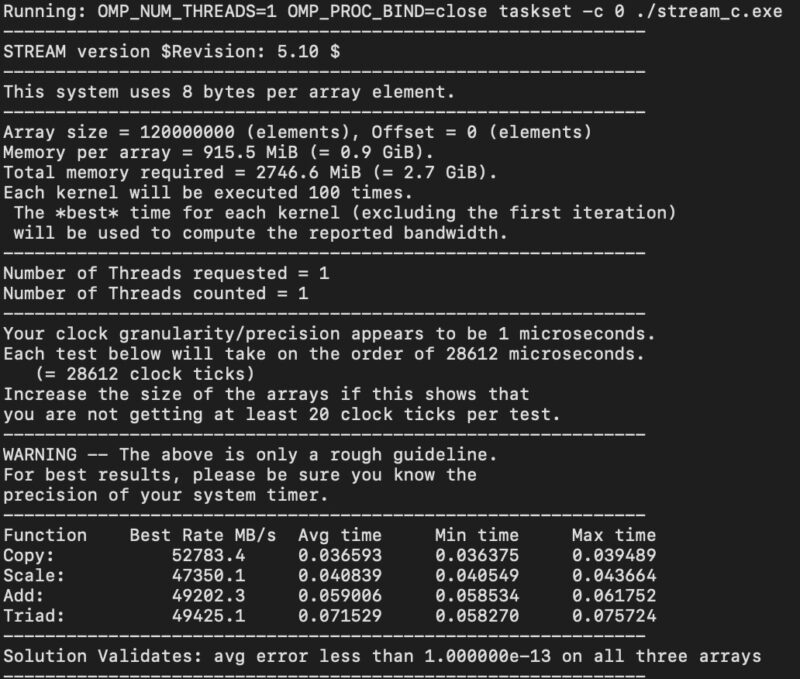

We ran our gcc-compiled STREAM benchmark both single-threaded and across the full core count of a socket. These are the same gcc builds we have used on other platforms, including the NVIDIA GH200. AMD can achieve higher results using its AOCC compiler, so treat these numbers as an additional data point, with much less optimization than what AMD shows. First, here is the AMD EPYC 9965 at one core:
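STREAM reports bandwidth by crediting each kernel with the bytes it nominally reads and writes, rather than by measuring DRAM traffic directly. The sketch below reproduces that accounting for 8-byte doubles: Copy and Scale touch two arrays per element, while Add and Triad touch three. The function names are hypothetical and this is not the STREAM source code.

```python
# STREAM-style bandwidth accounting (illustrative sketch).
#   Copy:  a[i] = b[i]              -> 2 arrays touched
#   Scale: a[i] = q * b[i]          -> 2 arrays
#   Add:   a[i] = b[i] + c[i]       -> 3 arrays
#   Triad: a[i] = b[i] + q * c[i]   -> 3 arrays
ARRAYS_TOUCHED = {"copy": 2, "scale": 2, "add": 3, "triad": 3}


def stream_bytes(kernel: str, n: int, elem_size: int = 8) -> int:
    """Bytes credited to one pass of `kernel` over arrays of n elements."""
    return ARRAYS_TOUCHED[kernel] * elem_size * n


def bandwidth_gbs(kernel: str, n: int, seconds: float) -> float:
    """Reported bandwidth in GB/s (10^9 bytes/s) for one timed pass."""
    return stream_bytes(kernel, n) / seconds / 1e9


def triad(a: list, b: list, c: list, q: float) -> None:
    """Pure-Python Triad kernel, only to show the access pattern."""
    for i in range(len(a)):
        a[i] = b[i] + q * c[i]
```

For example, a hypothetical Triad pass over 100 million doubles that takes 0.5 seconds is credited with 2.4 GB of traffic, or 4.8 GB/s. STREAM itself times each kernel over several iterations and reports the best rate; the Python loop here is far too slow to measure memory bandwidth and only illustrates what the kernel does.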

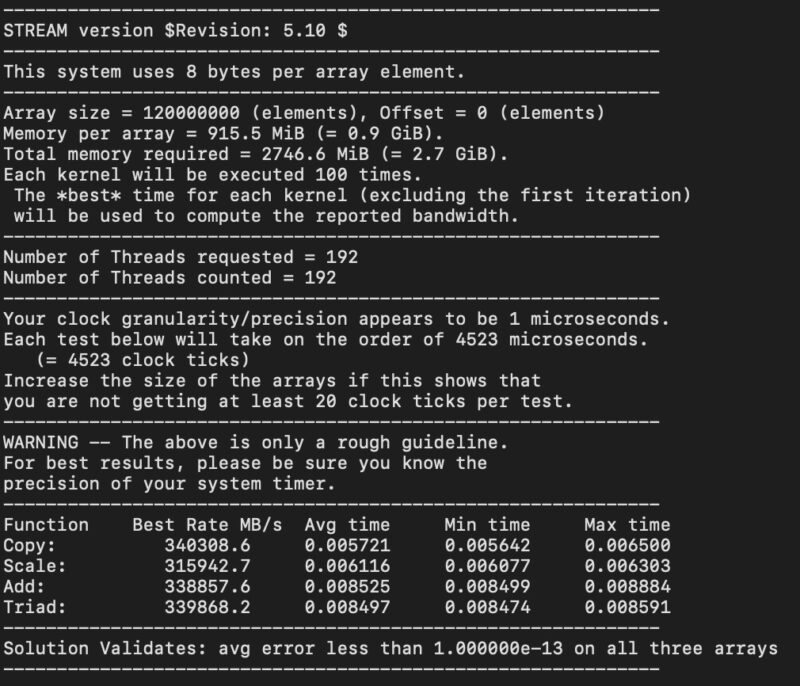

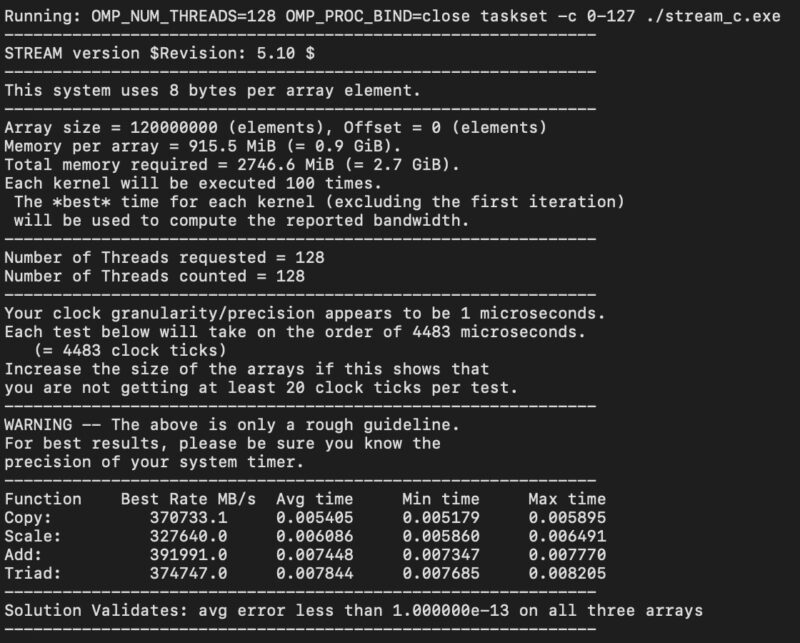

Here it is across all 192 cores:

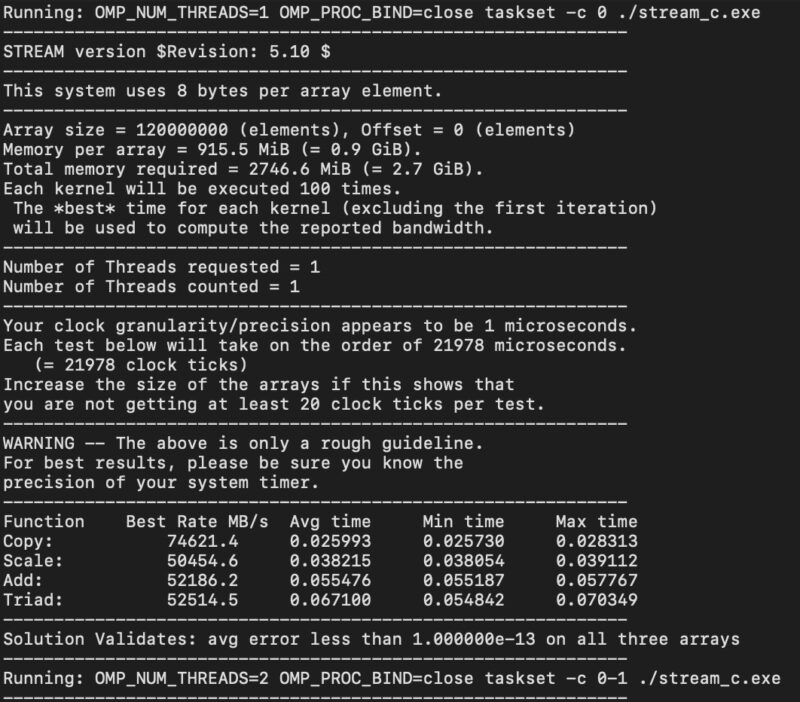

Here is the AMD EPYC 9755 at one core:

Here is the same at 128 cores:

Here is the single core AMD EPYC 9575F:

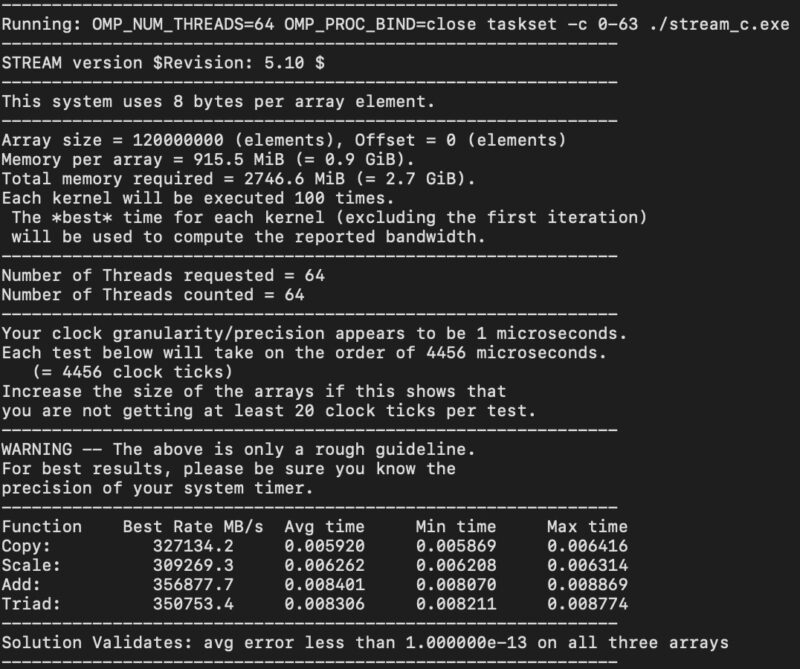

Here is the 64-core AMD EPYC 9575F:

Results are good across the different SKUs. The AMD EPYC 9575F not only runs at high frequencies, boosting up to 5GHz, but also has a lot of memory bandwidth per core. Not everyone needs huge per-core performance figures.
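A rough way to see why the 64-core EPYC 9575F stands out is to divide the same theoretical per-socket memory bandwidth across each SKU's cores. This sketch assumes every SKU sees 12 channels of DDR5-6000 per socket (576 GB/s peak); it describes a ceiling, not our measured results.

```python
# Theoretical peak memory bandwidth per core, by SKU (a sketch).
# Assumption: each socket has 12 channels of DDR5-6000,
# i.e. 6000 MT/s * 8 bytes * 12 channels = 576 GB/s. Measured values are lower.
PEAK_SOCKET_GBS = 6000 * 8 * 12 / 1000  # 576.0 GB/s

CORES = {"EPYC 9965": 192, "EPYC 9755": 128, "EPYC 9575F": 64}


def per_core_gbs(cores: int, socket_gbs: float = PEAK_SOCKET_GBS) -> float:
    """Divide socket bandwidth evenly across all active cores."""
    return socket_gbs / cores


for sku, cores in CORES.items():
    print(f"{sku}: {per_core_gbs(cores):.1f} GB/s per core")
```

Under these assumptions, the 9575F has three times the per-core bandwidth ceiling of the 192-core 9965 (9 GB/s versus 3 GB/s).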

AMD EPYC 9005 Turin Power Consumption

The 500W parts certainly use 500W each. With 24 DIMMs, NICs, SSDs, and cooling, a single dual-socket server consumed 1.5-1.7kW of power.

We will have more on this soon with OEM platforms. Usually the development platforms have higher power consumption than OEM platforms. On the other hand, AMD’s platform was more mature than the Intel Xeon 6900P one we used a few weeks ago.

Still, the power message is simple: 120V power is going to be challenging for power supplies that can often output only ~1.2kW each. This generation’s top end effectively marks the end of 120V in racks. It was neat to see that we were running into bottlenecks with PCIe Gen4 NVMe SSDs, 100GbE networking, and 120V power supplies at the same time.
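The 120V problem comes down to simple arithmetic. This sketch assumes roughly 1.2kW of usable output per PSU at 120V, N+1 redundancy, and 208V as the higher-voltage alternative for comparison. It ignores PSU efficiency losses, so real input current would be somewhat higher.

```python
import math

# Rough 120V power budget check for a dual-socket server (a sketch).
# Assumptions: ~1.2kW usable output per PSU at 120V, N+1 redundancy,
# 208V as the comparison voltage, PSU losses ignored.
PSU_WATTS_AT_120V = 1200


def psus_needed(load_watts: float, psu_watts: float = PSU_WATTS_AT_120V,
                redundant: int = 1) -> int:
    """PSUs required to carry the load, plus `redundant` spares."""
    return math.ceil(load_watts / psu_watts) + redundant


def amps(load_watts: float, volts: float) -> float:
    """Input current for a given load and line voltage."""
    return load_watts / volts


for load in (1500, 1700):  # the measured range for the dual-socket server
    print(f"{load} W: {psus_needed(load)} PSUs with N+1, "
          f"{amps(load, 120):.1f} A at 120V vs {amps(load, 208):.1f} A at 208V")
```

At 1.5 to 1.7kW, a single 1.2kW supply can no longer carry the whole server, so a two-PSU (1+1) design loses its redundancy at 120V under these assumptions.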

Of course, the alternative is to use a lower-power platform or a single-socket platform.

Next, we have key lessons learned.

I’ve come to rely on your cross-generational SKU stacks. I hope you get them updated with these new CPUs!

Smart Data Cache Injection (SDCI), which allows I/O devices to insert data directly into the L3 cache, could be a huge gain for low-latency network I/O workloads. It’s similar to Intel’s Data Direct I/O (DDIO).

There are great chips here! AMD engineers are doing great things.

9965: what a time to be alive.

Fascinating to finally see something that hits the limits of x4 PCIe4 SSDs in practice.
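For context on that limit, here is a back-of-the-envelope calculation of raw link bandwidth, using PCIe Gen4's 16 GT/s per lane with 128b/130b encoding and 100GbE's 100 Gb/s line rate. It ignores protocol overhead such as PCIe packet headers and Ethernet framing, so usable throughput is lower.

```python
# Raw link bandwidth per direction, before protocol overhead (a sketch).
# PCIe Gen4: 16 GT/s per lane, 128b/130b line encoding.
# 100GbE: 100 Gb/s line rate.


def pcie_gen4_gbs(lanes: int) -> float:
    """Raw PCIe Gen4 bandwidth per direction in GB/s (10^9 bytes/s)."""
    gt_per_s = 16e9
    encoding_efficiency = 128 / 130
    return gt_per_s * encoding_efficiency * lanes / 8 / 1e9


def ethernet_gbs(gbit_per_s: float) -> float:
    """Convert an Ethernet line rate in Gb/s to GB/s."""
    return gbit_per_s / 8


print(f"PCIe Gen4 x4 SSD: {pcie_gen4_gbs(4):.2f} GB/s per direction")
print(f"100GbE port:      {ethernet_gbs(100):.2f} GB/s per direction")
```

Under these assumptions, a Gen4 x4 drive tops out just under 8 GB/s before overhead.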

768 threads in a server and benchmarks… For a fun test, you could run a CPU-rendered 3D FPS game. IIRC there is a CPU version of Crysis out there somewhere.

I have a few questions:

Why does the client need so many VMs to run a workload instead of using containers and drastically reducing overhead?

Second question: can you go buy a 9175F and test that one with gaming?

I’m surprised you ran benchmarks on such a 2P system with NPS=1 and “L3 as NUMA Domain” turned off.

Such a processor deserves NPS=4 plus L3_LLC=On so the Linux kernel can schedule properly.
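One way to confirm which NUMA layout the kernel actually sees under a given NPS setting is to read the per-node CPU lists Linux exposes at /sys/devices/system/node/node*/cpulist. This is a minimal sketch that parses that range format (for example "0-95,192-287"); the function names are hypothetical and the example string is illustrative, not taken from the tested system.

```python
import glob
import os


def parse_cpulist(text: str) -> list[int]:
    """Expand a Linux cpulist string such as '0-3,8,10-11' into CPU IDs."""
    cpus = []
    for part in text.strip().split(","):
        if not part:  # memory-only nodes have an empty cpulist
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_nodes(root: str = "/sys/devices/system/node") -> dict[str, int]:
    """Map each NUMA node directory to its CPU count (Linux only)."""
    counts = {}
    for path in sorted(glob.glob(os.path.join(root, "node[0-9]*"))):
        with open(os.path.join(path, "cpulist")) as f:
            counts[os.path.basename(path)] = len(parse_cpulist(f.read()))
    return counts


if __name__ == "__main__":
    print(numa_nodes())
```

With NPS=1 on a dual-socket system you would expect two nodes; NPS=4 or L3-as-NUMA should show more, smaller nodes.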