AMD EPYC 7F52 Market Positioning

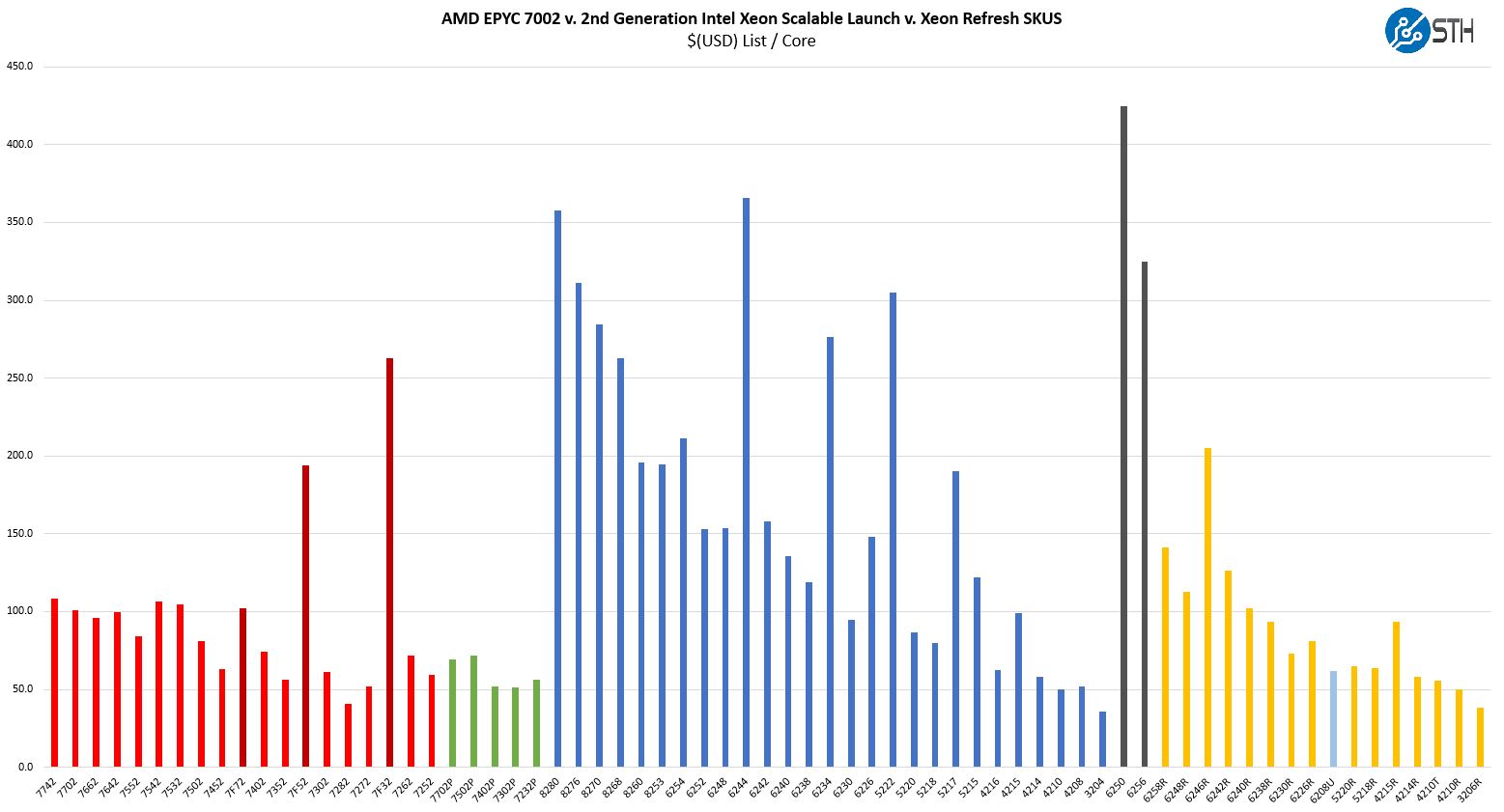

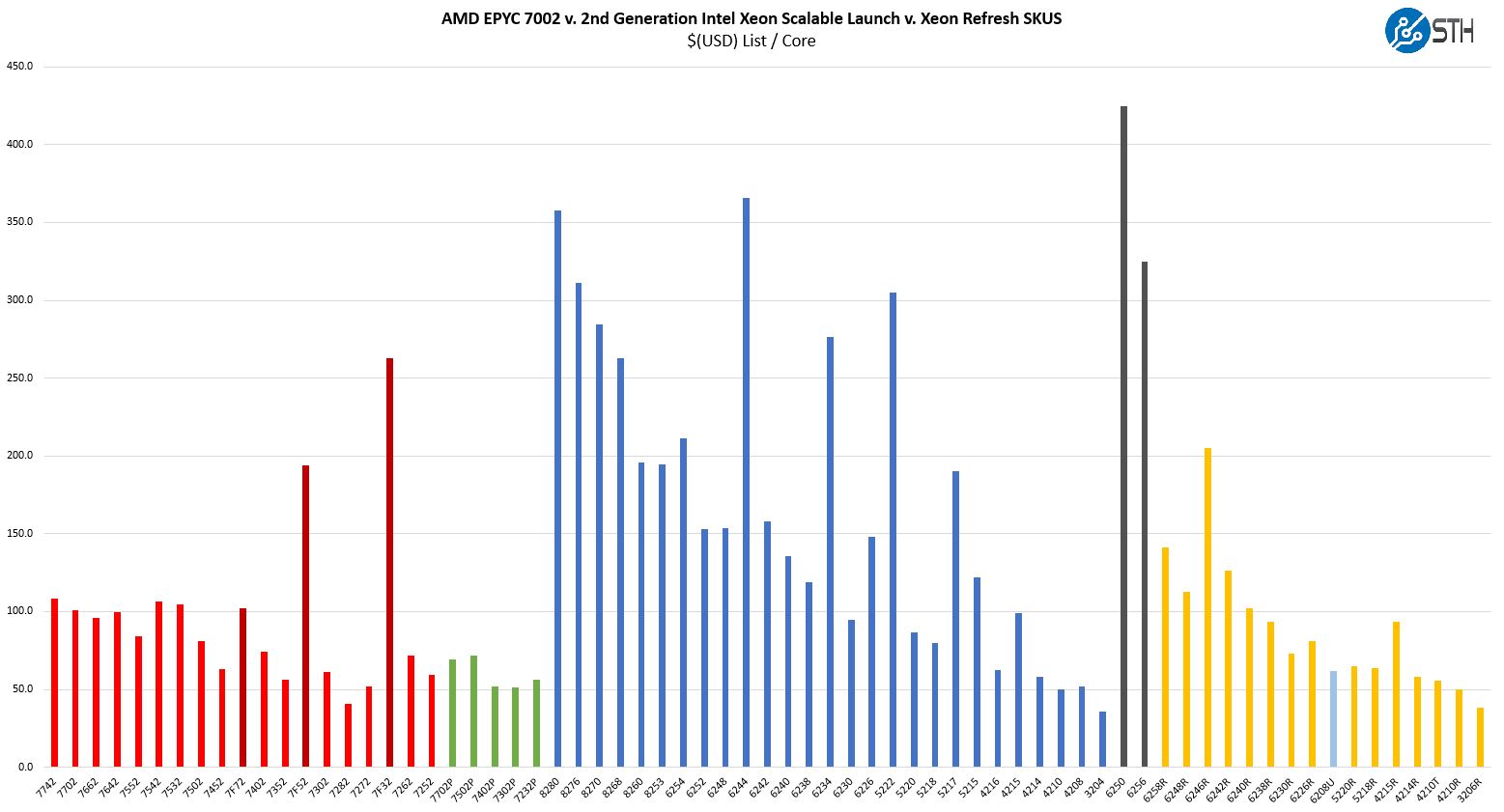

These chips are not released in a vacuum. Instead, they have competition on both the Intel and AMD sides. When you purchase a server and select a CPU, it is important to see the value of a platform versus its competitors. Here is a look at the overall competitive landscape in the industry:

We are going to first discuss AMD v. AMD competition, then look to Intel Xeon.

AMD EPYC 7F52 v. AMD EPYC

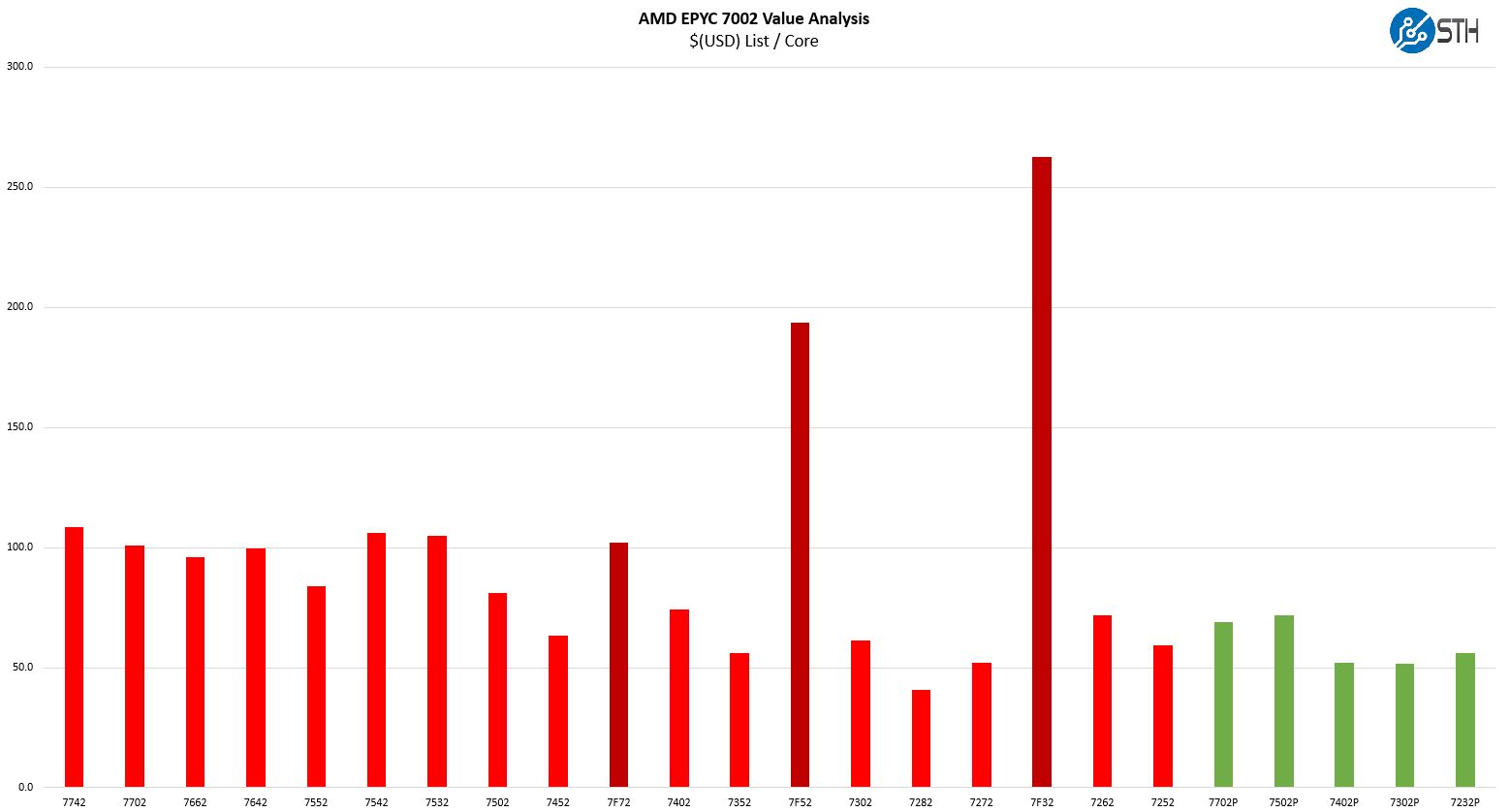

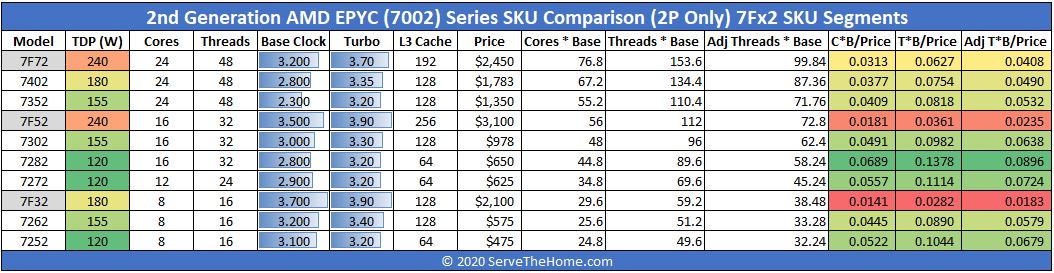

One of the nice features surrounding a frequency optimized part is that there are only a few effective competitors. There are the other 16-core SKUs, obviously, but then the rest is a survey since we are trying to hit a 16-core target for licensing purposes. Here is what the AMD stack looks like with the new SKUs including the EPYC 7F52.

As you can see, this is now the second-highest $/core part at $193.75 per core. For some context, the previous highest dollar-per-core part was the halo AMD EPYC 7742 64-core 225W TDP part at $108.59. Of course, those are intended for completely different markets. While the EPYC 7742 is designed to compete for open source workloads and heavy virtualization servers where outright core counts dominate, the EPYC 7F52 is focused squarely on delivering 16 fast cores. Interestingly enough, while the AMD EPYC 7742 is being directly targeted by Arm server vendors with high-core count parts, these 16-core parts optimized for per-core performance are not in the Arm vendors’ crosshairs yet.
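For readers who want to reproduce the $/core figures, here is a minimal sketch. The list prices of $3,100 and $6,950 are assumptions inferred from the per-core numbers and core counts quoted above, and the `price_per_core` helper is purely illustrative.

```python
# Assumed list prices (USD), inferred from the per-core figures in the text.
skus = {
    "EPYC 7F52": {"cores": 16, "list_price_usd": 3100},
    "EPYC 7742": {"cores": 64, "list_price_usd": 6950},
}


def price_per_core(list_price_usd, cores):
    """Return the list price divided by core count, rounded to cents."""
    return round(list_price_usd / cores, 2)


for name, sku in skus.items():
    per_core = price_per_core(sku["list_price_usd"], sku["cores"])
    print(f"{name}: ${per_core:.2f} per core")
```

Swapping in other SKUs from the price list makes it easy to see where each part lands on a per-core basis.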

In terms of 16-core parts, the AMD EPYC line has the EPYC 7302(P) which is effectively a lower cache and clock speed version of this chip but at a drastically lower price. The EPYC 7302 is 68% less expensive or $2122 less than the EPYC 7F52. If you, for example, were only licensing Windows Server 2019 Standard at $972 list price and not running other licensed software and did not need massive performance, then frankly the per-core licensing pressure is not great enough to overcome the chip pricing delta.
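The pricing delta against a single Windows Server license can be sketched roughly as follows. The $3,100 and $978 list prices are assumptions chosen to match the $2122 delta quoted above; the $972 license figure comes from the text. This is a simplified comparison that ignores other licensed software and any performance differences.

```python
# Assumed list prices (USD); only the $2122 delta and $972 license are from the text.
EPYC_7F52_PRICE = 3100
EPYC_7302_PRICE = 978
WS2019_STD_LICENSE = 972  # Windows Server 2019 Standard list price cited above

# Absolute and relative savings from choosing the EPYC 7302.
delta = EPYC_7F52_PRICE - EPYC_7302_PRICE
percent_cheaper = delta / EPYC_7F52_PRICE * 100

# How many such licenses the chip price difference alone would cover.
licenses_covered = delta / WS2019_STD_LICENSE

print(f"Price delta: ${delta}")
print(f"EPYC 7302 is about {percent_cheaper:.0f}% less expensive")
print(f"Delta equals about {licenses_covered:.1f}x one license")
```

With only one relatively inexpensive per-core license in play, the chip price difference is larger than the license itself, which is the point the paragraph makes.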

The AMD EPYC 7282 is also a 16-core CPU, but it is a completely different class of device. The EPYC 7282 is one of AMD’s 4-channel memory optimized SKUs. It has a lower TDP and less cache. You can learn about this optimization in our AMD EPYC 7002 Rome CPUs with Half Memory Bandwidth piece and its accompanying video.

Realistically, those looking for the AMD EPYC 7F52 are focused on maximum performance per core and are willing to spend the money for it, both in up-front costs and in operating costs.

We did want to discuss operating costs as well. There is another impact of the 240W TDP. Many 2U 4-node systems today are not designed to cool two 240W TDP CPUs per node, or 1.92kW of CPU TDP in a 2U box. We have seen many designs, such as the Gigabyte H262-Z62 2U 4-node server, that can handle 225W TDP CPUs but cannot, for example, populate all front-panel SSDs with CPUs of that TDP installed. Moving to a 240W TDP platform further exacerbates the concern. This is just on the cusp where we will see some vendors build exotic air cooling solutions and others start to yield to liquid-cooled designs.
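The 1.92kW figure follows from simple multiplication, which can be sketched as below. The `chassis_cpu_tdp_watts` helper is a hypothetical name used for illustration, and the calculation covers CPU TDP only, not memory, drives, fans, or power supply losses.

```python
def chassis_cpu_tdp_watts(nodes, sockets_per_node, cpu_tdp_watts):
    """Total CPU TDP for a multi-node chassis, in watts (CPUs only)."""
    return nodes * sockets_per_node * cpu_tdp_watts


# A 2U 4-node chassis with two sockets per node, as described in the text.
tdp_240 = chassis_cpu_tdp_watts(nodes=4, sockets_per_node=2, cpu_tdp_watts=240)
tdp_225 = chassis_cpu_tdp_watts(nodes=4, sockets_per_node=2, cpu_tdp_watts=225)

print(f"240W CPUs: {tdp_240 / 1000:.2f} kW of CPU TDP")
print(f"225W CPUs: {tdp_225 / 1000:.2f} kW of CPU TDP")
print(f"Extra CPU heat to remove: {tdp_240 - tdp_225} W")
```

The step from 225W to 240W parts adds 120W of CPU TDP per 2U chassis, on top of a thermal budget that some designs already struggle with.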

AMD EPYC 7F52 v. Intel

Although the AMD EPYC 7F52 pricing may look crazy to some, it is very much in line with Intel’s offerings. That is an enormous change in itself.

The first development has been the Big 2nd Gen Intel Xeon Scalable Refresh which up-ended the competitive landscape. Intel effectively dropped prices across most of its mainstream CPUs.

In the segment most competitive with the EPYC 7F52, Intel could not take the Xeon Gold 6242 and increase core counts as it did elsewhere in the segment. Instead, it increased TDP, cache, and clock speed bringing more performance to the segment with the Xeon Gold 6246R. At the same time, the Intel Xeon Gold 6226R became a strong value option.

In this segment, the Intel Xeon Gold 6226R is a lower-end part, which is reflected in its pricing and model number. The Intel Xeon Gold 6242, however, is a similar part that we now see as relevant only for quad-socket systems given the new refresh parts. The Intel Xeon Gold 6246R, from what we have seen, is going to have its hands full with the EPYC part on a CPU-performance basis. Beyond CPU performance, the Gold 6246R gets DL Boost (VNNI) instructions, AVX-512, and low-capacity Optane DCPMM support. The AMD EPYC 7F52 enables the more scalable platform with more PCIe lanes, PCIe Gen4, and more memory channels, capacity, and bandwidth.

Perhaps the biggest change is that AMD no longer is starting with deeply discounted pricing compared to Intel’s offerings. Instead, AMD is now pricing at parity with Intel and letting its performance and platform compete head-to-head.

Final Words

When it comes to market shifts, make no mistake, this is a big one. AMD is now competing head-to-head with Intel Xeon on performance even at high clock speeds and price. Doing this means AMD is also using a higher TDP part and that will practically constrain some deployment scenarios. A great case study will be seeing how the 2U 4-node market, popular in the segments the EPYC 7F52 is designed for, evolves with higher-TDP parts.

Beyond the AMD EPYC 7F52, the broader release of the EPYC 7Fx2 series is a big step for the company. To be frank, we want to see a 32-core 240W or even 280W TDP F-series part specifically for VMware workloads. The new AMD EPYC 7532 may seem like a step in that direction, but there is room to go bigger as AMD did with the new series. Still, AMD is now competing across 8, 16, 24, 32, 48, and 64 core high-frequency segments. This is largely due to having a socket designed for higher TDP and more area around the socket.

What is abundantly clear is that a bastion of Intel Xeon, the frequency optimized and high-cache parts, is under siege by AMD. The AMD EPYC 7F52 shows that it has a lot of performance available for the 16-core frequency optimized market. For the remainder of 2020, until both AMD and Intel refresh their portfolios, AMD may just have the better 16-core part with a more expansive platform, competitive clock-for-clock frequencies and performance, and the ability to use its massive L3 cache to change the game for many mainstream workloads in this market. We think AMD has a winner here that will bring competition back to the market.

These are the SKUs that should be thought of as True Workstation class parts and the higher clocks are welcome there along with the memory capacity and the full ECC memory types support.

So what about asking Dell and HP, and AMD, about any potential for that Graphics Workstation market segment on any 1P variants that may appear. I’m hoping that Techgage can get their hands on any 1P variants, or even 2P variants in a single socket compatible Epyc/SP3 Motherboard(With beefed up VRMs) for Graphics workloads testing.

While in the 7F52 each core gets 16MB of L3 cache, that core has only 2048 4k page TLB entries which only cover 8MB. It would be interesting to see how much switching to huge pages improves performance.

I find the 24 core part VERY interesting, just wish they released P versions of the F parts.

Your 16 core intel model is wrong.

16 cores from a 28 core die could still be set up to have the full 28 cores worth of L3 Cache.

Hi Jorgp2 – this was setup to show a conceptual model of the 16-core Gold 6246R which only has 35.75MB L3 cache, not the full 38.5MB possible with a 28 core die

Hi ActuallyWorkstationGrade, if you are going to convince HPE/Dell about 1CPU workstation then IMHO you will have hard fight as those parts are more or less Xeon W-22xx competitive which means if those makers already do have their W-22xx workstation, then Epyc workstation of the same performance will not bring them anything. Compare benchmark results with Xeon W-2295 review here on servethehome and you can see yourself.

Sometimes I read STH for the what. In this “review” the what was nowhere near as interesting as the “why”. You’ve got a great grasp on market dynamics

whats the sustained all core clock speed

I have said it before and this release only highlights the need for it:

You need to add some “few thread benchmarks” to your benchmark suite !

You are only running benchmarks that scale perfectly and horizontally over all cores. This does not massage the turbo modes of the cores nor highlights the advantages of frequency optimized SKUs.

In the real world, most complex environments are built with applications and integrations that absolutely do not scale well horizontally. They are most often limited by the performance and latency of a lot fewer than all cores. Please add some benchmarks that do not use all cores. You can use existing software and just limit the amount of threads. 4 – 8 threads would be perfectly realistic.

As you correctly say, the trend with per core licensing will only make this more relevant over time. Best is to start benching as soon as possible so you can build up some comparison data in your database.