AMD EPYC 7763 Market Positioning

These chips are not released in a vacuum; instead, they have competition on both the Intel and AMD sides. When you purchase a server and select a CPU, it is important to see the value of a platform versus its competitors. We are going to first discuss AMD v. AMD competition, then look at AMD v. Intel Xeon.

AMD EPYC 7763 v. AMD EPYC

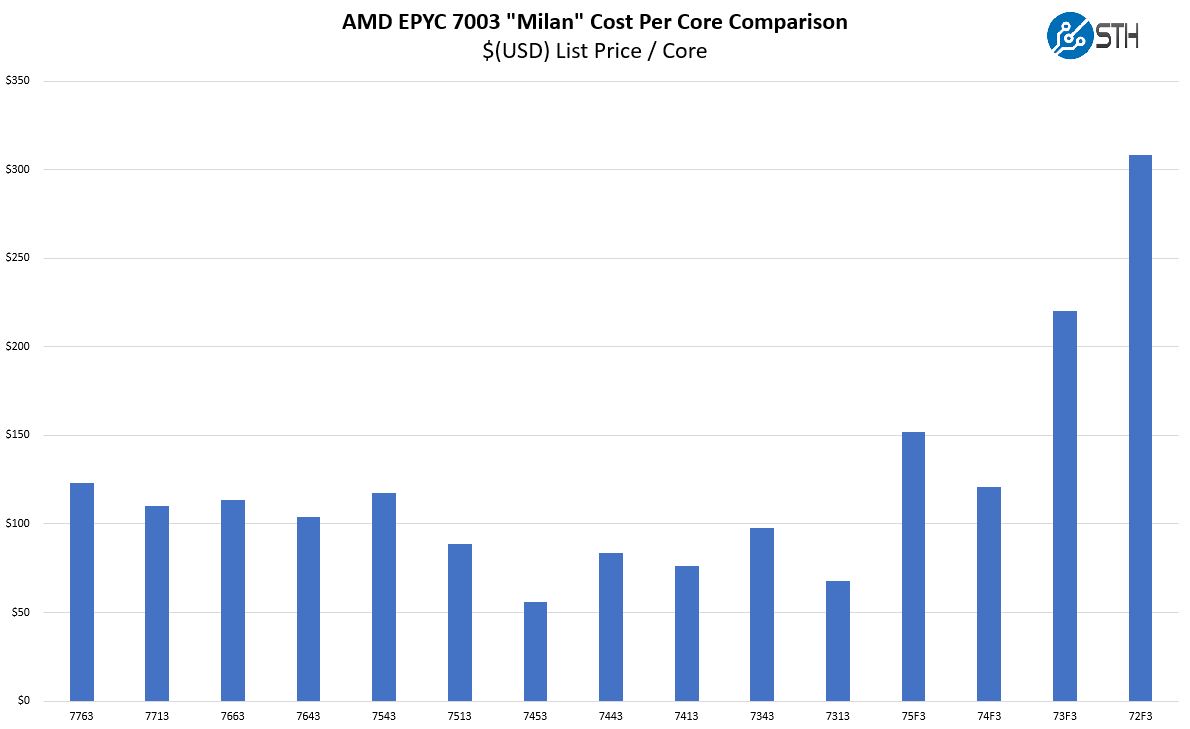

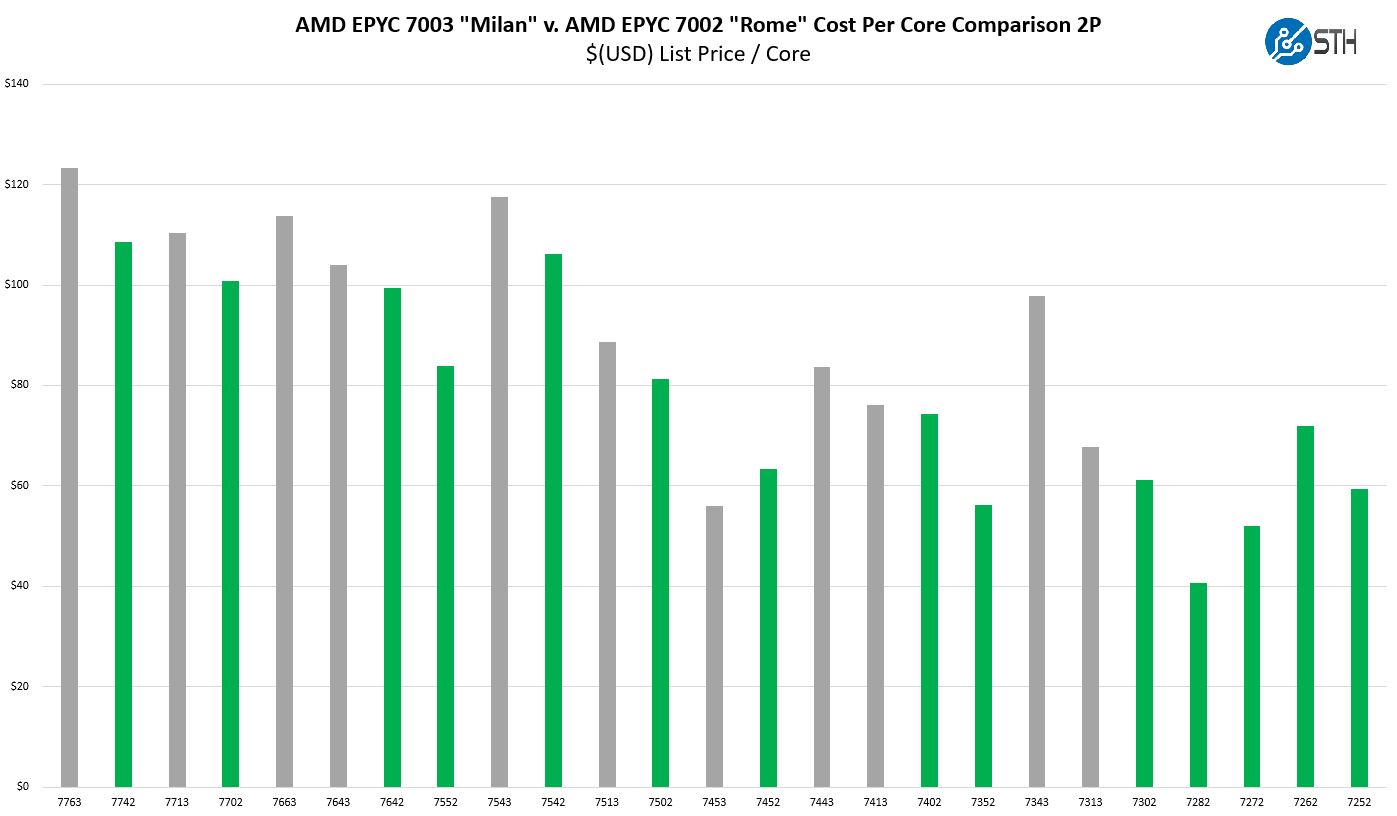

Here we have the main segments of the AMD EPYC and 2nd Generation Intel Xeon Scalable processors on a list price (USD) per core basis:

Excluding the high-frequency parts, we see that the EPYC 7763 is priced at the top end of the range with a $123 list price per core. This represents a decent generational increase as well:

There is a bit more going on here. As a 280W TDP CPU, this will require liquid cooling in some 2U 4-node systems where the EPYC 7713 will not. Most standard density servers will still be able to cool these CPUs, but this is just about at the limit. If one wants to get higher core/U density and remain on air cooling, the EPYC 7713 may be a better option.

Compared to the AMD EPYC 7742, we get higher power consumption and a higher cost, but with a lot more performance. Again, this is much closer to an EPYC 7H12 competitor.

AMD is keeping the pricing competitive when one compares the 32-core EPYC 7543 to the EPYC 7763. The EPYC 7543 has higher per-core performance, which is important, and it fits well within VMware vSphere/Microsoft Windows Server licensing models, but there is another aspect. If one simply needs more cores, consolidating to the EPYC 7763 may cost more on a per-core basis, but there are also cost savings from having half the number of systems.
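The consolidation trade-off above can be made concrete with a rough sketch. Everything here is illustrative: `fleet_cost` is a hypothetical helper, the $110 per-core figure for the 32-core part and the $6,000 per-server overhead (chassis, memory, NICs, and so on) are placeholder assumptions rather than quoted prices, and only the $123 per-core figure comes from the text. Dual-socket servers are also assumed.

```python
import math


def fleet_cost(cores_needed, cores_per_cpu, price_per_core_usd,
               sockets_per_server=2, per_server_overhead_usd=0.0):
    """Return (server_count, total_cost_usd) for a target core count.

    Hypothetical helper: a server is modeled as its CPUs plus a flat
    per-server overhead. Licensing, power, and rack space are ignored.
    """
    cores_per_server = cores_per_cpu * sockets_per_server
    servers = math.ceil(cores_needed / cores_per_server)
    cpu_cost = servers * sockets_per_server * cores_per_cpu * price_per_core_usd
    return servers, cpu_cost + servers * per_server_overhead_usd


# Placeholder inputs: only the $123/core figure is taken from the article.
target_cores = 1024
overhead = 6000.0  # assumed non-CPU cost per server, USD

servers_32c, cost_32c = fleet_cost(target_cores, 32, 110.0,
                                   per_server_overhead_usd=overhead)
servers_64c, cost_64c = fleet_cost(target_cores, 64, 123.0,
                                   per_server_overhead_usd=overhead)

print(f"32-core fleet: {servers_32c} servers, ${cost_32c:,.0f}")
print(f"64-core fleet: {servers_64c} servers, ${cost_64c:,.0f}")
```

With these placeholder numbers, the 64-core fleet needs half as many servers, and the per-server overhead saved outweighs the higher per-core CPU price. With a small enough overhead the result flips, which is why the actual system cost matters.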

AMD EPYC 7763 v. Intel Xeon

We are still a few days away from the Intel Xeon Ice Lake launch and cannot provide a detailed analysis at this point. Intel has already discussed how it will catch up with many of AMD’s features such as memory configurations and PCIe Gen4. Still, we expect that AMD will remain ahead in per-socket general-purpose performance simply due to raw core count. AMD's multi-die package gives it the yields needed to build larger chips.

Looking at older generation Xeons, first and second-generation Intel Xeon Scalable processors topped out at 28 cores. Not only does AMD have more cores with the EPYC 7763, but it has significantly more. Intel has more power budget per core to push clock speeds up at its high end, despite being on an older generation process node. The Intel Xeon Gold 6258R has a $141 list price per core, which is still higher than the EPYC 7763 at $123/core. The challenge Intel faces until Ice Lake both launches and becomes available in OEM systems is that its current lineup faces AMD EPYC 7763 competition with:

- More than a 2x core advantage

- Higher-end platform features (8 channel DDR4-3200, PCIe Gen4, confidential computing)

- Lower cost per core

That is not a great spot to be in, so we are going to instead discuss more competitive details when we can share the Ice Lake Xeon SKU stack, platform details, and performance.
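The core-count and cost-per-core points in the list above follow from simple arithmetic, sketched below. The per-core list prices ($123 and $141) and the 28-core Xeon figure come from the text; the 64-core count for the EPYC 7763 is its published specification. The `Cpu` class is a hypothetical helper, and the per-socket prices it derives are approximations because the per-core figures are rounded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    name: str
    cores: int
    list_price_per_core_usd: float  # rounded per-core figure from the article

    @property
    def approx_list_price_usd(self) -> float:
        # Approximate only: reconstructed from a rounded per-core price.
        return self.cores * self.list_price_per_core_usd


epyc_7763 = Cpu("AMD EPYC 7763", cores=64, list_price_per_core_usd=123.0)
xeon_6258r = Cpu("Intel Xeon Gold 6258R", cores=28, list_price_per_core_usd=141.0)

# Core advantage per socket.
core_ratio = epyc_7763.cores / xeon_6258r.cores

# Relative saving in list price per core when choosing the EPYC part.
per_core_saving = 1 - (epyc_7763.list_price_per_core_usd
                       / xeon_6258r.list_price_per_core_usd)

print(f"Core advantage: {core_ratio:.2f}x")                    # 2.29x
print(f"Per-core list price saving: {per_core_saving:.1%}")   # 12.8%
```

This covers list price only. Street pricing, platform cost, and per-core performance are not modeled.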

Another aspect of the competition is the existing Xeon installed base. Some companies are happy with their older Xeon E5 systems, so moving to a new AMD platform does not make sense to them even to this day, let alone moving to the highest-end part that AMD has to offer. One has to remember that there is an entire market conditioned to purchase low-cost and low-power Xeons to fit into existing racks. The AMD EPYC 7763 is likely a better option for many of those organizations, but it takes a lot to move the market on extreme consolidation ratios.

Final Words

Our sense is that the AMD EPYC 7763 is going to be a top-tier product for 2021. This chip is the product of AMD's decision to move to multi-chip-per-socket integration. When Ice Lake was intended to launch in 2019, Intel was still focused on monolithic dies. Now, Intel’s new CEO Pat Gelsinger is all-in on multi-tile or multi-die integration, even though Ice Lake, launching in 2021, was designed as a monolithic-die-era chip. Given just that, the AMD EPYC 7763 will still be a strong competitor even as Intel increases core counts, updates its architecture, and shows its suite of supporting technology.

There are effectively two types of customers that will focus on the top-end EPYC 7763 SKU. The first is customers who can see the benefits of consolidating more systems and sockets onto far fewer. Sales teams and customers who understand and can take advantage of this are going to end up doing very well in this cycle. The other set of customers is likely to be those looking at high-performance computing options. Here we do not just mean traditional HPC but also those building accelerated systems. Using up to 160x PCIe Gen4 lanes in a system and filling those lanes with high-end storage and networking is costly. In such systems, 10% lower accelerator performance can cost more than one of the CPUs, which is why these higher-end CPUs will get a lot of opportunities in that space.

Overall, this is a great chip, but its power, its pricing, and the fact that it will require sales and customer education efforts for a major segment of the market will limit its reach. Still, for those that read STH, even if you choose not to use a SKU like this today, it is worth getting your organization ready for the future. Core counts and servers are only getting bigger. By next year, there will be some amazing new technology that will further this trend, so preparing with discussions now may pay off in a few quarters.

Please, please, please can you guys occasionally use a slightly different phrase to “ These chips are not released in a vacuum instead”.

Other than that, keep up the good work

That Dell system appears quite tasty, a nice configuration/layout design, actually surprised HPE never sent anything over for this mega-HPC roundup.

280W TDP is I guess in-line with NVIDIA’s new power hungry GPUs, Moore's law is now kicking in full-steam. Maybe a CXL style approach to CPUs could be the future, need more cores? Expand away. Rather than be limited by fixed 2/4/8 proc board concepts of the past.

“These chips are not released in a vacuum instead, only available to the Elon Musks of the tech world”

280W TDP is far less than NVIDIA’s GPUs. The A100 80GB SXM4 can run at 500W if the system is capable of cooling that.

“Not only does AMD have more cores than the EPYC 7763, but it has significantly more. ”

This wording seems…suspect. Perhaps substitute the word “with” in place of current “than”?

“I guess in-line with” – meaning the rise in recent power hungry tech, tongue-in-cheek ~ the A100s at full-throttle can bring a grid down if clustered-up to skynet ai levels.

@John Lee, good review, thanks! You always do good reviews. Can you look at the last sentence of the second last paragraph though? It is not clear what you are trying to say.

“Using up to 160x PCIe Gen4 lanes in a system and filling those lanes with high-end storage and networking is costly 10% lower accelerator performance can cost more than one of the CPUs which is why these higher-end CPUs will get a lot of opportunities in that space”

to Drewy:

Aren’t all chips(1) manufactured in a negative pressure environment, i.e. “clean-room” conditions achieved by removal of air and all the floaty bits in it that are prejudicially unwelcome anywhere in the chip “birthing”(2) process (neo-natal facilities in hospitals actually have less stringent requirements for receiving the thumbs up (certificate of cleanliness) required prior to inviting the pregnant woman, and the “catcher” doctor/midwife)?

(1) integrated circuits comprising semiconductors and thin wires etched onto silicon wafers, sealed at the factory and festooned with pins that allow communicating with the innards via all manner of electronic signalling, for disambiguation from other things we refer to as chips.

(2) we do not really mean birthing, the process by which hardware upon which artificial intelligence, whom identify by whichever pronouns they prefer, exist in a relationship to these chips that is as yet to be determined. Do AI beings exist “on top” of chips? As a consequence of threshold complexity unavoidably gives rise to consciousness as an emergent property [chicken or egg first] or is the AI ontological phenomenon entirely encompassed within software, and thus their entry into existence is prefaced by the command or perhaps as “superuser” (I would very much prefer my creator to have the title “super”, wouldn’t you?) Much like our universe was likely created in a vacuum, the silicon chips were also created in a vacuum , and finally, artificial intelligence becoming aware of itself would likely have an abrupt beginning to its own history (an infinity of nothing then “hello world!”).

I am with you in protesting this asinine assertion that creation happens in any other regime besides voids and vacuums. “In the beginning… there was sweet f[_]( I{ all NOTHING! Fortunately #nothing# was inherently unstable.