AMD EPYC 7402P Benchmarks

For this exercise, we are using our legacy Linux-Bench scripts, which provide the cross-platform “least common denominator” results we have been using for years, as well as several results from our updated Linux-Bench2 scripts. Starting with our 2nd Generation Intel Xeon Scalable benchmarks, we are adding a number of our workload testing features to the mix as the next evolution of our platform.

At this point, our benchmarking sessions take days to run and we are generating well over a thousand data points. We are also running workloads for software companies that want to see how their software works on the latest hardware. As a result, this is a small sample of the data we are collecting and can share publicly. Our position is always that we are happy to provide some free data but we also have services to let companies run their own workloads in our lab, such as with our DemoEval service. What we do provide is an extremely controlled environment where we know every step is exactly the same and each run is done in a real-world data center, not a test bench.

We are going to show off a few results, and highlight a number of interesting data points in this article.

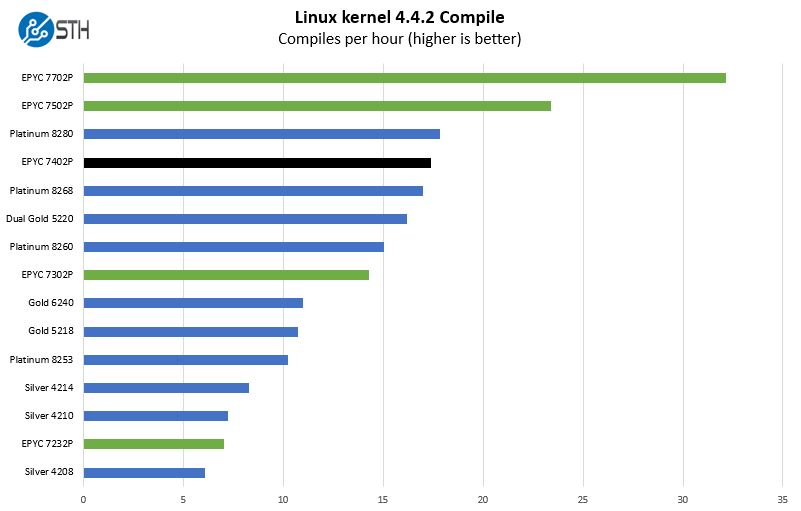

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task is simple: we take the Linux 4.4.2 kernel from kernel.org and a standard configuration file, then build the standard auto-generated configuration using every thread in the system. We are expressing results in terms of compiles per hour to make the results easier to read:
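The conversion to compiles per hour is simple arithmetic. As a minimal sketch, assuming the script records wall-clock seconds for one full compile (the function name and the sample timing are illustrative, not our actual script or a measured result):

```python
def compiles_per_hour(compile_seconds: float) -> float:
    """Convert the wall-clock time of one kernel compile into compiles per hour."""
    if compile_seconds <= 0:
        raise ValueError("compile time must be positive")
    return 3600.0 / compile_seconds


# Hypothetical timing, not a measured result: a 90-second compile.
print(compiles_per_hour(90.0))  # 40.0
```

A higher number is better, which makes the chart easier to read than raw compile times where lower is better.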

The performance of the AMD EPYC 7402P is easy to plot among other AMD EPYC offerings, but it is difficult to plot against Intel Xeon CPUs. What is the comparison we should use? The Intel Xeon Platinum 8260 is perhaps the most directly competitive. It is a $4700 part, but Intel now sells a variant called the Intel Xeon Gold 6212U. The Intel Xeon Gold 6212U has the same features as the Platinum 8260, but it is limited to single-socket operation and costs around $2000. You can read about that part’s genesis in: Intel Xeon Follows AMD EPYC Lead Offering Discounted 1P Only SKUs. The Xeon Platinum 8260 is everywhere, while the Gold 6212U is harder to purchase and is usually a special-order part.

We could use dual CPUs, but that means Intel is disadvantaged with two NUMA nodes instead of one like AMD. It also will generally mean Intel is using significantly more power and will cost more. The upside is that it will have closer to the amount of AMD EPYC 7402P I/O, but still will fall a bit short.

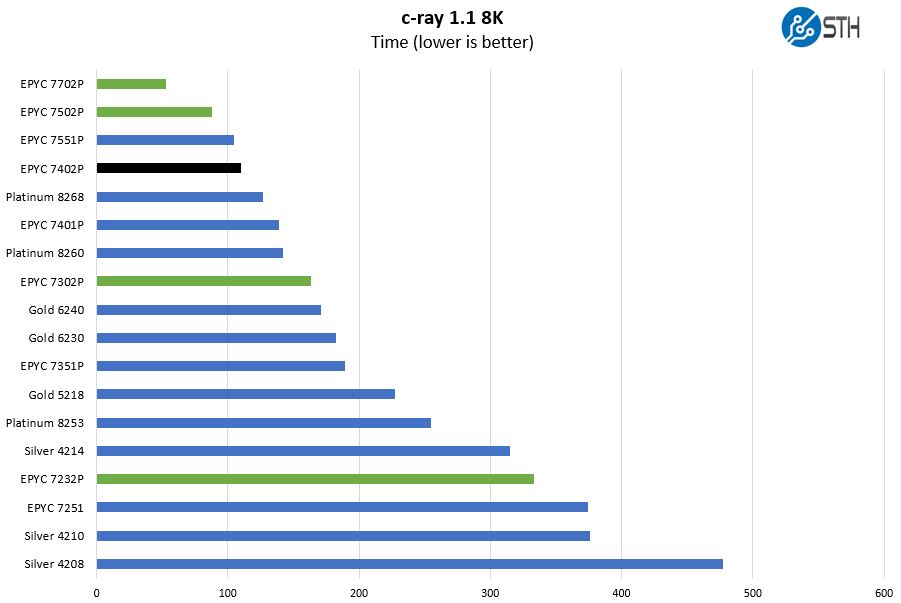

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular to show differences in processors under multi-threaded workloads. We are going to use our 8K results which work well at this end of the performance spectrum.

This is not the best cross-architecture benchmark. However, we have been using it for a long time, and it scales well up to hundreds of threads. AMD tends to do well here because the workload takes advantage of EPYC’s low-level caching.

When the AMD EPYC 7401P was the new chip on the market, we assumed that the architectural improvements were responsible for its outsized gains versus Intel. Our hypothesis was that we would see the same general performance on the AMD EPYC 7402P but perhaps a few percent faster. Instead, we are seeing what would have been a ~30 core hypothetical AMD EPYC 7001 part here with the AMD EPYC 7402P.

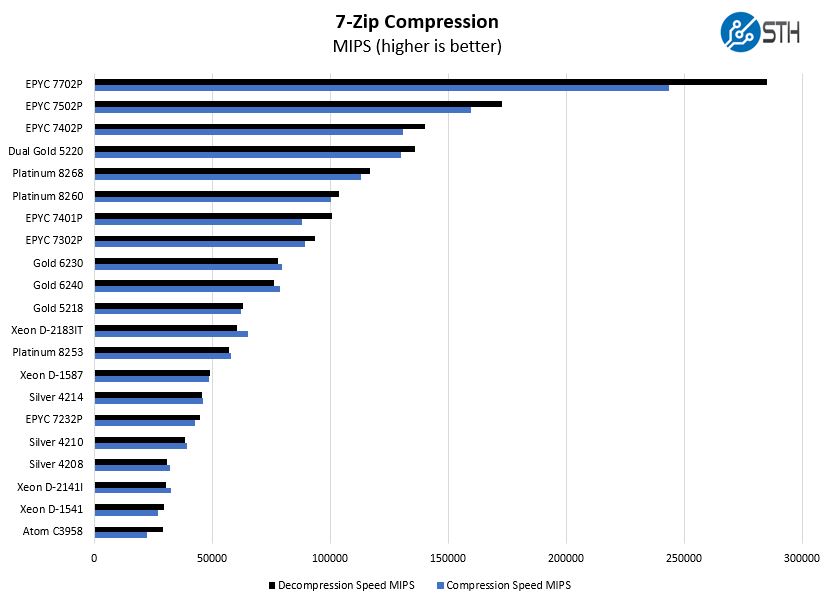

7-zip Compression Performance

7-zip is a widely used compression/decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

Here we brought in both Intel Xeon Silver and Intel Xeon D results. There is a segment of the market that relies upon many small nodes in clusters. If you need a compact form factor, the AMD EPYC 7402P does not make sense on a node-for-node basis. The AMD EPYC 3000 series is your best bet there. See AMD EPYC 3000 Coverage From STH Your Guide for our comprehensive coverage.

Still, we are at the point here where the single-socket AMD EPYC 7402P will replace four Intel Xeon D-2141I nodes at lower initial cost and power consumption. Even if memory capacity is the concern, the AMD EPYC 7402P supporting up to 4TB means AMD can consolidate entire clusters of these smaller nodes into a single machine. That has trickle-down impacts, such as requiring fewer network switch ports and PDU ports, which increases the TCO benefits.

If you have an office or home lab, or a small colocation cluster that is using 250-300W in multiple nodes, consolidating to a single AMD EPYC 7402P may make sense.

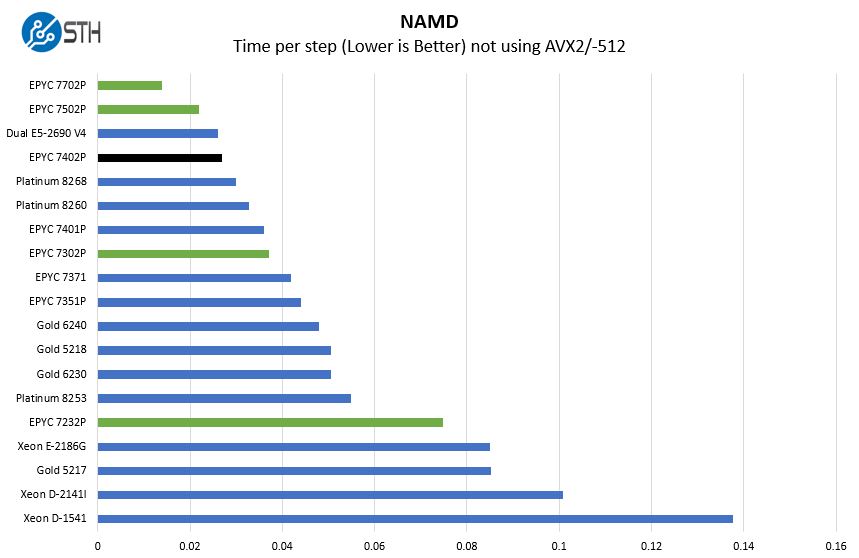

NAMD Performance

NAMD is a molecular modeling benchmark developed by the Theoretical and Computational Biophysics Group in the Beckman Institute for Advanced Science and Technology at the University of Illinois at Urbana-Champaign. More information on the benchmark can be found here. With GROMACS, we have been working hard to support AVX-512 as well as AVX2 on the AMD Zen architecture. The Zen2 version for the AMD EPYC 7002P series is not complete yet (more on that later), so this is actually a better comparison point right now. Here are the comparison results for the legacy data set:

In this chart, we pulled in a dual Intel Xeon E5-2690 V4 result. These were $2000 each upper mid-range 14 core and higher clock speed CPUs that were the current generation only about 18 months prior to this review. In two years, AMD has delivered $4000 of dual-socket CPU power for around one third the price, in a single socket, with more PCIe I/O, PCIe bandwidth, RAM capacity, and with lower power consumption.
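The "around one third" figure can be checked with quick arithmetic. This sketch uses the roughly $2000 per-CPU price quoted above for the Xeon E5-2690 V4 and the $1250 price used for the EPYC 7402P elsewhere in this review; the variable names are only for illustration:

```python
XEON_E5_2690_V4_PRICE = 2000  # USD each, approximate, as quoted in the text
EPYC_7402P_PRICE = 1250       # USD, as quoted elsewhere in this review

# Two Xeon CPUs are needed for the dual-socket comparison system.
dual_xeon_cost = 2 * XEON_E5_2690_V4_PRICE
price_fraction = EPYC_7402P_PRICE / dual_xeon_cost

print(f"Dual Xeon CPU cost: ${dual_xeon_cost}")                    # $4000
print(f"EPYC 7402P as a fraction of that: {price_fraction:.2f}")  # 0.31
```

This only compares CPU prices. It leaves out the second socket's motherboard, cooling, and power costs, which would widen the gap further.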

That is a key reason the AMD EPYC 7002 series is game-changing. Instead of delivering incremental performance, AMD is delivering such value that the TCO calculations, even at their lower-end, may make sense to see 1 or 2 year old systems ripped and replaced to get the operational savings. At a minimum, it makes those platforms harder to re-order.

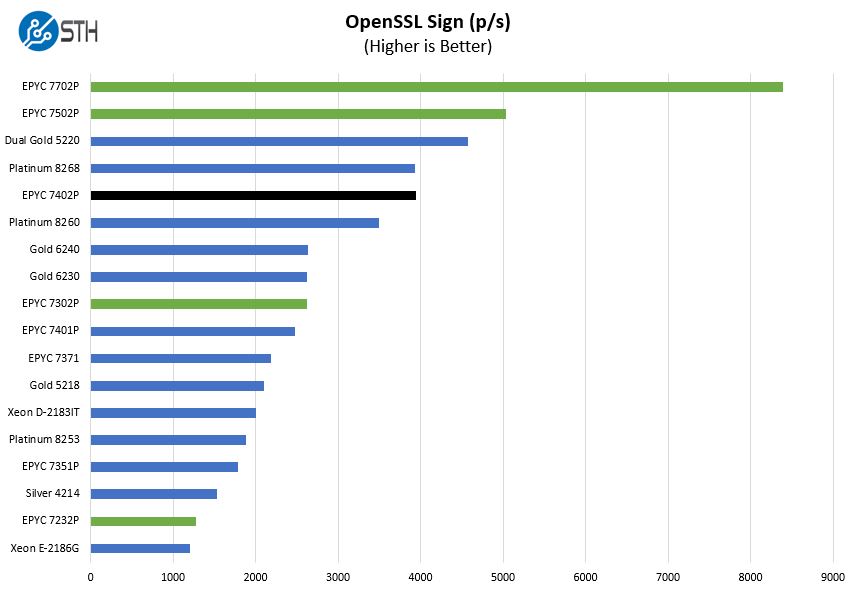

OpenSSL Performance

OpenSSL is widely used to secure communications between servers. This is an important protocol in many server stacks. We first look at our sign tests:

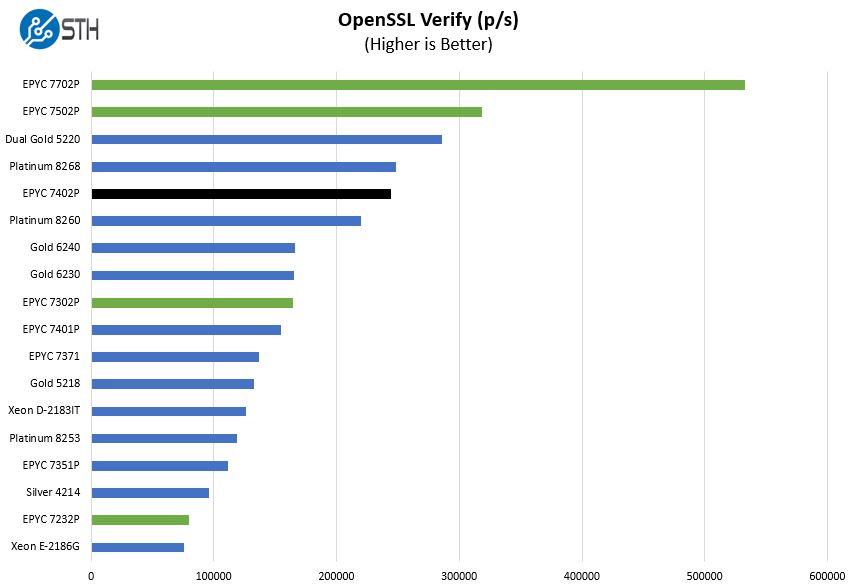

Here are the verify results:

Again we find the AMD EPYC 7402P trading performance core-for-core with the Intel Xeon Platinum 8200 line. With the new and higher clock speeds of the AMD EPYC 7402P, combined with the larger caches, AMD has closed the per-core performance gap that existed in the previous generations.

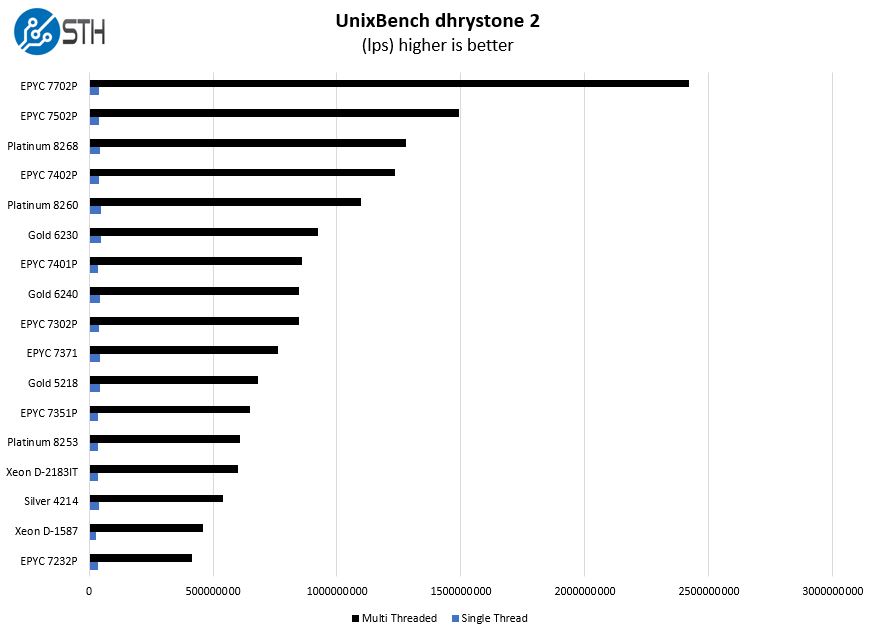

UnixBench Dhrystone 2 and Whetstone Benchmarks

Some of the longest-running tests at STH are the venerable UnixBench 5.1.3 Dhrystone 2 and Whetstone results. They are certainly aging. However, we constantly get requests for them, and many angry notes when we leave them out. UnixBench is widely used, so we are including it in this data set. Here are the Dhrystone 2 results:

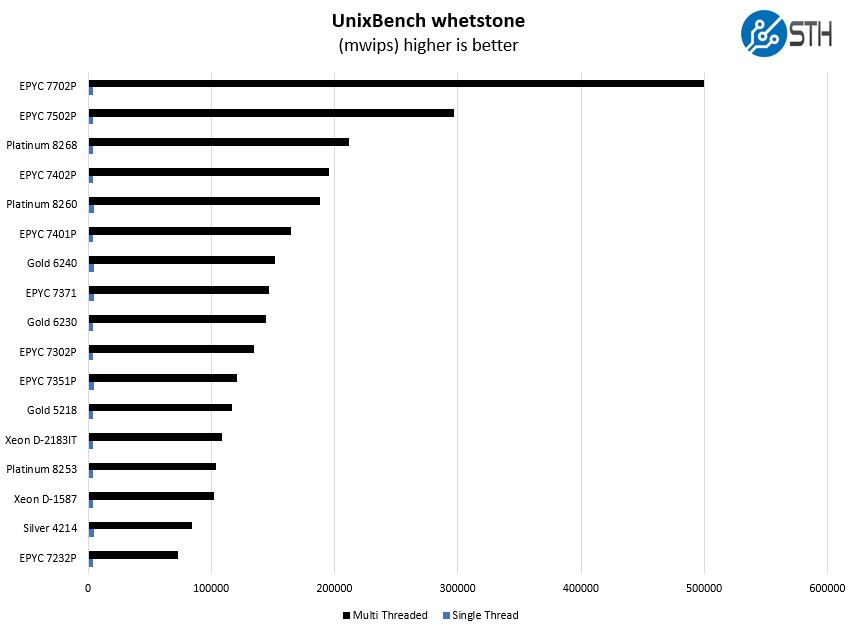

Here are the Whetstone results:

Again we see the AMD EPYC 7402P fall somewhere between the Intel Xeon Platinum 8268 and Platinum 8260. The AMD EPYC 7402P cannot claim that it is the fastest 24-core part. The base models of those Intel chips are around $4700 to $6300, without accounting for the additional I/O (2nd CPU needed) or additional memory capacity (M or L SKU needed). Our sense is that a $1250 CPU providing the same number of cores, with per-core performance in the range of $5000-5500 parts, is still a compelling story for AMD. The EPYC 7402P may not be able to match the Platinum 8268, but it also uses less power and has more memory capacity and I/O, so it is not an outright win for the Platinum 8268.

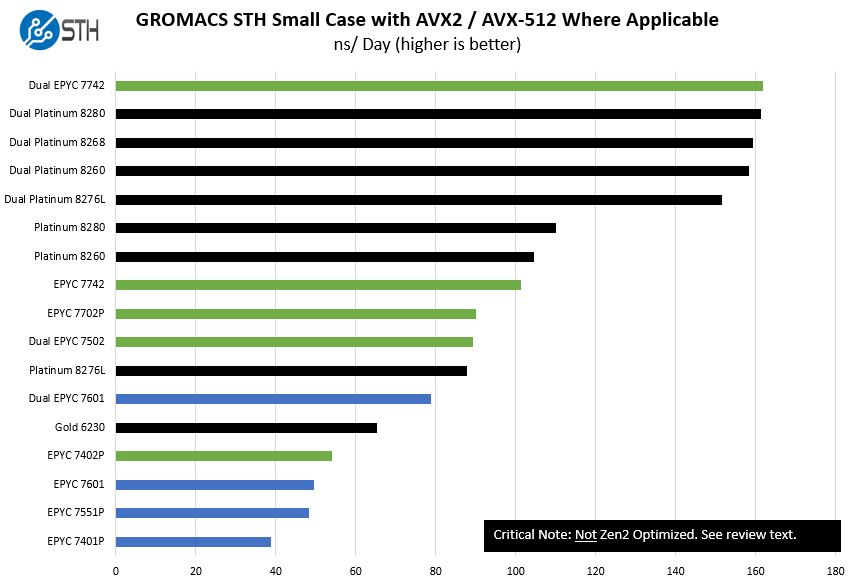

GROMACS STH Small AVX2/ AVX-512 Enabled

During our initial benchmarking efforts, we found that our version of GROMACS was taking advantage of AVX-512 on Intel CPUs. We also found that it was not taking proper advantage of the AMD EPYC 7002 architecture. From our original AMD EPYC 7002 Series Rome Delivers a Knockout piece:

We have had one of the lead developers on our dual AMD EPYC 7742 machine and changes are being upstreamed. The initial results were putting dual AMD EPYC 7742’s at around 2.7x of dual Intel Xeon Gold 6148F parts which are a go-to HPC chip. The above will shift significantly once this is changed, but not in time for this AMD EPYC 7402P review.

Instead of continuing to publish this benchmark, we are going to hold off until later in 2019 when those results get upstreamed. At worst, as shown above, the chips are about even. When properly optimized, they are well ahead of Intel’s offerings at a given price point.

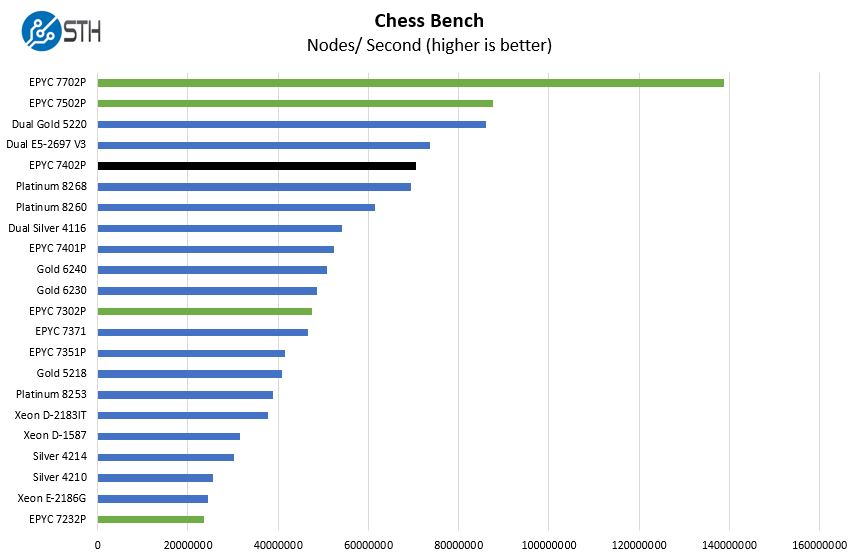

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received a number of requests to bring back chess benchmarking. We have been profiling systems and are ready to start sharing results:

We added dual Intel Xeon E5-2697 V3 processors to this mix to go back to a 3-5 year replacement cycle. Here, the AMD EPYC 7402P is able to nearly match a virtually top-end dual-socket system from the Intel Xeon E5-2600 V3 era. This is what bringing competition back to the market looks like.

We also wanted to point out that while Intel delivered around 30% more performance at a given price point with the 2nd generation Intel Xeon Scalable mid-range CPUs (e.g. Silver 4200, Gold 5200, and Gold 623x), they did so largely by adding cores. You can see our Intel Xeon 6230 review as a great example of this. AMD’s new architecture has yielded a similar gain at the same core count which you can see by comparing the EPYC 7401P and the AMD EPYC 7402P.
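One rough way to separate the two effects is to divide the overall gain by the change in core count. The sketch below is illustrative only: the core counts and the 30% figures are placeholder inputs rather than measured results, and it assumes performance scales linearly with cores, which is a simplification.

```python
def per_core_gain(perf_ratio: float, old_cores: int, new_cores: int) -> float:
    """Return the performance ratio left over per core after removing
    the effect of a changed core count (assumes linear core scaling)."""
    return perf_ratio / (new_cores / old_cores)


# Hypothetical: 30% faster overall while going from 8 to 10 cores.
added_cores = per_core_gain(1.30, old_cores=8, new_cores=10)
# Hypothetical: 30% faster overall at an unchanged 24 cores.
same_cores = per_core_gain(1.30, old_cores=24, new_cores=24)

print(f"{added_cores:.2f}x per core")  # 1.04x per core
print(f"{same_cores:.2f}x per core")   # 1.30x per core
```

With these placeholder inputs, most of the first gain comes from the extra cores, while the second gain is entirely per-core.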

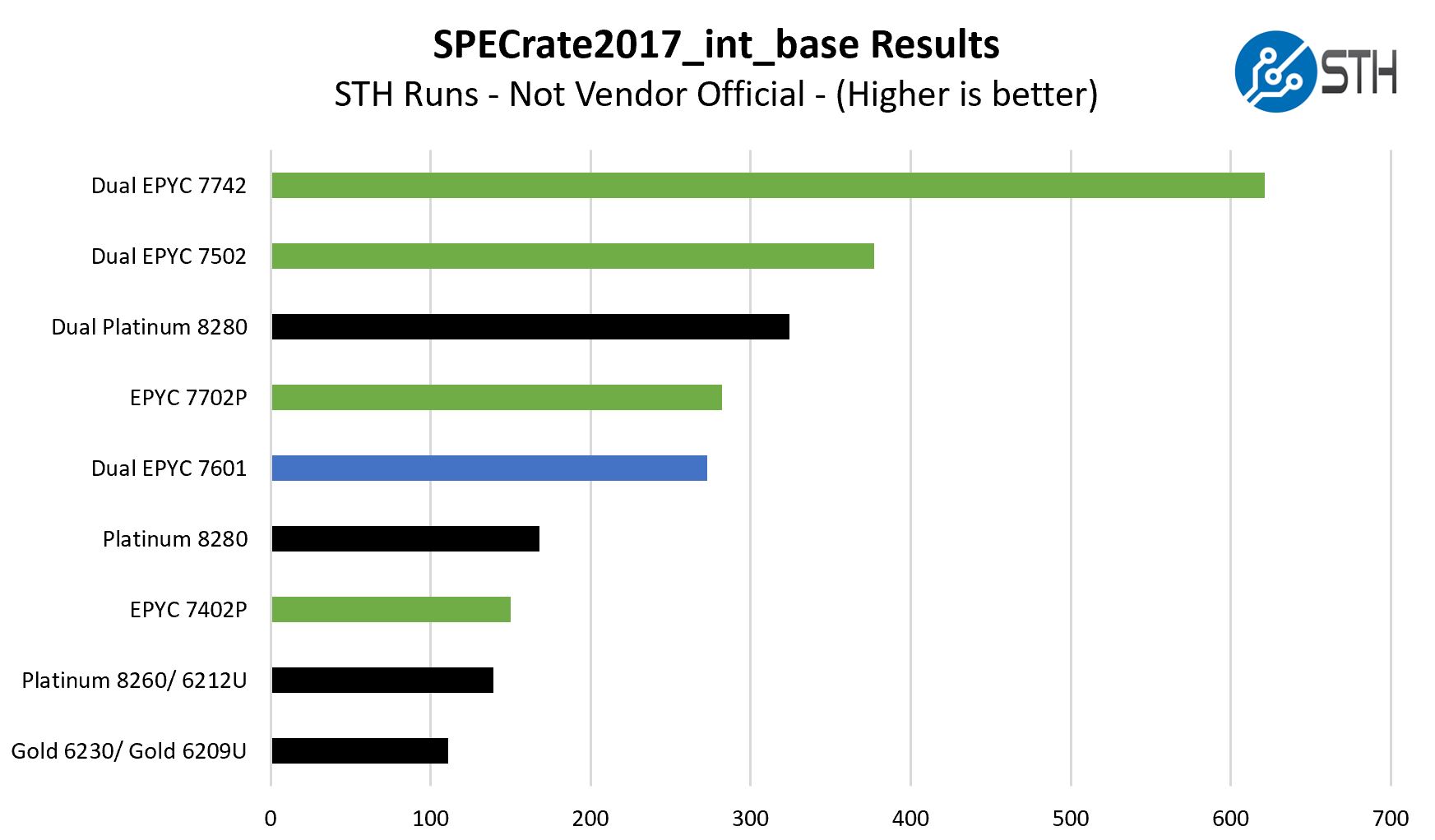

SPECrate2017_int_base

The first workload we wanted to look at is SPECrate2017_int_base performance. Specifically, we wanted to show the difference between what we get with Intel Xeon icc and AMD EPYC AOCC results. We expect server vendors get better results than we do, but this gives you an idea of where we are at:

This chart comes from our original AMD EPYC 7002 Series Rome Delivers a Knockout piece, and it has a number of the comparison points we wanted, including the EPYC 7402P. You can see it compares well even on industry-standard workloads used in RFPs such as SPECrate2017_int_base. Again, we urge you to check your vendor’s benchmarks for actual RFPs since OEMs get better numbers than we do.

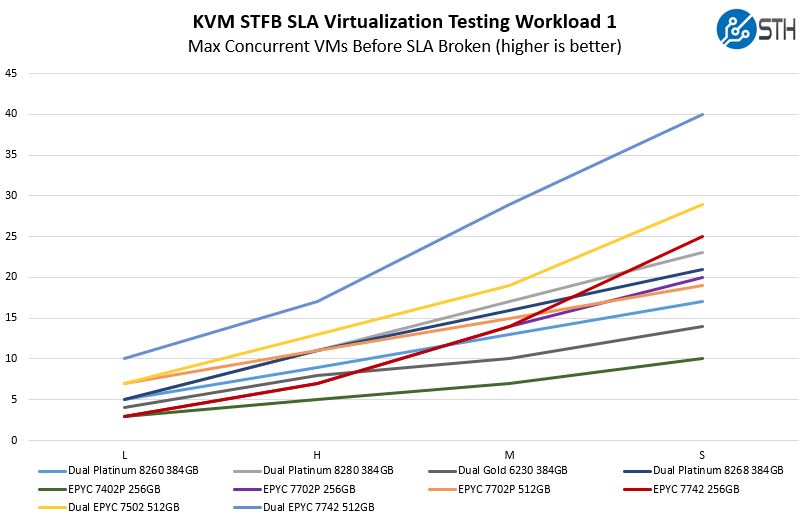

STH STFB KVM Virtualization Testing

One of the other workloads we wanted to share is from one of our DemoEval customers. We have permission to publish the results, but the application itself being tested is closed source. This is a KVM virtualization-based workload where our client is testing how many VMs it can have online at a given time while completing work under the target SLA. Each VM is a self-contained worker.
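As a rough illustration of that kind of metric, the sketch below picks the largest VM count whose measured completion time still meets the SLA. The data, the SLA value, and the function name are all hypothetical; the customer's application and its real thresholds are not public.

```python
def max_vms_within_sla(results: dict[int, float], sla_seconds: float) -> int:
    """Return the largest VM count whose completion time meets the SLA.

    `results` maps a VM count to the measured completion time in seconds.
    Returns 0 if no tested VM count meets the SLA.
    """
    passing = [vms for vms, seconds in results.items() if seconds <= sla_seconds]
    return max(passing, default=0)


# Hypothetical measurements: VM count -> completion time in seconds.
runs = {8: 41.0, 16: 47.5, 24: 58.0, 32: 73.2}
print(max_vms_within_sla(runs, sla_seconds=60.0))  # 24
```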

We wanted to show an example of where the AMD EPYC 7402P was not on top. Here, there are two drivers. First, the Intel systems are dual socket with more cores. Second, the 8x 32GB (256GB) configuration was the lowest we tested. The key takeaway here is that a single AMD EPYC 7402P system with 256GB RAM is less than half the price of the dual Intel Xeon Platinum 8260 system, yet offers more than half the performance. In the world of vSAN, Ceph, and other software-defined storage solutions used for hyper-converged clusters, adding more nodes with more storage and networking I/O can help the overall system TCO.
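The takeaway follows from simple ratios: a system that costs less than half as much and delivers more than half the performance has higher performance per dollar. A minimal sketch with placeholder ratios (not figures from our testing):

```python
def relative_perf_per_dollar(perf_ratio: float, price_ratio: float) -> float:
    """Performance per dollar of system A relative to system B.

    perf_ratio  = performance of A / performance of B
    price_ratio = price of A / price of B
    A value above 1.0 means A delivers more performance per dollar.
    """
    return perf_ratio / price_ratio


# Hypothetical: A delivers 60% of B's performance at 45% of B's price.
value = relative_perf_per_dollar(perf_ratio=0.60, price_ratio=0.45)
print(f"{value:.2f}")  # 1.33
```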

Next, we are going to look at the AMD EPYC 7402P market positioning and give our final words.

Thanks for the review, always appreciated!

Could you show the result of lstopo? Preferably with all pcie slots populated.

Also it would be very interesting to run the CUDA P2P benchmark with the slots populated with Nvidia GPUs.

Very interesting review, thanks for putting in the solid effort and detail. The new EPYC 7002 CPUs are certainly the best choice in most situations.

How will the 24 core EPYC 7402P chip work with virtualization of 4 core and 8 core VMs? Will the CCX layout put this particular CPU at a disadvantage?

What’s that writing on the green part in black pen?

Great write up! Thank you for including your results for SPECrate2017_int_base. Would it be possible to include the floating point “rate” and “speed” varieties too next time around?

Stellar review STH

Thanks for the review. Is the clock speed high enough on the 7401P to use in a workstation?

Patrick, wondering if you’ve tried the 7402 in a Dell poweredge 7415 server to see if it will work.

A lot there.

k8s – we can see. Perhaps in the forums when it gets open. I think we had one or will have one in a review coming up.

Esben – It worked well enough that in our KVM testing we did not see any issues. VMware/ Hyper-V may be different of course. New architectures tend to get patches over time.

HankW – We have a lot of CPUs. Marking the model number on the carrier helps tell if a CPU’s thermal paste is not cleaned. It is a lab hack.

David – we can look into that. Another option is to just look at official vendor runs on the spec.org site. Vendors have teams that focus on optimizations.

Martin – I have thought about it. It is likely usable, but I would still suggest using a desktop chip if possible. The 4.0-5.0GHz speeds are nice on modern desktop CPUs.

Steve – not yet. Dell said they will announce Rome systems soon. Stay tuned.

@Martin: The most recent ThreadRipper leak suggests that AMD will introduce a workstation tier in the TR line. If true, you can reasonably call it a desktop Epyc – 8 RAM channels + (L)R-DIMM support, 96-128 PCIe lanes, and most probably higher frequencies.

Nice review! Do we know the relative performance per core at the same frequency on Intel Gold 6140 (1gen cascade), Intel Gold 6240 vs Epyc 7×02? In other words, core IPC performance vs. the competition?

/TE

Expect to see something from Dell next week.

Great review – thanks!

Any plans to compare 7402P performance with dual 7272 (2×12 cores; 2x $625) and/or dual 7282 (2×16 cores; 2x $650) which cost almost exactly the same but have twice RAM bandwidth and 2x higher RAM size limit?

Also, any plans to benchmark dual 7452 which seems to be as fast or faster than dual Xeon 8280 in many (most?) cases of server workloads?

@ Esben Møller Barnkob

In my data center I have a couple of Dell R7425s with dual 7401 CPUs and there are no problems with virtualization of 4 or 8 core VMs, including running DBs on them.

I can’t make sense of most of the graphs. Not in terms of “oh, this processor is faster/slower”, but in terms of the colour choices.

In the first chart, Linux Kernel Compile Benchmarks, green bars are AMD processors, blue bars are Intel processors and the black bar is the 7402P (the reviewed processor).

In C Ray 8K Benchmark, however, the green bars are still AMD processors, and the black bar is still the 7402P, but the blue bars are a mix of Intel and AMD processors (7551P, 7401P, 7351P, 7251).

In NAMD Benchmark, the blue bars are Intel plus 7401P, 7371, 7351P, which is different from the mix in C Ray 8K.

OpenSSL Sign and Verify Benchmarks, blue is Intel plus 7401P, 7371, 7351P, as in the NAMD benchmark.

GROMACS STH Small Case Not Zen2 Optimized Benchmark … Black is now Intel, Green is still AMD (but now has the tested 7402P) and blue is Dual 7601, 7601, 7551P, 7401P

Chess Benchmark, blue is Intel plus 7401P, 7371, 7351P

SPECrate2017_int_base Benchmark, blue is now the tested 7402P, and black is Intel.

In an otherwise excellent article, the lack of consistency in the colours chosen for the graphs is really detracting, as it makes it difficult (for me at least) to skim the graphs and still get a decent idea of what they’re showing, because it forces me to constantly check the labels for each bar.

7402P is a great CPU, and what makes it even better is power consumption: under sustained heavy loads the 1014 platform hovers at just above 200W, making it easy to fill a standard 40U 8kW rack without worrying about going over the limit while keeping every server configuration identical. Unfortunately, Supermicro ships the platform with ridiculously overpowered dual 500W power supplies, operating in sub-optimal power range when redundant with no add-in cards.

Comparable platforms with Intel’s 62{09,10,12}U installed, in my experience, perform slightly better in single-threaded tasks, but their peak power consumption is significantly higher than in platforms with Rome, even

I’m looking forward to seeing my fleet upgraded with the new AMD systems. Coincidentally, I’ll be upgrading dual 2630v3/4s, just like this article suggests; I figured a safe bet would be about 1.5x old servers per new one, but time will tell if I was right.