This is our first formal AMD EPYC 7002 review after our AMD EPYC 7002 Series Rome Delivers a Knockout launch piece. Although John has a few reviews queued up, I wanted to take the P-series AMD EPYC processor reviews myself. Specifically, we are starting with this AMD EPYC 7402P review for two reasons. First, it is perhaps the best value CPU on the market today. Second, at STH, we use a gaggle of EPYC 7401P systems in our lab. The AMD EPYC 740xP series is a single-socket only part with 24 cores. Prices are slightly higher than the previous generation, but they are well worth it. In this review, we are going to show you what makes this solution so compelling.

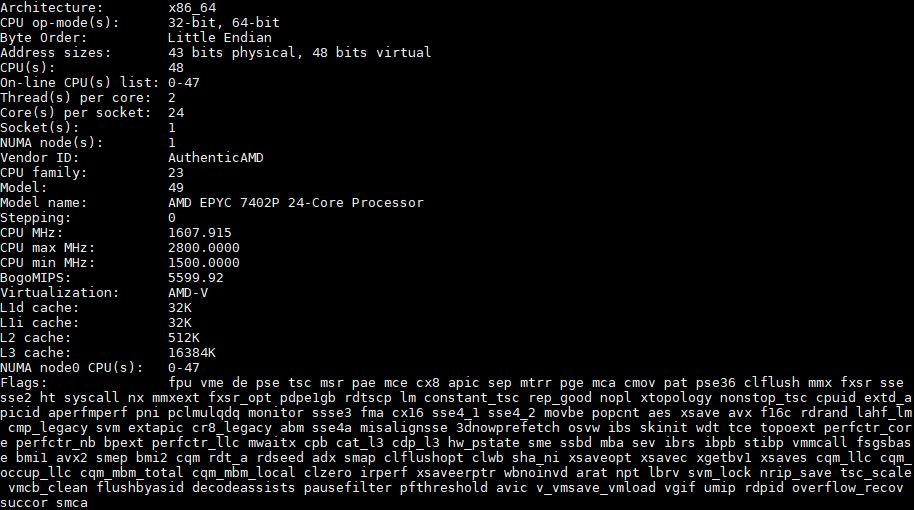

Key stats for the AMD EPYC 7402P: 24 cores / 48 threads with a 2.8GHz base clock and 3.35GHz turbo boost. There is 128MB of onboard L3 cache. The CPU has a 180W TDP, and the cTDP is configurable from 165W to 200W for different applications. These are $1250 list price parts.

Here is what the lscpu output looks like for an AMD EPYC 7402P:

128MB of L3 cache is a massive number. There is also 12MB of L2 cache. For some perspective, the competitive Intel Xeon Platinum 8268, Platinum 8260, and Gold 6252 are 24-core parts with a relatively measly 35.75MB of cache. A key design principle we hear in the computer industry today is keeping data as close to the compute as possible and reusing that data as much as possible. The massive 128MB L3 cache helps achieve this by minimizing trips to memory. That comes on top of a stronger memory subsystem: 8 channels of DDR4-3200 compared to 6 channels of DDR4-2933 for the current generation Intel Xeon Platinum and Xeon Gold.
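To put the memory channel comparison in numbers, here is a quick back-of-the-envelope sketch. It assumes theoretical peak bandwidth is simply channels × MT/s × 8 bytes per transfer (one 64-bit DDR4 channel). The helper name is ours, and real sustained bandwidth will be noticeably lower than these peaks.

```python
# Theoretical peak DRAM bandwidth for the two memory configurations above.
# Assumption: peak = channels x MT/s x 8 bytes (64-bit DDR4 channel);
# sustained bandwidth in practice is lower.

def peak_dram_bandwidth_gbs(channels, mega_transfers):
    """Return theoretical peak bandwidth in GB/s (10^9 bytes per second)."""
    bytes_per_transfer = 8  # one 64-bit DDR4 channel
    return channels * mega_transfers * 1e6 * bytes_per_transfer / 1e9

epyc_bw = peak_dram_bandwidth_gbs(8, 3200)  # 8x DDR4-3200: 204.8 GB/s
xeon_bw = peak_dram_bandwidth_gbs(6, 2933)  # 6x DDR4-2933: about 140.8 GB/s

print(f"EPYC 7002: {epyc_bw:.1f} GB/s peak")
print(f"Xeon:      {xeon_bw:.1f} GB/s peak")
print(f"Ratio:     {epyc_bw / xeon_bw:.2f}x")
```

The large L3 reduces how often that bandwidth is needed at all, which is why the two advantages stack rather than overlap.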

Also at STH, we have shown the virtue of the AMD EPYC single-socket offerings. AMD discounts the EPYC 7402P by about 30% for being a single-socket-only design. That puts the chip in the same price bracket as the lower clock speed, 16-core Intel Xeon Gold 5218. The single-socket story also means that AMD is delivering up to 128 PCIe Gen4 lanes from one CPU, while Intel can deliver 48 PCIe Gen3 lanes per processor. Even against two Intel CPUs, AMD has more PCIe lanes and double the bandwidth per lane. See our recent Gigabyte R272-Z32 Review for how a single $1250 list price AMD EPYC part can handle more NVMe storage than two Intel Xeon Platinum 8280s or even the Intel Xeon Platinum 9200 series.
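The lane-count argument can be sketched as per-direction link bandwidth. This assumes PCIe Gen3 runs at 8 GT/s and Gen4 at 16 GT/s per lane, both with 128b/130b encoding, and it ignores protocol overhead (packet headers, flow control), so real throughput is lower. The helper names are illustrative only.

```python
# Rough per-direction PCIe bandwidth from the lane counts in the text.
# Assumptions: Gen3 = 8 GT/s, Gen4 = 16 GT/s per lane, 128b/130b encoding,
# protocol overhead ignored.

GT_PER_S = {3: 8.0, 4: 16.0}  # transfer rate per lane by PCIe generation

def lane_gbs(gen):
    """Usable GB/s per lane, per direction, after 128b/130b encoding."""
    return GT_PER_S[gen] * (128 / 130) / 8  # 8 bits per byte

def aggregate_gbs(lanes, gen):
    """Total per-direction bandwidth across all lanes."""
    return lanes * lane_gbs(gen)

epyc_1p = aggregate_gbs(128, 4)     # one EPYC 7402P, up to 128 Gen4 lanes
xeon_2p = aggregate_gbs(2 * 48, 3)  # two Xeon CPUs at 48 Gen3 lanes each

print(f"1x EPYC 7402P: {epyc_1p:.0f} GB/s per direction")
print(f"2x Xeon:       {xeon_2p:.0f} GB/s per direction")
print(f"Ratio:         {epyc_1p / xeon_2p:.2f}x")
```

Because the encoding is identical across the two generations, the ratio reduces to lanes × transfer rate: 128 × 16 versus 96 × 8.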

A Word on Power Consumption

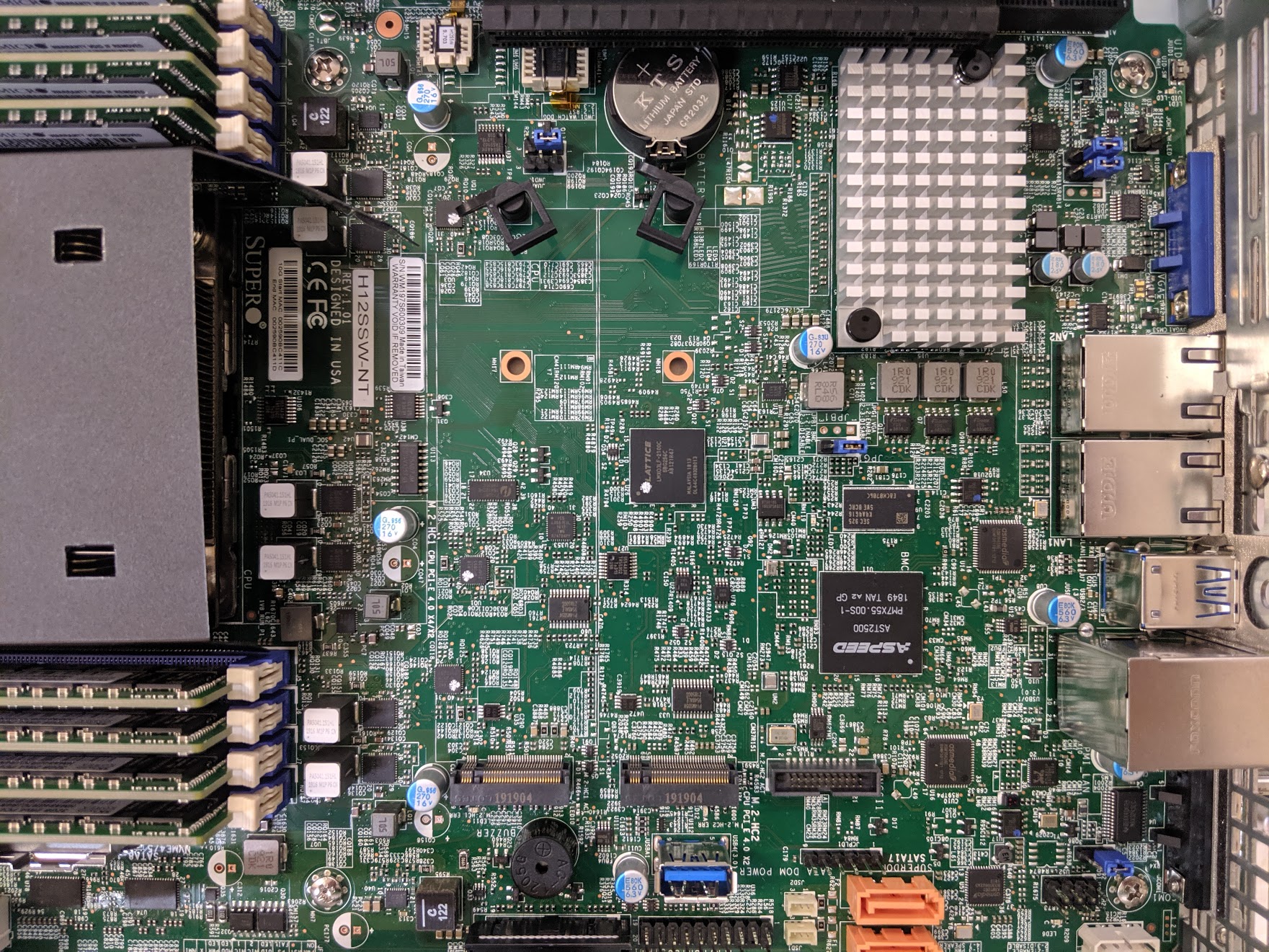

We tested these in a number of configurations. The lowest spec configuration we used is a Supermicro AS-1014S-WTRT. This had two 1.2TB Intel DC S3710 SSDs along with 8x 32GB DDR4-3200 RAM. One could get power consumption a bit lower, since this system uses an onboard Broadcom BCM57416-based 10GBase-T connection, but there were no add-in cards.

Even so, here are a few data points for the AMD EPYC 7402P in this configuration with the sliders pushed all the way to performance mode and a 180W TDP:

- Idle Power (Performance Mode): 99W

- STH 70% Load: 185W

- STH 100% Load: 212W

- Maximum Observed Power (Performance Mode): 242W
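A simple way to read these numbers is to look at how much each load level adds over idle. The sketch below only reuses the four readings above; the per-core figure is wall power for the whole server divided by 24 cores, so it includes SSDs, memory, NIC, and fans and is a coarse indicator rather than CPU-only power. The dictionary keys and helper name are our own labels.

```python
# Wall power readings from the Supermicro AS-1014S-WTRT configuration above.
readings_w = {
    "idle": 99,
    "sth_70pct_load": 185,
    "sth_100pct_load": 212,
    "max_observed": 242,
}

def deltas_over_idle(readings):
    """Watts each reading adds on top of the idle baseline."""
    base = readings["idle"]
    return {name: watts - base for name, watts in readings.items()}

for name, delta in deltas_over_idle(readings_w).items():
    print(f"{name:16s} {readings_w[name]:4d} W  (+{delta} W over idle)")

# Coarse whole-system figure: includes drives, DIMMs, NIC, and fans.
per_core = readings_w["sth_100pct_load"] / 24
print(f"Wall power per core at STH 100% load: {per_core:.1f} W")
```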

As a 1U server, this does not have the most efficient cooling; still, these are excellent power figures. The impact is simple: if one can consolidate smaller nodes onto an AMD EPYC 7402P system, there are power efficiency gains to be had as well.

Next, let us look at our performance benchmarks before getting to market positioning and our final words.

Thanks for the review, always appreciated!

Could you show the result of lstopo? Preferably with all PCIe slots populated.

Also, it would be very interesting to run the CUDA P2P benchmark with the system populated with NVIDIA GPUs.

Very interesting review, thanks for putting in the solid effort and detail. The new EPYC 7002 CPUs are certainly the best choice in most situations.

How will the 24-core EPYC 7402P chip handle virtualization of 4-core and 8-core VMs? Will the CCX layout put this particular CPU at a disadvantage?
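One way to reason about this question is to derive the CCX layout from the cache figures. A minimal sketch, assuming each Zen 2 CCX carries 16MB of L3 (so 128MB implies 8 CCXs of 3 cores each) and that a VM's vCPUs are pinned to consecutive physical cores numbered CCX by CCX. `ccx_span` and `min_ccx_span` are hypothetical helpers; lstopo on real hardware is the way to confirm the actual topology and numbering.

```python
import math

L3_TOTAL_MB = 128    # EPYC 7402P spec
L3_PER_CCX_MB = 16   # assumption: 16MB of L3 per Zen 2 CCX
CORES = 24

CCX_COUNT = L3_TOTAL_MB // L3_PER_CCX_MB  # 8
CORES_PER_CCX = CORES // CCX_COUNT        # 3

def ccx_span(vm_cores, first_core=0):
    """CCXs touched when a VM is pinned to consecutive cores from first_core.

    Assumes cores are numbered contiguously within each CCX.
    """
    first_ccx = first_core // CORES_PER_CCX
    last_ccx = (first_core + vm_cores - 1) // CORES_PER_CCX
    return last_ccx - first_ccx + 1

def min_ccx_span(vm_cores):
    """Best case: the VM starts on a CCX boundary."""
    return math.ceil(vm_cores / CORES_PER_CCX)

for vm in (4, 8):
    print(f"{vm}-core VM spans at least {min_ccx_span(vm)} CCXs")
```

Under these assumptions a 4-core VM always crosses a CCX boundary on this part, which is exactly the case the question is worried about; whether that matters in practice depends on the workload and the hypervisor's scheduler.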

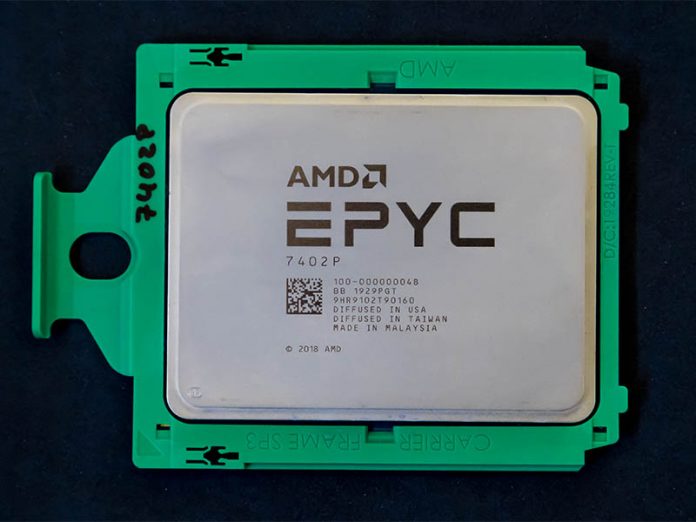

What’s that writing on the green part in black pen?

Great write-up! Thank you for including your results for SPECrate2017_int_base. Would it be possible to include the floating point “rate” and “speed” varieties next time around too?

Stellar review, STH.

Thanks for the review. Is the clock speed high enough on the 7401P to use in a workstation?

Patrick, wondering if you’ve tried the 7402 in a Dell PowerEdge R7415 server to see if it will work.

A lot there.

k8s – we can see. Perhaps in the forums once it opens up. I think we have had one, or will have one, in an upcoming review.

Esben – It worked well enough that in our KVM testing we did not see any issues. VMware/Hyper-V may be different, of course. New architectures tend to get patches over time.

HankW – We have a lot of CPUs. Marking the model number on the carrier helps tell if a CPU’s thermal paste is not cleaned. It is a lab hack.

David – we can look into that. Another option is to just look at official vendor runs on the spec.org site. Vendors have teams that focus on optimizations.

Martin – I have thought about it. It is likely usable, but I would still suggest using a desktop chip if possible. The 4.0-5.0GHz speeds are nice on modern desktop CPUs.

Steve – not yet. Dell said they will announce Rome systems soon. Stay tuned.

@Martin: The most recent Threadripper leak suggests that AMD will introduce a workstation tier in the TR line. If true, you can reasonably call it a desktop EPYC – 8 RAM channels + (L)RDIMM support, 96-128 PCIe lanes, and most probably higher frequencies.

Nice review! Do we know the relative performance per core at the same frequency for the Intel Xeon Gold 6140 (first-generation Xeon Scalable), Intel Xeon Gold 6240, and EPYC 7x02? In other words, how does core IPC compare with the competition?

/TE

Expect to see something from Dell next week.

Great review – thanks!

Any plans to compare 7402P performance with dual 7272 (2×12 cores; 2x $625) and/or dual 7282 (2×16 cores; 2x $650), which cost almost exactly the same but have twice the RAM bandwidth and twice the maximum RAM capacity?

Also, any plans to benchmark dual 7452, which seems to be as fast as or faster than dual Xeon 8280 in many (most?) server workloads?
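The CPU-cost side of that comparison is easy to tabulate from the list prices quoted in the comment. This sketch counts CPU list price only; it ignores platform costs such as a dual-socket motherboard and the extra DIMMs needed to populate 16 memory channels instead of 8. `cpu_cost_and_cores` is a hypothetical helper.

```python
# CPU list prices and core counts as quoted in the comment above.
configs = {
    "1x EPYC 7402P": (1, 1250, 24),
    "2x EPYC 7272": (2, 625, 12),
    "2x EPYC 7282": (2, 650, 16),
}

def cpu_cost_and_cores(sockets, unit_price, cores_per_cpu):
    """Total CPU list price and total core count for a configuration."""
    return sockets * unit_price, sockets * cores_per_cpu

for name, (sockets, price, cores) in configs.items():
    total, total_cores = cpu_cost_and_cores(sockets, price, cores)
    print(f"{name}: ${total} for {total_cores} cores (${total / total_cores:.2f}/core)")
```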

@Esben Møller Barnkob

In my data center I have a couple of Dell R7425s with dual 7401 CPUs, and there are no problems with virtualization of 4- or 8-core VMs, including running DBs on them.

I can’t make sense of most of the graphs. Not in terms of “oh, this processor is faster/slower”, but in terms of the colour choices.

In the first chart, Linux Kernel Compile Benchmarks, green bars are AMD processors, blue bars are Intel processors and the black bar is the 7402P (the reviewed processor).

In C Ray 8K Benchmark, however, the green bars are still AMD processors, and the black bar is still the 7402P, but the blue bars are a mix of Intel and AMD processors (7551P, 7401P, 7351P, 7251).

In NAMD Benchmark, the blue bars are Intel plus 7401P, 7371, 7351P, which is different from the mix in C Ray 8K.

In OpenSSL Sign and Verify Benchmarks, blue is Intel plus 7401P, 7371, 7351P, as in the NAMD benchmark.

In GROMACS STH Small Case Not Zen2 Optimized Benchmark, black is now Intel, green is still AMD (but now includes the tested 7402P), and blue is Dual 7601, 7601, 7551P, 7401P.

In Chess Benchmark, blue is Intel plus 7401P, 7371, 7351P.

In SPECrate2017_int_base Benchmark, blue is now the tested 7402P, and black is Intel.

In an otherwise excellent article, the lack of consistency in the colours chosen for the graphs really detracts: it makes it difficult (for me at least) to skim the graphs and still get a decent idea of what they’re showing, because it forces me to constantly check the labels for each bar.

7402P is a great CPU, and what makes it even better is power consumption: under sustained heavy loads the 1014 platform hovers at just above 200W, making it easy to fill a standard 40U 8kW rack without worrying about going over the limit while keeping every server configuration identical. Unfortunately, Supermicro ships the platform with ridiculously overpowered dual 500W power supplies, which operate in a sub-optimal efficiency range when running redundant with no add-in cards.
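The rack math in this comment can be checked with a rough sketch. It assumes 1U per server, ignores switches, PDU losses, and headroom, and assumes the two redundant PSUs share the load evenly; `servers_per_rack` and `per_psu_load_fraction` are hypothetical helpers. Note how quickly "just above 200W" eats into the last slot.

```python
# Rough rack density and PSU loading check.
# Assumptions: 1U servers, no switches or PDU losses counted,
# redundant PSUs share the load evenly.

def servers_per_rack(budget_w, rack_u, server_w, server_u=1):
    """Servers that fit by both power budget and rack space."""
    by_power = int(budget_w // server_w)
    by_space = rack_u // server_u
    return min(by_power, by_space)

def per_psu_load_fraction(server_w, psu_rated_w, psu_count=2):
    """Fraction of each PSU's rating in use when load is shared evenly."""
    return server_w / psu_count / psu_rated_w

print(servers_per_rack(8000, 40, 200))   # at exactly 200W per server
print(servers_per_rack(8000, 40, 210))   # a hypothetical 210W per server
print(f"Each 500W PSU at {per_psu_load_fraction(200, 500):.0%} load")
```

Each PSU sitting around 20% of its rating is well below the mid-range load where power supplies are typically most efficient, which is the inefficiency the comment describes.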

Comparable platforms with Intel’s Xeon Gold 6209U, 6210U, or 6212U installed, in my experience, perform slightly better in single-threaded tasks, but their peak power consumption is significantly higher than in platforms with Rome, even

I’m looking forward to seeing my fleet upgraded with the new AMD systems. Coincidentally, I’ll be upgrading dual 2630v3/v4s, just like this article suggests; I figured a safe bet would be about 1.5 old servers per new one, but time will tell if I was right.
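A quick sanity check of that 1.5:1 estimate by raw core count, assuming the Xeon E5-2630 v3 has 8 cores and the E5-2630 v4 has 10 cores per socket. This deliberately ignores per-core performance differences between these generations, which the simple count cannot capture. `new_servers_needed` is a hypothetical helper, and the fleet sizes in the usage lines are made-up examples.

```python
import math

# Assumption: E5-2630 v3 = 8 cores, E5-2630 v4 = 10 cores per socket.
OLD_CORES = {"2x E5-2630 v3": 2 * 8, "2x E5-2630 v4": 2 * 10}
NEW_CORES = 24  # one EPYC 7402P

def new_servers_needed(old_servers, consolidation_ratio=1.5):
    """New servers required at a given old-to-new consolidation ratio."""
    return math.ceil(old_servers / consolidation_ratio)

for name, cores in OLD_CORES.items():
    print(f"{name}: {NEW_CORES / cores:.2f} old servers per 7402P by core count")

# Hypothetical fleet sizes, for illustration only.
print(new_servers_needed(30))
print(new_servers_needed(31))
```

By cores alone, 1.5:1 matches the dual v3 boxes exactly and is optimistic for the dual v4 boxes (1.2:1), so any extra headroom has to come from per-core gains.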