AMD EPYC 7401P Benchmarks

For this exercise, we are using our legacy Linux-Bench scripts which help us see cross-platform “least common denominator” results we have been using for years as well as several results from our updated Linux-Bench2 scripts. At this point, our benchmarking sessions take days to run and we are generating well over a thousand data points. We are also running workloads for software companies that want to see how their software works on the latest hardware. As a result, this is a small sample of the data we are collecting and can share publicly. Our position is always that we are happy to provide some free data but we also have services to let companies run their own workloads in our lab, such as with our DemoEval service. What we do provide is an extremely controlled environment where we know every step is exactly the same and each run is done in a real-world data center, not a test bench.

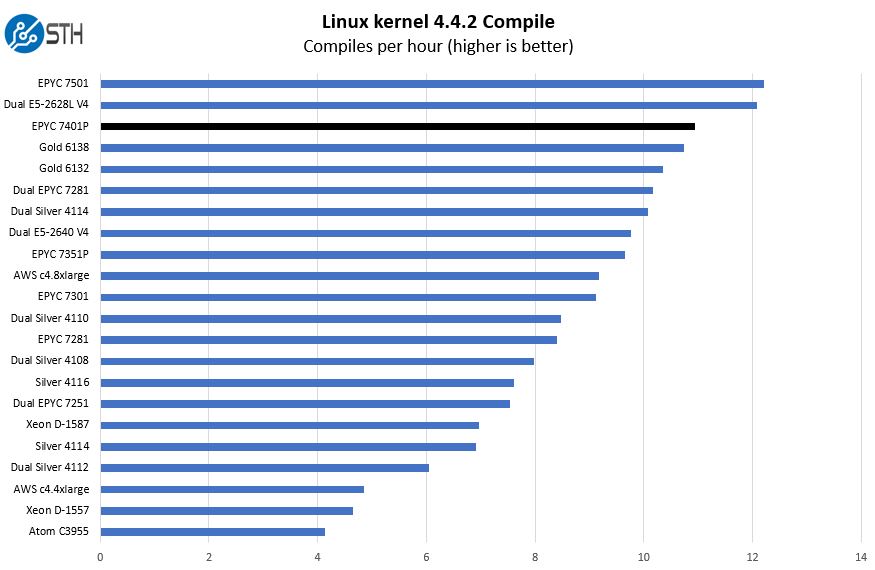

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task is simple: we take the Linux 4.4.2 kernel from kernel.org and a standard auto-generated configuration file, then compile the kernel utilizing every thread in the system. We express results in compiles per hour to make them easier to read.
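To make the "compiles per hour" unit concrete, here is a minimal sketch of the conversion from a wall-clock compile time. It is an illustration, not the Linux-Bench script itself: the helper names and the 90-second timing are hypothetical, and using `make -jN` with N set to the thread count is an assumption about how "every thread in the system" is used.

```python
import os


def make_command(threads=None):
    """Build a `make` argument list that runs one job per hardware thread.

    Assumption: "utilizing every thread" maps to `make -jN`; the actual
    Linux-Bench invocation is not shown in the article.
    """
    if threads is None:
        threads = os.cpu_count() or 1  # fall back to 1 if the count is unknown
    return ["make", f"-j{threads}"]


def compiles_per_hour(seconds_per_compile):
    """Convert the wall-clock time of one kernel compile into compiles per hour."""
    if seconds_per_compile <= 0:
        raise ValueError("compile time must be positive")
    return 3600.0 / seconds_per_compile


# Hypothetical timing for illustration only, not a measured result.
print(make_command(48))         # ['make', '-j48']
print(compiles_per_hour(90.0))  # 40.0
```

Because the metric is the reciprocal of compile time, higher is better, and halving the compile time doubles the score.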

Our Linux kernel compile benchmark shows the performance of the multi-die architecture. Here, AMD’s nearest competition is the 20-core Intel Xeon Gold 6138, priced at over $2600 each. If you want the CPU that is closest on price, that would be the Intel Xeon Silver 4116. When we say EPYC “P” parts deliver performance per dollar, this is a stark example. This particular workload scales with cores but prefers fewer, bigger dies.

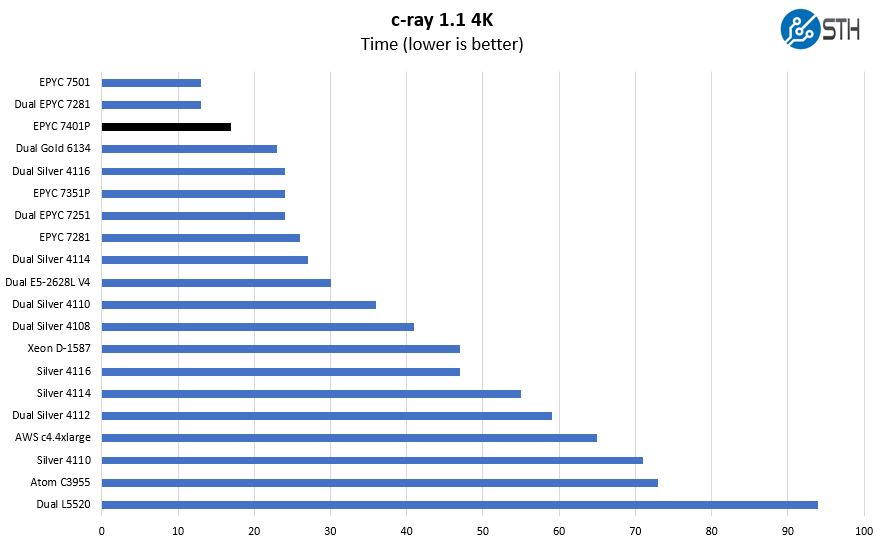

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular to show differences in processors under multi-threaded workloads.

We cut down this comparison significantly from what we would normally use. What we are doing, and will do in all of our charts, is to show at least 16, 24 and 32 core EPYC configurations, both from single and dual socket configurations.

C-ray requires fast caches and does not often push data across cores, Infinity Fabric, or Mesh/ UPI, so AMD EPYC is particularly strong in this type of workload.

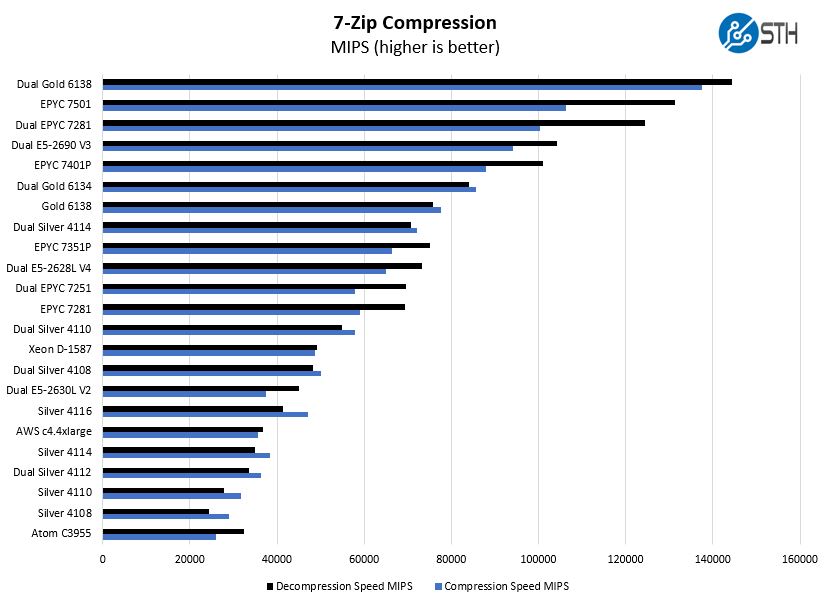

7-zip Compression Performance

7-zip is a widely used compression/ decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

Overall great performance by the AMD EPYC 7401P. We did want to pause here and note the dual Intel Xeon Gold 6134 results that we have in these charts. The Intel Xeon Gold 6134 is a CPU that will be popular for per-core licensing workloads. It only has 8 cores/ 16 threads but has a large (for Intel) L3 cache structure as well as high clock speeds with a 3.2GHz base. While it is performing relatively close to the single socket AMD EPYC 7401P, it is a significantly costlier (from a hardware standpoint) setup. When one says Intel has parts optimized for per-core performance, we saw this as a good example to use.

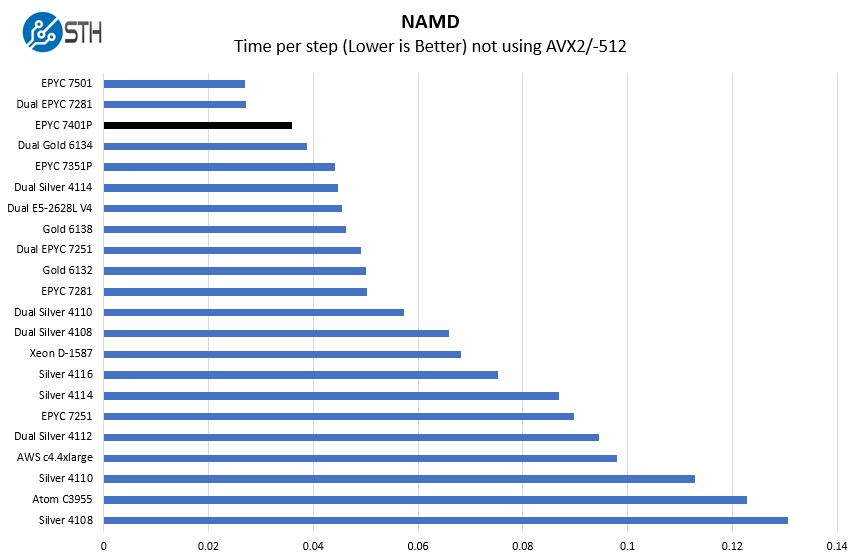

NAMD Performance

NAMD is a molecular modeling benchmark developed by the Theoretical and Computational Biophysics Group in the Beckman Institute for Advanced Science and Technology at the University of Illinois at Urbana-Champaign. More information on the benchmark can be found here. We are going to augment this with GROMACS in the next-generation Linux-Bench in the near future. With GROMACS, we have been working hard to support both Intel’s Skylake AVX-512 and the AVX2 support in AMD’s Zen architecture. Here are the comparison results for the legacy data set:

There is an enormous delta between the AMD EPYC 7401P and the Intel Xeon Silver 4116 or dual Silver 4110s, which are about the same price. The $1075 EPYC 7401P competes more in the realm of the Xeon Gold series here when we are not utilizing AVX-512. Our GROMACS results will show what happens when we utilize the dual FMA AVX-512 on the Xeon Gold 6100 series with a similar type of application.

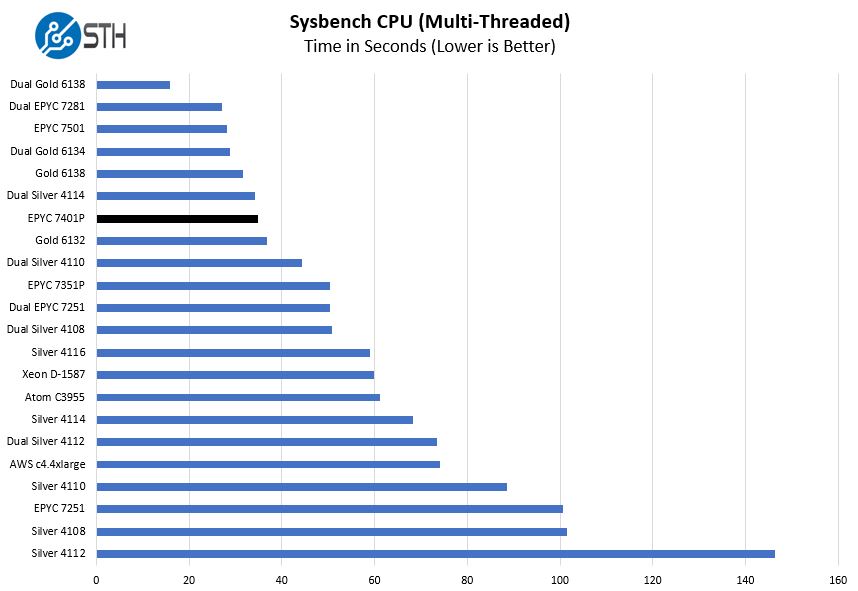

Sysbench CPU test

Sysbench is another one of those widely used Linux benchmarks. We specifically are using the CPU test, not the OLTP test that we use for some storage testing.

Again, another solid performance and one that shows that the EPYC 7401P has a competitive case against dual Xeon Silver 4114.

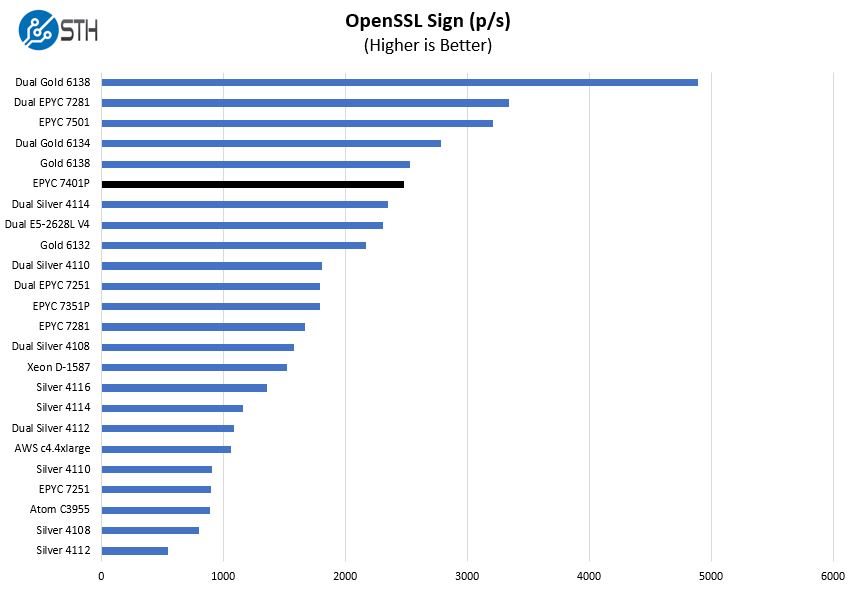

OpenSSL Performance

OpenSSL is widely used to secure communications between servers. This is an important library in many server stacks. We first look at our sign tests:

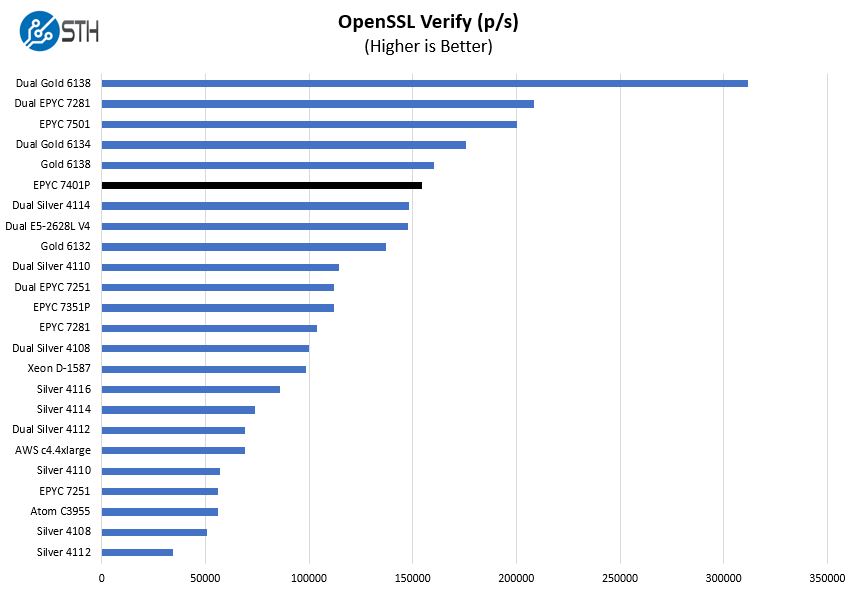

Here are the verify results:

Here we see the Intel Xeon Gold 6138 with 20 cores perform well but the competition again is in the $1500-2600 range for Intel’s CPUs against a $1075 AMD single socket part.

Overall, this is a great price/ performance showing for AMD.

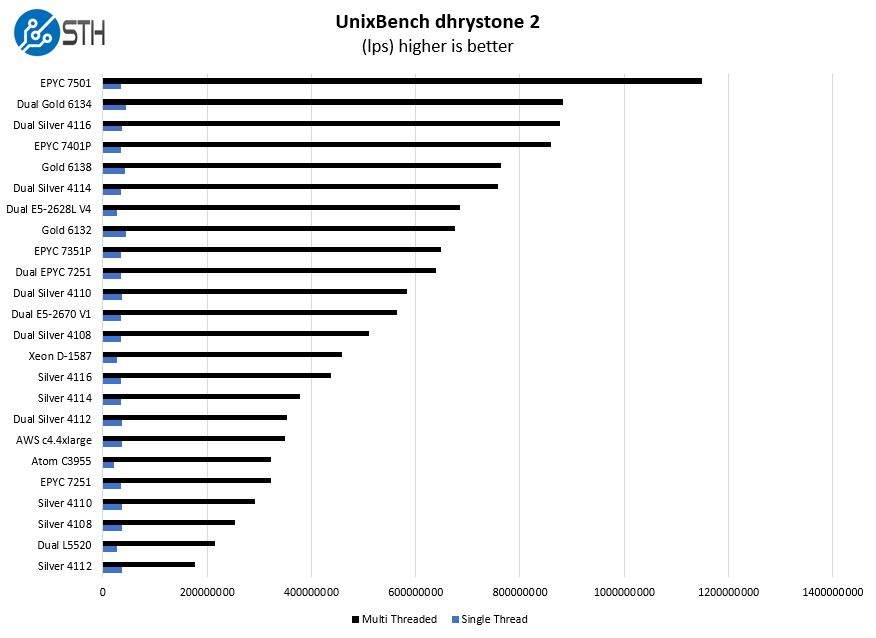

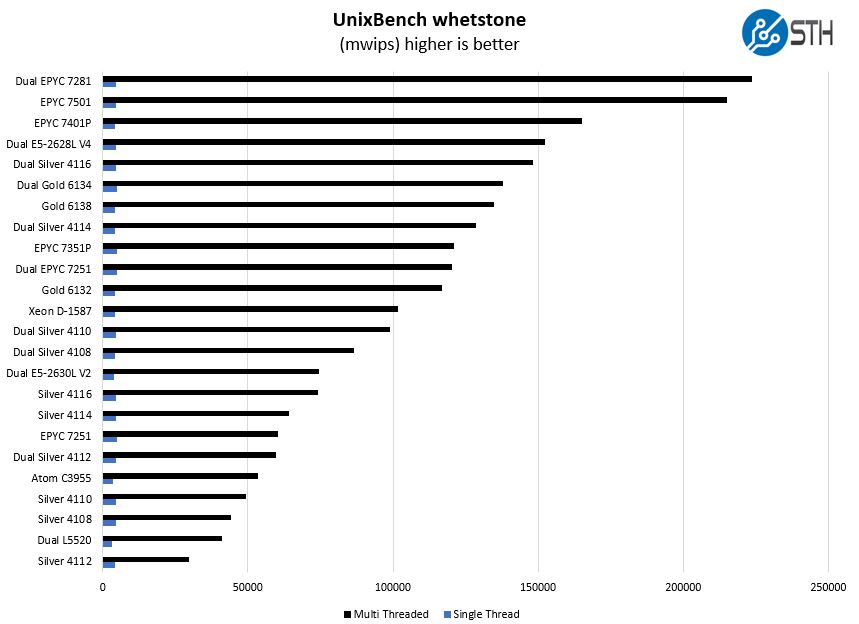

UnixBench Dhrystone 2 and Whetstone Benchmarks

Some of the longest-running tests at STH are the venerable UnixBench 5.1.3 Dhrystone 2 and Whetstone results. They are certainly aging; however, we constantly get requests for them, and many angry notes when we leave them out. UnixBench is widely used so we are including it in this data set. Here are the Dhrystone 2 results:

Here are the Whetstone results:

We added dual Intel Xeon Silver 4116 results to this chart. The dual Silver 4116 combination is still finishing up longer test runs for the next few days, but we wanted to provide some data points as to where it would fall against the AMD EPYC 7401P. Two Silver 4116 chips have the same TDP and cost about 90% more than the EPYC 7401P.

We think these charts are a great validation that AMD’s single socket performance SKU strategy has merit. It also shows why we like the EPYC 7401P.

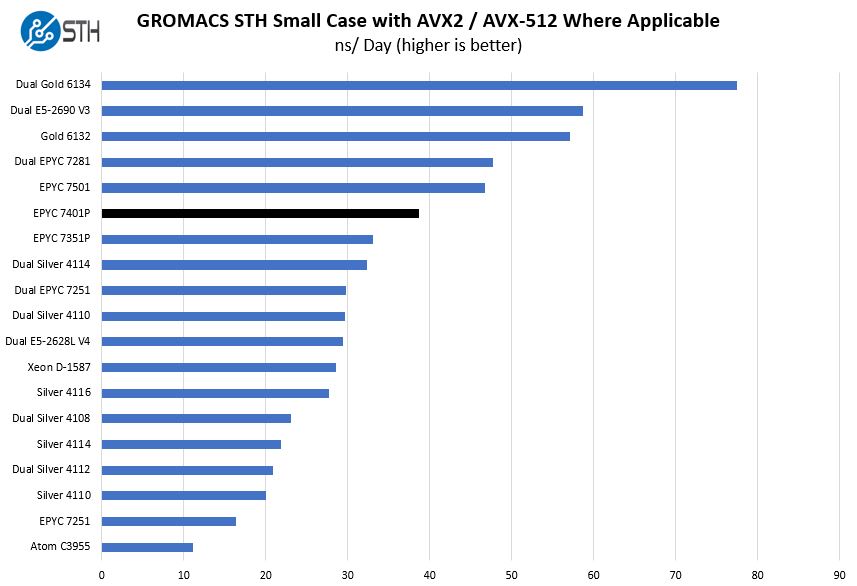

GROMACS STH Small AVX2/ AVX-512 Enabled

We have a small GROMACS molecule simulation we previewed in the first AMD EPYC 7601 Linux benchmarks piece. In Linux-Bench2 we are using a “small” test for single and dual socket capable machines. Our medium test is more appropriate for higher-end dual and quad socket machines. Our GROMACS test will use the AVX-512 and AVX2 extensions if available.

There are a few things to point out on this chart. First, against the Intel Xeon Silver 4100 (and Gold 5100 for that matter) parts that are in the price range of the AMD EPYC 7401P, the single socket SKU performs well. Without the second AVX-512 FMA, Intel simply does not reap the benefits.

Conversely, the dual Intel Xeon Gold 6134 setup only has 16 cores between the two CPUs. High clock speeds and dual AVX-512 FMA mean big numbers. If you have an AVX-512 heavy workload, get the Intel Xeon Gold 6100 series. Even with that, remember that the Xeon Gold 6132, while faster, is still a $2100 CPU or about twice the cost of the EPYC 7401P.
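The "about twice the cost" comparison can be checked with simple arithmetic. The sketch below uses only the two prices quoted in this article ($1075 and roughly $2100). The function names are hypothetical helpers, and no benchmark scores are assumed.

```python
def price_ratio(price, baseline_price):
    """How many times more expensive `price` is than `baseline_price`."""
    return price / baseline_price


def perf_per_dollar(score, price):
    """Benchmark score delivered per dollar of CPU list price."""
    return score / price


EPYC_7401P_PRICE = 1075      # price quoted in the article
XEON_GOLD_6132_PRICE = 2100  # approximate price quoted in the article

ratio = price_ratio(XEON_GOLD_6132_PRICE, EPYC_7401P_PRICE)
print(f"{ratio:.2f}x")  # 1.95x
```

The same ratio is also the break-even speedup: on any given benchmark, the more expensive CPU has to score about 1.95 times higher than the EPYC 7401P just to match it on `perf_per_dollar`.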

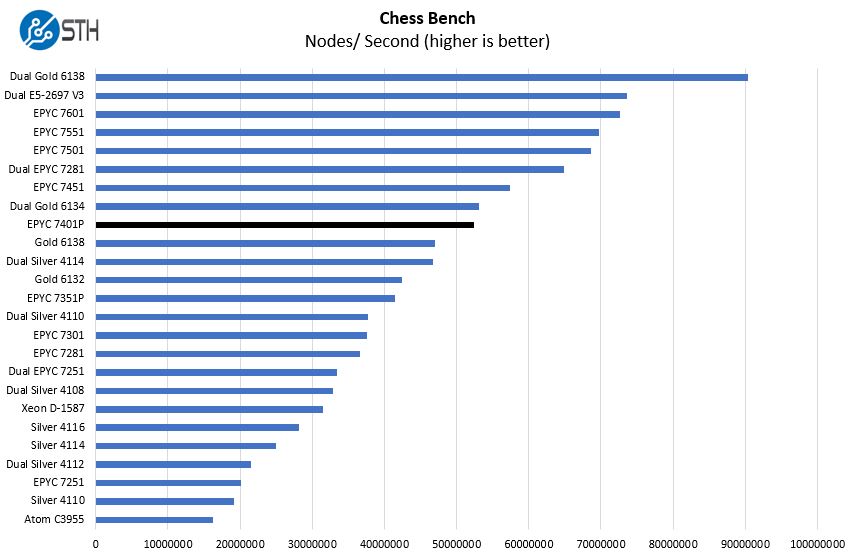

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received a number of requests to bring back chess benchmarking. We have been profiling systems and are ready to start sharing results:

We decided to put something special in this chart: every EPYC. All 9 performance variants are represented in the chart as well as two of the EPYC dual socket combinations. When we put the dual EPYC 7601 in the chart the scale made everything else difficult to read. Still, every EPYC, every Xeon Silver, every price competitive Xeon Silver dual socket configuration, and the top-end Xeon D / Atom C3000 series all in the same chart.

Patrick,

It seems numbers for Dual Silver 4116 are not present for all benchmarks, any reason for that?

Great review. I don’t see how Intel can ignore this, but there will probably have to be a hit to the OEM channel before they act, and product lines change slowly.

FYI, the Linux kernel compile chart has the Xeon Gold 6138 twice with two different results

Thanks for the review. I think this processor will be used for the next video editing workstation I am going to build. With this workstation I will follow the GROMACS advice and use GPU power to do most of the heavy lifting.

It’s good to see that the GROMACS website advises using GPUs to speed up the process. GPUs are relatively cheap and fast for these kinds of calculations. Blackmagic Design DaVinci Resolve uses the same philosophy: use GPUs where possible. AMD EPYC has enough PCIe lanes for the maximum of 4 GPUs supported in Windows (Linux up to 8 GPUs in 1 system).

Micha Engel, where do you get 4? The single socket Epyc has 128 PCIe lanes.

@Bill Broadley

DaVinci Resolve supports up to 4 GPUs under Windows (4x16 PCIe lanes). I will use 8 PCIe lanes for the DeckLink card (10-bit color grading) and 2×8 PCIe lanes for 2 HighPoint SSD7101A in RAID 0 for realtime rendering/editing etc. (14 GB/s sustained read and 12 GB/s sustained write; the 960 Pro SSDs will be 25% overprovisioned).

@BinkyTO – The Xeon Silver 4116 dual socket system still has a few days left of runs. Included what we had.

@TR – Fixed. The filter was off.

We need 4 node in 2u or 8 node in 4u of this. We can use for web hosting.

OpenStack work on these?

I still want to test it in our environment before buying racks of them. This is still helpful. Maybe we’ll buy a few to try

@Girish

Have a look at the supermicro website (4 node in 2U).

Maybe 1 node in 1 U is already fast enough.

I see no reason why AMD EPYC wouldn’t work with OpenStack, AMD is one of the many companies supporting the OpenStack organizations (and they have the hardware to back it up).

Does anyone know when these will be in stock? I’ve tried ordering from several companies, including some that listed them as in stock, but they appear to be backordered.

Andrew – We were able to order recently through a server vendor.

Do you have any information on thermals? Our EPYC 7401 run 24/7 rendering, and seem to be throttling down to 2.2GHz instead of staying at 2.8GHz boost. Any thoughts on that? Thermals are reading a package temp of 65C.