It is that time again. At STH we have a long-running series looking at the SKUs and pricing strategies for each server CPU line. This time, we have our AMD EPYC 7002 series article where we will look at how AMD is pricing and valuing each SKU. With the AMD EPYC 7002 series, AMD has continued its practice of differentiating a set of its SKUs with the “P” series designed for single-socket operation. AMD’s contention is that one no longer needs a dual-socket server given the high core count and I/O that its CPUs deliver. In this article, we are going to look at all of the SKUs, then look at the single-socket only SKUs and dual-socket capable SKUs.

As a special bonus, we are introducing a TDP per core metric to the analysis.

Looking at the AMD EPYC 7002 Series Full Stack

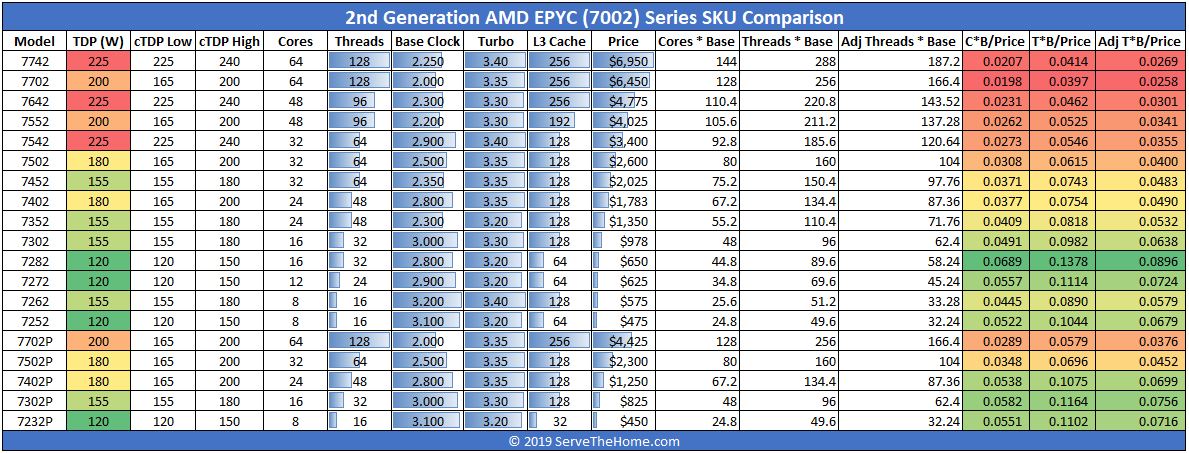

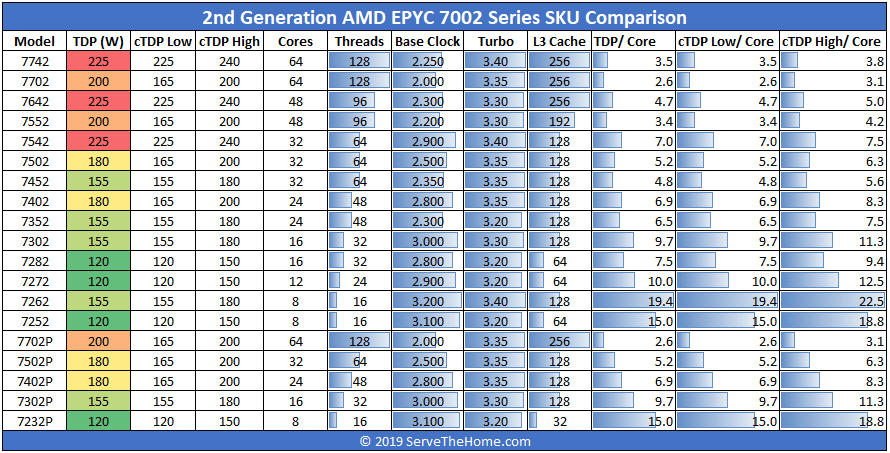

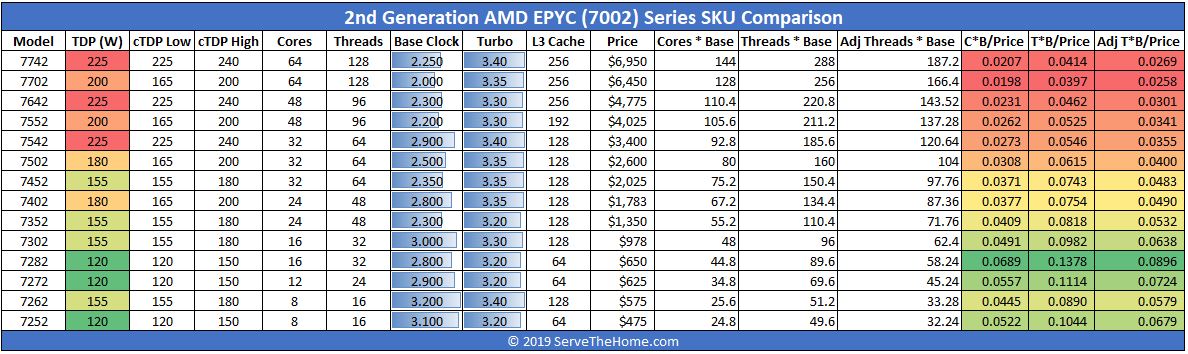

Here is the full stack of SKUs for the AMD EPYC 7002 series at launch. There is also an HPC-focused AMD EPYC 7H12 chip, but we did not have a list price for it.

One can see that AMD is charging a premium for its higher core count and frequency parts, but the delta is not that great. Compared to other companies, this is a relatively muted premium for greater socket consolidation.

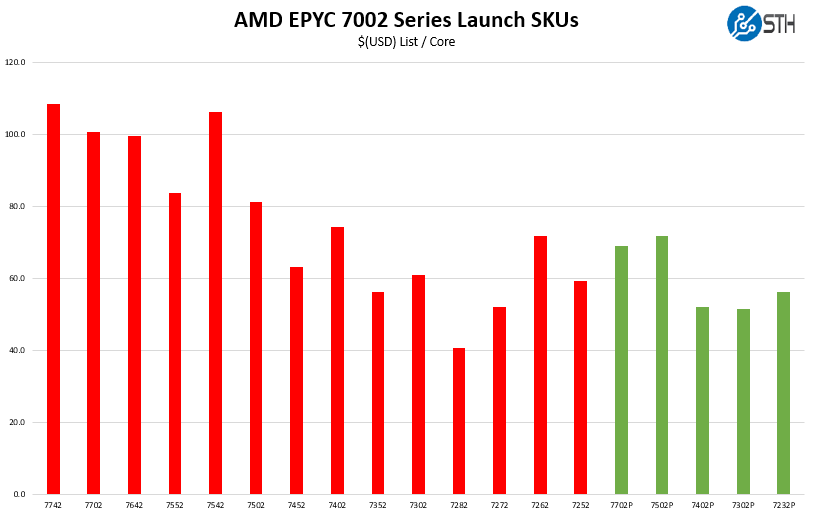

Here is a look at the cost per core, excluding clock speed, across the line:

Here, one can see the impact of the single socket SKUs as they generally offer large discounts over the dual-socket parts.

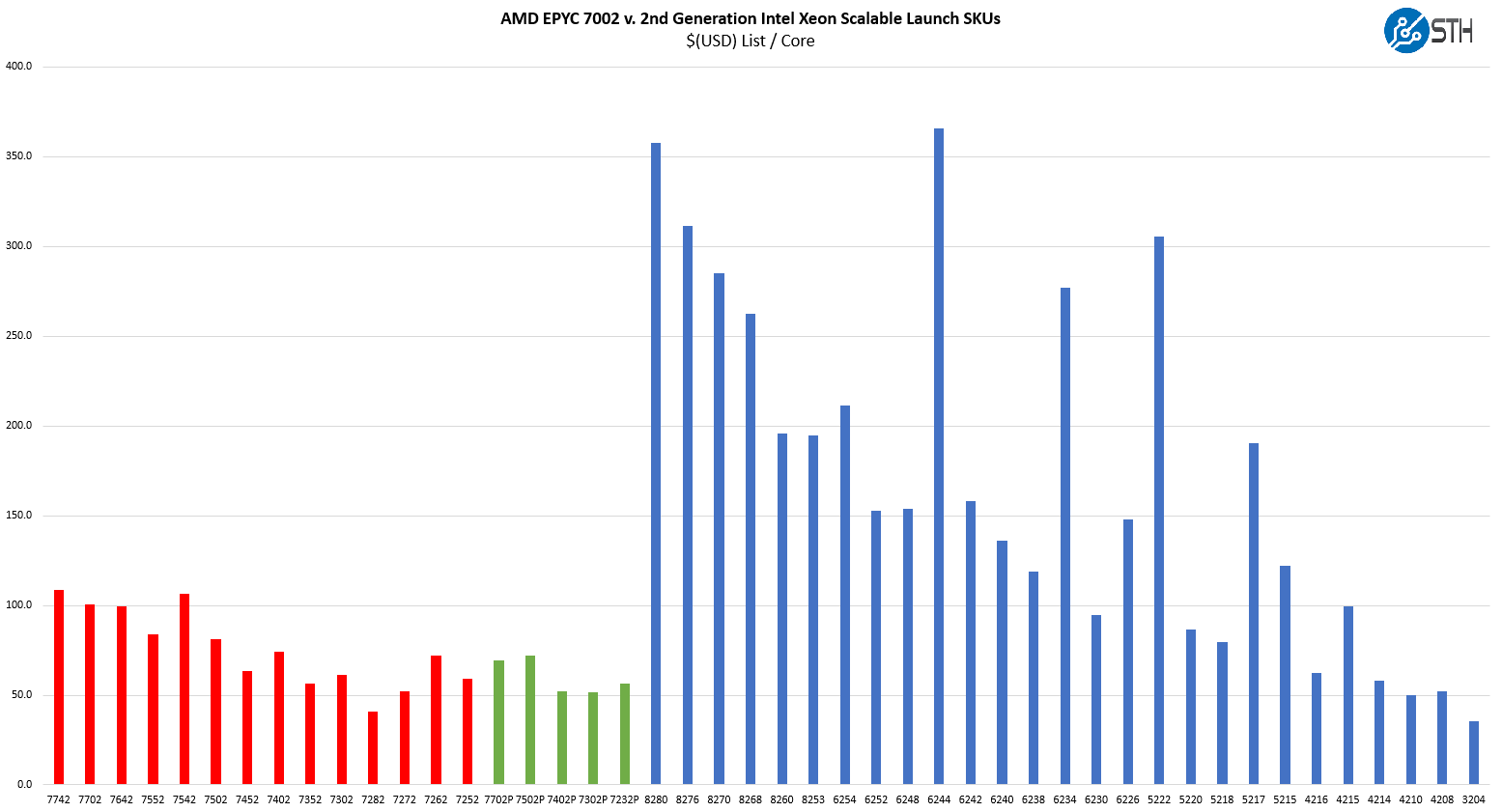

For a sense of scale on that chart, here is AMD’s list pricing versus Intel’s list pricing.

Of note, on a raw CPU performance metric, the Intel Xeon Platinum 8280 falls just left of the midpoint of both the red (2P) and green (1P) sets of AMD EPYC bars in these charts, putting its list price at around 3.5x per core.

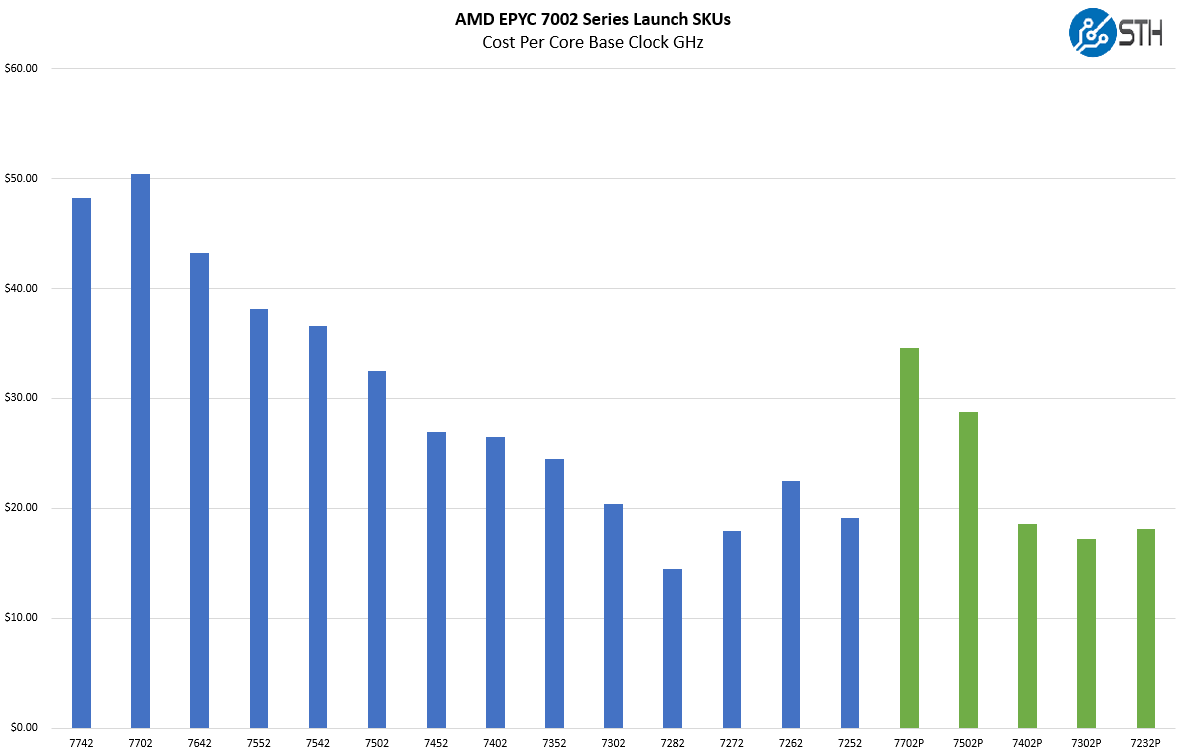

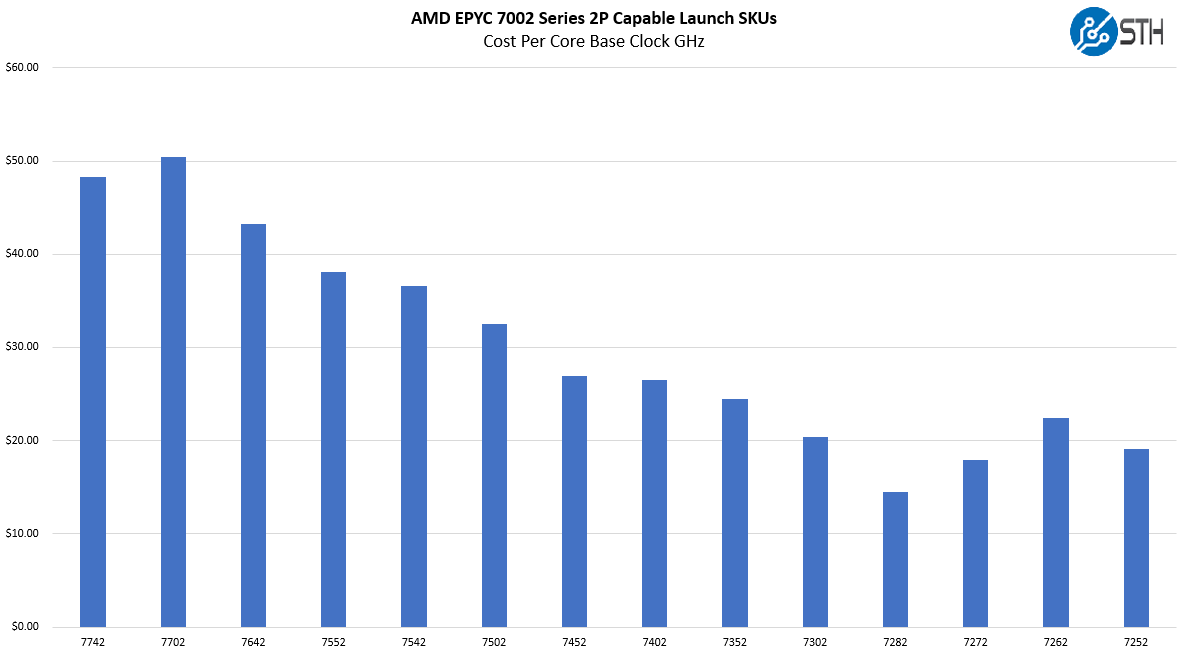

Drilling down into the AMD EPYC 7002 numbers, here is what the chart looks like when we take the USD list price over the number of cores times the base clock:

Again, the AMD EPYC 7282 looks like a fascinating part. The price per core is the lowest of the AMD EPYC 7002 family as is the cost per core base clock. With only 64MB of L3 cache, or half of what the AMD EPYC 7302P and EPYC 7302 have, this may make sense.
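For readers who want to reproduce the two value metrics used so far, here is a minimal sketch of the arithmetic: list price divided by cores, and list price divided by cores times base clock. The `Sku` class, its field names, and the two example entries are our own illustration. The prices and clocks in the example entries are round placeholder numbers, not AMD list prices, so swap in the figures from the tables above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sku:
    """Hypothetical container for the inputs the charts use."""
    name: str
    list_price_usd: float
    cores: int
    base_ghz: float

    def price_per_core(self) -> float:
        # USD list price divided by core count (clock speed ignored).
        return self.list_price_usd / self.cores

    def price_per_core_ghz(self) -> float:
        # USD list price divided by (cores x base clock in GHz).
        return self.list_price_usd / (self.cores * self.base_ghz)


# Placeholder entries for illustration only; NOT real list prices or clocks.
skus = [
    Sku("example-64c", 6400.0, 64, 2.0),
    Sku("example-16c", 700.0, 16, 2.8),
]

for sku in skus:
    print(
        f"{sku.name}: ${sku.price_per_core():.2f}/core, "
        f"${sku.price_per_core_ghz():.2f}/core-GHz"
    )
```

Dividing by base clock rather than boost clock is a conservative choice, since sustained clocks can sit above base.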

Here is a new look for this article: TDP per core. The AMD EPYC 7002 series CPUs have a configurable TDP. That means, for example, you can run AMD EPYC 7742 SKUs at 225W as standard, but also up to 240W TDP.

Here, when we look at the cTDP “High” limit of the AMD EPYC 7742, we see that the extra 15W of TDP makes a notable difference. Our TDP per core goes from about 3.5W cTDP to about 3.8W cTDP (3.52W to 3.75W before rounding), or roughly 7% higher per core. Having this huge cache and core structure also means that the power per core, in general, is much lower on the 64-core parts.
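The TDP per core figure is simple division, so here is a short sketch using the EPYC 7742 numbers quoted above (225W standard, 240W cTDP high, 64 cores). The function and variable names are ours. Note that on unrounded figures the per-core increase is the same as the package-level increase, 15W over 225W.

```python
def tdp_per_core(tdp_watts: float, cores: int) -> float:
    """TDP per core in watts: package TDP divided by core count."""
    return tdp_watts / cores


CORES_7742 = 64

standard = tdp_per_core(225, CORES_7742)  # default TDP
high = tdp_per_core(240, CORES_7742)      # cTDP "High" limit
increase_pct = (high / standard - 1) * 100

print(f"standard: {standard:.2f} W/core")
print(f"cTDP high: {high:.2f} W/core")
print(f"increase: {increase_pct:.1f}%")
```

Using the rounded 3.5W and 3.8W values instead would overstate the jump, which is why we compute the percentage from the unrounded quotients.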

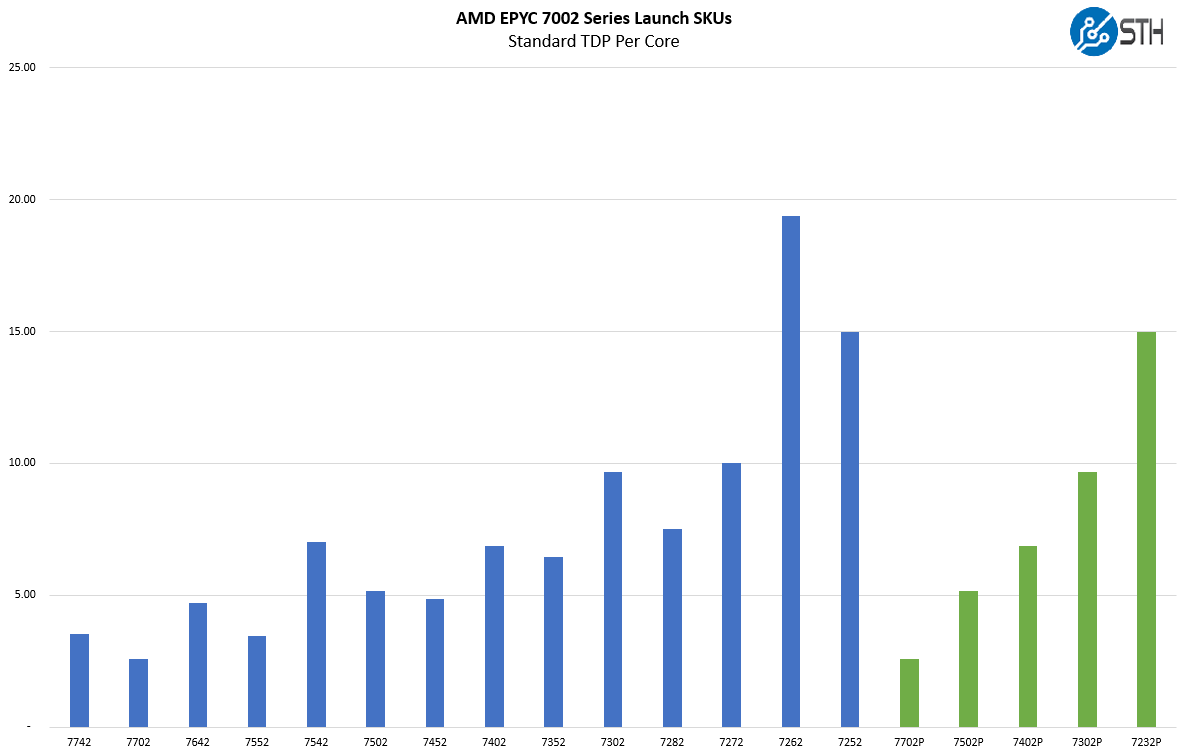

At each extreme, with both the 8-core and the 64-core models, one can see either extremely high or extremely low TDP per core. Putting these on the chart:

If you look at both charts, the 48 and 64 core SKUs have higher TDPs but due to the sheer number of cores being added, the TDP per core ratio drops. Also, the 8 core models have very high TDP per core. That is because the chip still needs the I/O die to handle the same 8 channels of memory and 128 lanes of high speed I/O. Given this, it would seem that the I/O die is using an enormous amount of power.

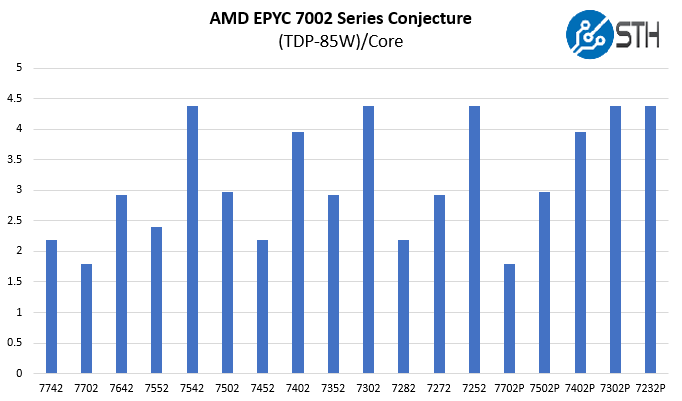

If the 7232P, which has the same 32MB per 8 cores as the EPYC 77x2 64-core parts, had a similar 3W or so per core of TDP, it would indicate an 80-90W I/O die. I spoke to Patrick, our Editor-in-Chief, about this, and he had an idea to see if we could back out a number that made sense for the I/O die. After a bit of trying, we are not sure if we found it, but we have this chart:

Note, we pulled out the EPYC 7262 since that was off the chart on every chart we made. That leaves us 18 SKUs. When we subtract 85W from each SKU’s TDP, we get:

- Five at 4.375W TDP/core

- Three at 2.917W TDP/core

- Three at 2.188W TDP/core

- Two at 3.958W TDP/core

- Two at 2.969W TDP/core

- Two at 1.797W TDP/core

- One at 2.396W TDP/core

I did not see that level of convergence elsewhere, especially with a range of less than 2.5x. It seems like this 85W figure is more than a coincidence and is perhaps a constant (I/O Die + Die I/O + others?) in AMD’s TDP build-up. We are going to chalk this up to wild conjecture and speculation, but that chart is fascinating to see.
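The subtraction above can be sketched as follows. This is a rough reproduction under stated assumptions: the 85W constant is the speculative figure from this article, and the per-SKU default TDPs and core counts in `SKUS` are our reading of AMD's public spec sheet rather than values stated in the text, so check them against the tables above. The EPYC 7262 is left out, as in the chart. We use exact fractions so that SKUs landing on the same value group together without floating-point noise.

```python
from collections import Counter
from fractions import Fraction

ASSUMED_FIXED_WATTS = 85  # speculative constant from the article, not an AMD figure

# (SKU, default TDP in watts, cores). Assumed from public specs; EPYC 7262 excluded.
SKUS = [
    ("7742", 225, 64), ("7702", 200, 64), ("7702P", 200, 64),
    ("7642", 225, 48), ("7552", 200, 48),
    ("7542", 225, 32), ("7502", 180, 32), ("7502P", 180, 32), ("7452", 155, 32),
    ("7402", 180, 24), ("7402P", 180, 24), ("7352", 155, 24),
    ("7302", 155, 16), ("7302P", 155, 16), ("7282", 120, 16),
    ("7272", 120, 12),
    ("7252", 120, 8), ("7232P", 120, 8),
]


def residual_per_core(tdp: int, cores: int, fixed: int = ASSUMED_FIXED_WATTS) -> Fraction:
    """(TDP - fixed overhead) / cores, kept exact so equal values compare equal."""
    return Fraction(tdp - fixed, cores)


# Count how many SKUs land on each distinct per-core value.
groups = Counter(residual_per_core(tdp, cores) for _, tdp, cores in SKUS)

for value, count in groups.most_common():
    print(f"{count} SKU(s) at {float(value):.3f} W/core")

# Ratio of the largest to the smallest per-core value.
spread = max(groups) / min(groups)
print(f"max/min spread: {float(spread):.2f}x")
```

With these inputs the 18 SKUs collapse onto seven distinct values, which is the clustering the chart shows. Changing `ASSUMED_FIXED_WATTS` is a quick way to see how the grouping and spread respond to other guesses for the fixed overhead.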

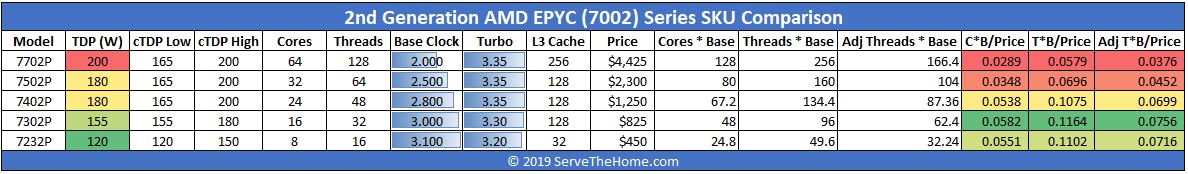

Let us go back to our normal value analysis, starting with the single-socket “P” SKUs.

Value Driver: AMD’s “P” Series Single-Socket SKUs

Overall the AMD “P” series SKUs are very competitive ranging from 8 cores to 64 cores.

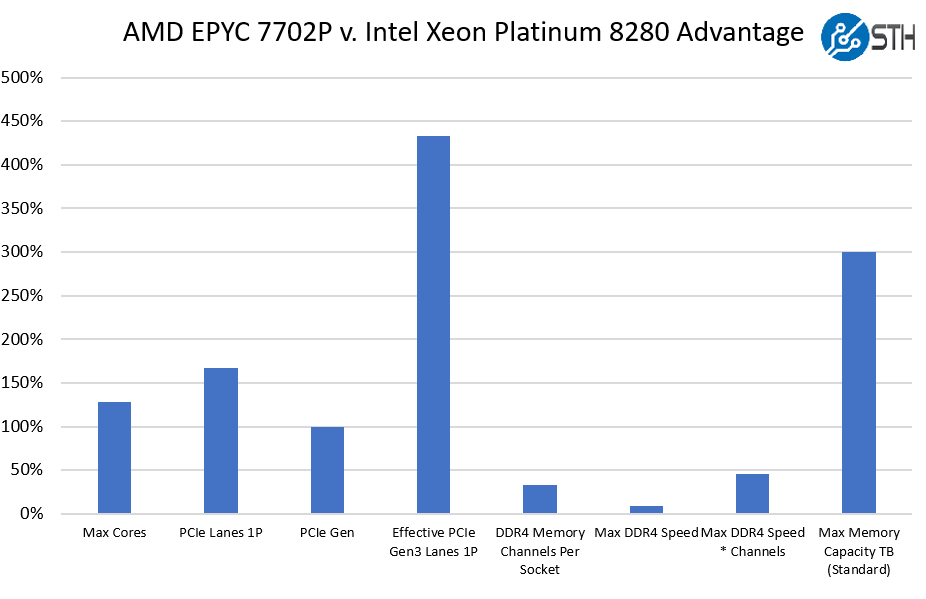

AMD offers a single 12-core SKU, the EPYC 7272, but not as a P-series part. To us, that makes sense given the AMD EPYC 7302P and EPYC 7232P pricing. What does not make sense is the lack of a 48-core P-series part. AMD has room in its stack for a $3300-3400 48-core SKU if it wanted. Perhaps this is something we will see in the future. As we noted in our AMD EPYC 7702P Review, with the current stack we think the 7702P is a better buy than getting a single 48-core part. Also from that review, we show the advantage of the EPYC 7702P versus the Platinum 8280, excluding list price where the Xeon is almost double.

Key here is that AMD offers significantly more in a single-socket configuration than Intel Xeon, often more than twice as much on the I/O side. Combined with lower-cost SKUs, this is how AMD is attacking Intel Xeon’s mainstream dual socket market with discounted single-socket SKUs.

Delving into 2P Capable AMD EPYC 7002 SKUs

On the dual-socket side, here is what that SKU stack looks like:

Here, one will note that while Intel is charging the most per core for its 28-core parts, a 32 core part is midrange in the AMD EPYC pricing stack. The entire SKU stack shows fairly linear pricing except the AMD EPYC 7252 and the EPYC 7282.

Looking at the pricing methodology, one can see that many of the lower core count SKUs are priced at a premium. AMD does not have a completely neutered part like the Intel Xeon Bronze 3204. Instead, it has full speeds and features like two threads per core (SMT) throughout its range. You can learn more about that segment in our piece: A Look at 7 Years of Advancement Leading to the Xeon Bronze 3204.

The AMD EPYC 7282 continues to be an outlier, which is why it is on our list of CPUs we want to test. Hitting, at most, around $50 per base core clock GHz is still relatively inexpensive. Here the AMD EPYC 7702 is the only CPU to barely go over that figure due to its lower base clock. In our testing, we found the “P” variant to stay at clocks well above the 2.0GHz base. We reviewed the P-variant of the EPYC 7402 in our AMD EPYC 7402P Review. We think if you want a dual-socket Xeon equivalent at a significantly reduced price, the EPYC 7402 is a strong contender. At under $1800 per CPU list price, it is priced well for the mainstream dual socket market.

Final Words

AMD is getting a premium for its higher core count parts, but it is more in the 2-2.5x per core range rather than 5x+ that Intel can charge. That is great for consumers. It also lowers the barrier to entry for organizations to consider moving up the stack to higher-core count SKUs versus buying additional servers with lower core counts. We discuss the “P” series parts a lot because of their ability to give buyers the choice between two Xeons and a single EPYC. At the same time, the relatively reasonable pricing for moving up the core count stack makes it so dual socket buyers can purchase higher core count SKUs and consolidate servers. Fewer servers generally use less power and less material to make which is better for the environment and the data center bill. When looking at potential TCO scenarios, low upfront pricing, solid performance allowing for server consolidation, and lower power all yield a strong value proposition.

If you want to see a comparison to the current generation Xeon CPUs, check out our Second Generation Intel Xeon Scalable SKU List and Value Analysis.

That’s some cool first pic!

I’ll agree on that pic.

Great analysis. You’ve got a good point that AMD needs a 48 c P part

I’m glad to read that you’re planning a test of the Epyc 7282 CPU. I’d like to see how the performance and power consumption of this lower-frequency, lower-TDP model compares to other 16-core CPUs like the 7302P.

I wonder if putting a 7282 in a single-socket board would make sense.

I’d be interested to see what the perf difference between the 7282 and the 7302 is.

On the power per core calculations, did you account for the chiplet based architecture? You should be able to determine the number of chiplets based on the L3 cache of the package. Also, since power consumption increases with frequency, this may help determine actual core power consumption. Something I noted with the EPYC sku’s is that the number of cores per chiplet are variable, as in, some CPUs are not using the manufactured 8 cores per die. Compare the cores per chiplet counts with the frequency of each core and I am guessing that will clear up the power per core issue found in the calculation and probably quickly define the power consumption of the IO die.

Isn’t chiplet number equal to L3 cache size?

It’d be interesting to go even further and try to estimate something like minimum TCO/core*base.

That is, even if you’re assuming your choice is dominated by cost of compute and you don’t inherently need any particular size of node, more smaller nodes means paying for more mobos/boxes and for rack space and power (TDP + platform power) for some period of time. That probably makes middle core count parts look better compared to low.

There are wrinkles in the wrinkles of course. For the 2P variants you probably have to assume a more expensive 2P-capable box and amortize the cost across two sockets (which I think’d make them look better, as long as two-socket platforms are less than twice as pricey). Arguably at high core counts you want to assume a larger platform too: even in compute-heavy apps 64 or 2×64 cores need a certain amount of RAM/network, and in terms of space you’re probably not going to cram >=64C in a half-unit server like you might an 8C part.

Anyway, it’s legitimately complicated and you have to figure out where to stop refining/making assumptions, but even a crude go at factoring in a cost per node and per watt could probably shed some additional light on why these prices and what’s likely to sell. Maybe I need to fire up a spreadsheet when I have some time :)

@Eric Smith, @Patrick – want to know why there is no 48 core EPYC? Perhaps the six-good-core chiplets are being used to make Ryzen 9 3900X CPUs.

emerth – I do not think it is that simple. There is more margin in EPYC but clock speeds can be lower.