Today we are going to put the VMware vSAN cluster we have been writing about into use. In the previous piece, All flash Infiniband VMware vSAN evaluation: Part 1 Setting up the Hosts, we set up our hosts for this proof-of-concept deployment. We then followed a series of steps to get the vSAN cluster up and running in All flash Infiniband VMware vSAN evaluation: Part 2 Creating the Cluster. Let us see how we can add virtual machines to the mix and what the performance is like.

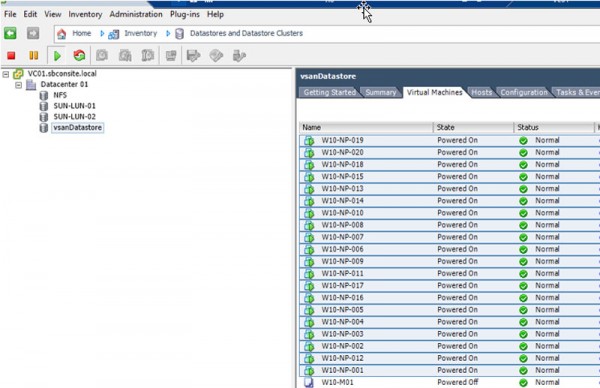

Virtual Machines running on our VMware vSAN cluster

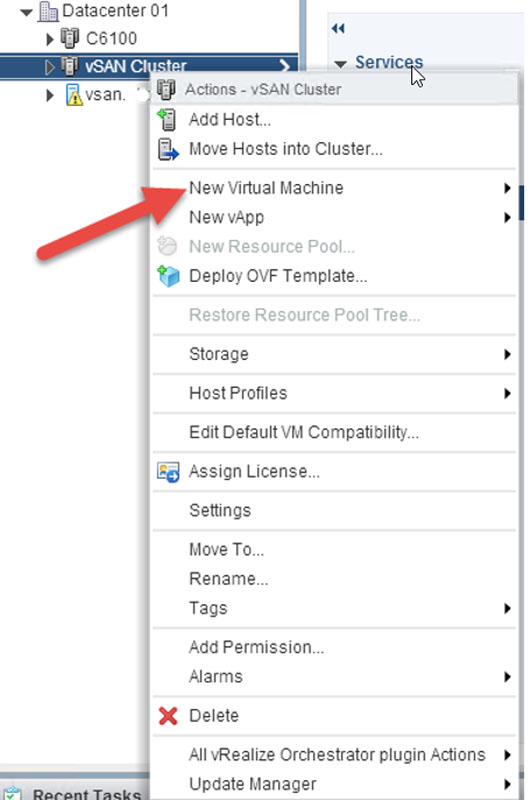

Our next step is to create new virtual machines running on our vSAN cluster. We can then use those VMs to test performance. The first step is to select our cluster and create a New Virtual Machine:

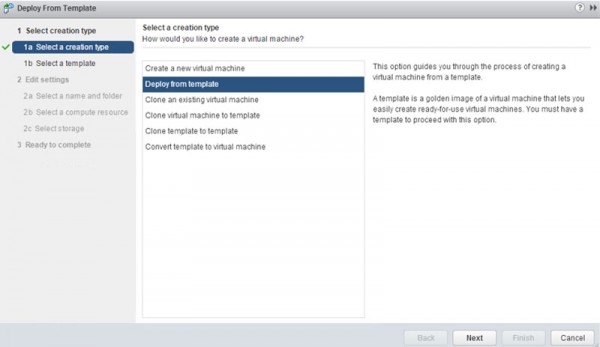

We are going to simplify the process and deploy from a template.

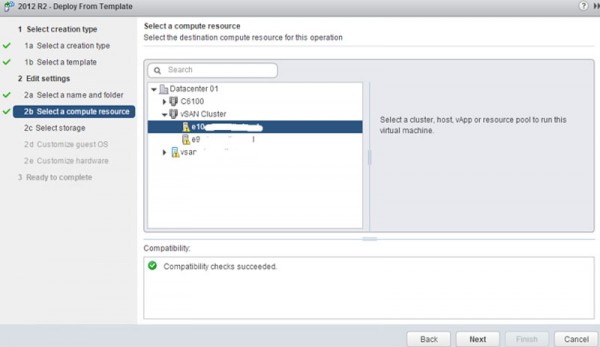

Now we can select a compute resource from the cluster that we made in Part 2 of this guide.

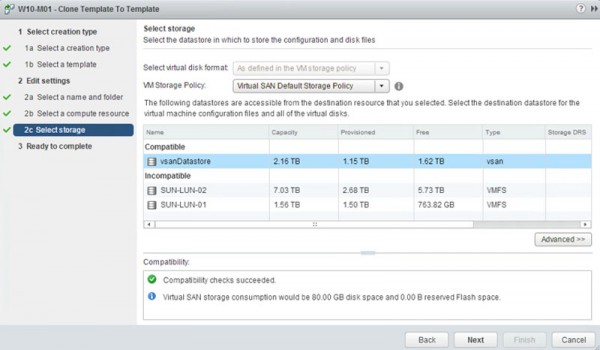

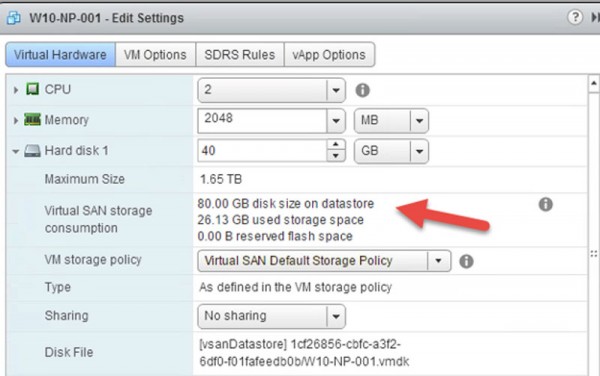

When we get to Select storage we are going to use the Virtual SAN Default Storage Policy and we are going to pick the vsanDatastore.

A quick note here: our vSAN storage consumption will be about twice the size of the VM, since each VM needs to be placed on two different nodes in our setup. This is a bit different from what those used to RAID 1 or RAID 10 SANs may expect, where replication is handled at a lower level (e.g. by the RAID controller or in software).
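To make the "about twice the size" arithmetic concrete, here is a minimal sketch. It assumes a RAID-1 mirroring policy where "failures to tolerate" (FTT) copies plus one are kept; with the default policy and two data nodes, that is FTT = 1, i.e. two full copies. The function name is hypothetical, and the estimate ignores witness components and metadata overhead. It also assumes the virtual disks are fully written; with thin provisioning, actual consumption tracks the data written.

```python
def raw_capacity_gb(vm_disk_gb: float, failures_to_tolerate: int = 1) -> float:
    """Rough raw vSAN capacity consumed by a mirrored VM disk (hypothetical helper).

    Assumes RAID-1 mirroring keeps (failures_to_tolerate + 1) full copies and
    ignores witness components and on-disk metadata overhead.
    """
    if failures_to_tolerate < 0:
        raise ValueError("failures_to_tolerate must be non-negative")
    return vm_disk_gb * (failures_to_tolerate + 1)


# One 40 GB VM disk mirrored across two nodes consumes about 80 GB of raw capacity.
print(raw_capacity_gb(40))        # 80
# Twenty fully written 40 GB disks would need about 1600 GB of raw capacity.
print(raw_capacity_gb(20 * 40))   # 1600
```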

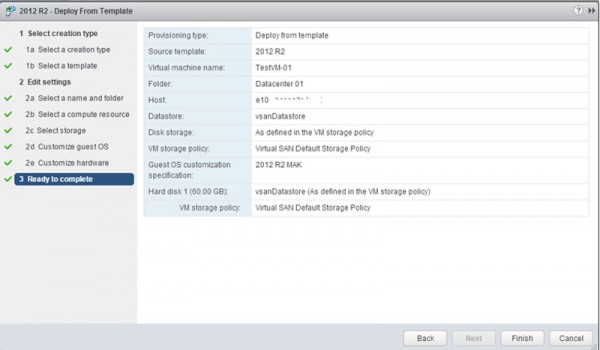

With this complete, we are ready to deploy our first VM on the vSAN.

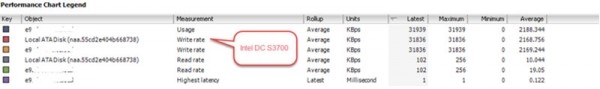

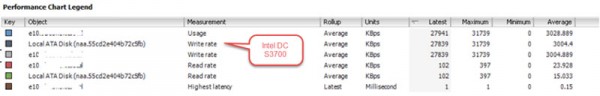

While the template was deploying, both nodes showed their Intel DC S3700 drives heavily utilized. That confirmed that all data hit the flash tier first in our vSAN environment.

Now that we have successfully set the virtual SAN up, and have a VM running on it, let’s look at the impact in terms of performance.

Performance Testing

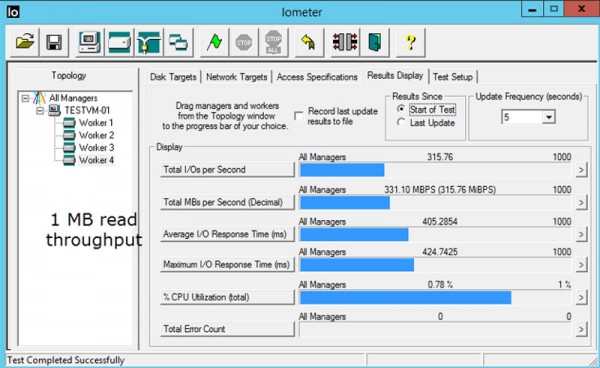

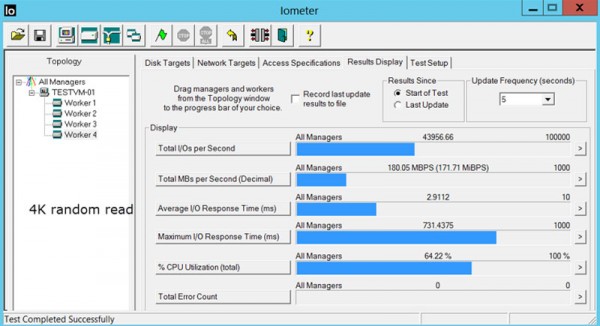

The result shows that flash tier performance is very important for a vSAN deployment, since all data hits that tier first. (For reference, in an all-flash vSAN, 100% of writes land on the flash tier first.) For any production or high-performance lab environment, I would recommend using PCIe- or NVMe-based SSDs for the flash tier; standard SATA/SAS SSDs on a 6 Gb/s interface are limited to roughly 550 MB/s of throughput.
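The ~550 MB/s ceiling follows from the interface itself. The sketch below computes the theoretical usable bandwidth of a link after line-encoding overhead: a 6 Gb/s SATA/SAS link uses 8b/10b encoding, while PCIe 3.0 runs at 8 GT/s per lane with 128b/130b encoding. The function name is hypothetical, and real drives land somewhat below these figures because of protocol overhead.

```python
def usable_bandwidth_mb_s(gtransfers_per_s: float, payload_bits: int,
                          coded_bits: int, lanes: int = 1) -> float:
    """Theoretical usable bandwidth in decimal MB/s after line encoding (hypothetical helper)."""
    bits_per_s = gtransfers_per_s * 1e9 * lanes * payload_bits / coded_bits
    return bits_per_s / 8 / 1e6  # bits -> bytes -> MB


# 6 Gb/s SATA/SAS with 8b/10b encoding: 600 MB/s theoretical, ~550 MB/s in practice.
sata = usable_bandwidth_mb_s(6.0, 8, 10)
# PCIe 3.0 x4 (a common NVMe SSD link) with 128b/130b encoding: roughly 3938 MB/s.
pcie3_x4 = usable_bandwidth_mb_s(8.0, 128, 130, lanes=4)
print(f"SATA 6 Gb/s: {sata:.0f} MB/s, PCIe 3.0 x4: {pcie3_x4:.0f} MB/s")
```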

I ran a simple Iometer test by adding another virtual disk from the vSAN datastore. The results were in line with the performance of the Intel DC S3700: 331 MB/s of throughput with 1 MB transfers and 43,956 IOPS with 4 KB random I/O.
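IOPS and throughput are two views of the same work, linked by the transfer size. The sketch below converts between them, assuming 4 KB = 4096 bytes, 1 MB transfers = 1,048,576 bytes, and throughput reported in decimal MB/s (10^6 bytes). Those unit conventions and the helper names are assumptions, not taken from the Iometer output.

```python
def iops_to_mb_s(iops: float, block_bytes: int) -> float:
    """Throughput in decimal MB/s for a given IOPS rate and transfer size."""
    return iops * block_bytes / 1e6


def mb_s_to_iops(mb_s: float, block_bytes: int) -> float:
    """IOPS implied by a decimal MB/s throughput at a given transfer size."""
    return mb_s * 1e6 / block_bytes


# 43,956 random 4 KB IOPS moves about 180 MB/s.
print(f"{iops_to_mb_s(43_956, 4096):.1f} MB/s")
# 331 MB/s with 1 MB transfers is only about 316 operations per second.
print(f"{mb_s_to_iops(331, 1_048_576):.1f} IOPS")
```

The comparison shows why both numbers matter: the small-block test is bounded by how many operations the flash tier can service, while the large-block test is bounded by raw bandwidth.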

Overall performance is good for this setup. We would, of course, expect performance to scale with more disks, but even our relatively small setup is already putting out solid storage performance.

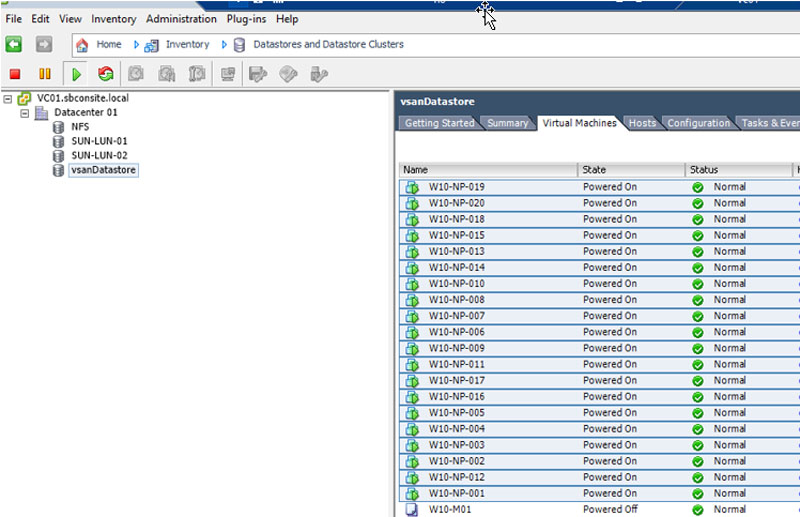

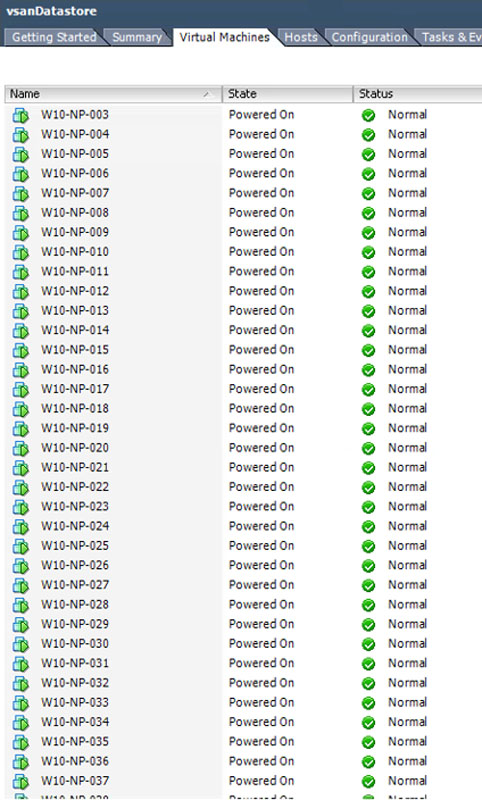

One of the most demanding I/O workloads is the boot storm in a VDI environment. A boot storm occurs when many VMs power up at the same time and get ready for users. This can happen, for example, when a shift of employees logs in at 9 AM to start their day. It creates a large amount of random I/O and will normally bring traditional enterprise storage to its knees. Because most companies demand great performance from their VDI environments, they tend to buy all-flash SANs, which are very expensive; this is where virtual SANs can have a big impact.

The next test was based on that real-world scenario. I deployed 20 Windows 10 VMs on the vSAN cluster, each with a 40 GB hard drive, and powered all 20 VMs on at once. The disk latency was truly impressive.

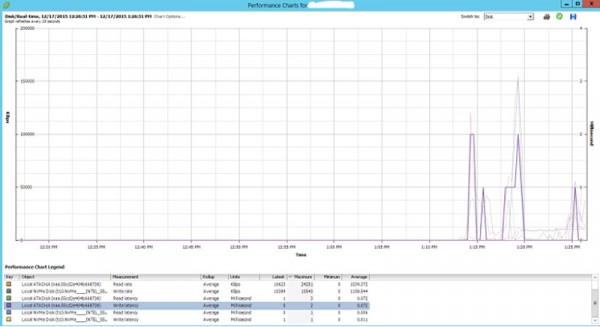

All of the VMs were ready within 10 minutes, and disk latency on both the write tier and the capacity tier was almost nothing. Note that in any VDI/SBC environment, users will notice lag when latency exceeds 5 ms, will notice session delays above 20 ms, and may have their sessions drop above 50 ms.
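The latency rule of thumb above can be expressed as a small lookup, which is handy when scanning exported latency samples from a boot storm. This is a minimal sketch: the thresholds come from the text, while the function name, the labels, and the idea of judging a run by its worst sample are illustrative assumptions.

```python
def classify_vdi_latency(latency_ms: float) -> str:
    """Map a disk latency (ms) to the user impact described in the text."""
    if latency_ms > 50:
        return "sessions may drop"
    if latency_ms > 20:
        return "noticeable session delay"
    if latency_ms > 5:
        return "noticeable lag"
    return "acceptable"


# Hypothetical latency samples (ms); judge the run by its worst sample.
samples_ms = [0.8, 1.2, 2.5, 4.1]
worst = max(samples_ms)
print(worst, classify_vdi_latency(worst))  # 4.1 acceptable
```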

The latency numbers are extremely good. Just to make this a bit harder on the setup, I doubled the VM count from 20 to 40 and created an even larger boot storm. With 40 VMs booting at the same time, latency on both the flash tier and the capacity tier remained extremely low.

In the latency and throughput figures, maximum latency continued to sit below 5 ms.

The all-flash vSAN did not break a sweat. I ran out of memory on the servers before I could put any heavy load on the storage.

Conclusion

In conclusion, VMware vSAN is a great software-defined storage add-on. It is simple to set up and delivers great performance. I would highly recommend that anyone building a VDI/SBC environment who is looking for the best performance consider VMware vSAN. As a side note, VMware vSAN requires an additional license, with the exception of VMware Horizon View Premier Edition (a VDI-focused product). If VMware were to include vSAN for customers who have ESXi Standard edition, this could be a game changer. As vendors adopt a software-defined storage model, VMware has the opportunity to completely change the storage landscape by making vSAN the default option for those running VMware virtualization workloads. Even with our relatively small lab environment, we found performance to be excellent for VDI scenarios.