Power Consumption

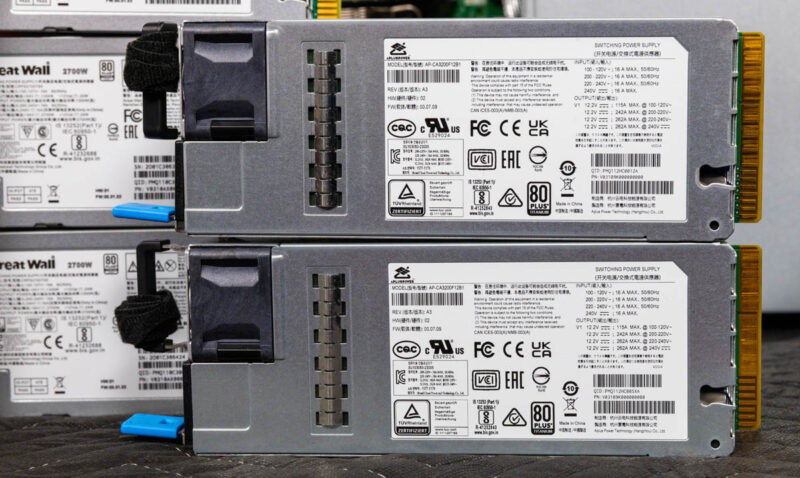

The power situation is neat, with eight power supplies ranging from 2.7kW to 3.2kW each.

The top two PSUs are the 3.2kW power supplies for the 12V portion of the server. The power supplies here all have Titanium ratings, as efficiency is key when running such high-power servers.

The other six power supplies are 2.7kW units with N+1 redundancy for the HGX H200 8-GPU baseboard. These are the 54V PSUs.
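To make the N+1 figure concrete, here is a minimal sketch of the usable 54V capacity when one of the six 2.7kW supplies is held in reserve. The function name `usable_capacity_w` is made up for illustration, and the calculation assumes load is shared evenly and that nameplate ratings are fully available, which real deployments may not achieve.

```python
def usable_capacity_w(psu_count: int, psu_watts: int, redundant: int = 1) -> int:
    """Capacity still available if `redundant` supplies fail (N+R scheme)."""
    if redundant >= psu_count:
        raise ValueError("need at least one non-redundant power supply")
    return (psu_count - redundant) * psu_watts


# Six 2.7kW 54V supplies in N+1, per the review text.
hgx_54v_usable_w = usable_capacity_w(psu_count=6, psu_watts=2700, redundant=1)
print(f"Usable 54V capacity with N+1: {hgx_54v_usable_w / 1000:.1f} kW")
```

Under those assumptions, five supplies carry the GPU baseboard even with one PSU offline.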

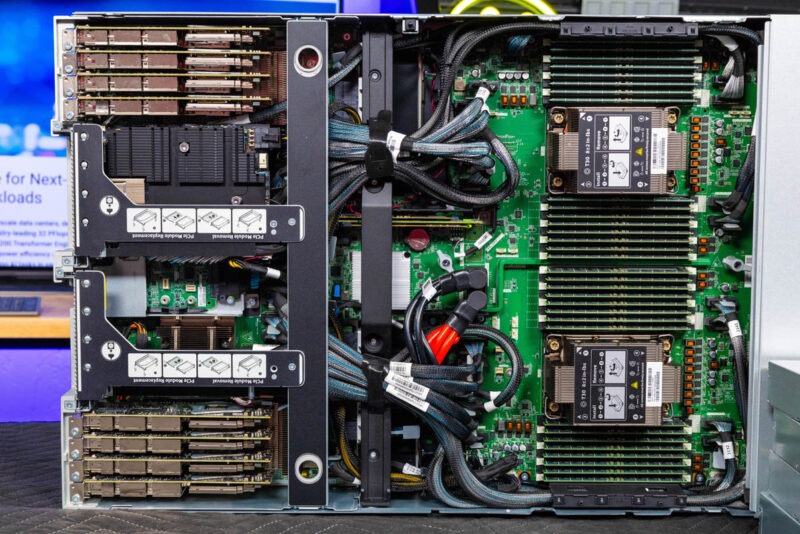

At idle, this server uses over 2kW of power. Under load, it can peak at over 10kW. To give some sense of where that goes, eight 700W GPUs are around 5.6kW, but that does not include the NVLink Switches, PCIe retimers on the baseboard, or the cooling. The CPUs and memory can use over 1kW. There are a few hundred watts more of NICs, PCIe switches, SSDs, and so forth. Then, add another ~15% or so for fans, and these systems use a lot of energy.
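The breakdown above can be sketched as a rough power budget. Only the 700W per GPU, the "over 1kW" for CPUs and memory, and the ~15% fan figure come from the text. The 300W for NICs, switches, and SSDs and the zero default for NVLink Switch and retimer power are placeholder assumptions, so the result is a floor rather than a measurement.

```python
def estimate_power_w(
    gpu_count: int = 8,
    gpu_w: float = 700.0,             # per-GPU power, from the text
    cpu_mem_w: float = 1000.0,        # "over 1kW" in the text; floor used here
    misc_w: float = 300.0,            # assumed value for "a few hundred watts"
    baseboard_extra_w: float = 0.0,   # NVLink Switches/retimers: not quantified in the text
    fan_fraction: float = 0.15,       # "~15%" for fans, applied on top of the subtotal
) -> dict:
    """Return a rough, lower-bound power budget in watts."""
    gpu_total = gpu_count * gpu_w
    subtotal = gpu_total + cpu_mem_w + misc_w + baseboard_extra_w
    return {
        "gpu_total_w": gpu_total,
        "subtotal_w": subtotal,
        "with_fans_w": subtotal * (1 + fan_fraction),
    }


budget = estimate_power_w()
for name, watts in budget.items():
    print(f"{name}: {watts / 1000:.2f} kW")
```

Because several inputs are floors and the baseboard overhead is left at zero, the estimate lands below the 10kW+ peak observed under load; raising `baseboard_extra_w` and `cpu_mem_w` closes that gap.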

For those ready to deploy AI servers, just be prepared for the required power and cooling.

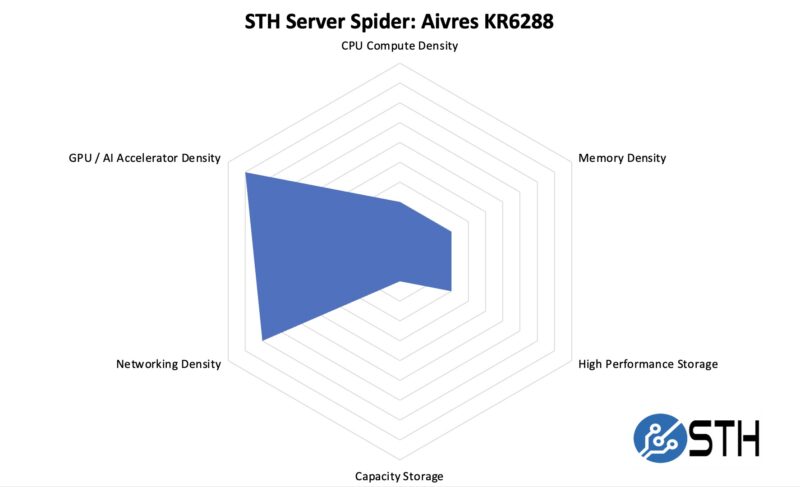

STH Server Spider: Aivres KR6288

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

Modern AI servers optimize for GPU compute as well as networking. We have not seen the “capacity storage” axis matter on AI servers in a long time. In the future, we expect an even bigger push toward the networking and AI accelerator/GPU side with this class of servers.

Final Words

This was the first Aivres server we have tested, and it performed exactly how we would have expected. It is undoubtedly ahead of some of the other NVIDIA HGX H100/H200 servers we have seen in the market.

The star of the show was the NVIDIA H200. With 141GB of HBM3e memory per GPU, we get more bandwidth and memory capacity for larger AI models. As a single GPU, this is not huge, but with eight GPUs, increasing memory capacity by over 75% is fantastic.
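As a quick check on the memory figures, the sketch below totals the HBM3e across eight GPUs and computes the uplift. The 141GB per H200 comes from the text. The 80GB baseline is an assumption that the comparison is against the H100 SXM, which the text does not state explicitly.

```python
H200_GB = 141        # per-GPU HBM3e capacity, from the text
H100_GB = 80         # assumed baseline: H100 SXM with 80GB
GPU_COUNT = 8

h200_total_gb = GPU_COUNT * H200_GB
h100_total_gb = GPU_COUNT * H100_GB
increase_pct = (H200_GB - H100_GB) / H100_GB * 100

print(f"HGX H200 total: {h200_total_gb} GB (~{h200_total_gb / 1000:.2f} TB)")
print(f"HGX H100 total: {h100_total_gb} GB")
print(f"Capacity increase: {increase_pct:.2f}%")
```

With that baseline, the eight-GPU total is a little over 1.1TB, and the per-GPU and per-system increases are the same percentage because the GPU count is unchanged.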

Hopefully, folks like this kick-off to our NVIDIA HGX H200 series. If you are considering an H200 system, it might be worth checking out the video. I went into quite a bit of industry perspective there. If you are reading STH, then you might be up-to-date on all of this. For others who are new to AI servers, it is a great resource. We should also note that Aivres has both the Intel Xeon version that we looked at here and an AMD EPYC version of this server, which we did not test.

One thing is for sure: There is a ton of interest in these big AI servers, and they will only get larger in future generations.

“designed to house two processors, 32 DIMMs, nine or more 400G NICs, and eight GPUs with over 1.1GB of combined HBM3e memory.” Minor typo at the beginning, I think that you mean tb ;)

Aivres seems to be another brand of China’s Inspur, same as Kaytus.

Inspur was forced to exit, since a company it owned couldn’t have a server with H200s. Kaytus was the one that went to Singapore? Aivres was spun out as the US operations and sales, as its own OEM. If it were a Chinese brand owned by Inspur, it couldn’t get the H200s for this server. I’m seeing an H200 server from them, and their business addresses are all in CA, so I don’t think it’s Inspur.

@Honza & @FrankC – From Chinese translation of Wikipedia:

> “In 2015, a branch office “Digital Cloud Co., Ltd.” [ 2 ] was established in Taiwan , becoming a subsidiary of Inspur Information Co., Ltd., and moved into the Baiyang Building in Banqiao District, New Taipei City in April 2017 [ 3 ] . In August 2024, it was renamed Aivres and became an important overseas base of Inspur Information Co., Ltd. Its main business is overseas support for overseas Chinese customers and promotion of China’s new quality productivity.”

You can also Google for “Hewlett Packard Enterprise Co v. Inspur Group Co, U.S. District Court for the Northern District of California, No. 5:24-cv-02220” to see a similar claim that Inspur owns Aivres.
