Aivres KR6288 Internal Overview CPU and NICs

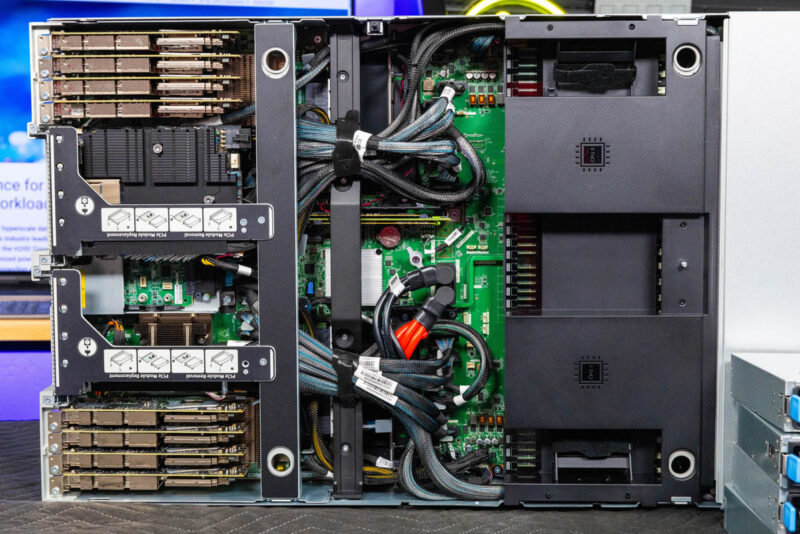

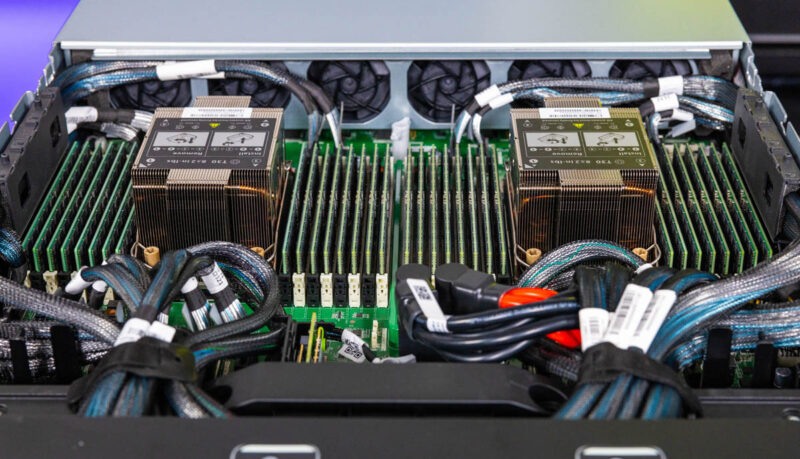

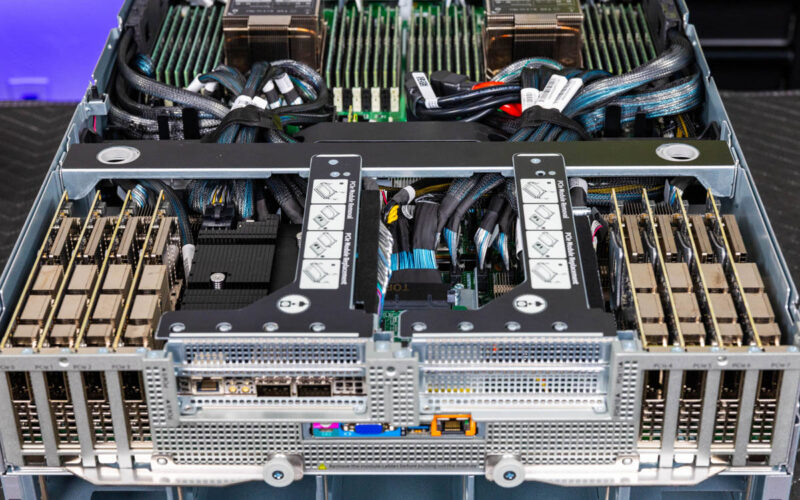

The top of the server looks like a very dense 2U platform in many ways. In the photo below, we will work from right to left. The airflow guide over the CPUs is quite sturdy.

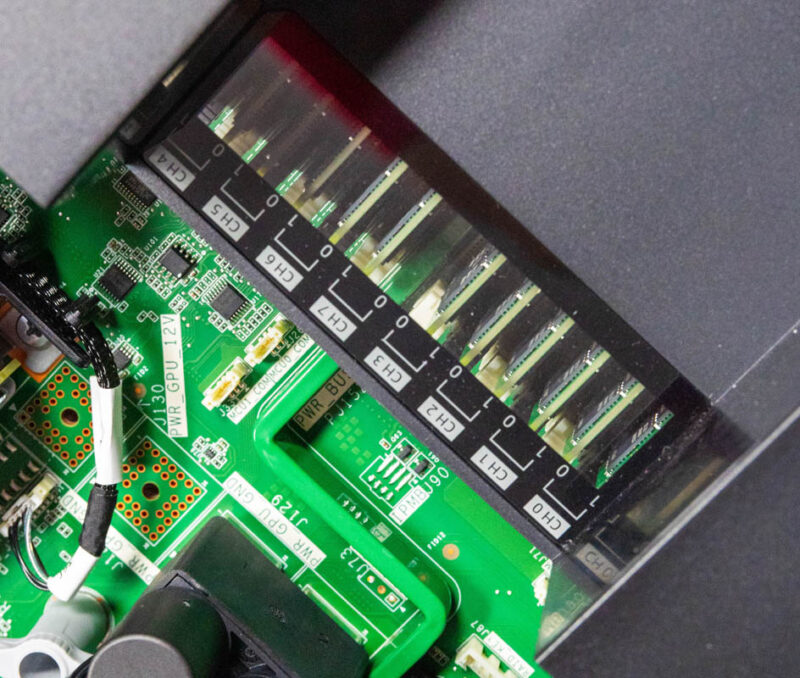

A fun detail is that the airflow guide labels the CPUs and the memory slots below it.

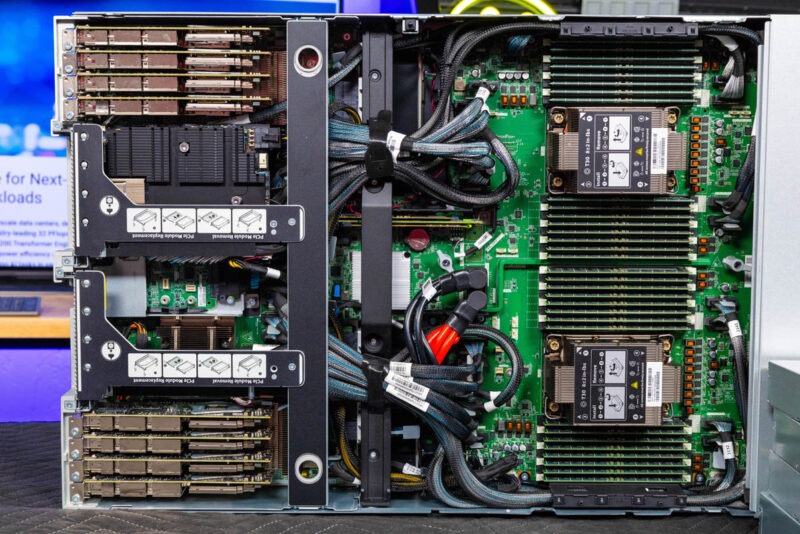

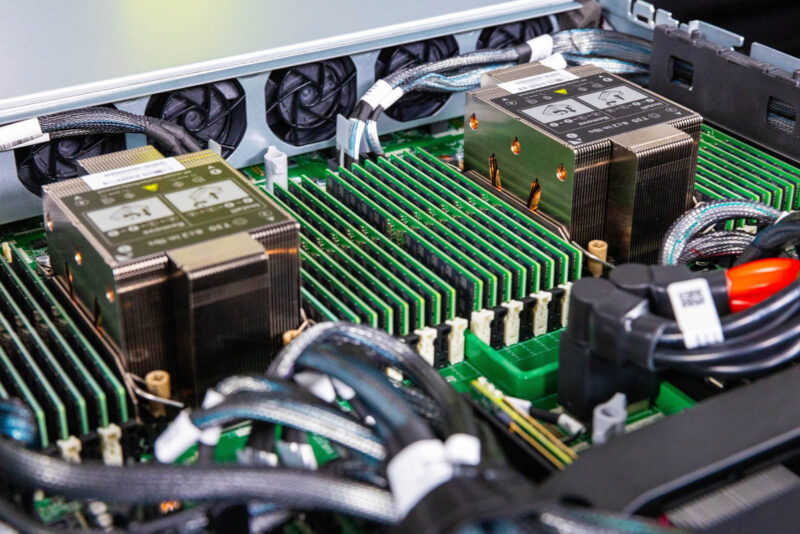

Removing that airflow guide, we can see the system.

Between the motherboard and the front storage is a set of six fan modules. We left the cover on top of these to help ensure the platform’s structural rigidity since it is not made to sit on its side.

Those six fan modules cool the system’s storage, CPUs, memory, NICs, and other components.

In terms of processors, we have dual 4th Generation or 5th Generation Intel Xeon Scalable processors. Each CPU has eight memory channels with two DIMMs per channel, for 16 DIMMs per CPU and 32 DDR5 DIMMs total. That is important because with over 1.1TB of HBM3e memory on the GPUs, getting a DDR5 to HBM ratio of even 2:1 requires a lot of DIMMs.
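To put rough numbers on that ratio, here is a small sketch of the arithmetic. It assumes 141GB of HBM3e per H200 GPU (NVIDIA's published capacity, which matches the "over 1.1TB" figure for eight GPUs). The DIMM sizes are hypothetical options for illustration, not the configuration of the system we tested.

```python
# Sketch of the DDR5-to-HBM ratio arithmetic. DIMM sizes are illustrative only.
HBM_PER_GPU_GB = 141      # assumption: published H200 HBM3e capacity per GPU
NUM_GPUS = 8
SOCKETS = 2
CHANNELS_PER_SOCKET = 8
DIMMS_PER_CHANNEL = 2

TOTAL_DIMMS = SOCKETS * CHANNELS_PER_SOCKET * DIMMS_PER_CHANNEL
TOTAL_HBM_GB = HBM_PER_GPU_GB * NUM_GPUS


def ddr5_to_hbm_ratio(dimm_gb: float) -> float:
    """Ratio of total DDR5 to total HBM with every DIMM slot populated."""
    return (TOTAL_DIMMS * dimm_gb) / TOTAL_HBM_GB


def min_dimm_gb_for_ratio(target_ratio: float) -> float:
    """Smallest per-DIMM capacity (GB) that reaches the target ratio."""
    return target_ratio * TOTAL_HBM_GB / TOTAL_DIMMS


if __name__ == "__main__":
    print(f"DIMM slots: {TOTAL_DIMMS}, total HBM: {TOTAL_HBM_GB}GB")
    for size_gb in (64, 96, 128):  # hypothetical DIMM capacities
        print(f"{size_gb}GB DIMMs -> ratio {ddr5_to_hbm_ratio(size_gb):.2f}:1")
    print(f"Per-DIMM capacity needed for 2:1: {min_dimm_gb_for_ratio(2.0):.1f}GB")
```

Under these assumptions, even with all 32 slots filled, 64GB DIMMs land just short of 2:1, so larger DIMMs are needed to reach it.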

Behind the memory is a dual M.2 riser for boot SSDs, so valuable front-panel SSD slots are not used.

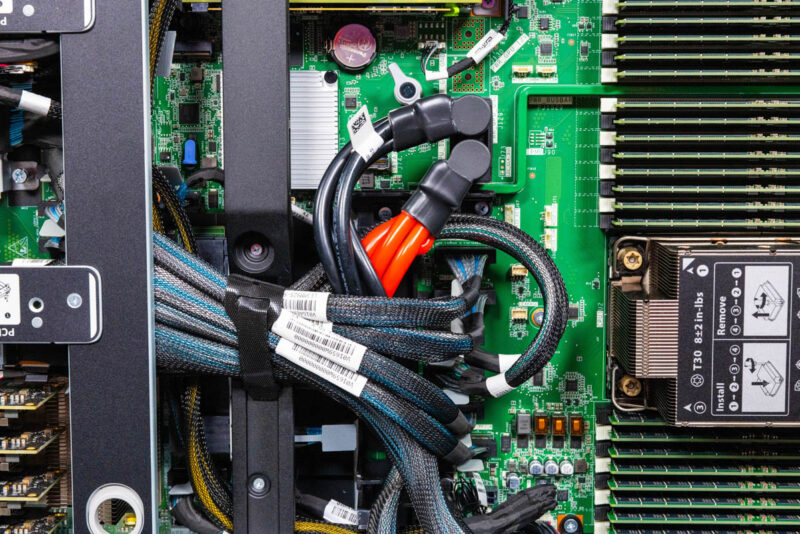

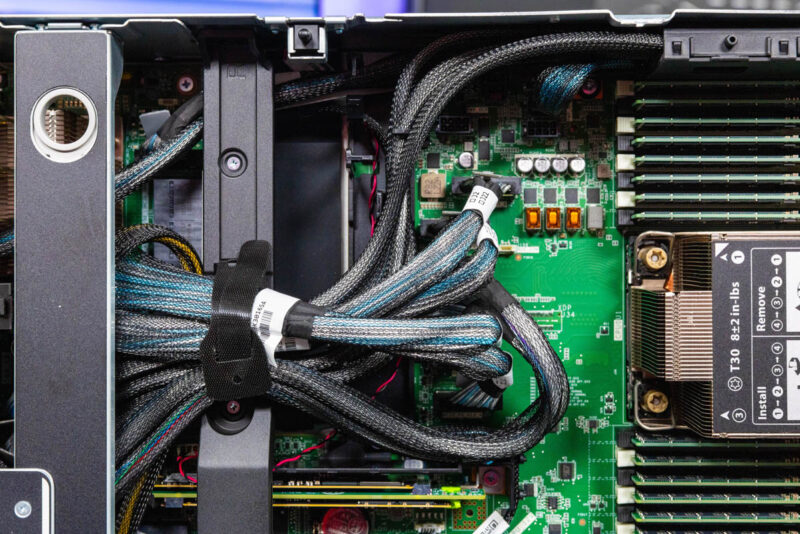

Next to this are big power cables and plenty of MCIO cables carrying PCIe Gen5 connectivity.

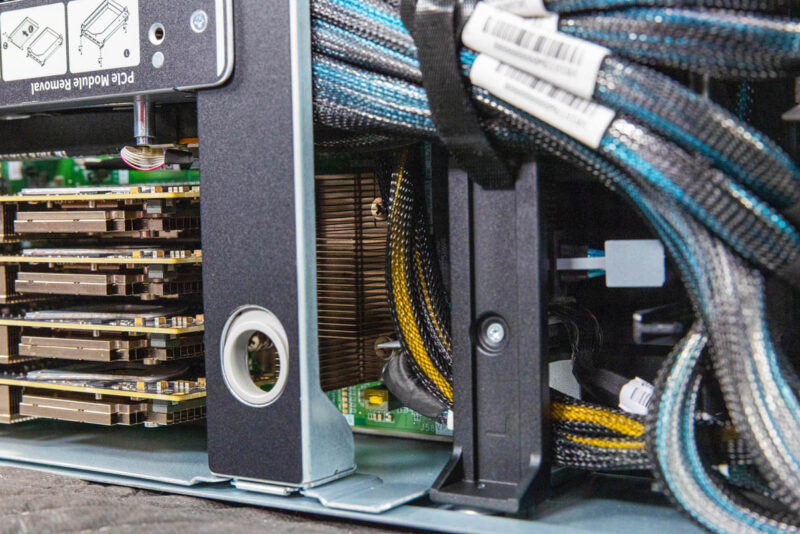

PCIe lanes are a significant challenge with 8x PCIe Gen5 x16 GPUs, 9x PCIe Gen5 x16 NICs, 8x PCIe Gen5 x4 NVMe SSDs, and more. As a result, there are PCIe switches.

Another benefit of the PCIe switches is that they provide a pathway for GPU to NIC communication without traversing the CPU fabric.
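A quick sketch of the lane budget shows why the switches are needed. The device counts and link widths come from the list above. The figure of 80 PCIe Gen5 lanes per socket is an assumption based on Intel's published specifications for these Xeon generations, and the total is a lower bound since it ignores the "and more" devices.

```python
# Rough PCIe lane budget. Device counts and widths are from the article text.
DEVICES = {
    "GPU": (8, 16),       # (count, lanes per device)
    "NIC": (9, 16),
    "NVMe SSD": (8, 4),
}
CPU_LANES_PER_SOCKET = 80  # assumption: published Gen5 lane count per Xeon socket
SOCKETS = 2


def lanes_required(devices: dict) -> int:
    """Sum of endpoint lanes if every device had a dedicated link to a CPU."""
    return sum(count * width for count, width in devices.values())


required = lanes_required(DEVICES)
available = CPU_LANES_PER_SOCKET * SOCKETS
shortfall = required - available

if __name__ == "__main__":
    print(f"Endpoint lanes required: {required}")
    print(f"CPU lanes available:     {available}")
    print(f"Shortfall:               {shortfall}")
```

With these numbers, the endpoints want roughly twice as many lanes as the two CPUs provide, which is the gap the PCIe switches fill by fanning out a smaller number of upstream lanes.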

The other challenge in an extensive system like this is that it has multiple baseboards and backplanes, yet PCIe Gen5 signaling only goes so far. As a result, the MCIO cables are everywhere.

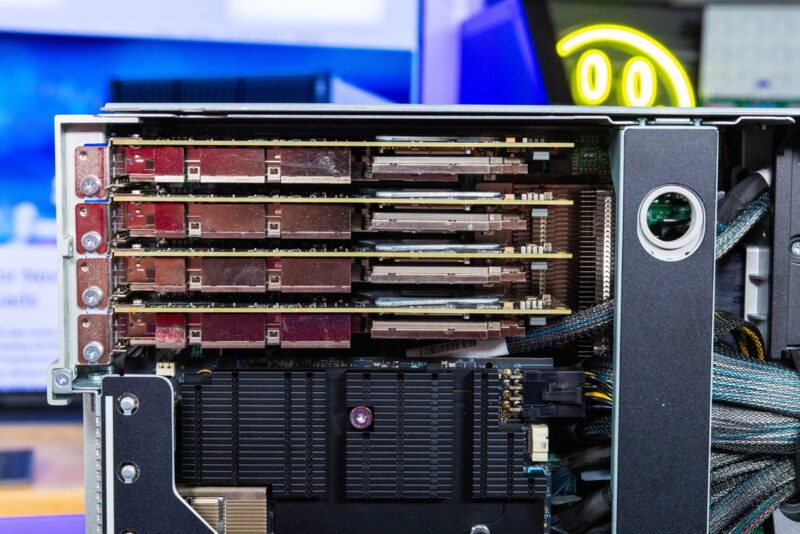

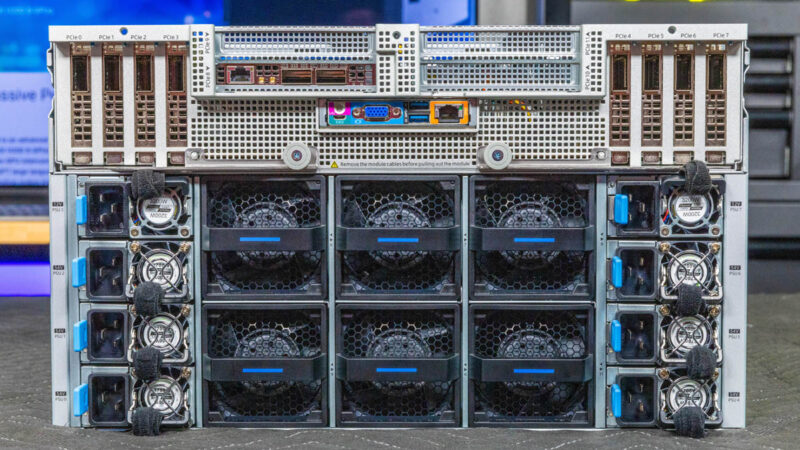

Here is the rear of the system with all of the NICs.

Here are the four NVIDIA ConnectX-7 NICs in the first set.

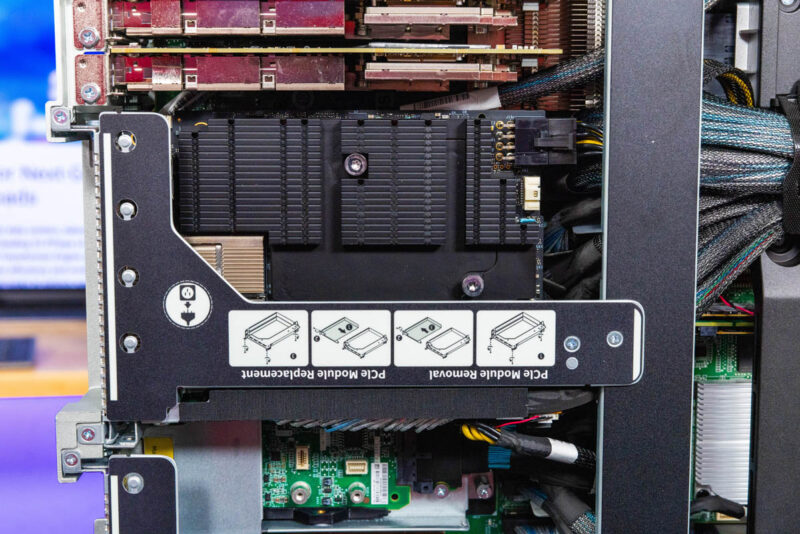

In the center, we have the full-height slots and one NVIDIA BlueField-3 DPU installed. Depending on the model, a BlueField-3 DPU can use up to 150W, so when we say this is a high-power and high-performance server, it is not just the GPUs.

Also in the center, we have the rear I/O and additional slots.

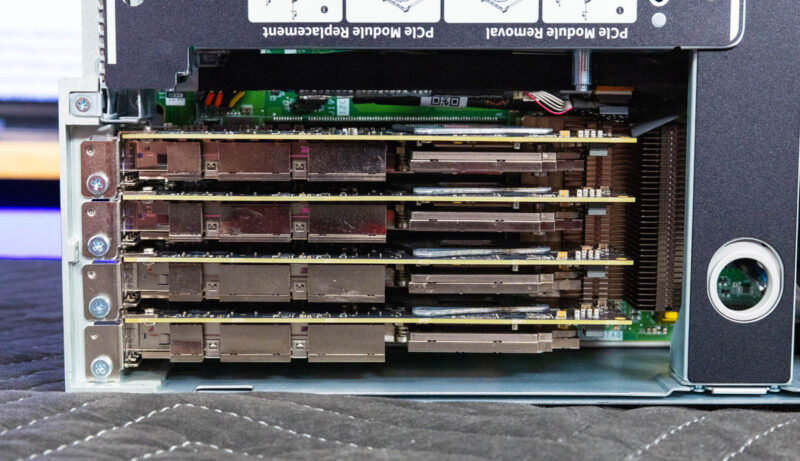

Here is the second set of ConnectX-7 NICs.

To give some perspective on the amount of networking here, we have nine 400G NICs for a total of 3.6Tbps of network bandwidth. If you use a 32-port 100GbE switch, a modern AI server has more bandwidth to the single node than that entire switch.
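The comparison above is simple arithmetic, sketched here using only the figures from the text. It counts nominal line rate per port and ignores protocol overhead.

```python
# Nominal bandwidth comparison: one AI server versus a 32-port 100GbE switch.
NIC_COUNT = 9
NIC_GBPS = 400
SWITCH_PORTS = 32
SWITCH_PORT_GBPS = 100

server_gbps = NIC_COUNT * NIC_GBPS
switch_gbps = SWITCH_PORTS * SWITCH_PORT_GBPS

if __name__ == "__main__":
    print(f"Server NICs:  {server_gbps / 1000:.1f}Tbps")
    print(f"100GbE switch: {switch_gbps / 1000:.1f}Tbps")
    print(f"Server exceeds switch by {server_gbps - switch_gbps}Gbps")
```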

The hardware is cool, but we also had this in our testing colocation racks to give it a spin.

Aivres KR6288 Performance

Over the years, we have tested many AI servers. There are two major categories where the servers can gain or lose performance: cooling and power. The cooling side concerns whether the CPUs, GPUs, NICs, memory, and drives can all run at their full performance levels. The power side matters because the NVIDIA GPUs are often configured at different power levels, sometimes due to air or liquid cooling choices. We are running at the official 700W GPU spec here.

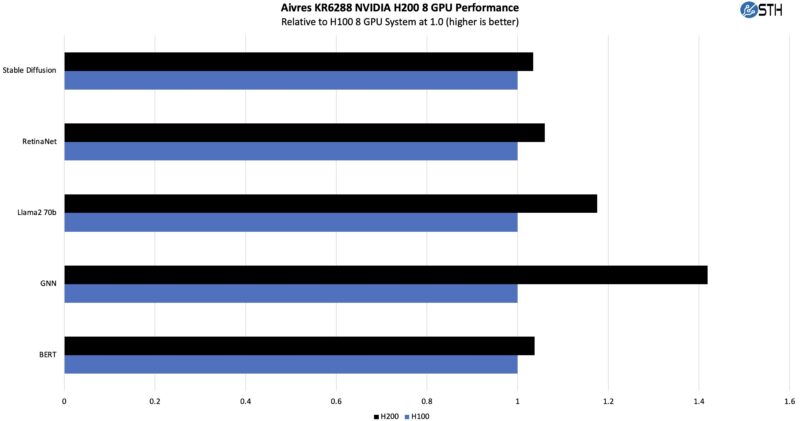

Aivres KR6288 GPU Performance

On the GPU side, NVIDIA has made it very easy to get consistent results across vendors. We were able to jump on a cloud bare metal H100 server and re-run a few tests.

NVIDIA claims the H200 offers up to 40-50% better performance than the H100. This is true when you need more memory bandwidth and capacity. Here is a decent range of tests and results. Of course, we did not run the H200s at 1000W, which would have had a bigger impact on the results.

As a preview, we have two more NVIDIA HGX H200 systems we are testing, and the systems are all within a low single-digit percentage of the performance of each other on these workloads when the GPUs are all set to 700W TDP. It is incredible how NVIDIA has made this relatively low drama.

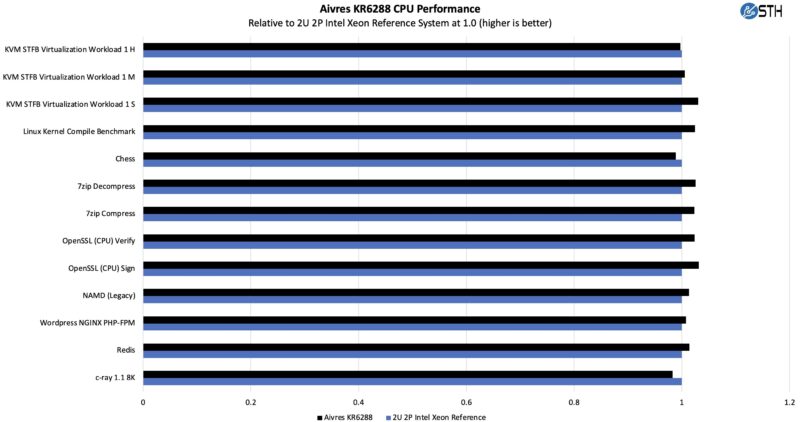

Aivres KR6288 CPU Performance

We ran through our quick test script and compared the Xeon side to our reference 2U platform.

This is more like a normal server variation, which makes sense given that the top of the server is essentially a 2U server.

Next, let us get to the power consumption.

“designed to house two processors, 32 DIMMs, nine or more 400G NICs, and eight GPUs with over 1.1GB of combined HBM3e memory.” Minor typo at the beginning, I think that you mean TB ;)

Aivres seems to be another brand of the Chinese company Inspur, same as Kaytus.

Inspur was forced to exit, since if they owned a company it couldn’t have a server with H200s. Kaytus was the one that went to Singapore? Aivres was spun out as the US operations and sales, as its own OEM. If they were a Chinese brand owned by Inspur, they couldn’t get the H200s for this server. I’m seeing an H200 server from them, and their business addresses are all in CA, so I don’t think it’s Inspur.

@Honza & @FrankC – From Chinese translation of Wikipedia:

> “In 2015, a branch office “Digital Cloud Co., Ltd.” was established in Taiwan, becoming a subsidiary of Inspur Information Co., Ltd., and moved into the Baiyang Building in Banqiao District, New Taipei City in April 2017. In August 2024, it was renamed Aivres and became an important overseas base of Inspur Information Co., Ltd. Its main business is overseas support for overseas Chinese customers and promotion of China’s new quality productivity.”

You can also Google for: “Hewlett Packard Enterprise Co v. Inspur Group Co, U.S. District Court for the Northern District of California, No. 5:24-cv-02220.” to see a similar claim that Inspur owns Aivres.