AIC SB201-TU 2U Internal Hardware Overview

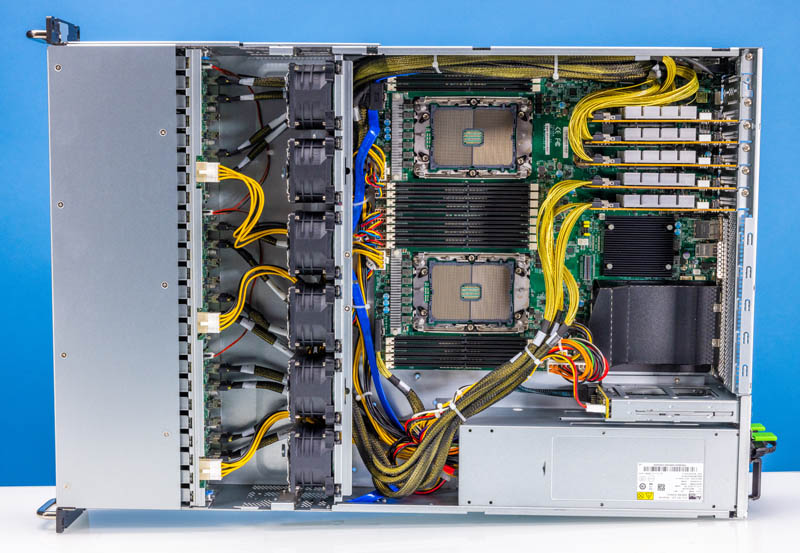

Here is the internal view of the server. From front to rear, we have the storage, the storage backplanes, fans, CPUs and memory, and then I/O expansion.

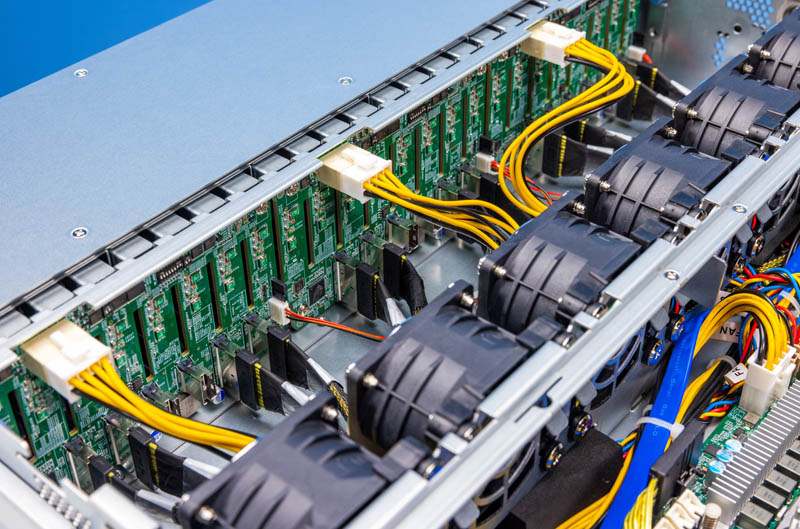

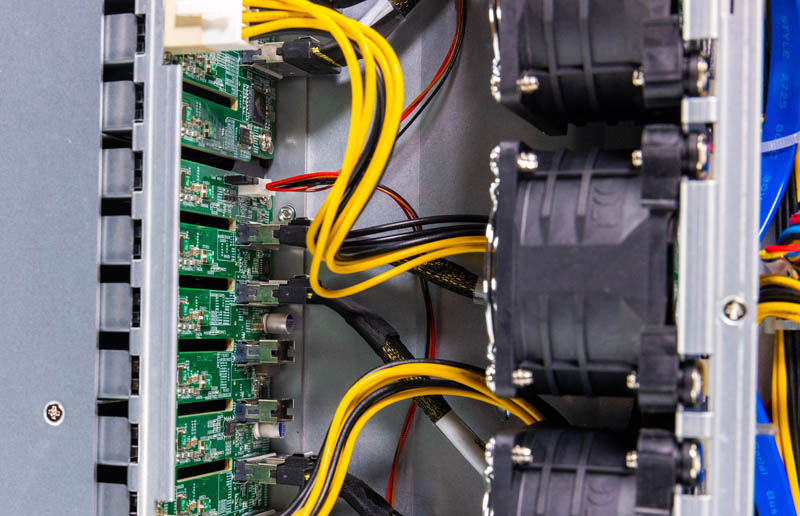

The drive backplane is designed for NVMe or SAS/SATA. Our system is configured for 24x NVMe storage, so all twelve connectors are PCIe Gen4 x4.
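To put the all-NVMe configuration in context, here is a minimal lane-and-bandwidth sketch. It assumes each of the 24 bays gets its own PCIe Gen4 x4 link wired to the CPUs (no PCIe switch), 64 PCIe Gen4 lanes per 3rd Gen Xeon Scalable socket, and 128b/130b encoding. The function name `link_gbytes_per_s` is hypothetical, and the figures are raw link rates per direction, not measured drive throughput.

```python
# Rough PCIe link-bandwidth arithmetic (per direction, before protocol overhead).
# Assumptions: Gen3 = 8 GT/s, Gen4 = 16 GT/s, both using 128b/130b encoding;
# every front NVMe bay gets a dedicated x4 link straight from the CPUs.

GT_PER_S = {3: 8.0, 4: 16.0}  # raw transfer rate per lane, in GT/s


def link_gbytes_per_s(gen: int, lanes: int) -> float:
    """Approximate usable bandwidth of one PCIe link in GB/s, per direction."""
    encoding_efficiency = 128 / 130
    # Each transfer moves one bit per lane; divide by 8 to convert bits to bytes.
    return GT_PER_S[gen] * encoding_efficiency * lanes / 8


drives = 24
lanes_per_drive = 4
ice_lake_lanes_per_socket = 64  # PCIe Gen4 lanes per Ice Lake Xeon socket
sockets = 2

drive_lanes = drives * lanes_per_drive
cpu_lanes = sockets * ice_lake_lanes_per_socket
per_drive = link_gbytes_per_s(4, lanes_per_drive)

print(f"Lanes needed for {drives} x4 NVMe drives: {drive_lanes}")
print(f"CPU lanes available: {cpu_lanes}, left for NIC and slots: {cpu_lanes - drive_lanes}")
print(f"Per-drive link: {per_drive:.2f} GB/s, aggregate: {per_drive * drives:.1f} GB/s")
```

Under these assumptions, the drives consume 96 of the 128 CPU lanes, and each x4 Gen4 link tops out at roughly 7.9 GB/s per direction. The same helper covers the Gen3 x2 M.2 slots described later via `link_gbytes_per_s(3, 2)`.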

The drive backplanes also have fairly large slots for airflow between the drives and into the rest of the system.

Here are the six fans. These are not in hot-swap carriers, but that is a lot of cooling for a 24-bay chassis.

The airflow guide is a hard plastic unit that was easy to install and remove.

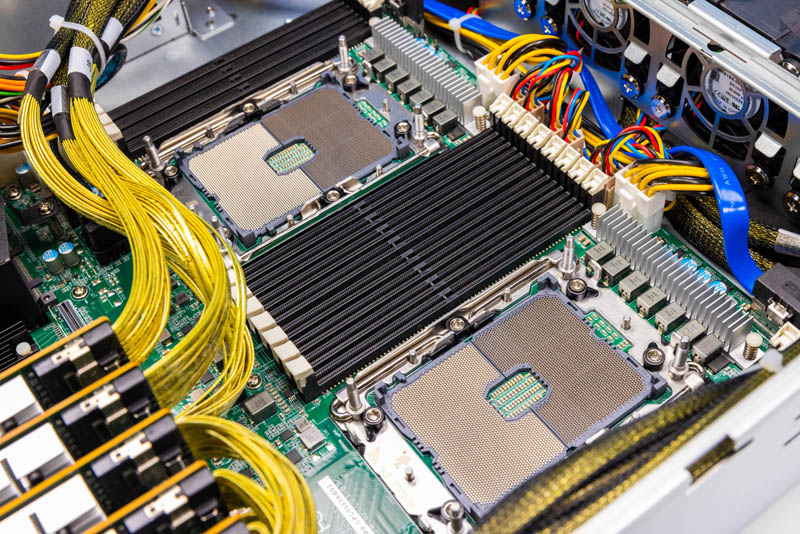

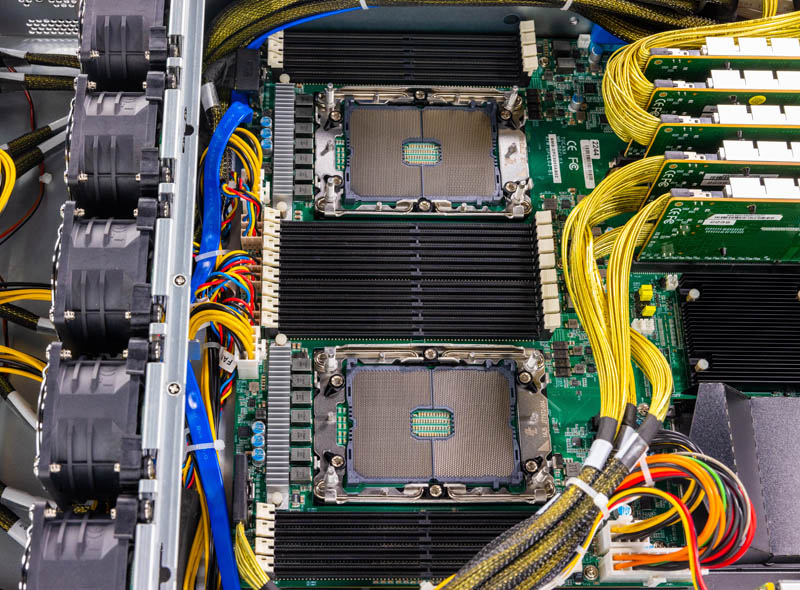

That airflow guide is there to cool two LGA4189 sockets. These are for 3rd Generation Intel Xeon Scalable CPUs, codenamed Ice Lake.

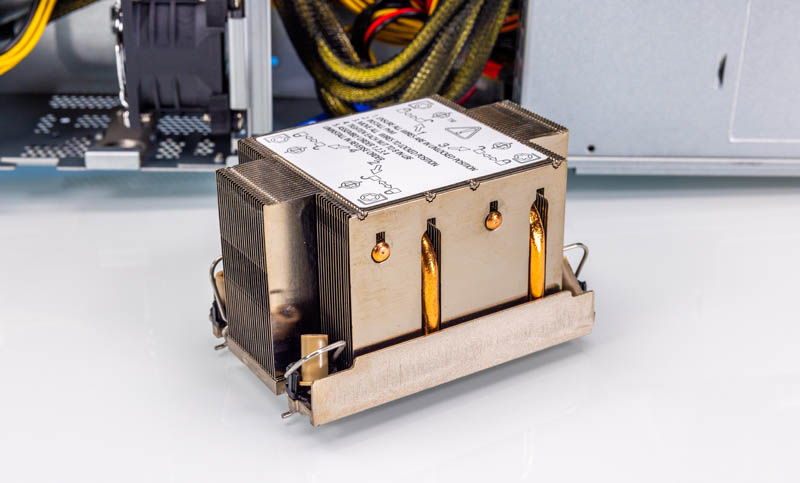

Here is a shot of the 2U coolers that come with the server for the CPUs. The motherboard supports CPUs up to 270W TDP, but the base configuration is set up for 165W TDP CPUs. We realized this when we tried using 270W Xeon Platinum 8368 CPUs. CPUs in that class are not needed for NVMe storage servers, and Intel has plenty of SKUs at 165W or below.

Speaking of below, photographing the eight DDR4-3200 DIMM slots per socket, sixteen in total, was challenging given all of the cabling. AIC has another version of the motherboard with two DIMMs per channel, but that version uses a proprietary motherboard form factor.
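As a rough illustration of what this memory layout provides, the sketch below computes theoretical peak DRAM bandwidth. It assumes eight DDR4 channels per socket (one DIMM per channel, matching the sixteen slots) and a 64-bit data bus per channel with ECC bits excluded. The helper name `ddr_peak_gbytes_per_s` is hypothetical, and these are theoretical peaks, not benchmark results.

```python
# Theoretical peak DRAM bandwidth for a 1 DIMM-per-channel, dual-socket layout.
# Assumptions: 8 DDR4 channels per socket, DDR4-3200 (3200 MT/s),
# 8-byte (64-bit) data bus per channel; ECC bits are not counted.


def ddr_peak_gbytes_per_s(mega_transfers: int, bus_bytes: int = 8) -> float:
    """Peak bandwidth of one memory channel in decimal GB/s."""
    return mega_transfers * bus_bytes / 1000


sockets = 2
channels_per_socket = 8
dimms_per_channel = 1

per_channel = ddr_peak_gbytes_per_s(3200)
per_socket = per_channel * channels_per_socket
total_dimms = sockets * channels_per_socket * dimms_per_channel

print(f"DIMM slots: {total_dimms}")
print(f"Per channel: {per_channel:.1f} GB/s, per socket: {per_socket:.1f} GB/s, "
      f"system: {per_socket * sockets:.1f} GB/s")
```

Setting `dimms_per_channel = 2` models the two-DIMMs-per-channel board: it doubles the slot count to 32, but because the channel count is unchanged, the peak bandwidth figure stays the same.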

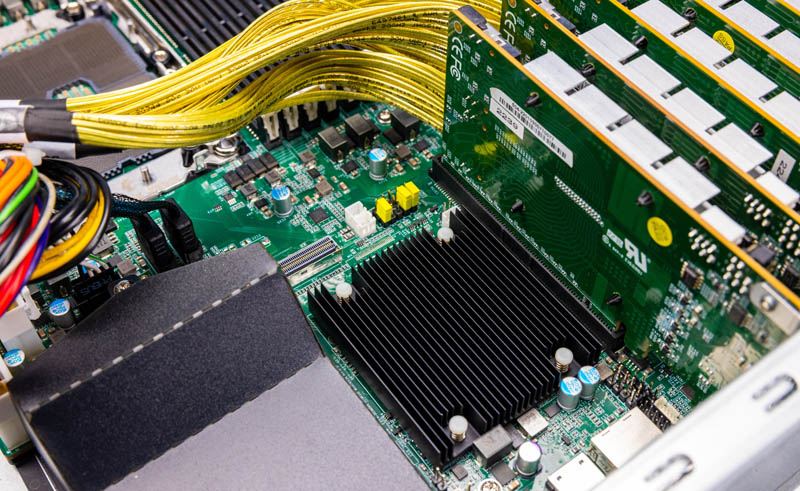

Behind the CPUs and memory, we have features like the OCP NIC 3.0 slot, which serves as the primary networking slot.

The OCP NIC 3.0 slot has an airflow guide that we had a love-hate relationship with. It was effective, but it was significantly harder to install than the hard plastic CPU airflow guide. Then, roughly one time in five, it would just pop into place perfectly. Perhaps it was just us, but this felt like something that could be improved.

Next to the OCP NIC 3.0 slot is the Lewisburg PCH, specifically an Intel C621A.

Then there are the PCIe retimers: three x16 retimers and two x8 retimers. These are needed to ensure signal integrity between the PCIe slots and the front 2.5″ NVMe bays. The shorter runs from the front of the motherboard do not need retimers. Retimers are something we started to see more of in the PCIe Gen4 generation, and in the PCIe Gen5 generation we will see even more of them.
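The retimer count above implies a specific number of retimed lanes, and a minimal tally makes that concrete. The split between retimed and non-retimed drive bays in this sketch rests on a hypothetical assumption that every retimed lane feeds a front bay at x4; we did not trace the cabling to verify this, and some retimed lanes may serve other devices.

```python
# Tally of retimed PCIe lanes: three x16 retimers and two x8 retimers.
# Hypothetical assumption: every retimed lane feeds a front NVMe bay at x4,
# and the remaining bays use the shorter, non-retimed runs.

retimers = {16: 3, 8: 2}  # link width -> number of retimers of that width
retimed_lanes = sum(width * count for width, count in retimers.items())

drives_total = 24
lanes_per_drive = 4
retimed_drives = retimed_lanes // lanes_per_drive  # bays behind retimers (assumed)
direct_drives = drives_total - retimed_drives  # bays on short runs (assumed)

print(f"Retimed lanes: {retimed_lanes}")
print(f"Drives behind retimers (assumed): {retimed_drives}")
print(f"Drives on shorter, non-retimed runs (assumed): {direct_drives}")
```

The 64-lane total follows directly from the retimer list; the 16/8 bay split is only one plausible reading under the stated assumption.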

One feature we did not photograph, simply because we did not want to disturb the cabling, is a pair of M.2 slots: below this bouquet of cables sit two PCIe Gen3 x2 M.2 slots for additional storage.

Next, let us get to the system’s block diagram.

