Today we are going to look at one of the more interesting 2U server platforms we have seen recently, the AIC SB201-TU. This server has 24x PCIe Gen4 NVMe bays, making it one of the densest solutions out there. What should pique our readers’ interest is the simplicity of the design and how AIC is building this system. With that, let us get to the hardware.

AIC SB201-TU 2U External Hardware Overview

The system itself is a 2U server. AIC uses purple drive trays to denote its NVMe storage. The 24x 2.5″ NVMe bays on the front of the server are the key feature, since this is a storage-focused platform.
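For a rough sense of scale, the sketch below estimates the theoretical front-panel bandwidth. It assumes each of the 24 bays is wired as a full PCIe Gen4 x4 link. That is typical for 2.5″ NVMe, but it is an assumption here, not something confirmed for this chassis. It uses the Gen4 signaling rate of 16 GT/s per lane and 128b/130b encoding, and it ignores packet and protocol overhead, so real drives will land below these figures.

```python
# Theoretical PCIe Gen4 bandwidth for a 24-bay NVMe front panel.
# ASSUMPTION: every bay is wired as a full PCIe Gen4 x4 link.

GEN4_GT_PER_LANE = 16.0          # PCIe Gen4 signaling rate, GT/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding (Gen3 and Gen4)


def lane_gbytes_per_s(gt_per_s: float = GEN4_GT_PER_LANE) -> float:
    """Usable GB/s per lane, per direction, before packet/protocol overhead."""
    return gt_per_s * ENCODING_EFFICIENCY / 8  # 8 bits per byte


def aggregate_gbytes_per_s(drives: int, lanes_per_drive: int = 4) -> float:
    """Theoretical ceiling for `drives` NVMe devices, each on `lanes_per_drive` lanes."""
    return drives * lanes_per_drive * lane_gbytes_per_s()


if __name__ == "__main__":
    print(f"one x4 drive: {aggregate_gbytes_per_s(1):.2f} GB/s")
    print(f"24 x4 drives: {aggregate_gbytes_per_s(24):.1f} GB/s")
```

Under those assumptions, each x4 link tops out at roughly 7.9 GB/s per direction, or about 189 GB/s across all 24 bays. Whether the platform can actually deliver that depends on how many CPU lanes reach the bays and how they are routed, which this sketch does not model.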

The 2.5″ bays are tool-less, making a system like this much easier to service than older generations that used four screws to hold each drive in.

Just a quick thank you to Kioxia for some of the CD6/CM6 drives that we used to test this server.

On the left side of the chassis, we have standard features like the LED status lights and the power button.

On the right side of the chassis, you may see something that looks mundane at first. The USB Type-A port on the side of the chassis is one of the more interesting designs we have seen on STH.

That USB 3 port needs connectivity to the motherboard. At the same time, the entire front of the chassis is dedicated to 2.5″ drives and cooling, so routing a cable is not straightforward. AIC’s solution: route a long USB cable in a channel outside the chassis.

Here is the blue USB cable transitioning between the CPU area and the channel on the side of the chassis. It is a small feature, but it shows some straightforward engineering.

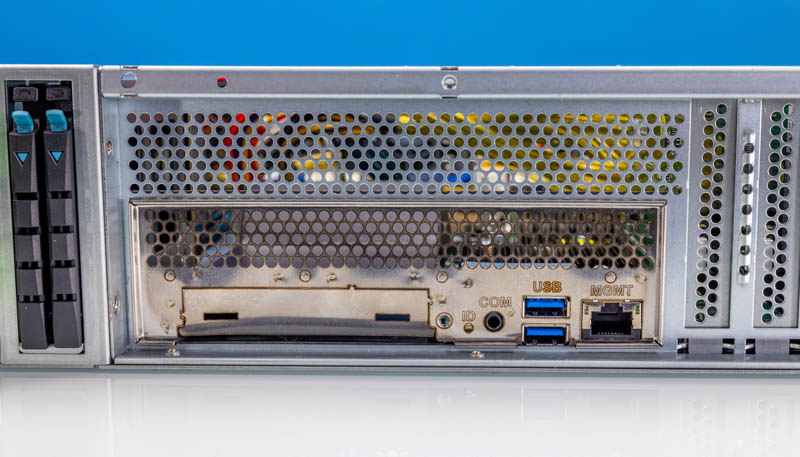

On the rear of the chassis, we have power supplies, 2.5″ boot drive bays, rear I/O, and then a number of I/O expansion slots.

Redundant 1.2kW 80 PLUS Platinum power supplies power the system. Anyone planning to use higher-power CPUs and SSDs may want to look at higher-wattage power supply options.
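To make that sizing question concrete, here is a minimal power-budget sketch. Because the supplies are redundant, a single 1.2kW unit has to be able to carry the whole system on its own. The CPU count, every per-component wattage, and the 80% headroom policy below are hypothetical placeholders, not AIC or Kioxia specifications; substitute datasheet numbers for the actual parts.

```python
# Minimal power-budget sketch for a redundant (1+1) PSU setup:
# one 1.2kW supply must be able to carry the full load by itself.
# All wattages, the CPU count, and the headroom policy are HYPOTHETICAL placeholders.

PSU_WATTS = 1200         # rating of a single supply (from the article)
HEADROOM_PERCENT = 80    # hypothetical policy: keep sustained load <= 80% of one PSU


def power_budget(cpus: int, cpu_watts: int, ssds: int, ssd_watts: int,
                 other_watts: int) -> tuple[int, int, bool]:
    """Return (estimated load, allowed load, fits) in watts."""
    load = cpus * cpu_watts + ssds * ssd_watts + other_watts
    limit = PSU_WATTS * HEADROOM_PERCENT // 100
    return load, limit, load <= limit


if __name__ == "__main__":
    # Hypothetical configurations; "other" lumps together fans, memory, NIC, boot drives, etc.
    configs = {
        "modest": (2, 120, 24, 14, 150),
        "high-power": (2, 270, 24, 25, 200),
    }
    for name, cfg in configs.items():
        load, limit, fits = power_budget(*cfg)
        print(f"{name}: {load} W of {limit} W allowed -> {'OK' if fits else 'over budget'}")
```

With these made-up numbers, the modest build estimates 726 W against a 960 W allowance, while the high-power build estimates 1340 W and exceeds what one supply should sustain. That gap is the kind of case where a higher-wattage supply becomes worth considering.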

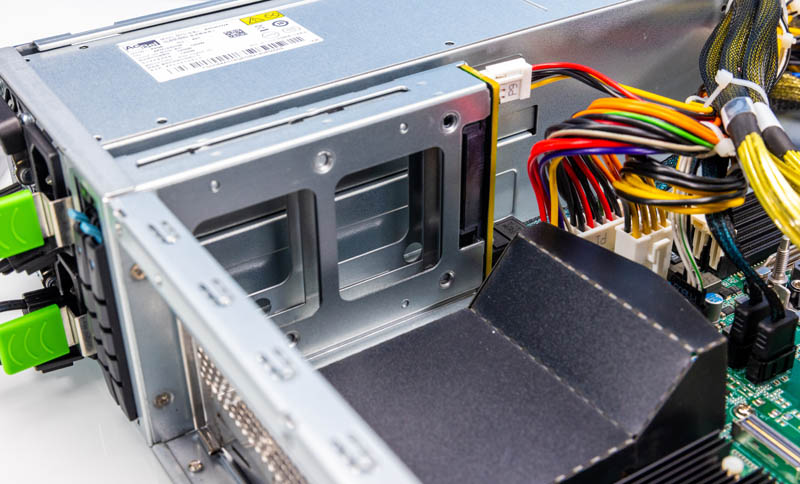

The two boot drive bays on the rear are for slim 7mm 2.5″ SATA drives. Here is the cage that holds both.

Next to that area is the rear I/O. The top of this section is an airflow channel, and the bottom holds the rear I/O ports, which are a bit different from what we usually see. There are two USB ports, an IPMI management port, and a COM port. What is missing is onboard networking; instead, networking is provided through an OCP NIC 3.0 slot.

If you are looking for the VGA port, it is all the way on the right side of the rear panel.

It may look like there are many low-profile slots available, but in this server they are all filled, as we will see in our internal overview.

Next, let us look inside the system.

Sure would be nice to see more numbers, less turned into baby food. For instance, where are the absolute GB/s numbers for a single SSD, then scaling up to 24? Or even: since 24 SSDs are profoundly bottlenecked on the network, you might claim that this is an IOPS (metadata) box, but that wasn’t measured.

The whole retimer card and cable assembly looks very fragile and expensive; it’s hard to believe this was the best solution they could come up with.

The VGA port placement is a mystery. They use a standard motherboard I/O backplate, so they could have used a cable to place the VGA port there (like low-end, low-profile consumer GPUs usually do).

The case looks a little too long for the application. Very interesting server; not sure if it’s in a good way, but at least the parts look somewhat standard.

Reviews are objective but useful

We get 100GBps read and 75GBps write speeds on a 24-bay all-NVMe server. Ultra-high-throughput server with network connectivity up to 1200GbE.

Would love for you to take a look.