AIC JBOX (J5010-02) Internal Hardware Overview

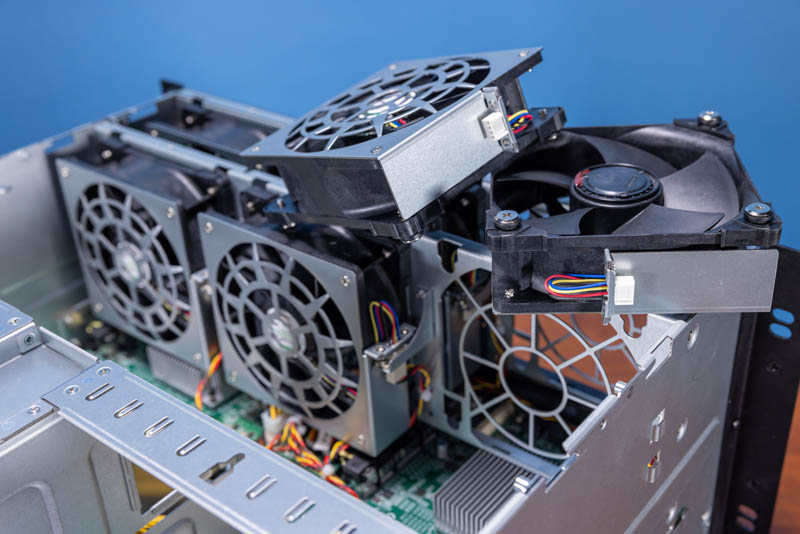

Inside the system, we are going to start at the front, specifically with the fans that we saw in our external overview.

While it may look like there are three fans from the front view, there are actually six large fans in the system.

These fans are tool-less and hot-swappable, which we were frankly surprised to see. This is not the fanciest design, but it has one great touch: rubber grommets around the fan screws that dampen vibration. While this may look like a data-center-only box, small features like these will help it fit into data closets as well.

We will get to the PCIe sleds in a bit, but on the serviceability note, each sled has two thumbscrews and levers for easy removal. This is an important concept as we start to look inside the system.

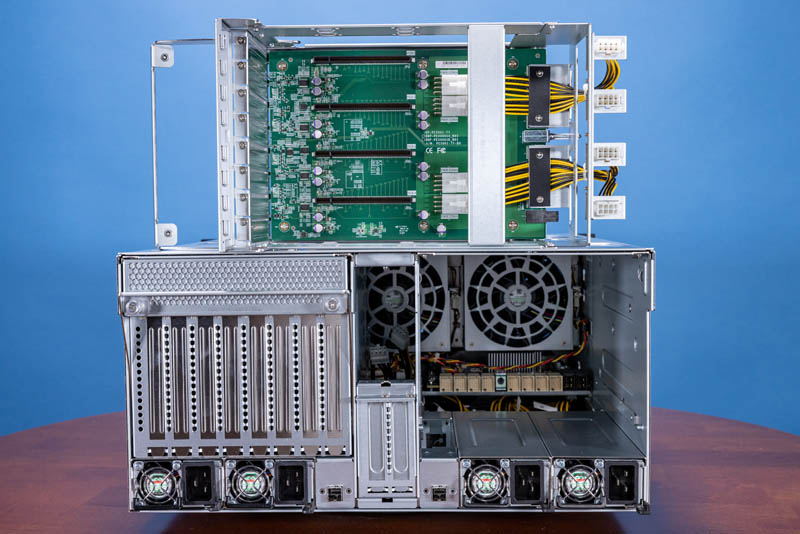

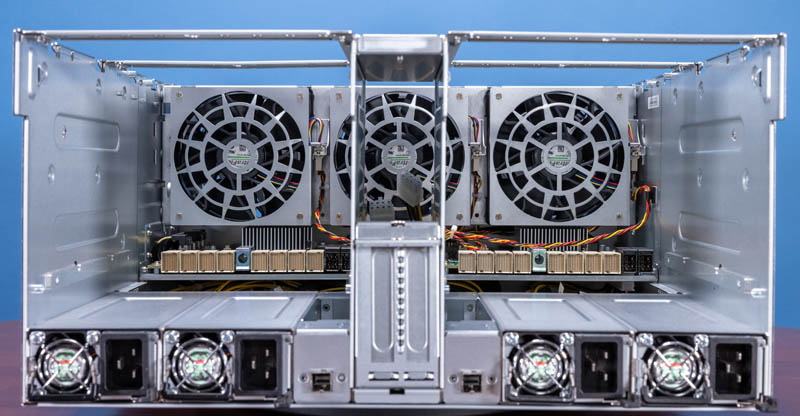

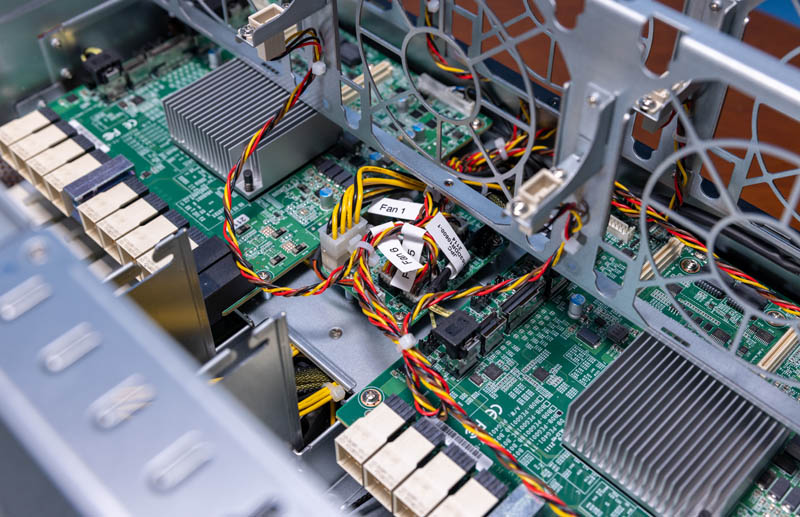

With both full-height 4x PCIe Gen4 x16 sleds and the lids removed, we can see inside the system and just how much of it the fans cover.

The other interesting point is that AIC is using high-density connectors for data (white) and power (black). You can also see the guide pin hole in the middle of the white connectors. Something that may not be obvious from online pictures is that this JBOX is designed to make servicing GPUs, FPGAs, NICs, SSDs, and other accelerators easy.

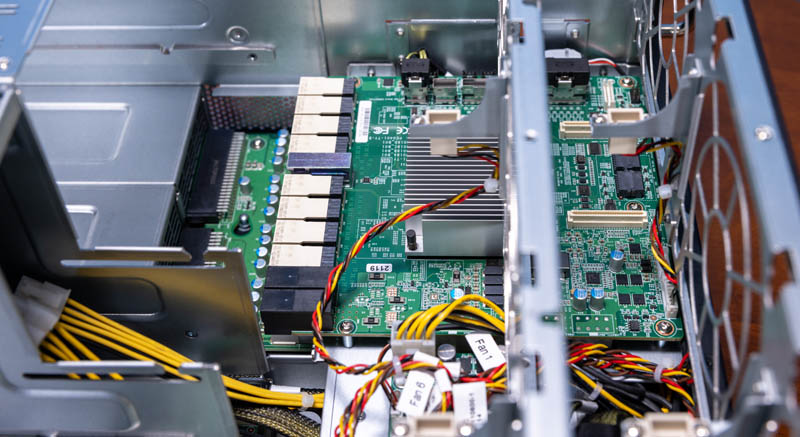

Here is a top view with the PCIe sleds and fans removed. There is a theme we hope is coming through: this system is essentially two separate hemispheres sharing a chassis and fans.

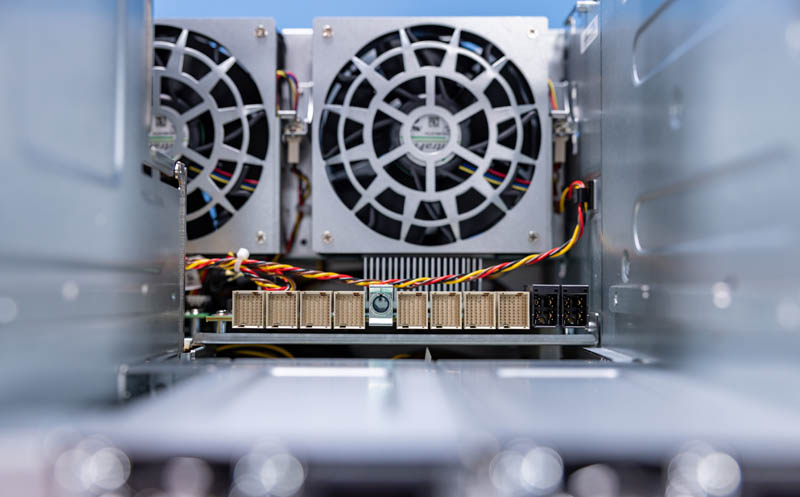

At the heart of the JBOX are two Broadcom Atlas PEX88096 PCIe Gen4 switches, each on its own PCB. We can see the high-density white connectors on the left of the photo below. There are also cabled connectors for other ports on the far edge.

In the center is power distribution for components such as the fans. One nice touch is that these cables are labeled; without labels, this area would be very hard to service.

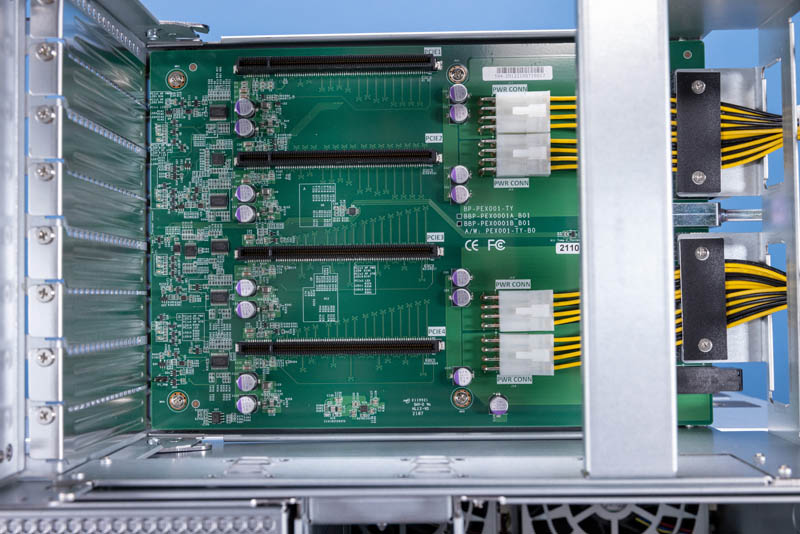

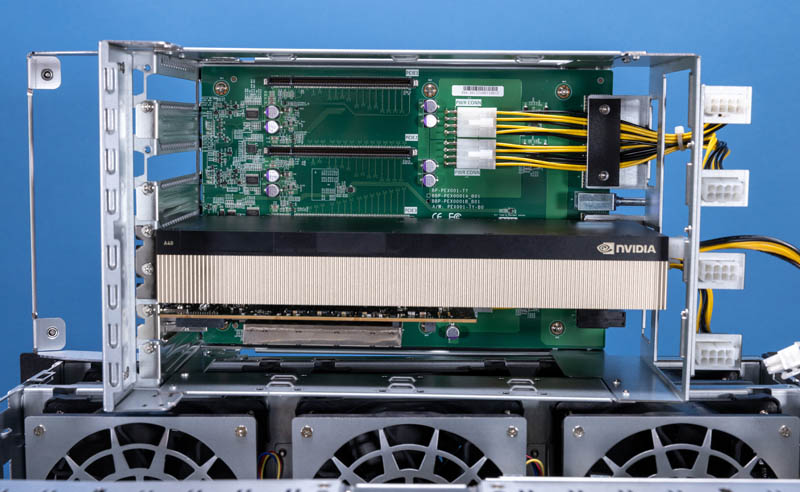
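To put the two PEX88096 switches in context, here is a rough lane-budget sketch for one hemisphere. It assumes, without confirmation from AIC, that each switch serves one sled of four x16 slots and that every leftover lane goes to the cabled host or expansion ports. The 96-lane figure is the switch's nominal lane count, and the bandwidth helper uses the PCIe Gen4 rate of 16 GT/s per lane with 128b/130b encoding. The function names are hypothetical and only for illustration.

```python
# Hypothetical lane-budget sketch for one "hemisphere" of the JBOX.
# Assumption (not stated by AIC): one PEX88096 switch serves one sled,
# and every lane not used by the sled slots goes to upstream/cabled ports.

SWITCH_LANES = 96      # Broadcom PEX88096: 96 PCIe Gen4 lanes
SLOTS_PER_SLED = 4     # four slots per sled
LANES_PER_SLOT = 16    # each slot is x16

GEN4_GT_PER_S = 16.0   # PCIe Gen4 raw rate per lane (GT/s)
ENCODING = 128 / 130   # 128b/130b line encoding efficiency


def lane_budget(switch_lanes: int, slots: int, lanes_per_slot: int) -> tuple[int, int]:
    """Return (downstream lanes used by the slots, lanes left over)."""
    downstream = slots * lanes_per_slot
    if downstream > switch_lanes:
        raise ValueError("slots need more lanes than the switch provides")
    return downstream, switch_lanes - downstream


def link_gb_per_s(lanes: int) -> float:
    """Approximate one-direction bandwidth of a Gen4 link in GB/s."""
    return lanes * GEN4_GT_PER_S * ENCODING / 8


downstream, remaining = lane_budget(SWITCH_LANES, SLOTS_PER_SLED, LANES_PER_SLOT)
print(f"downstream lanes: {downstream}, remaining: {remaining}")
print(f"x16 Gen4 link: ~{link_gb_per_s(16):.1f} GB/s per direction")
```

Under these assumptions, 64 of the 96 lanes feed the slots and 32 remain. If all 32 went to two x16 host links (again an assumption), the four slots would share upstream bandwidth at roughly 2:1, with each x16 link carrying about 31.5 GB/s per direction.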

Let us now move to the PCIe sleds. Each sled has four PCIe Gen4 x16 slots. Helping to keep things serviceable, these are not locking slots, and that is great for this type of chassis. Each full-height x16 slot is double width. One will also notice the retention bar on the right above the power cables, which ensures large and heavy PCIe cards stay put. This bar uses four screws, two at each end, and is one of two items in this chassis that we wish were tool-less. The other is the I/O brackets, which also use screws rather than a tool-less mechanism. Overall, though, this was surprisingly easy to service.

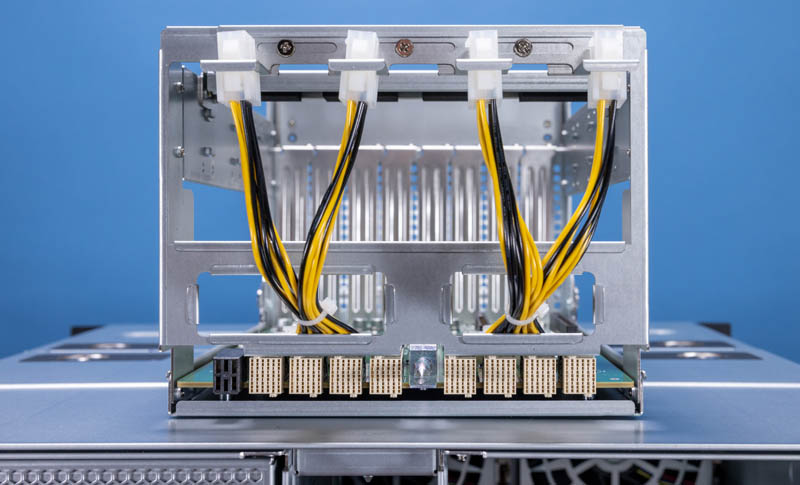

Here is the connector view of a PCIe sled, and it shows an important concept. There are eight data connectors plus a single power connector that mates with the chassis. What AIC is doing here, and this is a great feature, is pushing power to the PCIe sled and then using cabled connections within the sled to power each of the cards. This allows the entire sled to be pulled out without worrying about any cabled connection to the chassis. It also makes it easy to power a variety of devices. This is a small detail, but in practice it works surprisingly well.

Here is a PCIe sled with two different cards so you can see the basic formula. The empty slots are at the top. A single-slot NVIDIA BlueField-2 DPU sits in the bottom slot, leaving extra space below it. In the middle is an NVIDIA A40 300W GPU, and one can see how snugly this GPU fits in the sled.
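Because every card in a sled draws power through that one sled-level connector, a back-of-the-envelope demand check is useful. This hedged sketch assumes a hypothetical sled filled with four 300W A40 cards, all drawing full board power at the same time, with power delivered on a 12V rail. AIC has not stated the connector rating, so this estimates only what the cards would ask for, not what the connector can supply. The `sled_power` helper is hypothetical.

```python
# Rough per-sled power demand through the single sled power connector.
# Assumptions (not from AIC): all cards draw full board power at once,
# and power is delivered on a 12 V rail.

RAIL_VOLTS = 12.0


def sled_power(card_watts: list[float]) -> tuple[float, float]:
    """Return (total watts, amps on the 12 V rail) for the cards in one sled."""
    total = sum(card_watts)
    return total, total / RAIL_VOLTS


# Hypothetical configuration: four 300 W NVIDIA A40 GPUs filling one sled.
watts, amps = sled_power([300.0] * 4)
print(f"{watts:.0f} W total, {amps:.0f} A at {RAIL_VOLTS:.0f} V")
```

Under these assumptions, a fully loaded sled would need about 1200W, or roughly 100A at 12V, through a single connector, which is why the connector's rating matters for dense GPU builds.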

Let us now show some interesting PCIe configurations from just a day of using the box.
