At FMS 2022, we saw the AIC J2024-04. We normally do not take extra time to cover 24-bay NVMe JBOF chassis, but this one is different because it uses the NVIDIA BlueField DPU. Explaining why takes a bit of historical context, but this is also a successful platform worth noting.

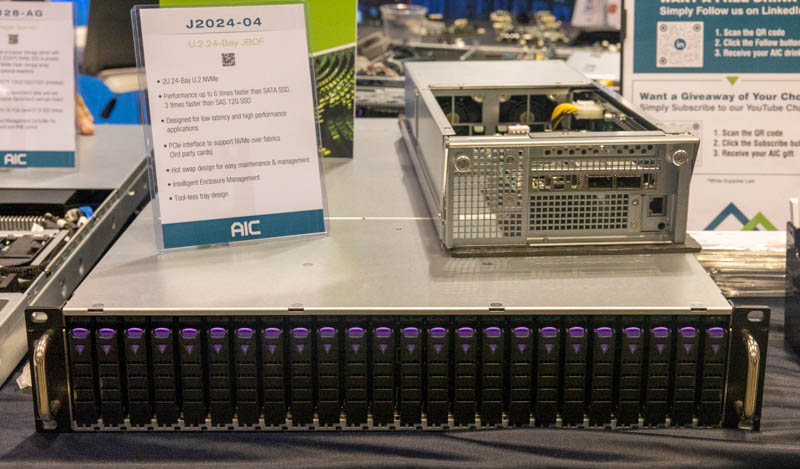

AIC J2024-04 2U 24x NVMe SSD JBOF Powered by NVIDIA BlueField DPU

The AIC J2024-04 is a 2U 24-bay NVMe SSD JBOF. The idea of having 24x 2.5″ drive bays in a 2U chassis is well established, as is using such a chassis as a JBOF or JBOD. What is different comes down to the control nodes.

The AIC J2024-04 itself can support a number of different controller cards. There are two controller trays for redundancy. In the version at FMS 2022, AIC had the NVIDIA BlueField DPUs installed.

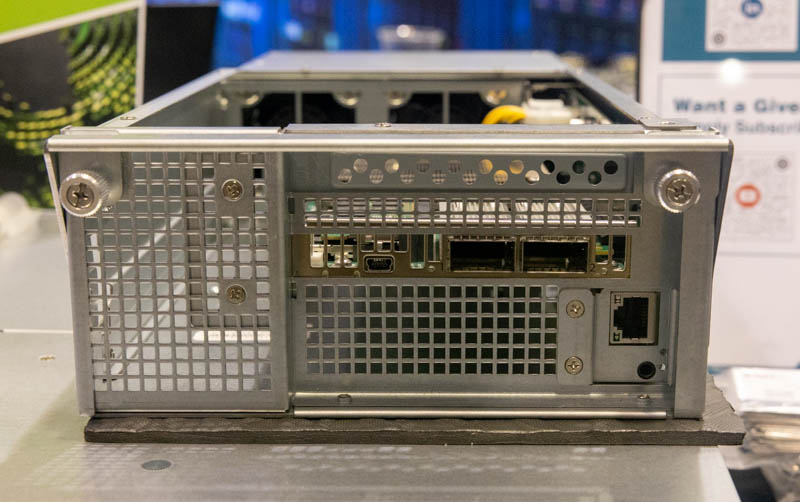

One can see the management port on the tray and then the two networking cages and a USB console port on the PCIe card.

These are NVIDIA BlueField DPUs. These DPUs are the first-generation cards we covered in Mellanox BlueField BF1600 and BF1700 4 Million IOPS NVMeoF Controllers before the NVIDIA acquisition of Mellanox. The older BlueField cards can have up to 16 cores (versus 8 on BlueField-2) and have additional memory bandwidth. While these are ConnectX-5 based parts, they were reportedly very good for storage markets before BlueField-2 went in a more networking direction.

Although this is not the box, VAST Data told us it uses AIC and BlueField as part of its solution.

Final Words

While most of the industry focus is on BlueField-2 and the transition to BlueField-3, it is interesting to remember that the first-generation BlueField is still a product being deployed. It was cool to see AIC bring this out to the show floor so folks could see it. Our hope is that BlueField-3 is the generation where BlueField becomes more consistent in features, as BF1 to BF2 and BF2 to BF3 are fairly significant jumps.

So is the DPU the PCIe root and the host of the drives? And how do the drives connect off of the card, via a 16 or 32 lane PCIe connector to a backplane? Since it’s a dual controller system (for redundancy as stated), are the drives dual port? So that’d be 4 PCIe lanes per drive, split 2 to each controller, which is still 48 lanes per controller, so I’m assuming there is a PCIe switch on the backplane board that the DPU plugs into.
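The lane arithmetic in that question can be checked with a short sketch. It assumes dual-port drives that split an x4 link into two x2 links, one per controller, which the article does not confirm. The 16 and 32 lane figures are the connector widths the question itself raises, and the function names are illustrative only.

```python
def lanes_per_controller(drives: int, lanes_per_drive: int, controllers: int) -> int:
    """Drive-facing lanes each controller must reach, assuming every drive
    splits its lanes evenly across the controllers (hypothetical dual-port layout)."""
    if lanes_per_drive % controllers != 0:
        raise ValueError("lanes_per_drive must divide evenly across controllers")
    return drives * (lanes_per_drive // controllers)


def needs_fan_out(required_lanes: int, uplink_lanes: int) -> bool:
    """True when the drives need more lanes than the controller's connector offers,
    which implies a PCIe switch (or similar fan-out) between them."""
    return required_lanes > uplink_lanes


# 24 bays, x4 per drive, two controller trays (figures from the article and the question).
required = lanes_per_controller(drives=24, lanes_per_drive=4, controllers=2)
print(f"Lanes needed per controller: {required}")

# Connector widths mentioned in the question; the actual width is not stated in the article.
for uplink in (16, 32):
    print(f"x{uplink} uplink needs fan-out: {needs_fan_out(required, uplink)}")
```

Under those assumptions, each controller would need 48 drive-facing lanes, more than either a 16 or 32 lane connector provides, which is consistent with the guess that a PCIe switch sits on the backplane.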

Tell us the important details so we can all learn more about DPU use cases. I’d love to know what kind of throughput these setups have with a DPU as a root. Maybe an article could be done as a detailed write-up of the nitty-gritty of that, how to set it up (like actual instructions), and throughput testing. That would be a huge resource for the community.