AIC HA401-TU Power Consumption

In terms of power consumption, this system has an 80Plus Platinum 1.2kW power supply. There is also a 1.6kW option. That would be our choice for a higher-power configuration, since we were running very close to 1.2kW with the Intel Xeon Gold 6330 SKUs we used.
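Running "very close" to a PSU's rating is easiest to reason about with a simple power budget: sum the estimated draw of each component and compare it to the supply's capacity. The sketch below only illustrates that arithmetic. The 205W figure is the published TDP of the Xeon Gold 6330. The CPU count, the drive, DIMM, NIC/HBA and fan figures, and the `Component`, `total_load` and `headroom` names are all hypothetical placeholders, not measurements from this review. Also note that TDP is not the same as wall power.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    watts: float  # estimated worst-case draw per unit, in watts (assumption)
    count: int = 1


def total_load(components: list[Component]) -> float:
    """Sum the estimated draw of every component in the chassis."""
    return sum(c.watts * c.count for c in components)


def headroom(psu_watts: float, load_watts: float) -> float:
    """Fraction of PSU capacity left over; a negative value means overloaded."""
    return (psu_watts - load_watts) / psu_watts


# Placeholder figures: only the 205W Xeon Gold 6330 TDP is a published spec.
# The CPU count and every other value here are hypothetical estimates.
budget = [
    Component("Xeon Gold 6330 (TDP)", 205, count=4),
    Component("3.5in SAS HDD", 8, count=24),
    Component("DDR4 RDIMM", 4, count=16),
    Component("NIC / SAS HBA", 15, count=4),
    Component("chassis fan", 10, count=6),
]

load = total_load(budget)
for psu in (1200, 1600):
    print(f"{psu}W PSU: {load:.0f}W estimated load, {headroom(psu, load):.1%} headroom")
```

With these made-up inputs the estimate lands just under 1.2kW, while the 1.6kW unit leaves roughly a quarter of its capacity spare. The point is the method, not the specific numbers.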

At idle, expect this system to draw over 250W and likely over 300W, although that depends heavily on configuration. If the server is used for its intended purpose as a high-availability storage node, the SAS drives and controllers draw a lot of power, on top of the CPUs, memory, and NICs that will also be active.

Something to keep in mind is that the high-availability design means this system uses more power than a non-high-availability 24-bay storage node, since two controller nodes are powered on behind the same set of drives.

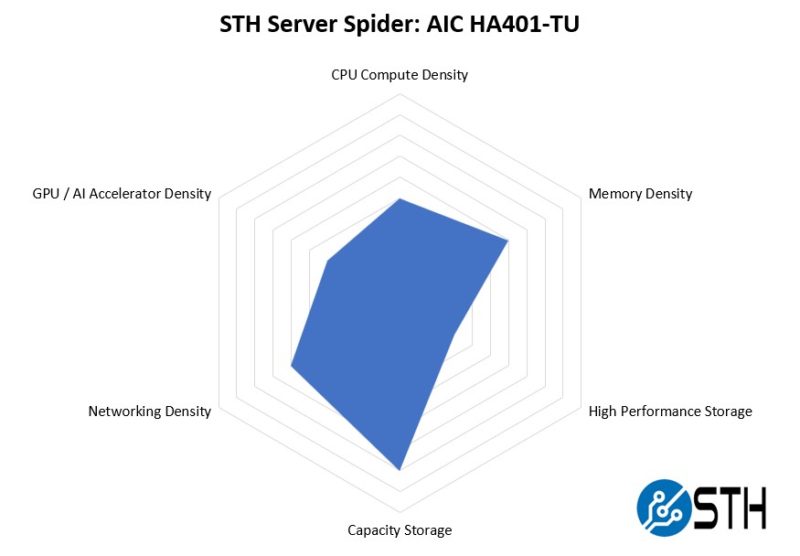

STH Server Spider: AIC HA401-TU

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to give a quick visual depiction of the types of parameters that a server is targeted at.

This is a really interesting system. It has more computing power than many single-node 4U storage systems as well as more networking. At the same time, we cannot say this is the highest-density solution because there are denser 4U single-node storage servers like the AIC SB407-TU 60-bay storage server that we reviewed.

Still, for a high-availability 4U server, this packs a solid amount of density.

Final Words

Overall, there is a lot going on in this system. While we have seen the Toucana motherboard before, it was great to see additions like this awesome board stack on the front of the node and the high-availability hot-swap fittings.

To us, the main reason to purchase a system like this is if you want to design and/or deploy a high-availability storage appliance. While we spent most of this review discussing high availability within the context of the server itself, the multitude of rear PCIe slots means that one can also add external disk shelves to increase the capacity of the SAS3 storage array connected to the two controllers. Having two controllers accessing the same set of disks across multiple chassis has been the way high-availability storage servers have been made for years.
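The dual-controller idea above can be illustrated with a toy model: each controller normally owns some disk pools, both can physically reach every disk, and when one controller stops sending heartbeats its peer takes over the orphaned pools. This is a hypothetical sketch, not how the HA401-TU or any particular HA software works. The `Controller` and `SharedDiskPair` classes, the pool names, and the 5-second timeout are all assumptions. Real shared-SAS designs also fence the failed node (for example with SCSI-3 persistent reservations) before importing its pools, which this model only notes in a comment.

```python
from dataclasses import dataclass, field


@dataclass
class Controller:
    name: str
    last_heartbeat: float = 0.0
    owned_pools: set[str] = field(default_factory=set)

    def heartbeat(self, now: float) -> None:
        self.last_heartbeat = now


class SharedDiskPair:
    """Two controllers that can both reach the same SAS disks (hypothetical model)."""

    def __init__(self, a: Controller, b: Controller, timeout: float = 5.0):
        self.a, self.b = a, b
        self.timeout = timeout  # seconds without a heartbeat before takeover

    def peer(self, c: Controller) -> Controller:
        return self.b if c is self.a else self.a

    def alive(self, c: Controller, now: float) -> bool:
        return now - c.last_heartbeat <= self.timeout

    def check(self, now: float) -> list[str]:
        """Move pools from a controller whose heartbeat lapsed to a live peer."""
        events = []
        for c in (self.a, self.b):
            survivor = self.peer(c)
            if not self.alive(c, now) and self.alive(survivor, now) and c.owned_pools:
                # A real system would fence the failed node here before importing.
                for pool in sorted(c.owned_pools):
                    events.append(f"{pool}: {c.name} -> {survivor.name}")
                survivor.owned_pools |= c.owned_pools
                c.owned_pools = set()
        return events


a = Controller("node-a", owned_pools={"pool0"})
b = Controller("node-b", owned_pools={"pool1"})
pair = SharedDiskPair(a, b, timeout=5.0)
a.heartbeat(0.0)
b.heartbeat(0.0)
b.heartbeat(10.0)  # node-a has stopped sending heartbeats
events = pair.check(10.0)
print(events)  # ['pool0: node-a -> node-b']
```

Giving each controller its own pools keeps both nodes doing useful work in normal operation, while shared disk access is what lets either one take over the other's pools on failure.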

Overall, this was a great server to review. When we look to build our next-gen hosting cluster, we are debating whether to use high-availability or clustered storage. If we go high-availability, this will be a server we look at to use in the deployment.

Where do the 2x10GbE ports go? Are those inter-node communication links through the backplane?

Being somewhat naive about high-availability servers, I imagine they are designed to continue running in the event of certain hardware faults. Is there any way to test failover modes in a review like this?

Somehow the “awesome board stack on the front of the node” makes me wonder whether the additional complexity improves or hinders availability.

Are there unexpected single points of failure? How well does the vendor support the software needed for high availability?

Nice to see a new take on a Storage Bridge Bay (SBB). The industry has moved towards software-defined storage on isolated servers. There, the choice is either to have huge failure domains or more servers. Good for sales, bad for customers.

What we really need are similar NVMe solutions, especially now that NVMe is catching up to spinning rust for capacity. CXL might take us there.

Page 2:

In that slow, we have a dual port 10Gbase-T controller.

“slow” should be “slot”.

This reminds me of the Dell VRTX in a good way: those worked great as edge / SMB VMware hosts, providing many of the redundancies of a true HA cluster at a much lower overall platform cost by avoiding the cost of iSCSI or FC switching and, even more significantly, the SAN vendor premiums for SSD storage.

“What we really need are similar NVMe solutions, especially now that NVMe is catching up to spinning rust for capacity. CXL might take us there.”

Totally agree. Honestly, at this point it seems that a single host with NVMe is sufficient for many organizations' remote office / SMB workloads. The biggest pain is OS updates and other planned downtime events. Dual compute modules with access to shared NVMe storage would be a dream solution.