AIC HA401-TU Compute Nodes

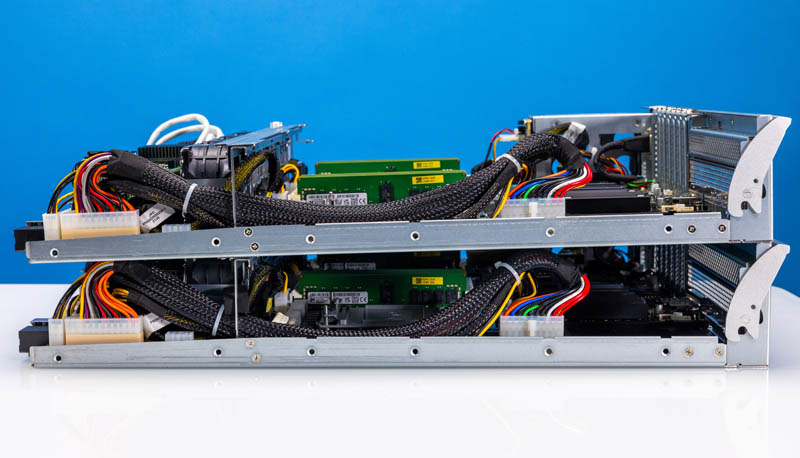

Here is the side view of the compute node stack outside of the chassis. From what we can tell, these nodes are identical.

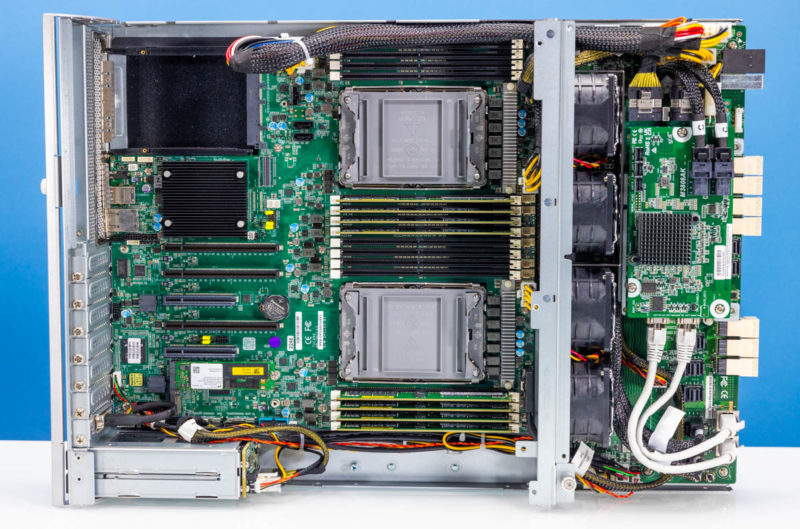

Each node has two CPUs, memory, NICs, boot drives, and power delivery paths. One notable design choice is that the fans are on the sleds instead of being part of the chassis. That means the fans can be serviced by pulling one of the two redundant controllers while the remaining controller and the drives stay in the rack.

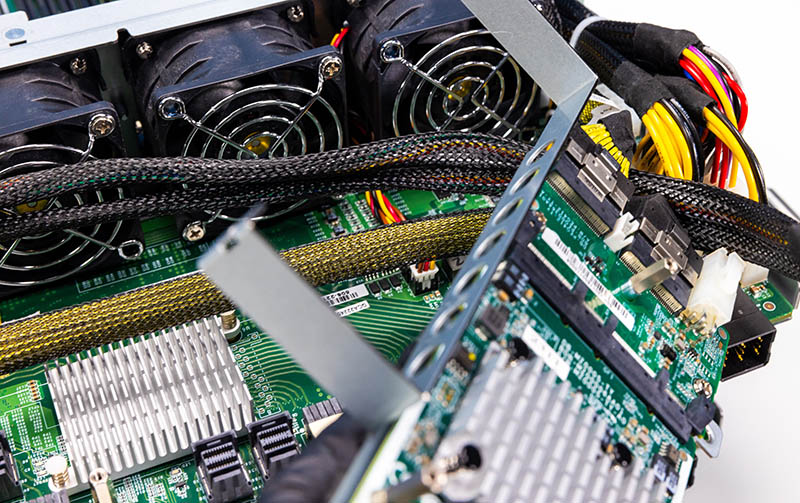

Here is a look at the rear of the nodes. As one can see, there are a lot of cables, including Ethernet cables.

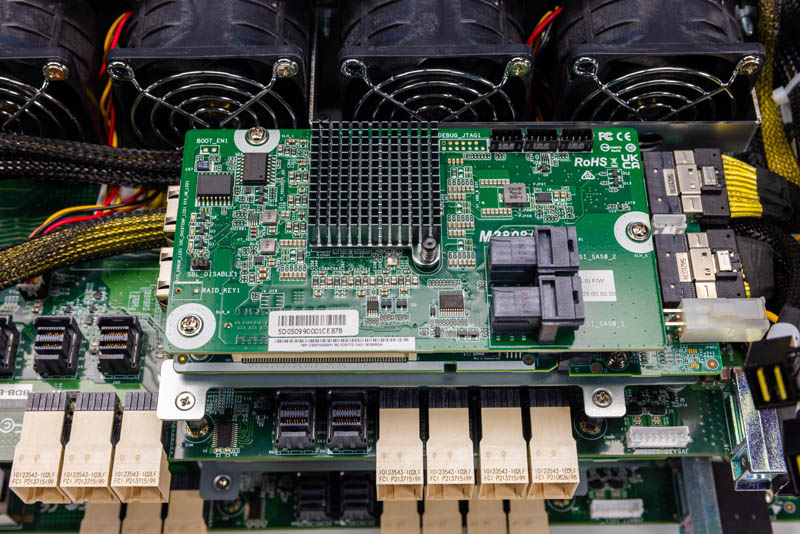

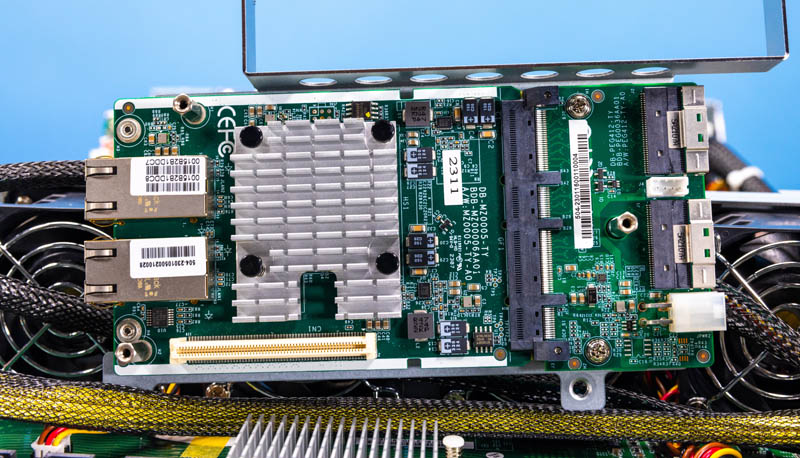

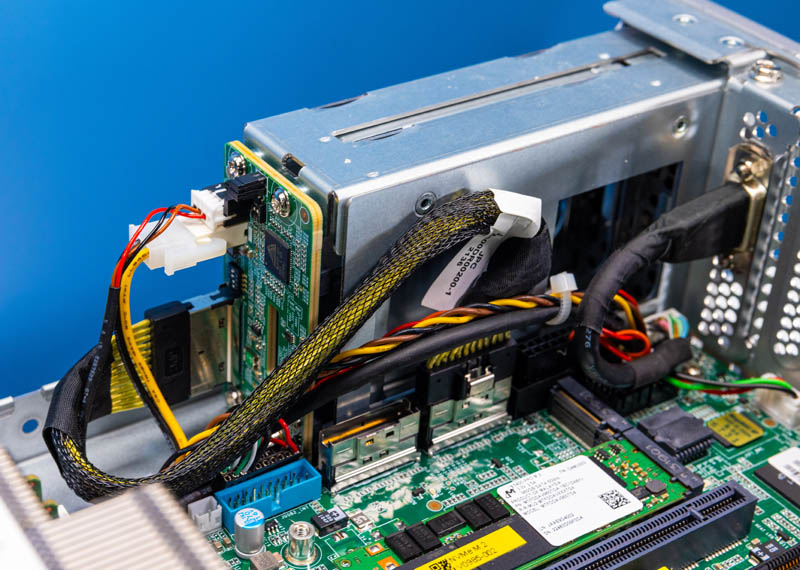

Taking a quick look here, we can see an M3808AK controller, a PCIe Gen4 x8 SAS3 controller from the LSI SAS3808 generation.

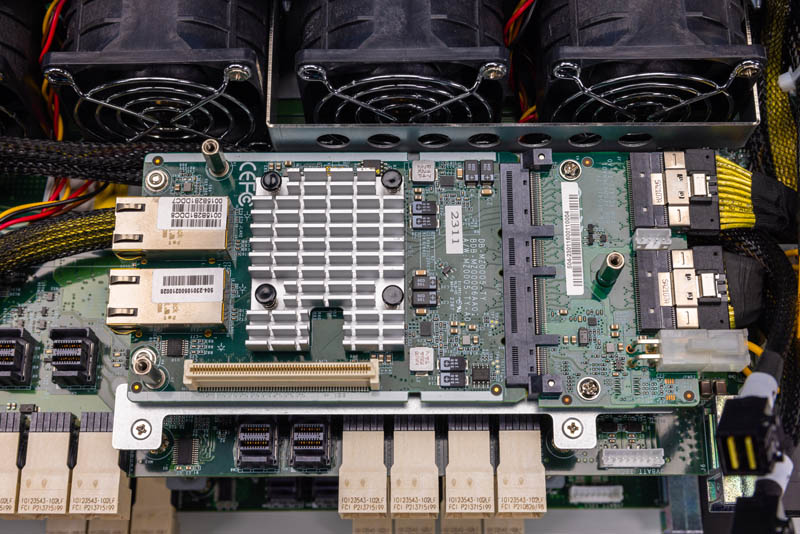

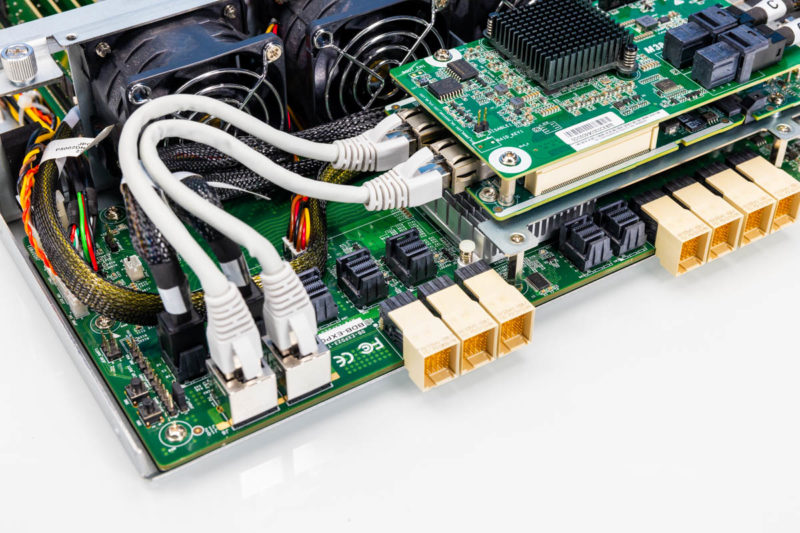

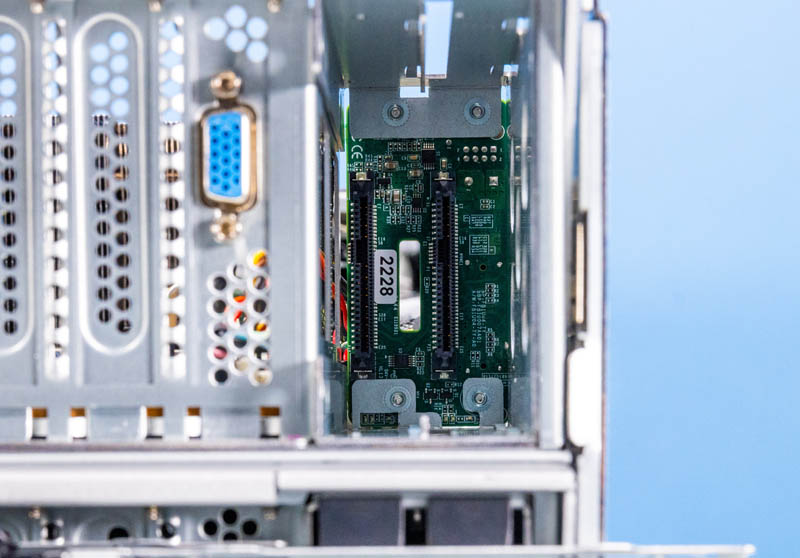

That connects into one of the most unusual cards you will see. Starting from the right, we have two PCIe x8 connectors that go into a board with an OCP NIC 3.0 slot. In that slot, we have a dual port 10Gbase-T controller. That dual port OCP NIC 3.0 controller has a mezzanine attachment for the SAS3 controller that sits on top.

That 10GbE is part of the HA solution. Here is another look at this card stack, which should absolutely wow you. It is our favorite part of the server.

These three layers sit atop the Broadcom SAS expander board.

Since this stack only has 8 ports, each node also needs a Broadcom 35X48 SAS expander to go from the 8 controller ports to the 24 drives on the backplane. We can see more SFF-8643 connectors for the SAS expander on the PCB here.
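To put the expander fan-out in numbers, here is a rough back-of-the-envelope sketch. It assumes all 8 controller lanes feed the expander, uses the SAS-3 line rate of 12 Gb/s per lane with 8b/10b encoding, and assumes all 24 drives are streaming at once. Real throughput will be lower once protocol overhead and drive behavior are accounted for.

```python
SAS3_GBPS_PER_LANE = 12        # SAS-3 line rate per lane, in Gb/s
ENCODING_EFFICIENCY = 8 / 10   # SAS-3 uses 8b/10b encoding

def sas_fanout(controller_lanes: int, drives: int) -> dict:
    """Estimate oversubscription and per-drive bandwidth behind an expander.

    Assumes every controller lane goes to the expander and every drive
    is busy at the same time, so the uplink is shared evenly.
    """
    uplink_gbps = controller_lanes * SAS3_GBPS_PER_LANE
    usable_gbytes = uplink_gbps * ENCODING_EFFICIENCY / 8  # GB/s after encoding
    return {
        "oversubscription": drives / controller_lanes,
        "uplink_gbps": uplink_gbps,
        "usable_GBps": usable_gbytes,
        "per_drive_MBps": usable_gbytes * 1000 / drives,
    }

print(sas_fanout(controller_lanes=8, drives=24))
```

For 8 lanes and 24 drives, this works out to a 3:1 oversubscription, roughly 9.6 GB/s of usable uplink, and about 400 MB/s per drive when everything is busy. Whether that matters depends on the drives installed.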

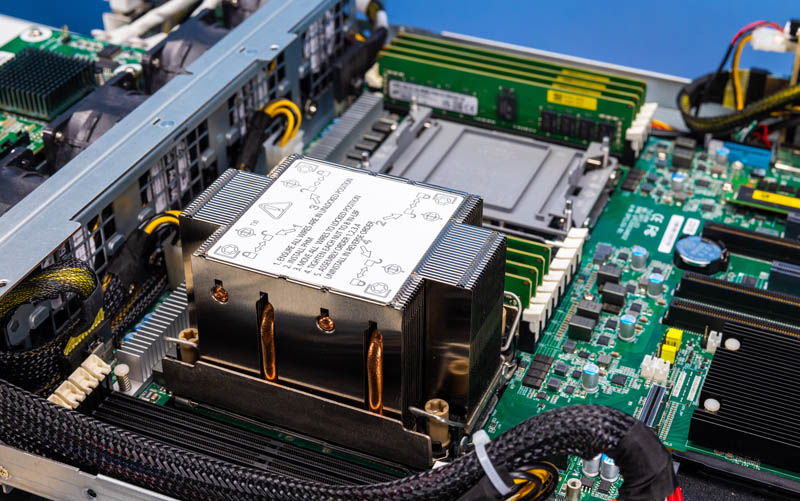

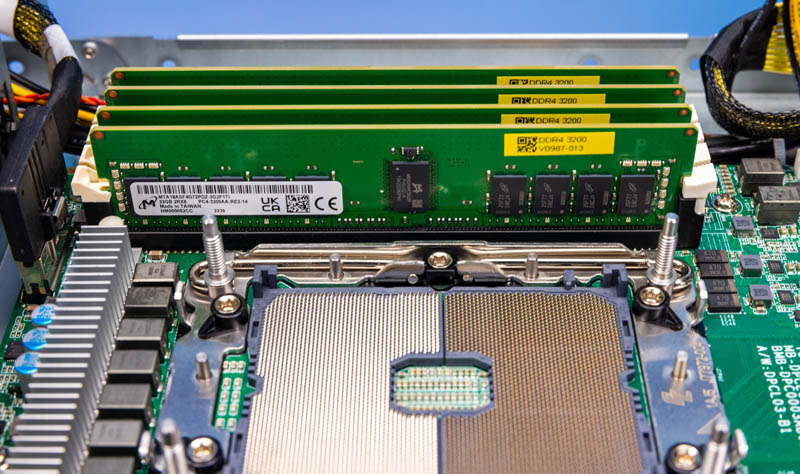

Behind the wall of fans are the CPUs and memory. Each node has dual 3rd Gen Intel Xeon Scalable (Ice Lake) processor sockets, each with 8x DDR4 DIMM slots.

Here you can see we are using Micron memory. Luckily, we had additional Micron DDR4-3200 RDIMMs and Intel Xeon CPUs to add to this chassis since a full configuration across both nodes takes four CPUs and 32 DDR4 RDIMMs.
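The part count for a full build follows directly from the layout: two nodes, two sockets per node, eight DIMM slots per socket (one per memory channel on Ice Lake). The per-DIMM capacity below is a hypothetical 32 GB, used only to illustrate the total; it is not the capacity of the modules in our test system.

```python
DIMM_GB = 32  # hypothetical per-DIMM capacity, for illustration only

def full_population(nodes: int, sockets_per_node: int, dimms_per_socket: int):
    """Return (CPUs, DIMMs) needed to fully populate every node."""
    cpus = nodes * sockets_per_node
    dimms = cpus * dimms_per_socket
    return cpus, dimms

cpus, dimms = full_population(nodes=2, sockets_per_node=2, dimms_per_socket=8)
print(f"{cpus} CPUs, {dimms} RDIMMs, {dimms * DIMM_GB} GB total")
# 4 CPUs, 32 RDIMMs, 1024 GB total
```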

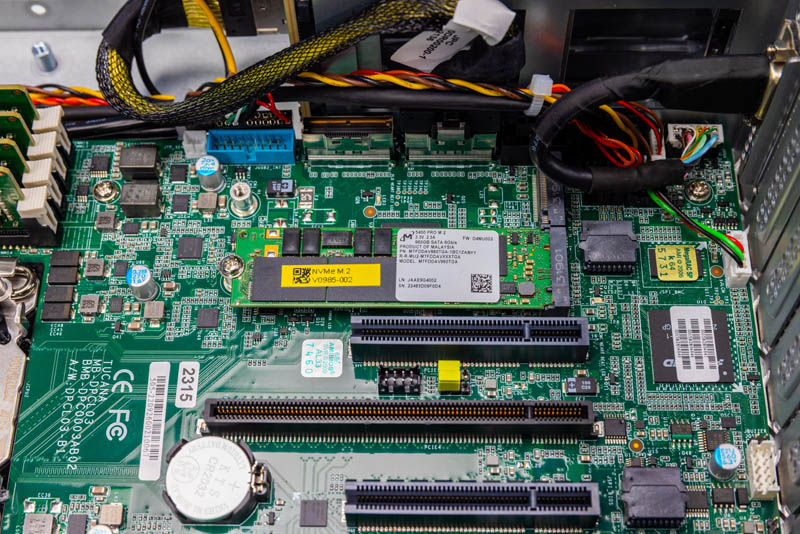

Although the 10Gbase-T NICs and SAS3 infrastructure at the front of the chassis handle storage and networking, that is not the only storage involved. There is also a pair of M.2 slots inside, again populated with a Micron M.2 SSD.

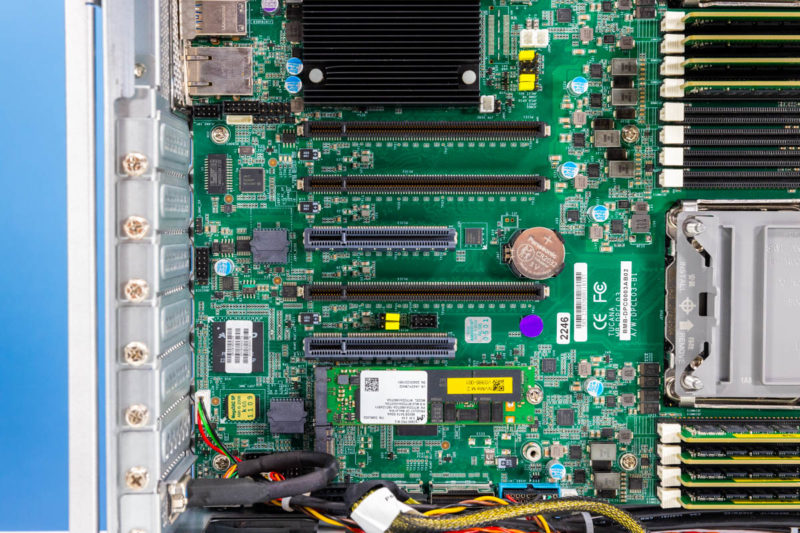

One can also see the PCIe slots. We get a total of 3x PCIe Gen4 x16 slots and 2x x8 slots for networking, AI accelerators (we tried the NVIDIA L4, and it had enough airflow), and external SAS HBAs.

Here we can also see our ASPEED baseboard management controller. AIC uses American Megatrends MegaRAC for its management software, providing an industry-standard management experience via IPMI.
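Because the BMC speaks standard IPMI, the usual tooling should apply. As a minimal sketch, the Python below builds `ipmitool` commands to check power state and fan sensors on each node's BMC over IPMI v2.0 (`-I lanplus`), reading the password from the `IPMI_PASSWORD` environment variable via `-E`. The node names, BMC addresses, and `admin` user are hypothetical placeholders, and by default the commands are only printed, not run.

```python
import shlex
import subprocess

# Hypothetical BMC addresses for the two nodes; replace with your own.
NODE_BMCS = {"node-a": "10.0.0.11", "node-b": "10.0.0.12"}

def ipmi_cmd(bmc_host: str, user: str, *args: str) -> list[str]:
    """Build an ipmitool command for a remote BMC over IPMI v2.0 (lanplus).

    -E tells ipmitool to read the password from the IPMI_PASSWORD env var,
    which keeps it off the command line.
    """
    return ["ipmitool", "-I", "lanplus", "-H", bmc_host, "-U", user, "-E", *args]

def check_nodes(user: str, run: bool = False) -> None:
    """Print (and optionally run) power and fan queries for every node."""
    for node, host in NODE_BMCS.items():
        for query in (("chassis", "power", "status"), ("sdr", "type", "Fan")):
            cmd = ipmi_cmd(host, user, *query)
            print(node, shlex.join(cmd))
            if run:
                subprocess.run(cmd, check=True)

check_nodes("admin")  # dry run: prints the commands only
```

Since the fans live on the sleds, querying fan sensors per node is a natural first check after servicing one controller.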

Above the PCH, we also get the OCP NIC 3.0 slot for even more connectivity. This is likely the primary networking for a system like this.

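An advantage of pairing a SAS3 chassis with dual CPUs is that we get plenty of PCIe lanes for expansion, since the 24 front drives sit behind the SAS controller and expander instead of each consuming lanes directly as NVMe drives would.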
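A rough lane budget shows why. This sketch assumes 64 PCIe Gen4 lanes per 3rd Gen Xeon Scalable CPU and that every slot named in the text hangs off CPU lanes rather than the PCH. It counts only the consumers whose widths are stated here; the OCP NIC 3.0 slot, M.2 slots, and rear NVMe bays are left out because their lane counts are not given.

```python
LANES_PER_SOCKET = 64  # PCIe Gen4 lanes per 3rd Gen Xeon Scalable (Ice Lake-SP) CPU
SOCKETS = 2
FRONT_DRIVES = 24

# Only consumers whose widths the text states; widths of the OCP NIC 3.0
# slot, M.2 slots, and rear NVMe bays are unknown and therefore omitted.
KNOWN_CONSUMERS = {
    "3x PCIe Gen4 x16 slots": 3 * 16,
    "2x x8 slots": 2 * 8,
    "front card stack, 2x x8 connectors": 2 * 8,
}

def lane_budget(consumers: dict[str, int]) -> tuple[int, int, int]:
    """Return (total CPU lanes, lanes accounted for, lanes left over)."""
    total = LANES_PER_SOCKET * SOCKETS
    used = sum(consumers.values())
    return total, used, total - used

total, used, left = lane_budget(KNOWN_CONSUMERS)
print(f"{used} of {total} CPU lanes accounted for, {left} left")
# 80 of 128 CPU lanes accounted for, 48 left

# For contrast: the front drives as x4 NVMe devices would need this many lanes alone.
print(f"{FRONT_DRIVES} x4 NVMe drives would need {FRONT_DRIVES * 4} lanes")
# 24 x4 NVMe drives would need 96 lanes
```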

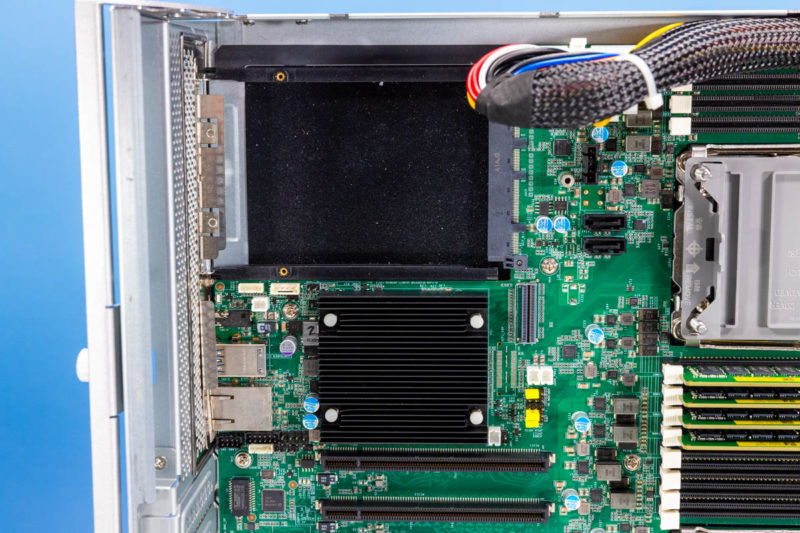

One other feature is that we get 2.5″ drive bays on the rear. AIC has options for either PCIe/NVMe or SATA drives. Our system is configured for NVMe, as we can see from the big cable here.

These rear 2.5″ bays have nice tool-less drive trays as well.

With all of this hardware, the next step is to look at the block diagram. There is a LOT going on here, so it is important to see how this entire setup fits together.

Where do the 2x10GbE ports go? Are those inter-node communication links through the backplane?

Being somewhat naive about high availability servers, I imagine they are designed to continue running in the event of certain hardware faults. Is there any way to test failover modes in a review like this?

Somehow the “awesome board stack on the front of the node” makes me wonder whether the additional complexity improves or hinders availability.

Are there unexpected single points of failure? How well does the vendor support the software needed for high availability?

Nice to see a new take on a Storage Bridge Bay (SBB). The industry has moved towards software-defined storage on isolated servers. There, the choice is either huge failure domains or more servers. Good for sales, bad for customers.

What we really need are similar NVMe solutions, especially now that NVMe is catching up with spinning rust on capacity. CXL might take us there.


In that slow, we have a dual port 10Gbase-T controller.

“slow” should be “slot”.

This reminds me of the Dell VRTX in a good way. Those worked great as edge/SMB VMware hosts, providing many of the redundancies of a true HA cluster at a much lower overall platform cost by avoiding iSCSI or FC switching and, even more significantly, the SAN vendor premiums for SSD storage.

“What we really need are similar NVMe solutions, especially now that NVMe is catching up with spinning rust on capacity. CXL might take us there.”

Totally agree. Honestly, at this point it seems that a single host with NVMe is sufficient for many organizations' remote office/SMB workloads. The biggest pain is OS updates and other planned downtime events. Dual compute modules with access to shared NVMe storage would be a dream solution.