Windows Home Server v1 (WHS) was limited to Master Boot Record (MBR) partitions of at most 2TB in its storage pool, but the public preview of Windows Home Server v2 codename VAIL is not. This guide shows how to pass GUID Partition Table (GPT) RAID volumes through Windows Server 2008 R2 running Hyper-V into WHS v2 codename VAIL. (That sounds far more complex than it actually is.)

Background

Microsoft designed WHS and Drive Extender to work reliably only with MBR partitions. As part of the WHS design, Drive Extender provides redundancy through data duplication instead of a parity scheme such as RAID 5, RAID 6, RAID-Z, or RAID-Z2. The net effect is that duplicated data in a large WHS storage pool consumes 50% of the total storage for redundancy, which is a costly proposition when many disks are used. RAID 6, for example, survives two simultaneous disk failures while giving up only two drives' worth of capacity, and it performs better than Drive Extender when many drives are used. When using RAID 5 or RAID 6 with WHS v1, each large array had to be broken up into 2TB MBR partitions. Furthermore, Adaptec and some other RAID controllers can create only four MBR partitions on a RAID set, which effectively imposes an 8TB array limit.
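To make the capacity trade-off concrete, here is a small Python sketch. It assumes identical drives and every share duplicated (Drive Extender keeps two copies, so half the raw space is usable), and it models the four-partition limit described above; the 12 x 2TB pool is only an illustrative example.

```python
# Usable capacity under Drive Extender duplication vs. parity RAID, in decimal TB.
# Assumptions: identical drives, every share duplicated, no hot spares.

MBR_PARTITION_LIMIT_TB = 2.0   # nominal 2TB per MBR partition (strictly 2 TiB)
MAX_PARTITIONS_PER_SET = 4     # per-RAID-set limit described for some controllers


def usable_tb(drives: int, drive_tb: float, scheme: str) -> float:
    """Return usable capacity for a pool of identical drives."""
    if scheme == "duplication":   # two copies of everything
        return drives * drive_tb / 2
    if scheme == "raid5":         # one drive's worth of parity
        return (drives - 1) * drive_tb
    if scheme == "raid6":         # two drives' worth of parity
        return (drives - 2) * drive_tb
    raise ValueError(f"unknown scheme: {scheme}")


def max_whs_v1_array_tb() -> float:
    """Largest usable array if a set allows only four 2TB MBR partitions."""
    return MBR_PARTITION_LIMIT_TB * MAX_PARTITIONS_PER_SET


if __name__ == "__main__":
    for scheme in ("duplication", "raid5", "raid6"):
        print(f"12 x 2TB, {scheme}: {usable_tb(12, 2.0, scheme):.0f} TB usable")
    print(f"WHS v1 per-array ceiling: {max_whs_v1_array_tb():.0f} TB")
```

With twelve drives, duplication leaves six drives' worth of space, while RAID 6 leaves ten.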

Adding a 2TB+ GPT Volume

This week, Microsoft released the public preview beta of Windows Home Server v2 codename VAIL and changed the game for RAID 5 and RAID 6 users: GPT partitions CAN be used in WHS v2 Vail! Microsoft does not recommend using RAID with Vail, so follow this guide at your own risk.

Since, as of April 2010, there are no drives larger than 2TB, one needs to create a RAID volume to test capacities above 2TB. For this test, I created one RAID 0 array with two Hitachi 2TB drives, one 7TB RAID 5 array with Seagate 1.5TB 7200rpm drives, and one smaller RAID 6 array using portions of Western Digital Green 1.5TB drives (their remaining capacity is deployed elsewhere on the server).

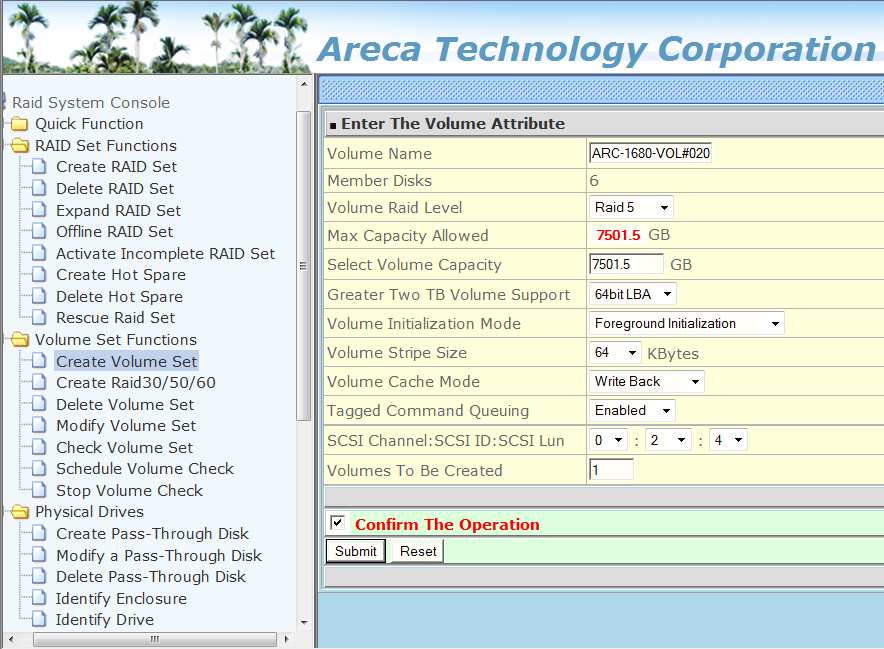

First, one needs to create the Raid array. For this I used an Areca 1680LP raid controller with disks attached through HP SAS Expanders. I logged into the Areca web GUI and created my three raid sets and volumes:

Note: because these volumes are larger than 2TB, you need to choose between the 4K block and 64-bit LBA options. I chose 64-bit LBA (because it works!).
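The 2TB ceiling comes from addressing: a 32-bit logical block address (LBA) with 512-byte sectors reaches only 2^32 x 512 bytes, or 2 TiB. MBR partition entries use 32-bit LBAs, which is why GPT, with 64-bit LBAs, is needed for larger volumes. The Python sketch below computes the ceilings for both workarounds. It assumes the 4K option keeps 32-bit addresses but presents 4096-byte blocks, which is how such compatibility modes are generally described; check the Areca manual for the exact behaviour.

```python
# Maximum addressable capacity for a given LBA width and sector size.
TIB = 2 ** 40


def max_capacity_bytes(lba_bits: int, sector_bytes: int) -> int:
    """Number of addressable sectors times the sector size."""
    return (2 ** lba_bits) * sector_bytes


if __name__ == "__main__":
    options = {
        "32-bit LBA, 512-byte sectors": (32, 512),
        "32-bit LBA, 4K blocks": (32, 4096),
        "64-bit LBA, 512-byte sectors": (64, 512),
    }
    for name, (bits, sector) in options.items():
        print(f"{name}: {max_capacity_bytes(bits, sector) / TIB:,.0f} TiB")
```

The 4K route raises the ceiling eightfold, to 16 TiB, while 64-bit LBA removes it for any practical purpose.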

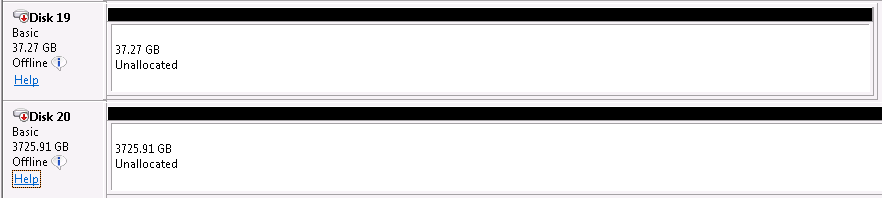

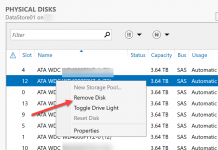

After this is done, initialize the disk as a GPT disk in Windows Server 2008 R2, then set the disk to “offline”, which Hyper-V requires when passing an entire disk or RAID volume through to a virtual machine.
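If you prefer the command line to Disk Management, both steps can be scripted with diskpart, which ships with Windows Server 2008 R2. The Python sketch below only builds the diskpart script text. Disk number 3 is a hypothetical example: confirm the right number with `list disk` first, because selecting the wrong disk can destroy data. It assumes the RAID volume is new and blank. If `list disk` shows the new disk as Offline, bring it online with `online disk` before converting.

```python
# Build a diskpart script that initializes a blank disk as GPT and then takes it
# offline so Hyper-V can pass it through. The disk number is a hypothetical example.


def gpt_offline_script(disk_number: int) -> str:
    """Return diskpart commands for one pass-through disk, one per line."""
    commands = [
        f"select disk {disk_number}",
        "convert gpt",   # initializes the disk as GPT; fails if it holds partitions
        "offline disk",  # the host must not have the disk online for pass-through
    ]
    return "\n".join(commands) + "\n"


if __name__ == "__main__":
    # Save this output as passthrough.txt, review it, then run from an elevated prompt:
    #   diskpart /s passthrough.txt
    print(gpt_offline_script(3), end="")
```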

In case you are wondering, yes, that is an Intel X25-V 40GB disk showing 37.27GB usable in the picture above. The 3.7TB array is the two Hitachi 2TB drives in RAID 0. Now that the disk is initialized and offline, it can be added to the SCSI controller of the Windows Home Server v2 codename VAIL Hyper-V virtual machine, even while the virtual machine is running.
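The smaller-than-advertised numbers come from units: drive makers count a gigabyte as 10^9 bytes, while Windows divides by 2^30. The Python sketch below converts nominal capacities. It assumes exactly 40 x 10^9 bytes for the X25-V, so the small gap from the 37.27GB shown presumably reflects the drive holding slightly more than its nominal capacity.

```python
# Convert a drive's marketed (decimal) capacity to the binary units Windows displays.
GIB = 2 ** 30


def decimal_gb_to_gib(gb: float) -> float:
    """Marketed gigabytes (10**9 bytes) expressed in gibibytes (2**30 bytes)."""
    return gb * 10 ** 9 / GIB


if __name__ == "__main__":
    print(f"Intel X25-V, nominal 40GB: {decimal_gb_to_gib(40):.2f} GiB")
    print(f"RAID 0 of two nominal 2TB drives: {decimal_gb_to_gib(2 * 2000):,.0f} GiB")
```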

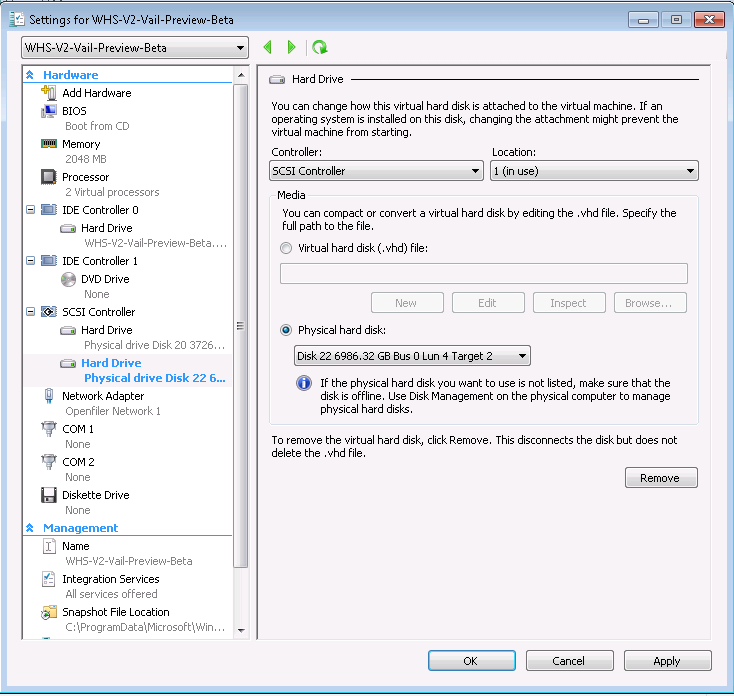

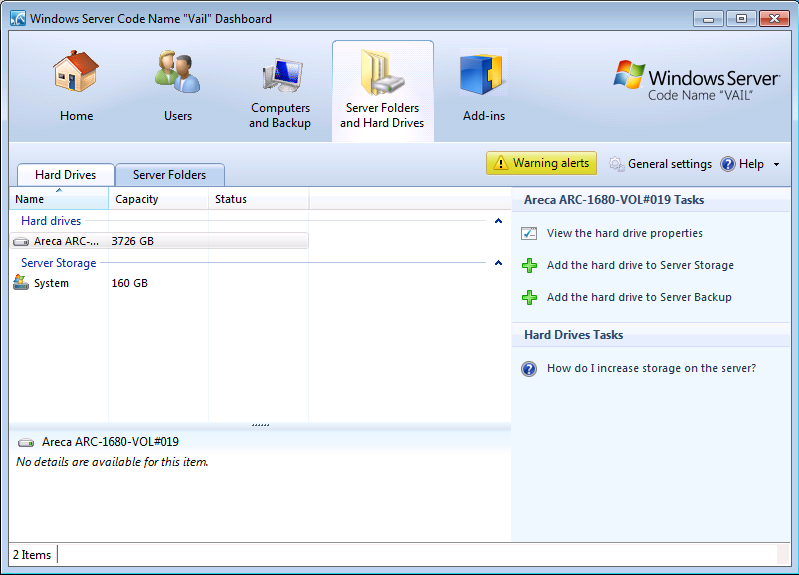

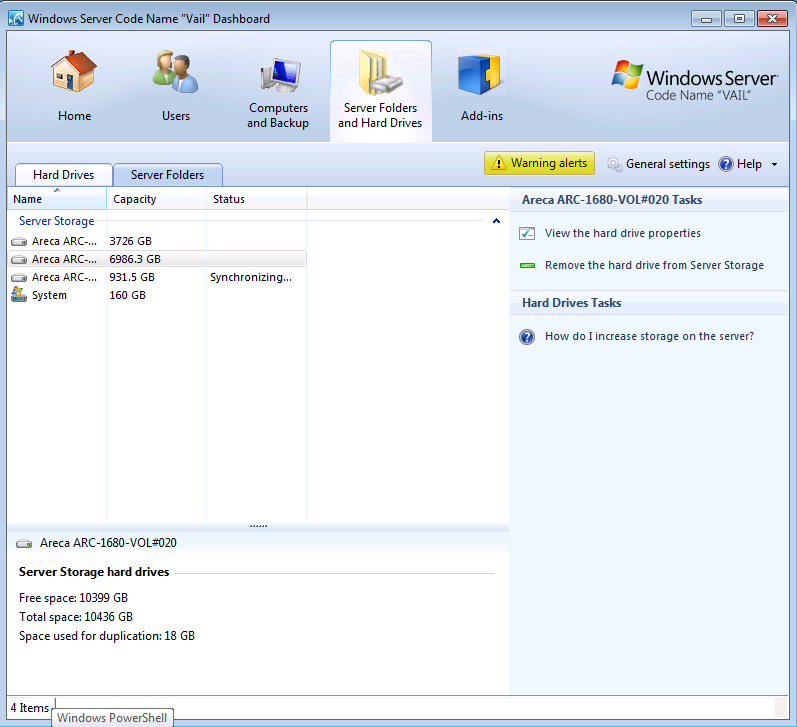

You can see from the above that the 3.7TB array has been added and that I am in the process of adding the 7TB array to the SCSI controller. Once the arrays are attached to the SCSI controller, the Windows Home Server v2 codename VAIL virtual machine’s console shows the RAID drive(s):

Of course, if you are not running Windows Home Server v2 codename VAIL in Hyper-V or another virtualized environment, you can skip many of the above steps and simply present the RAID volume from the RAID controller directly to the operating system. Because this guide uses the Windows Home Server v2 codename VAIL public preview beta, and because the virtualized setup is the more complex procedure, I am only showing the Hyper-V case.

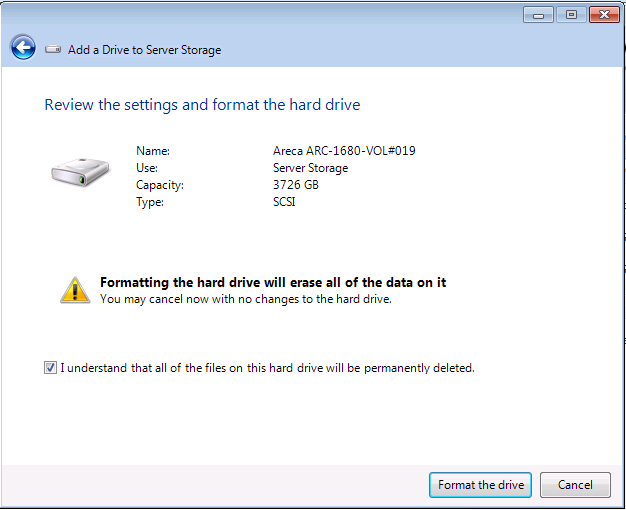

Selecting the drive in the Dashboard and then clicking the “Add the hard drive to Server Storage” link (as pictured above) launches the Add Drive wizard.

This important screen reminds you that if you add the raid array to the storage pool you will lose all data on it. Since these will probably be GPT volumes holding more than 2TB, heed the warning and make sure you are not about to experience massive data loss.

The screenshot above shows all of the Areca arrays added. The last 1TB drive is still being formatted and added to the pool; it shows “Synchronizing…” in the Dashboard during this process.

That’s all there is to adding GPT disks larger than 2TB in Windows Home Server V2 VAIL in Hyper-V. This is a very simple process!

Conclusion

Support for GPT volumes is a great feature of Windows Home Server v2 codename VAIL that was sorely missed in Windows Home Server v1. It prepares WHS V2 VAIL for the upcoming disks with capacities over 2TB, and it allows users to pass larger RAID arrays to the server than its predecessor, WHS V1, could use. Thanks, Microsoft, for enabling support for disks larger than 2TB in your new product!

For those who, like me, have some tech knowledge but no RAID experience: is this how I can set up a 24TB RAID 6 array (usable 10 x 2TB = 20TB, with 2 x 2TB for redundancy) instead of using the default folder duplication? If I want to add drives to the array, I figure I have to do that through the Areca GUI, but do I then have to re-initialize the array in WHS? Wouldn’t that mean losing all of my current data on the array?

For larger setups, such as a Norco 24-bay rack case, would you recommend multiple RAID 6 arrays for better redundancy, e.g. two arrays of 10 x 2TB drives plus 2 x 2TB for redundancy?

That is basically how it works. The drives are managed through the Areca web interface, where the RAID array is created, and the resulting volume is then passed through to Windows Home Server. Since WHS V2 VAIL has duplication on by default, you would need to turn it off manually for each share, just as duplication is set per share in the current WHS.

As for adding drives, look into online capacity expansion (OCE), which lets you add drives to an existing array. Search for it and you will find some great resources explaining how it works for each manufacturer. If you plan on using OCE, Adaptec and Areca make really good controllers.

As for the 20TB array, that would be just about the limit of what I would personally keep on one array, but only because I would rather have more redundancy. The more data on an array, the longer a rebuild takes, and the more drives you have, the more often one of them fails.
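The point about drive count can be put in numbers. Assuming each drive fails independently with the same annual failure rate (AFR), the chance that at least one drive in an array fails during a year is 1 - (1 - AFR)^n, which grows with the drive count n. The 3% rate in the sketch below is a hypothetical figure, not a measured one.

```python
# Probability that at least one of n drives fails within a year,
# assuming independent failures at a hypothetical annual failure rate (AFR).


def p_any_failure(n_drives: int, afr: float) -> float:
    """1 - P(no drive fails) = 1 - (1 - afr) ** n_drives."""
    return 1 - (1 - afr) ** n_drives


if __name__ == "__main__":
    AFR = 0.03  # hypothetical; real rates vary by model, age, and workload
    for n in (6, 12, 24):
        print(f"{n:2d} drives: {p_any_failure(n, AFR):.0%} chance of a failure per year")
```

Real failures are not fully independent (drives from one batch often age together), so treat the result as a rough lower bound on how often you will be replacing drives.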

Also, with 10+ drives, you may want to consider a hot spare. A hot spare is a drive that sits connected in the chassis so that, when a drive fails, the RAID controller starts rebuilding the array onto it immediately (apart from a few seconds of lag), instead of waiting for you to replace the failed drive.

If you were planning a 12 x 2TB RAID 6 array, splitting it into two 6-drive arrays may be too conservative for a home application, so I would consider a 12 x 2TB array plus one 2TB hot spare.

Hopefully that helped. Let me know if anything was not clear.

My one concern was your statement “add the raid array to the storage pool you will lose all data”. Is that also the case when adding more drives after the array has been initialized with WHS? When adding drives to the array, does WHS consider it offline, and once the array is rebuilt with the additional storage, does it need to be re-initialized?

My plan was the Norco 4220 and a 24 x 2TB array. I thought that with 24 drives it would make sense to have two separate RAID 6 arrays, since each array would have 2 x 2TB hotspares (I thought RAID 5 only has 1 hotspare). That would mean 20 usable drives and 4 for RAID 6 redundancy. I really don’t want to spend the time re-backing up my Blu-rays. I’m assuming the term hotspare refers to the redundant drive used when the controller determines that an array drive has failed.

With Vail’s higher processing requirements, if I used a server board, would it make sense to use Xeon rather than Core i7 processors? I plan to use my WHS to back up 3 home computers, serve media content to a home automation setup with 8 potential separate live streams (8 Dune 3.0 media players), and eventually run a DVR with 4 live feeds (I’m still thinking about the hardware for this part, since I’m in Canada and we don’t have CableCARDs).

Does Vail have wake on LAN capabilities? I’m assuming the RAID controller is responsible for the spin up and down of the individual drives?

Thanks for your advice

Well, you don’t really add an “array” to the storage pool directly; you add volumes. So if you use OCE to expand your RAID array by 3TB of usable space, that 3TB of the array will be unallocated, and you can create a GPT volume from it to pass to WHS, leaving the old volume completely untouched.

Two RAID 6 arrays are fine, but you can share a hot spare between both arrays if you want, so that either array can use it. With the number of drives you have, a single 2TB hot spare may be enough for now.
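To compare the layouts discussed in this thread, the Python sketch below counts how 2TB drives split into usable space, RAID 6 parity, and hot spares. Parity and hot spares are different things: parity capacity is part of each array and is always in use, while a hot spare sits idle until a drive fails. The layouts are illustrative, and the shared spare assumes a controller that supports global hot spares, as described above.

```python
# Split drive bays into RAID 6 arrays plus shared (global) hot spares.
# All drives are assumed identical; the layouts below are illustrative only.
from dataclasses import dataclass


@dataclass
class Layout:
    arrays: list[int]   # number of drives in each RAID 6 array
    hot_spares: int     # spares shared by all arrays
    drive_tb: float = 2.0

    def bays_used(self) -> int:
        return sum(self.arrays) + self.hot_spares

    def usable_tb(self) -> float:
        # RAID 6 gives up two drives' worth of capacity per array to parity
        return sum(n - 2 for n in self.arrays) * self.drive_tb

    def parity_tb(self) -> float:
        return 2 * len(self.arrays) * self.drive_tb


if __name__ == "__main__":
    layouts = {
        "two 12-drive arrays, no spare": Layout([12, 12], 0),
        "12- and 11-drive arrays, 1 shared spare": Layout([12, 11], 1),
        "one 12-drive array + 1 spare": Layout([12], 1),
    }
    for name, lay in layouts.items():
        print(f"{name}: {lay.bays_used()} bays, {lay.usable_tb():.0f} TB usable, "
              f"{lay.parity_tb():.0f} TB parity, {lay.hot_spares} spare(s)")
```

Adding a shared spare to a full 24-bay chassis costs one data drive (2TB) but covers both arrays.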

I haven’t tested VAIL on physical hardware, but if your hardware supports WOL, I’m guessing Vail will work fine.
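If you want to test Wake-on-LAN once the server is built, a magic packet is simple to send yourself: six 0xFF bytes followed by the target's MAC address repeated 16 times, usually sent as a UDP broadcast to port 9. The Python sketch below is a generic illustration, not a Vail feature; the MAC address is a placeholder, and WOL must be enabled in the NIC settings and BIOS.

```python
# Build (and optionally broadcast) a Wake-on-LAN magic packet.
import socket


def magic_packet(mac: str) -> bytes:
    """6 bytes of 0xFF followed by the 6-byte MAC address repeated 16 times."""
    mac_bytes = bytes.fromhex(mac.replace(":", "").replace("-", ""))
    if len(mac_bytes) != 6:
        raise ValueError(f"expected a 6-byte MAC address, got {mac!r}")
    return b"\xff" * 6 + mac_bytes * 16


def send_wol(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet over UDP on the local network."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(magic_packet(mac), (broadcast, port))


if __name__ == "__main__":
    # Placeholder MAC; call send_wol("<your server's MAC>") to actually wake it.
    print(magic_packet("00:11:22:33:44:55").hex())
```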

Yes, the RAID controller is responsible for spinning the drives up and down. Decent cards let you set the spin-up/spin-down times the controller uses. Staggered spin-up is something not every controller can do, so check for it before you purchase if you want that feature.

I hope that helps.

Ahh, I think I understand. The array takes care of redundancy. When I add more drives, the array will re-populate, but WHS will see the original volume (original drives) and a new volume (added drives). My only question: if the array controller spreads the current data over the larger array (I believe that’s what happens when the array is rebuilt after drives are added), then what Vail believes is a new volume will actually have data from the previous volume (original array) spread onto it, and when Vail initializes it, it will wipe that data. What happens to the data that the array controller moved from the original array to the larger new array?

Or maybe I have how RAID works all messed up

I think the best way to explain it is that WHS sees volumes, and the RAID controller splits its arrays into volumes. So if an old volume was 2TB and the controller expands the underlying array to 6TB, WHS will see the new 4TB as new volume(s), but the old 2TB is still intact from a volume perspective. The controller may restripe existing data across the added drives during expansion, but the old volume still presents the same data at the same addresses, so WHS never sees that movement. To WHS it is as if it sees the old 2TB volume/drive and then sees 4TB of new capacity added.
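The distinction between arrays and volumes can be modelled in a few lines. The Python sketch below is a deliberately simplified, hypothetical model, not how any controller is implemented: expanding the array grows its capacity, existing volumes keep their size and contents, and only the newly freed space becomes a new volume, which is the only thing WHS would ask to format. It uses the 2TB to 6TB example above.

```python
# Simplified model: a RAID array carved into volumes, before and after OCE.
from dataclasses import dataclass, field


@dataclass
class Volume:
    name: str
    size_tb: float
    has_data: bool = False


@dataclass
class RaidArray:
    capacity_tb: float
    volumes: list[Volume] = field(default_factory=list)

    def free_tb(self) -> float:
        return self.capacity_tb - sum(v.size_tb for v in self.volumes)

    def create_volume(self, name: str, size_tb: float) -> Volume:
        if size_tb > self.free_tb():
            raise ValueError("not enough unallocated space in the array")
        vol = Volume(name, size_tb)
        self.volumes.append(vol)
        return vol

    def expand(self, new_capacity_tb: float) -> None:
        """Online capacity expansion: existing volumes are left untouched."""
        if new_capacity_tb < self.capacity_tb:
            raise ValueError("OCE only grows an array")
        self.capacity_tb = new_capacity_tb


if __name__ == "__main__":
    array = RaidArray(capacity_tb=2.0)
    old = array.create_volume("old", 2.0)
    old.has_data = True               # WHS already stores data here
    array.expand(6.0)                 # physical restriping is hidden from volumes
    new = array.create_volume("new", array.free_tb())
    print(f"old volume: {old.size_tb} TB, data intact: {old.has_data}")
    print(f"new volume for WHS to format: {new.size_tb} TB")
```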

This complexity makes perfect sense after you have used a hardware RAID controller for a few days or weeks. On the other hand, it is also why Microsoft does not support RAID underneath WHS. Imagine a customer service representative having to explain how RAID works over the phone!

Thank you for this discussion; it perfectly describes my current dilemma in understanding the repercussions of using Windows Home Server and its Drive Extender for my growing storage server. My problem was that Drive Extender’s mirror-type protection costs far too much space. Now we can have the protection, expansion, and lower parity overhead that our beloved RAID cards give us, together with the nice consumer features that WHS gives us. Thank you so much for clearing it up :)