Last week, Asustor announced a new NAS line that was a bit different. The Asustor Flashstor 6 (FS6706T) and Flashstor 12 Pro (FS6712X) can handle up to 6 or 12 M.2 NVMe SSDs and have either 2x 2.5GbE or 1x 10GbE networking. Since we have been doing a lot with 2.5GbE recently, we got the six-SSD, 2x 2.5GbE FS6706T and have been working with it for the past few days.

A Quick Look at the Asustor Flashstor 6 FS6706T NAS

We also have an ultra-quick 22-second short video version for folks, as we try to take in some of the feedback from the 1-Minute MikroTik RB5009 video.

When we hear of an Intel N5105 quad-core NAS, one may think that the box is small. It is actually bigger than one might think. The sole USB 3 port on the front of the box should give some sense of just how large this is.

On the rear, we get two sets of features. One set is the dual 2.5GbE ports, power, and so forth that are typical of NAS units. The other set includes HDMI, S/PDIF, and so forth, which are more commonly found on desktops.

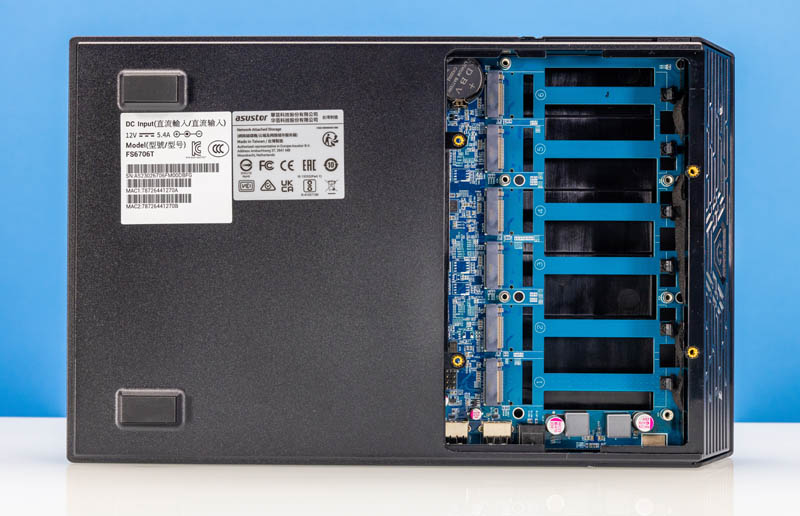

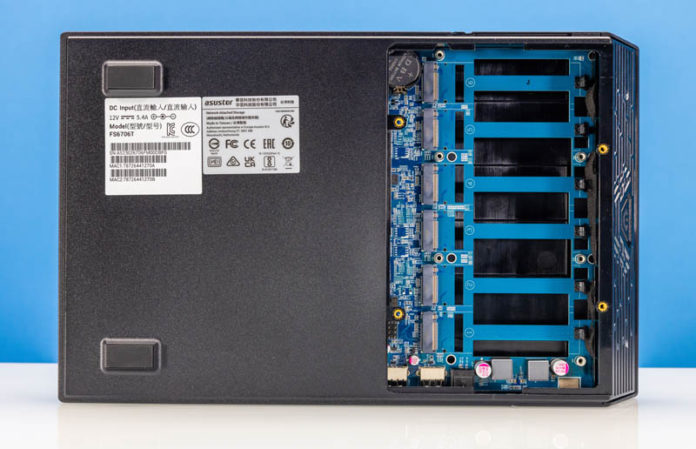

In the 6-bay unit, the bottom cover comes off using four screws. One nice feature is that the M.2 drives are installed via tool-less carriers.

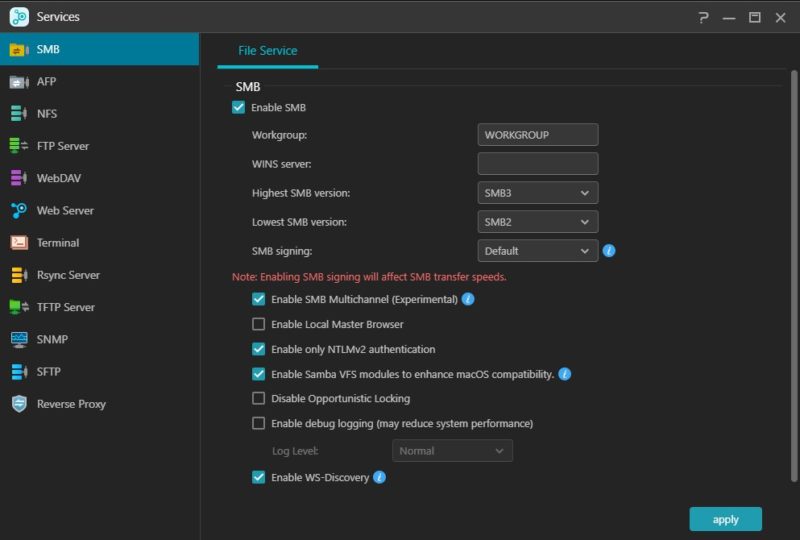

One awesome feature is that enabling SMB3 Multichannel is as simple as ticking a checkbox and hitting apply.

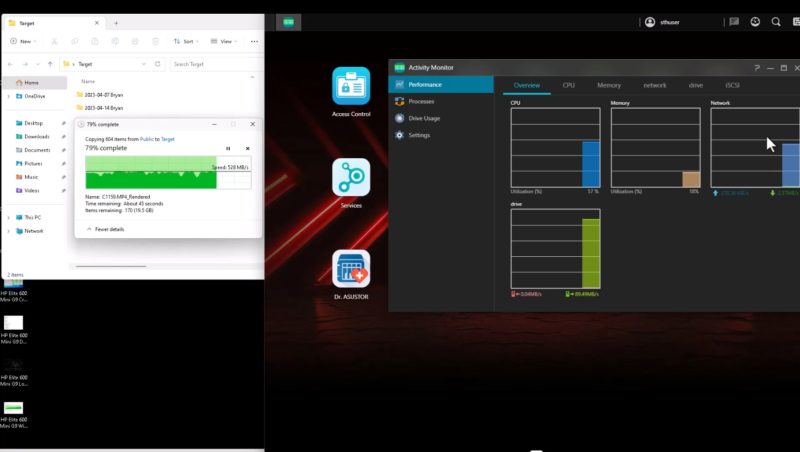

We had a TP-Link TL-SH1832 set up with an SFP+ to 10Gbase-T adapter connected to an HP Elite Mini 600 G9 with a 10Gbase-T adapter (more on that on STH soon). We plugged both 2.5GbE ports into the unmanaged switch and immediately saw speeds in excess of 500MB/s. Below we can see 57% CPU utilization on the NAS while transferring over 90GB of videos/photos to the HP TinyMiniMicro node. This is RAID 5 with 6x 1TB super-cheap Crucial P3 Plus drives (Amazon affiliate link) using BTRFS.
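Why does "in excess of 500MB/s" matter? A quick back-of-the-envelope check, assuming decimal units (1 MB = 10^6 bytes) and ignoring Ethernet, TCP, and SMB overhead, shows that a single 2.5GbE link cannot carry that much, so both ports must be in use. This is a sketch of nominal line rates, not a measurement of the unit.

```python
# Rough check: can a single 2.5GbE link explain the >500MB/s observed?
# Assumption: decimal units and no protocol overhead, so real ceilings are lower.

def line_rate_mb_per_s(gbit_per_s: float) -> float:
    """Convert a link's nominal bit rate (Gbit/s) to MB/s (decimal)."""
    return gbit_per_s * 1e9 / 8 / 1e6

single_link = line_rate_mb_per_s(2.5)   # one 2.5GbE port
dual_links = 2 * single_link            # both ports, aggregated by SMB Multichannel
observed = 500.0                        # lower bound quoted in the text

print(f"1x 2.5GbE ceiling: {single_link:.1f} MB/s")
print(f"2x 2.5GbE ceiling: {dual_links:.1f} MB/s")
print(f"500 MB/s exceeds a single link: {observed > single_link}")
print(f"500 MB/s as a share of the dual-link ceiling: {observed / dual_links:.0%}")
```

With one link topping out at 312.5 MB/s nominal, anything above that points to Multichannel spreading the transfer across both ports.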

The fact that more NAS units support SMB Multichannel is one of the reasons we have been doing the Ultimate Cheap Fanless 2.5GbE Switch Mega Round-Up. More users, even those with simple, flat networks and small units, will benefit from having multiple NIC ports engaged.

We will quickly note that we tried 64GB of memory in this system instead of the base 4GB. The system booted with 64GB (2x 32GB SODIMMs), but the NAS failed during the same transfer shown above. Going back down to 4GB fixed the issue, so that is something we will investigate further for the full review.

Final Words

We decided not to cover the news of these units last week because we wanted to work with them first. The Intel N5105 CPU only has 8x PCIe Gen3 lanes. That is enough for one lane per SSD plus one lane for each 2.5GbE port. The 12x SSD version has to use both ASM1480 MUX chips and ASMedia PCIe switches just to get enough connectivity. Still, this is an interesting unit that we purchased on Amazon (affiliate link), so we wanted to try it out. We have already found some great things and some not-so-great things, but it is working. We may also look at the 12x SSD unit that will arrive later this week.
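The lane arithmetic above can be sketched as a simple budget check. The 8-lane figure and the one-lane-per-device layout for the 6-bay unit come from the text; treating every device as exactly x1 is a simplifying assumption, and the 12-bay line deliberately leaves out the 10GbE NIC because its lane width is not stated here.

```python
# Sketch of the PCIe lane budget for the Flashstor units.
# Assumption: every SSD and NIC controller uses exactly one Gen3 lane.

CPU_LANES = 8  # Intel N5105 PCIe Gen3 lanes, per the article

def lanes_needed(ssds: int, nic_lanes: int) -> int:
    """One lane per M.2 SSD plus the lanes used by the network controller(s)."""
    return ssds + nic_lanes

# Flashstor 6: six SSDs at x1 plus two 2.5GbE controllers at x1 each.
fs6 = lanes_needed(ssds=6, nic_lanes=2)
print(f"FS6706T needs {fs6} of {CPU_LANES} lanes: fits = {fs6 <= CPU_LANES}")

# Flashstor 12 Pro: the twelve SSDs alone already exceed the CPU's lanes,
# which is why MUX chips and PCIe switches are needed (NIC lanes omitted).
fs12_ssds_only = lanes_needed(ssds=12, nic_lanes=0)
print(f"FS6712X SSDs alone need {fs12_ssds_only} lanes: fits = {fs12_ssds_only <= CPU_LANES}")
```

The 6-bay design uses every lane with nothing to spare, while the 12-bay design cannot give each SSD a dedicated CPU lane at all.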

If you want to follow along more, there is an STH forum thread.

hi all

Great work being done on ServeTheHome. May God bless you.

Quick question: are we going to see any small form factor computers with integrated power supplies being reviewed?

all the best and keep up the good work,

Taha

On my 12-bay unit, I wanted to see the native disk performance but struck out trying to find anything native in ADM. I instead wound up installing Docker, pulling https://hub.docker.com/r/e7db/diskmark, and running `sudo docker run -it --rm -e PROFILE=nvme -v /volume1:/disk e7db/diskmark`.

Since I only had a couple of 1TB Intel 670Ps on hand while I wait for the drives I'll actually be using to show up, this is what I saw, all with BTRFS:

| Test (write) | Single 1TB 670P | Two 1TB 670P in RAID-0 |
|---|---|---|
| Sequential 1M Q8T1 | 817 MB/s, 817 IO/s | 1602 MB/s, 1602 IO/s |
| Sequential 128K Q32T1 | 811 MB/s, 6495 IO/s | 1601 MB/s, 12812 IO/s |
| Random 4K Q32T16 | 207 MB/s, 53091 IO/s | 211 MB/s, 54250 IO/s |
| Random 4K Q1T1 | 89 MB/s, 23024 IO/s | 24 MB/s, 6219 IO/s |
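One way to sanity-check diskmark-style output is to multiply IO/s by the block size and compare it with the reported MB/s. The sketch below assumes the tool's "MB" actually means MiB (2^20 bytes); that reading is inferred from the numbers themselves, not from the tool's documentation, and the 1 MiB/s tolerance allows for rounding in the printed figures.

```python
# Sanity check on the diskmark write results: does MB/s equal IO/s x block size?
# Assumption: "MB" in the output is MiB (2**20 bytes), inferred from the data.

KIB = 1024
MIB = 1024 * 1024

# (test, block size in bytes, reported MB/s, reported IO/s) from the results above
results = [
    ("single SEQ1M Q8T1", 1 * MIB, 817, 817),
    ("single SEQ128K Q32T1", 128 * KIB, 811, 6495),
    ("single RND4K Q32T16", 4 * KIB, 207, 53091),
    ("single RND4K Q1T1", 4 * KIB, 89, 23024),
    ("raid0 SEQ1M Q8T1", 1 * MIB, 1602, 1602),
    ("raid0 SEQ128K Q32T1", 128 * KIB, 1601, 12812),
    ("raid0 RND4K Q32T16", 4 * KIB, 211, 54250),
    ("raid0 RND4K Q1T1", 4 * KIB, 24, 6219),
]

def mib_per_s(iops: int, block_bytes: int) -> float:
    """Throughput in MiB/s implied by an IOPS figure at a given block size."""
    return iops * block_bytes / MIB

for name, block, reported, iops in results:
    implied = mib_per_s(iops, block)
    # Allow up to 1 MiB/s of slack for rounding/truncation in the tool's output.
    ok = abs(implied - reported) < 1.0
    print(f"{name:22s} reported {reported:5d}  implied {implied:8.2f}  match={ok}")
```

Every row lines up under the MiB interpretation, whereas reading 53091 IO/s at 4 KiB as decimal MB would give about 217 MB/s rather than 207.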

Could the failure at 64GB be due to the fact that Intel ARK only claims 16GB is supported, "dependent upon memory type"?

RAID 5 BTRFS! You madman.

Did someone mistakenly sit on it?

Integrated power supplies are rough in this market.

ADM is a fairly minimal Linux. Your idea on the Docker image is a good one.

On the 64GB failure, that is the operating assumption at this point. We need to find smaller DIMMs to use, so we will pull apart some Project TinyMiniMicro nodes to get them.

Very mad.

Nobody sat on this one.

Seems a bit of a wasted opportunity to use high speed NVMe drives, limit them to 1 GB/sec each (PCIe 3.0 x1) and then further limit them to 0.5 GB/sec total, through a couple of slow 2.5 Gb Ethernet ports.

Even with PCIe x1 for each device, that's almost 60 Gbps available for storage access, so it deserves a QSFP28 port running at 100G or, at the very least, a couple of 25G ports.
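For context on the "1 GB/sec each" and "almost 60 Gbps" figures, here is a rough PCIe 3.0 calculation. It assumes 8 GT/s per lane with 128b/130b encoding and ignores packet-level protocol overhead, so real throughput is somewhat lower. It also caps the aggregate at the N5105's 8 Gen3 lanes mentioned in the article, since the drives cannot collectively exceed the CPU's uplink.

```python
# Back-of-the-envelope PCIe 3.0 bandwidth. Assumptions: 8 GT/s per lane,
# 128b/130b line encoding, protocol (TLP/DLLP) overhead ignored.

GT_PER_S = 8.0          # PCIe 3.0 transfer rate per lane
ENCODING = 128 / 130    # 128b/130b line encoding efficiency

def pcie3_gbit_per_s(lanes: int) -> float:
    """Usable bit rate (Gbit/s) of a PCIe 3.0 link after line encoding."""
    return lanes * GT_PER_S * ENCODING

per_lane_gbit = pcie3_gbit_per_s(1)
per_lane_gb = per_lane_gbit / 8   # GB/s (decimal)
print(f"PCIe 3.0 x1: {per_lane_gbit:.2f} Gbit/s = {per_lane_gb:.3f} GB/s")

# Six drives on x1 each (FS6706T) and the ceiling of all 8 CPU lanes.
print(f"6 drives at x1: {pcie3_gbit_per_s(6):.1f} Gbit/s")
print(f"All 8 N5105 lanes: {pcie3_gbit_per_s(8):.1f} Gbit/s")
```

Even the full 8-lane ceiling of roughly 63 Gbit/s is more than twelve times the 5 Gbit/s offered by 2x 2.5GbE.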

I would understand if there was such a thing as large, slow NVMe drives for bulk storage but at this point everyone still seems to be focusing on small high speed drives.

So this won't be much use for anyone archiving large files (not enough capacity, and the drives are too expensive), it won't be useful for gamers who want high performance, and it won't be useful for video editors who want high performance AND high capacity. So is the target market people who only need to share small documents, and not especially quickly, but who are also willing to pay a premium to use NVMe drives at a fraction of their potential over cheaper SATA options that would provide the same performance?

It doesn’t really make much sense to me, unless we’re about to see a bunch of slower 20+ TB NVMe drives come on to the market (which I would certainly welcome mind you).

Could I be the complete madman who wants to see 5 of these together in a Ceph cluster, if it can be done with ADM? At ~$500/chassis plus drives, this would make for super cheap but super fast NVMe homelab storage!

Is it possible to replace ADM with something else?

Getting stuck with the factory OS is my big complaint about NAS devices.

@Malvineous: The 12-bay unit is at least 10GbE. I also don't know if I agree with you regarding NVMe drive prices. I ordered 12 Intel 670P 2TB drives for $38.59/TB including tax. Roughly 2 weeks ago, I bought 9 Team Group AX2 2TB 2.5″ SATA SSDs for my other new all-flash NAS for $42.59/TB including tax.
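The price comparison in that reply works out as follows. The per-TB prices, drive counts, and 2TB capacities come from the comment; the totals are derived on the assumption that the per-TB price applies to each drive's nominal 2TB.

```python
# Arithmetic on the per-TB prices quoted in the comment (tax included).
# Assumption: per-TB price applies to each drive's nominal 2 TB capacity.

nvme_per_tb = 38.59   # Intel 670P 2TB, USD per TB
sata_per_tb = 42.59   # Team Group AX2 2TB, USD per TB

nvme_total = 12 * 2 * nvme_per_tb   # 12 drives x 2 TB = 24 TB raw
sata_total = 9 * 2 * sata_per_tb    # 9 drives x 2 TB = 18 TB raw
savings = (sata_per_tb - nvme_per_tb) / sata_per_tb

print(f"NVMe: ${nvme_total:.2f} for 24 TB raw")
print(f"SATA: ${sata_total:.2f} for 18 TB raw")
print(f"NVMe is {savings:.1%} cheaper per TB")
```

At these prices, the NVMe drives come in about $4/TB (roughly 9%) cheaper than the SATA SSDs.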

To be a bit serious for a moment.

If operated in RAID 5 or RAID 6 mode, this device will not suffer from having only one PCIe lane per drive, because the network connection is the narrower bottleneck.
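That argument can be sketched as a min() of two ceilings: the data drives' PCIe links and the network. The ~985 MB/s per PCIe 3.0 x1 lane is an approximation, and modelling sequential reads as (drives - parity) x per-drive bandwidth is a simplification that ignores parity computation, CPU load, and SMB overhead.

```python
# Rough model: client throughput is limited by the narrower of the drives'
# PCIe links and the network. Assumptions: ~985 MB/s per PCIe 3.0 x1 lane,
# sequential reads scale with (drives - parity), no CPU/protocol overhead.

PCIE3_X1_MB_S = 985.0   # approx. usable PCIe 3.0 x1 bandwidth, MB/s

def raid_read_ceiling(drives: int, parity: int) -> float:
    """Best-case sequential read (MB/s) from the data drives' x1 links."""
    return (drives - parity) * PCIE3_X1_MB_S

def effective(drives: int, parity: int, network_gbit: float) -> float:
    """Throughput a client can see: the smaller of storage and network ceilings."""
    network_mb_s = network_gbit * 1000 / 8
    return min(raid_read_ceiling(drives, parity), network_mb_s)

print(f"6-drive RAID 5 storage ceiling: {raid_read_ceiling(6, 1):.0f} MB/s")
for label, gbit in (("2x 2.5GbE", 5.0), ("1x 10GbE", 10.0)):
    print(f"6-drive RAID 5 over {label}: {effective(6, 1, gbit):.0f} MB/s")
```

In both network configurations the network term wins the min(), which is the commenter's point: the x1 links are not what limits a parity array on this box.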

I have always built my servers from PCs, it’s more flexible. However for the price this thing is attractive.

I think the move should have been to 10GbE, not 2.5GbE. With the advent of SSDs, Ethernet, in its 2.5GbE form, is still the bottleneck. There is time for the mobo makers to do the right thing...

Really looking forward to future NVME NAS devices.

Trying to kit something like this out yourself is very difficult in this form factor.

Sure, you can get a PCIe card, but the motherboard needs bifurcation support for it to work correctly.

There are server boards in the ITX form factor that can do this, but they are cost prohibitive.

I feel like they really missed the mark here. They could have gone with a different embedded chip with more PCIe lanes and a MINIMUM of a 10GbE NIC.

But otherwise, looking forward to a future filled with these types of devices in the market.

I'm not a data HOARDER and could honestly get by with one of these with 4-5 2TB drives, as I imagine most normal people could.

Love the review and video! Looks like a great device. FYI, the max RAM for the Intel Celeron N5105 is 16GB of DDR4-2933. That is likely why the 64GB didn't work. https://www.intel.com/content/www/us/en/products/sku/212328/intel-celeron-processor-n5105-4m-cache-up-to-2-90-ghz/specifications.html