One thing we have been working on in the lab is the Mellanox NVIDIA BlueField-2 DPU series. We have several models, and there is a misconception that we keep hearing in the market: that these NICs are very similar to the typical ConnectX-6 offload NICs one may use for Ethernet or InfiniBand. (NVIDIA has VPI DPUs that can run as Ethernet and/or InfiniBand, like some of its offload adapters.) We wanted to show a different view of the NIC to highlight a key differentiator.

Logging Into a Mellanox NVIDIA BlueField-2 DPU

First off, the 25GbE and 100GbE BlueField-2 DPUs that we have do not just have high-speed ports. There is also a 1GbE port. A useful mental model is a standard Xeon D or Atom-based server with primary network ports plus an out-of-band management port. The 1GbE port is that management port.

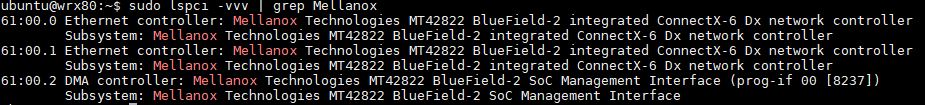

When we plug the NIC into a system, we can see the SoC management interface on the NIC enumerated. Aside from the OOB management port, we can also access the NIC via an rshim driver over PCIe as well as over a serial console interface.

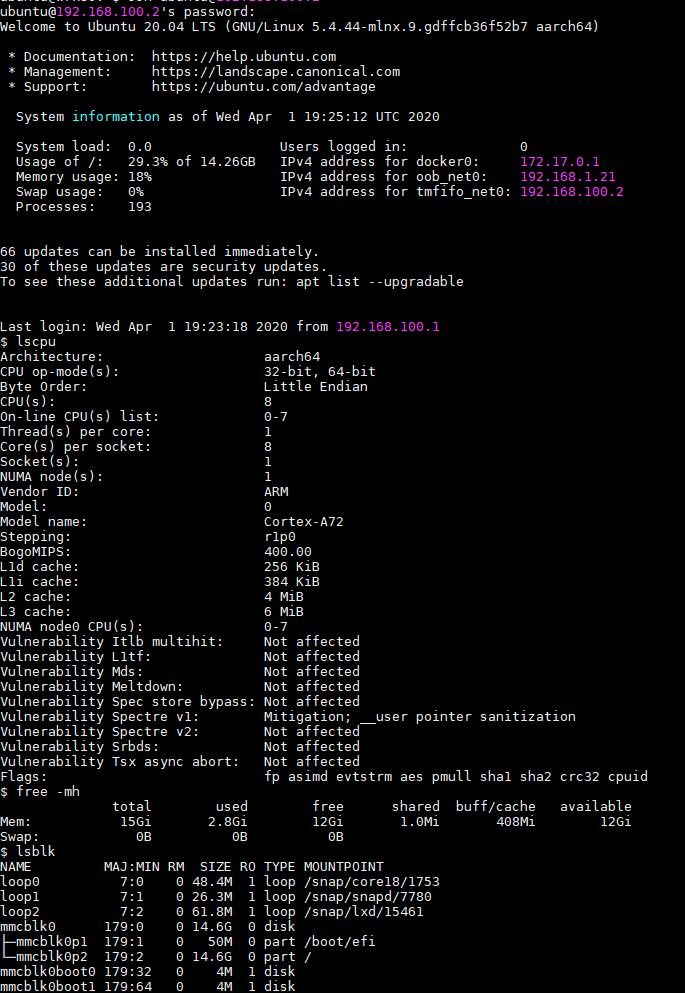

In the host system, we have tools to flash different OSes onto the NIC, but since the default OS is Ubuntu 20.04 LTS, that is what we wanted. Here is a quick look (the default login is ubuntu/ubuntu on the NICs):

As you can see, we have 16GB of memory on the 100GbE card and 8x Arm Cortex-A72 cores.

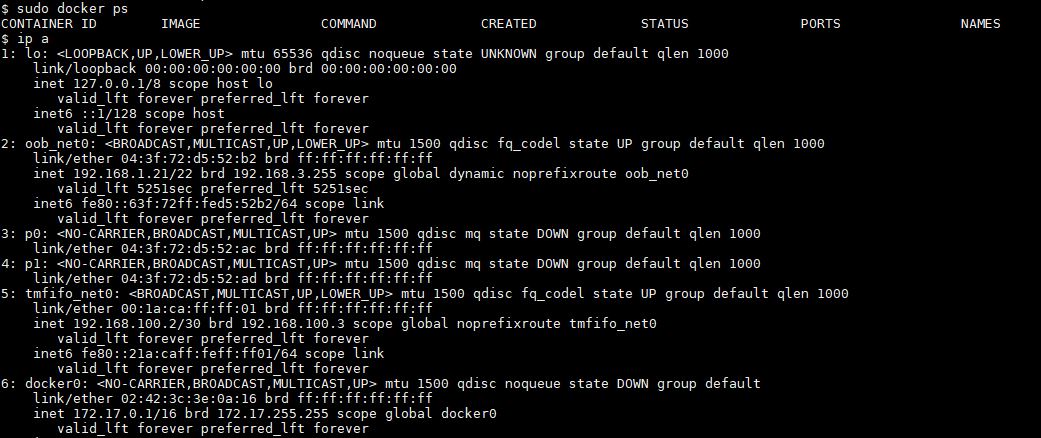
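For readers who would rather confirm the core count and memory from a script than from a screenshot, here is a minimal Python sketch. It assumes a Linux OS on the DPU (such as the default Ubuntu image) that exposes /proc/meminfo. The function names are our own and purely illustrative.

```python
import os


def mem_total_gib(meminfo_text):
    """Return MemTotal from /proc/meminfo-style text in GiB, or None if absent."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            kib = int(line.split()[1])  # the kernel reports this value in kB (KiB)
            return kib / (1024 * 1024)
    return None


def describe_system(meminfo_path="/proc/meminfo"):
    """Collect the logical CPU count and total memory of the machine we run on."""
    try:
        with open(meminfo_path) as f:
            mem = mem_total_gib(f.read())
    except OSError:
        mem = None  # not Linux, or the file is unreadable
    return {"logical_cpus": os.cpu_count(), "mem_total_gib": mem}


if __name__ == "__main__":
    print(describe_system())
```

Run on the DPU itself rather than on the host, we would expect this to report the Arm cores and the card's own memory. The kernel usually reports slightly less than the nominal capacity.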

Something that we were not expecting, but makes sense, is that Docker was already installed on the base image, so we could run sudo docker ps right away. We can also see the various network interfaces.

Here we can see the high-speed ports (down in this screenshot) but also three other interfaces that are important:

- oob_net0 is the out-of-band management port. There is not a web GUI, but this is similar to an out-of-band IPMI port where one can access the SoC outside of the main NIC port data path.

- tmfifo_net0 is the port we use to connect to the DPU over the host’s PCIe link.

- docker0 is the network for the DPU’s docker installation, not the host’s docker installation.
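The interface roles listed above can be captured in a small lookup. This is only a sketch: the role descriptions come from this article, while the helper names and the use of /sys/class/net to list interfaces are our own assumptions. Any interface not in the table is simply labeled as "other", since the names of the high-speed ports are not given here.

```python
import os

# Roles as described in this article; anything else is left unclassified.
KNOWN_ROLES = {
    "oob_net0": "out-of-band management (1GbE port)",
    "tmfifo_net0": "host-to-DPU link over PCIe",
    "docker0": "bridge for the DPU's own Docker installation",
    "lo": "loopback",
}


def classify_interfaces(names):
    """Map each interface name to a role; unknown names get a generic label."""
    return {
        name: KNOWN_ROLES.get(name, "other (possibly a high-speed data port)")
        for name in names
    }


def list_local_interfaces(sysfs_dir="/sys/class/net"):
    """Return interface names from Linux sysfs, or an empty list if unavailable."""
    try:
        return sorted(os.listdir(sysfs_dir))
    except OSError:
        return []


if __name__ == "__main__":
    for name, role in classify_interfaces(list_local_interfaces()).items():
        print(f"{name:<14} {role}")
```

Running it on the DPU and then on the host should make the split visible: oob_net0 and docker0 here belong to the DPU's own OS, not to the host.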

What is interesting here is that the higher-speed networking ports are being shared with the host, but the NIC has its own OS and networking stack.

Final Words

Overall, a common misconception is that the BlueField-2 is a re-brand of a ConnectX-6 NIC. Hopefully this shows that it is effectively its own server. There is high-speed networking, out-of-band management, an 8-core CPU, 16GB of RAM, 16GB of on-device storage, and ways to get to a console outside of the OOB/host ports. These cards are low-power CPU, low-RAM servers themselves. That is why one of the key applications for the BlueField-2’s early adopters is as a firewall solution.

We have many of these, so there will be more on DPUs coming over the next few weeks on STH.

So what is the use case apart from providing highly-offloaded networking for host CPUs?

Ram – we have a second piece and video going live in <12 hours that gets into that more and what the different DPU solutions are.

Applications for this kind of stuff could look like this:

http://www.moderntech.com.hk/sites/default/files/whitepaper/SF-105918-CD-1_Introduction_to_OpenOnload_White_Paper.pdf

http://www.smallake.kr/wp-content/uploads/2015/12/SF-104474-CD-20_Onload_User_Guide.pdf

https://blocksandfiles.com/2018/08/25/solarflare-demos-nvme-over-tcp-at-warp-speed/

https://www.xilinx.com/publications/results/onload-memcached-performance-results.pdf

https://www.xilinx.com/publications/results/onload-nginx-plus-benchmark-results.pdf

https://www.xilinx.com/publications/onload/sf-onload-couchbase-cookbook.pdf

https://www.xilinx.com/publications/results/onload-redis-benchmark-results.pdf

The links are some quick Google hits.

It will be interesting to see if there will be adoption in the open source community of similar initiatives to VMware Project Monterey. Running your hypervisor from the DPU and having 100% of the physical host available for compute as well as intelligent network routing on the DPU will be revolutionary.

Of course AWS has done this for a long time, but sadly doesn’t seem to be interested in open sourcing the Nitro hypervisor.

David – you still need to run ESXi on the host. At least that’s how Project Monterey works. You have two ESXi instances per host. ESXi on the host CPU will do all the virtualization while the DPU will handle other tasks like firewall, vSAN, etc.

I’m curious how exactly NIC sharing works. Do they act as separate interfaces with their own MACs? Or is it exactly the same device presented to both the host system and the DPU? If the latter, I’m not sure how that’s going to work. You can’t have two networking stacks handling the same packets.

Seems more like a solution looking for a problem. I’ve yet to run into a concern where offloading the hypervisor would buy back resources amounting to anything on a modern Epyc/Xeon platform.

hi STH team!

I’m very interested in your opinions on this paper on the performance of the BlueField-2 adapters, particularly because I have been a huge advocate for offload like this within my own company and among our ecosystem stakeholders for as long as I can remember. This paper is the first discouraging report I’ve come across, and I would very much like to understand how its benchmarks relate to applications in the field, if anyone can talk about such things (my customers became investors with board seats as an excuse to close my loud mouth about such things; in my defense, it used to be great for recruitment).

https://arxiv.org/pdf/2105.06619.pdf

jmk – This is 8x A72 cores so any time you go into the CPU complex it is slow. Although BlueField-2 is a 2nd gen product, it still feels a bit like an early technology.