The NVIDIA GH200 is one of the most misunderstood packages out there today. It feels like it is time to put together an easier-to-understand explanation of what the chips are and how they can be used. Originally, we were going to review an NVIDIA GH200 system today. While doing a pass of the article and reading many of the comments we have had over the past few quarters, I realized we needed a level-set on this super cool offering from NVIDIA. When we say “GH200”, the hardware behind the name can vary enormously. Where AMD or Intel might advertise six or more different SKUs, NVIDIA advertises one. So let us get to it.

A Quick Introduction to the NVIDIA GH200 aka Grace Hopper

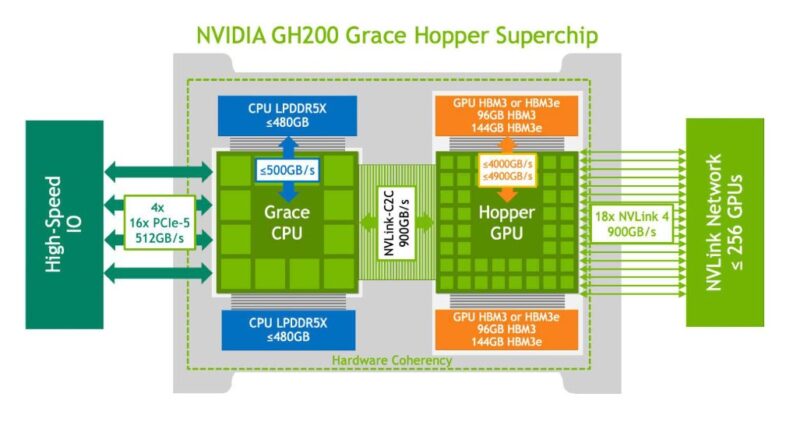

If you want an Arm CPU with LPDDR5X memory with a high-speed interconnect to a Hopper GPU today, you are probably looking at the NVIDIA GH200. Here is what NVIDIA’s official diagram looks like for the part:

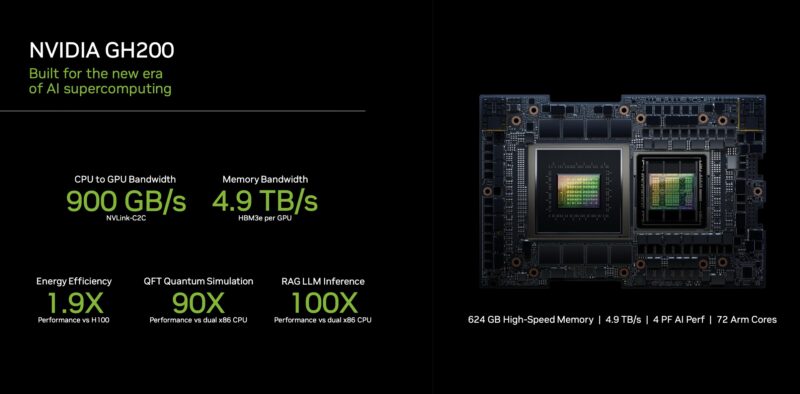

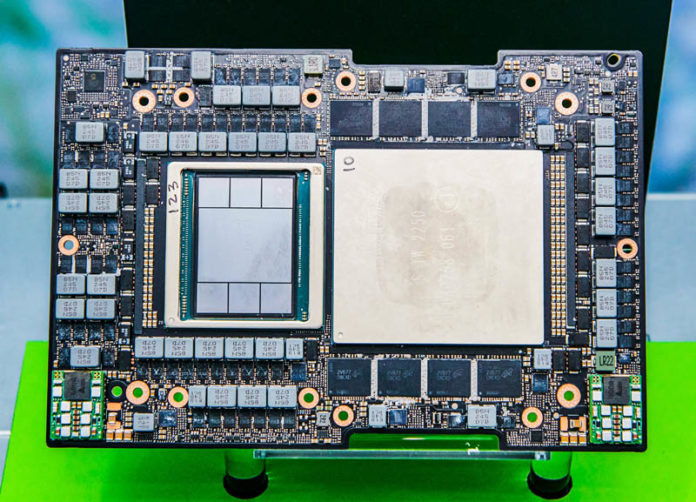

NVIDIA has two key innovations here that really set it apart from what you can get elsewhere. First, the LPDDR5X memory is soldered onto the package. Second, the NVLink-C2C is a high-bandwidth interface between the CPU and the GPU. The 72 Armv9 cores are Arm Neoverse V2 designs from 2022, so they are not exactly unknown new technology. NVIDIA is also not designing its own Arm cores the way Apple, Qualcomm (Oryon), or Ampere (AmpereOne) do.

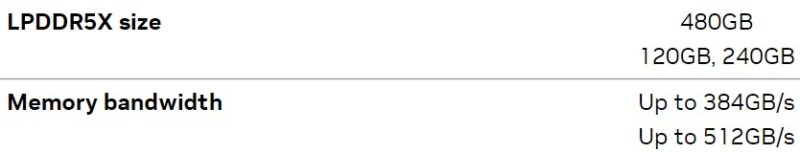

On the topic of the LPDDR5X, most will simply say that the GH200 has 480GB of memory. Reality is a bit more complex. There is also a 120GB version that has been sold as a bandwidth-optimized version, as well as a 240GB version. If you look at the NVIDIA specs, the 480GB version has up to 384GB/s of memory bandwidth while the 120GB and 240GB versions have 512GB/s.

One of the big innovations here is that by moving the memory on-package, NVIDIA does not need to traverse a motherboard and DIMM slots. That allows NVIDIA to drive high performance at lower power. It also means that the NVIDIA GH200 power figure includes system memory.

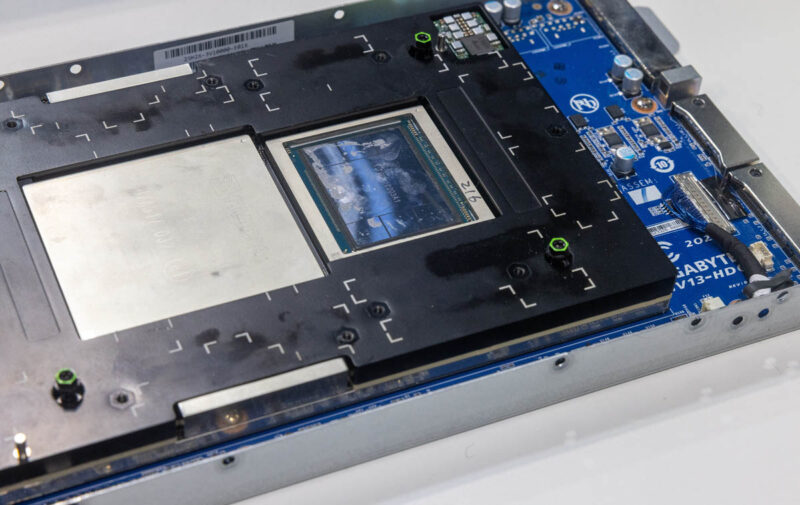

We often show the GH200 and Grace Superchip top-side photos, which show eight LPDDR5X packages per 72-core Arm CPU.

There are LPDDR5X packages on the underside as well, for a total of sixteen per CPU.

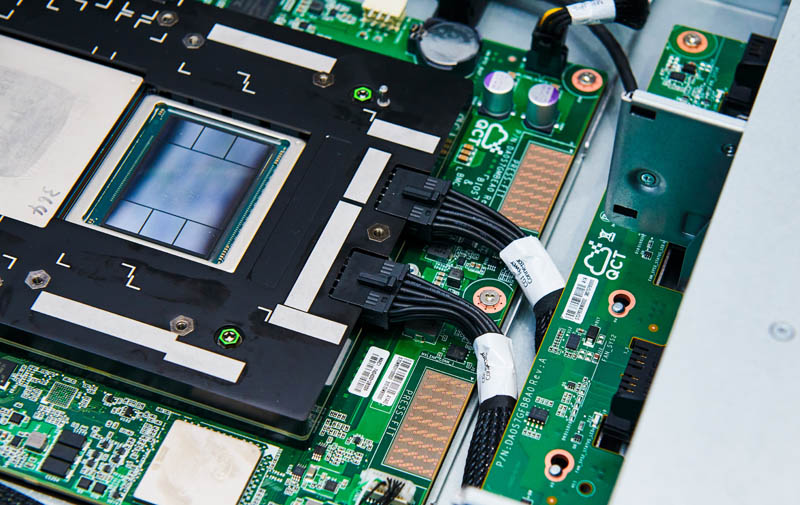

The Grace CPU side of the GH200 offers 64x PCIe Gen5 lanes, which are arranged into four x16 root complexes. This is a lot fewer than a standard server part offers, but since the Hopper GPU is attached via NVLink-C2C instead of PCIe, it is not directly comparable to an AMD EPYC, Intel Xeon, or other CPU. Still, these lanes are used for attaching InfiniBand or Ethernet adapters/DPUs. GH200 systems do not have crazy PCIe connectivity by any means. If one uses two boot drives, that is 8 of the 64 lanes. An InfiniBand adapter for scale-out and a BlueField-3 DPU for the storage network would use 16 lanes each, or 32 lanes combined, which is half of the 64 lanes. NVIDIA is actually doing something really neat here. Alongside the system review next week on the STH main site, we will deep dive into what NVIDIA is building for the future in our Substack.
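The lane math above can be sketched as a small budget check. This is only an illustration: the device names are hypothetical, and the x4 width per boot drive is an assumption chosen so that two drives add up to the 8 lanes mentioned in the text.

```python
# PCIe lane budget sketch using the figures from the text above.
TOTAL_LANES = 64  # Grace CPU side of the GH200, per the article

# Hypothetical device list. The x4 width per boot drive is an assumption
# so that two drives total the 8 lanes mentioned in the text.
devices = {
    "boot_drive_0": 4,
    "boot_drive_1": 4,
    "infiniband_adapter": 16,
    "bluefield3_dpu": 16,
}


def lane_budget(total, allocations):
    """Return (used, remaining) lanes, raising if the budget is exceeded."""
    used = sum(allocations.values())
    if used > total:
        raise ValueError(f"{used} lanes requested, only {total} available")
    return used, total - used


used, remaining = lane_budget(TOTAL_LANES, devices)
print(f"used {used} of {TOTAL_LANES} lanes, {remaining} left")
```

With this example device list, 40 of the 64 lanes are spoken for before adding any other storage or networking.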

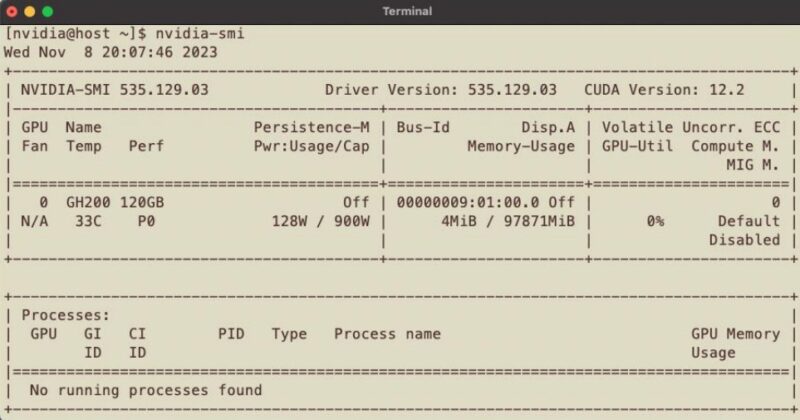

On the GPU side, we call this a “GH200” but that does not mean the GPU onboard is necessarily an H200 variant. There are two versions: a 96GB and a 144GB version. A standard NVIDIA H100 has 80GB of HBM2e for PCIe cards, but 80GB of HBM3 for SXM5 GPUs. Even the H100 memory configurations were different, but many gloss over this. The 80GB on standard H100s is delivered in five stacks, which works out to 16GB per stack.

The 96GB version then has all six stacks that you see around the package populated. You may also see 94GB versions, where we were told some capacity is reserved for yield reasons. This is similar to why we see H200 variants listed at 141GB with 144GB onboard.
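The stack arithmetic above can be checked in a few lines. This is a minimal sketch that uses only the capacities quoted in the text. The function name is hypothetical, and the "reserved" values are simply the difference between onboard and advertised capacity, not an NVIDIA-published figure.

```python
# HBM capacity arithmetic using only the numbers quoted in the text.
GB_PER_STACK = 80 // 5  # 80GB across five stacks on a standard H100


def hbm_capacity_gb(populated_stacks, gb_per_stack=GB_PER_STACK):
    """Capacity in GB for a given number of populated HBM stacks."""
    return populated_stacks * gb_per_stack


five_stack_gb = hbm_capacity_gb(5)  # standard H100
six_stack_gb = hbm_capacity_gb(6)   # all six stacks populated

# Difference between onboard and advertised capacity (derived, not official).
reserved_94gb_variant = six_stack_gb - 94
reserved_h200 = 144 - 141

print(GB_PER_STACK, five_stack_gb, six_stack_gb)
print(reserved_94gb_variant, reserved_h200)
```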

So when we say NVIDIA H100, we can have 80GB or 96GB and it can be HBM2e or HBM3. When we say NVIDIA H200, we mean the 144GB (141GB) HBM3e version. When we say “GH200” the Hopper side of the equation can be either the 96GB H100 HBM3 GPU or the 144GB (141GB) HBM3e H200 GPU. I was corrected for saying the GH200 was an update of the GH100, because apparently both use the “GH200” name, even though you can get the H100 GPU in the GH200.

At this point, you may be thinking, three LPDDR5X memory configurations, and two HBM configurations, that is a lot to cover with a single GH200 name. You would be right, but there is one more fascinating dimension: power.

NVIDIA GH200 Power

An NVIDIA GH200 can be set to run between 450W and 1000W. Most running at the 1kW level will be using liquid cooling. 450W is extremely low for a CPU, GPU, and memory combined. NVIDIA sells its GPUs with a range of configurable TDPs, which can be set simply using the “nvidia-smi --power-limit=” option. Average frequencies do not scale linearly with voltage, but if you have a 500W TDP and a 1000W TDP there will be a big difference in performance.
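Before changing a limit, it helps to see what range a given system actually allows. The sketch below wraps `nvidia-smi` with its `--query-gpu` power fields and parses the CSV output. The helper names are hypothetical, the sample string is made up for illustration rather than captured from real hardware, and the values a GH200 system reports will depend on the platform.

```python
import subprocess

QUERY_FIELDS = ["power.limit", "power.min_limit", "power.max_limit"]


def parse_power_csv(text):
    """Parse 'csv,noheader,nounits' output into one dict of watts per GPU."""
    rows = []
    for line in text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        row = {}
        for name, value in zip(QUERY_FIELDS, values):
            try:
                row[name] = float(value)
            except ValueError:
                row[name] = None  # e.g. "[N/A]" when a field is unsupported
        rows.append(row)
    return rows


def query_power_limits():
    """Run nvidia-smi (must be installed) and return the parsed limits."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_power_csv(result.stdout)


# Made-up sample output for illustration, not a measurement.
sample = "900.00, 450.00, 1000.00\n"
limits = parse_power_csv(sample)
print(limits)
```

On a live system, `query_power_limits()` would replace the sample string. Setting a new limit with `nvidia-smi --power-limit=` typically requires administrator privileges, and the value has to fall between the reported minimum and maximum.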

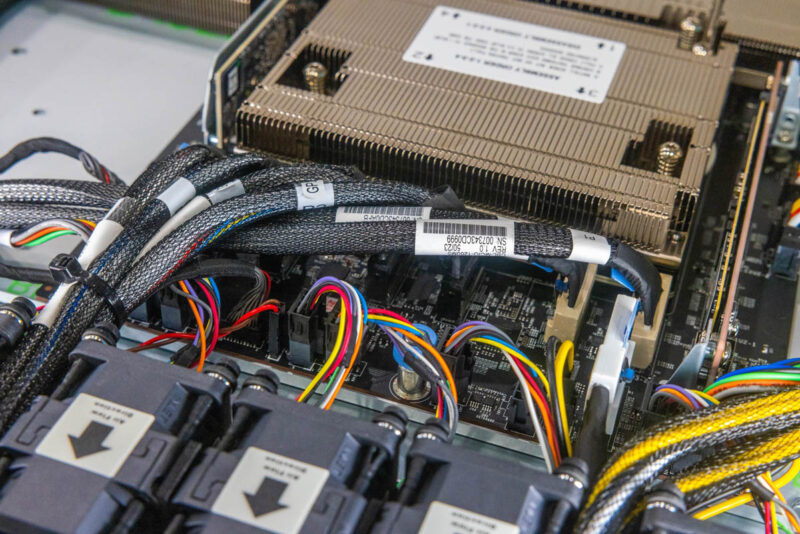

For those who do not know this, another fun part of the GH200 is that the power arrives directly from the power supplies plugged into the GH200 package. In a typical server, CPUs get their power from the motherboard.

The NVIDIA GH200 Recap

So just to recap, when someone mentions a GH200, there are some wild variances in what that can mean alongside the fact that there are Arm Neoverse V2 cores and a NVIDIA Hopper GPU. We can have:

- 120GB, 240GB, or 480GB of LPDDR5X Memory for the CPU

- 384GB/s or 512GB/s of LPDDR5X Memory Bandwidth for the CPU

- 96GB HBM3 or 144GB (141GB) HBM3e for the GPU

- 4TB/s (HBM3) or 4.9TB/s (HBM3e) of GPU Memory Bandwidth

- A huge range in performance depending on the TDP set

This is an enormous range to be called a “GH200”.
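One way to keep these dimensions straight is to write a configuration down as a small record. The sketch below uses only the figures from the recap list. The class and field names are hypothetical, and whether NVIDIA actually ships every CPU-memory and GPU-memory pairing is an assumption the list does not confirm.

```python
from dataclasses import dataclass
from itertools import product

# Figures copied from the recap list; all names here are hypothetical.
LPDDR5X_BANDWIDTH_GBS = {120: 512, 240: 512, 480: 384}  # capacity GB -> GB/s
HBM_OPTIONS = {96: ("HBM3", 4.0), 144: ("HBM3e", 4.9)}  # capacity GB -> (type, TB/s)
TDP_RANGE_W = (450, 1000)


@dataclass(frozen=True)
class GH200Config:
    lpddr5x_gb: int
    hbm_gb: int
    tdp_w: int

    def __post_init__(self):
        # Reject values that do not appear in the recap list.
        if self.lpddr5x_gb not in LPDDR5X_BANDWIDTH_GBS:
            raise ValueError(f"unknown LPDDR5X capacity: {self.lpddr5x_gb}GB")
        if self.hbm_gb not in HBM_OPTIONS:
            raise ValueError(f"unknown HBM capacity: {self.hbm_gb}GB")
        low, high = TDP_RANGE_W
        if not low <= self.tdp_w <= high:
            raise ValueError(f"TDP {self.tdp_w}W outside {low}W-{high}W")

    def describe(self):
        hbm_type, hbm_tbs = HBM_OPTIONS[self.hbm_gb]
        cpu_gbs = LPDDR5X_BANDWIDTH_GBS[self.lpddr5x_gb]
        return (f"{self.lpddr5x_gb}GB LPDDR5X @ {cpu_gbs}GB/s, "
                f"{self.hbm_gb}GB {hbm_type} @ {hbm_tbs}TB/s, {self.tdp_w}W")


# Every CPU-memory x GPU-memory pairing, before TDP is even considered.
memory_combos = list(product(LPDDR5X_BANDWIDTH_GBS, HBM_OPTIONS))
for lpddr5x_gb, hbm_gb in memory_combos:
    print(GH200Config(lpddr5x_gb, hbm_gb, tdp_w=1000).describe())
```

Even ignoring TDP, that is six memory pairings behind one product name, which is why it is worth spelling out all three fields when comparing systems.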

Final Words

Since the GPU in the GH200 can be either more like the SXM5 H100 or the H200 and TDPs can be very different, it is important to be clear about which NVIDIA Grace Hopper version one is talking about. We have heard vendors try to tell customers that a GH200 96GB is an H200 because it is in the name, but that is not really the case. Aside from the memory capacity for the CPU side, be sure to know what you are getting in a system when it comes to the GPU side and also what TDP a system supports on the GH200. If it is air-cooled, you probably also want to confirm the ambient temperature at which that TDP is supported.

Hopefully, this quick guide will help folks better frame discussions and decisions around the NVIDIA GH200. It is a very cool part, but there are also enormous variances between the different configurations.

I think we need a five-dimensional cube to tabulate the models by each axis of varying spec.

Anyway, thank you Patrick for making plain what GH200 means. I certainly was under the impression that it was one thing and not a family.

I thought it was a product, not a family of products too.

Great write-up. I’ve been working with the GH200 for a couple of months and honestly really like it. The arm64 architecture means a lot of newer apps need to be built from source, but the performance is pretty good when clustering a few of them together. I definitely recommend using tcmalloc to utilize that CPU-GPU memory transfer speed. When comparing a 4xGH200/BF3 cluster to my 4xA100 watercooled system, it is more than twice the speed. Looking forward to seeing what the new GB200 brings with it.

I’m shocked. We’ve got a few thousand H100s and I thought GH200 meant all were H200 type.

Best GH200 resource out there other than what NVIDIA’s own site’s got.

So if you’ve got the 480GB GH200 you don’t have insane memory bandwidth? The combined ranked DIMMs will hit these numbers on normal CPUs ez.

Thanks, great overview. ChipsandCheese had an interesting look at the Grace CPU, and one of their conclusions was that the Neoverse V2-based Grace CPU showed some of the downsides of NVIDIA using a non-customized Arm Neoverse V2 design, which probably left performance on the table. https://chipsandcheese.com/2024/07/31/grace-hopper-nvidias-halfway-apu/