Today we wanted to take a look at the liquid cooled Supermicro SYS-821GE-TNHR server. This is Supermicro’s 8x NVIDIA H100 system with a twist: it is liquid cooled for lower cooling costs and power consumption. Since we had the photos, we figured we would put this into a piece.

A Look at the Liquid Cooled Supermicro SYS-821GE-TNHR 8x NVIDIA H100 AI Server

Regular STH readers may have seen this system recently in our Supermicro liquid cooling video, filmed during a visit over the summer.

We did not tell Supermicro that we were doing this piece, but since they paid for our flights for the original piece, we have to say this is sponsored.

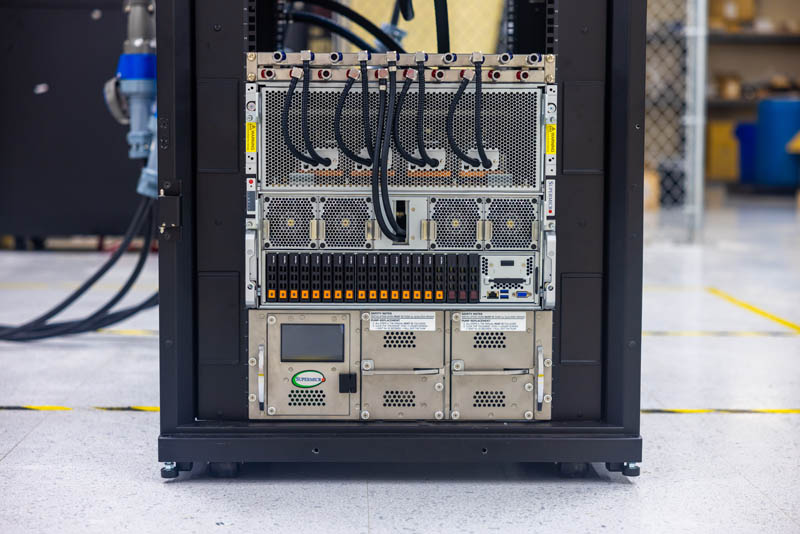

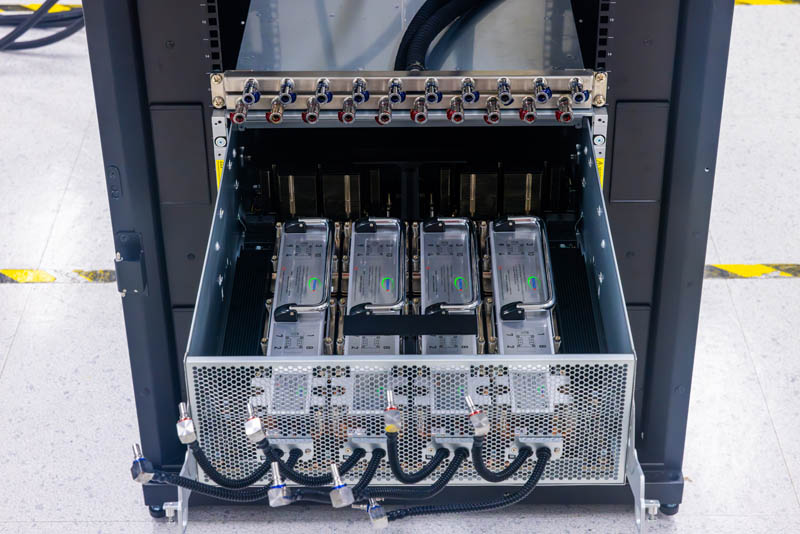

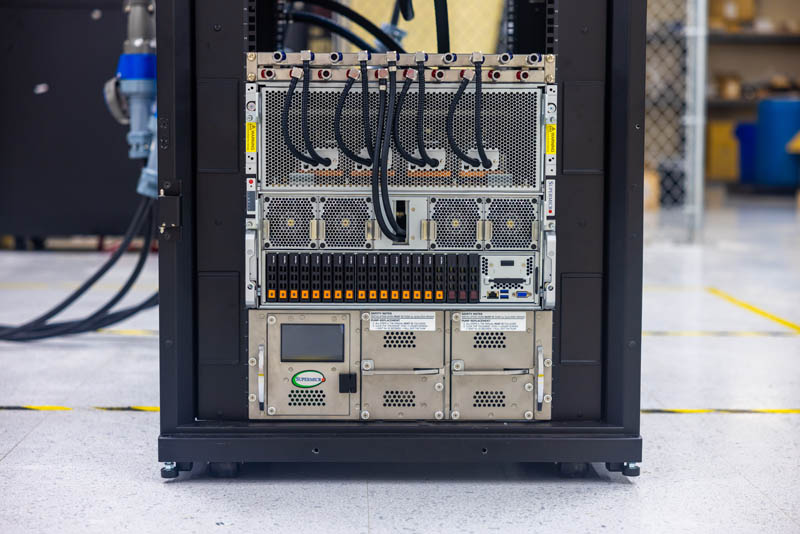

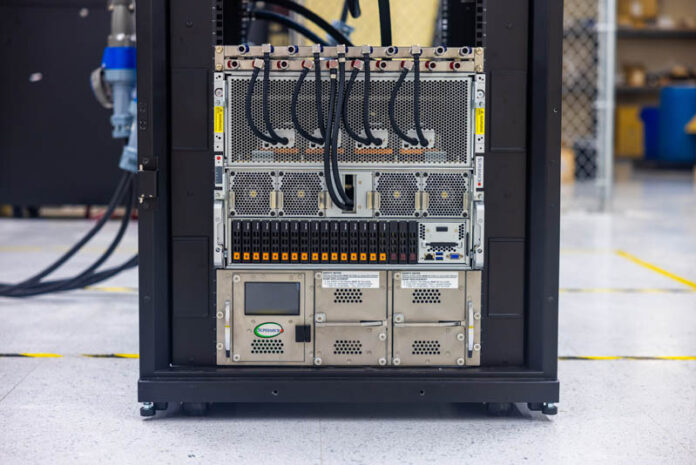

Here is the system pictured with the horizontal rack manifold on top and the Supermicro cooling distribution unit (CDU) on the bottom.

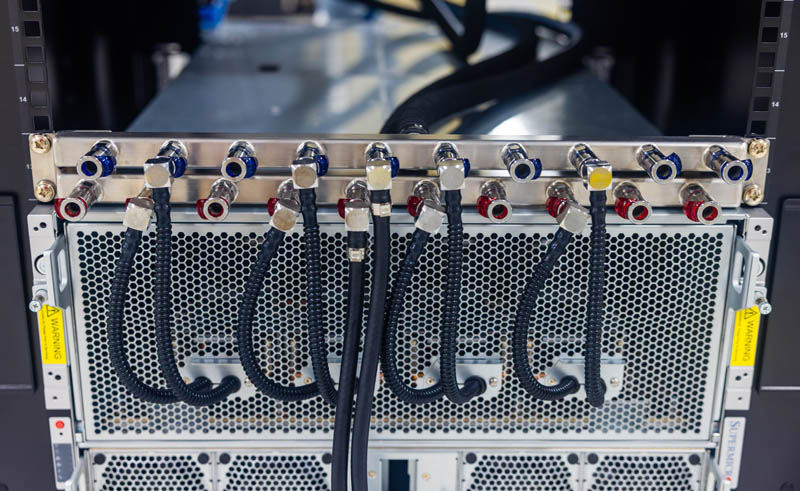

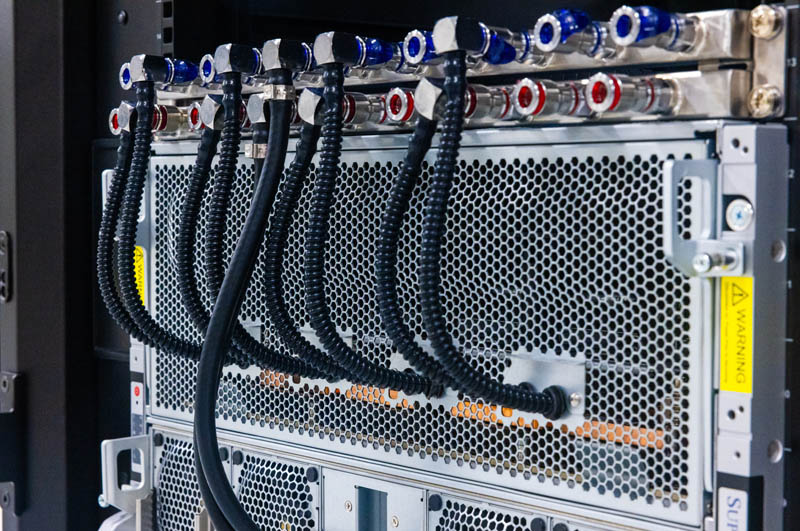

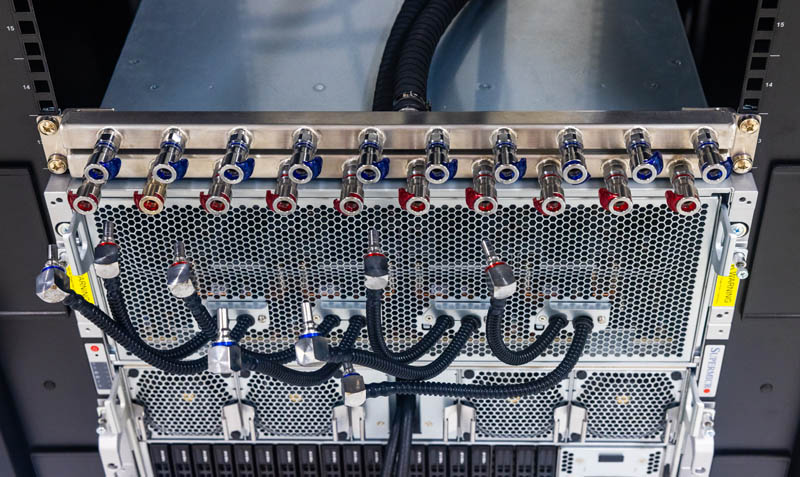

As one can see, the horizontal rack manifold provides five inlet/outlet pairs for liquid cooling.

Four of these pairs go to the top (GPU) tray and one goes to the CPU tray.

Here are the hoses, all disconnected. It took roughly 20 seconds to disconnect all ten quick-disconnect fittings.

The GPU tray on this system slides out. A few systems still on the market do not have a GPU tray that slides out this easily, so this is a differentiator. GPUs do fail, especially in 24×7 HPC or AI clusters, so this is pretty much a required feature for high-end setups.

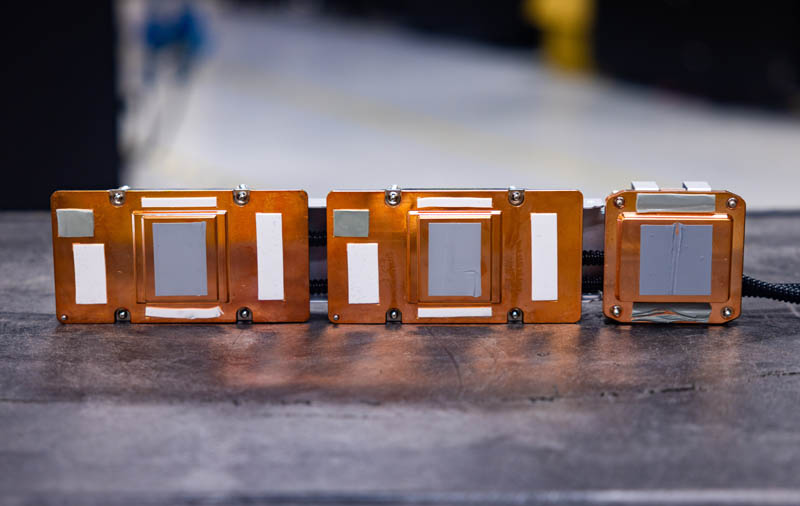

Inside the tray, we can see four sets of dual-GPU liquid cooling blocks, each paired with a single NVSwitch block. The two GPUs and the NVSwitch in each set share one loop, so the system has four loops for the GPUs.
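To make the plumbing easier to follow, here is a minimal Python sketch that models the loops as plain data and counts the fittings and cold plates. It assumes each manifold pair is one loop with one supply and one return hose, uses hypothetical labels (GPU0 to GPU7, NVSwitch0 to NVSwitch3, CPU0/CPU1) since the article does not number the parts, and assumes a dual-socket CPU tray. It does not model whether coolant passes through the cold plates in series or in parallel.

```python
from dataclasses import dataclass, field


@dataclass
class CoolingLoop:
    """One inlet/outlet pair on the rack manifold and the cold plates it feeds."""
    name: str
    cold_plates: list = field(default_factory=list)
    # Assumption: one supply (inlet) and one return (outlet) hose per loop,
    # each ending in a quick-disconnect fitting.
    fittings: int = 2


def build_topology() -> list:
    """Four GPU loops (2x GPU + 1x NVSwitch each) plus one CPU loop."""
    loops = []
    for i in range(4):
        loops.append(
            CoolingLoop(
                name=f"gpu_loop_{i}",
                # Hypothetical labels; the article does not number the parts.
                cold_plates=[f"GPU{2 * i}", f"GPU{2 * i + 1}", f"NVSwitch{i}"],
            )
        )
    # Assumed dual-socket CPU tray on the fifth manifold pair.
    loops.append(CoolingLoop(name="cpu_loop", cold_plates=["CPU0", "CPU1"]))
    return loops


def summarize(loops: list) -> dict:
    """Count loops, quick-disconnect fittings, GPUs, and NVSwitches."""
    plates = [plate for loop in loops for plate in loop.cold_plates]
    return {
        "loops": len(loops),
        "quick_disconnects": sum(loop.fittings for loop in loops),
        "gpus": sum(plate.startswith("GPU") for plate in plates),
        "nvswitches": sum(plate.startswith("NVSwitch") for plate in plates),
    }


if __name__ == "__main__":
    print(summarize(build_topology()))
    # {'loops': 5, 'quick_disconnects': 10, 'gpus': 8, 'nvswitches': 4}
```

The counts line up with what we saw: five manifold pairs, ten quick-disconnect fittings, eight H100 GPUs, and four NVSwitch blocks.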

Here is another look with the NVSwitch side being on the front of the chassis.

We have seen some other liquid cooling solutions that do not cool the NVSwitches, but since these are well over 100W each, they need to be liquid-cooled to keep fan speeds down.

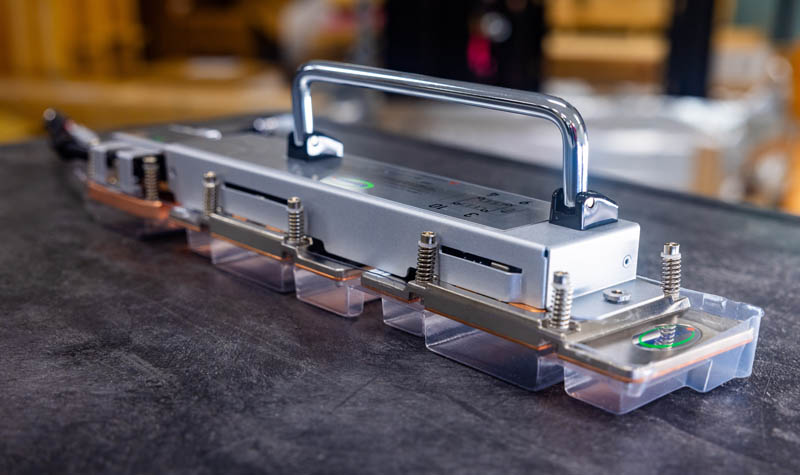

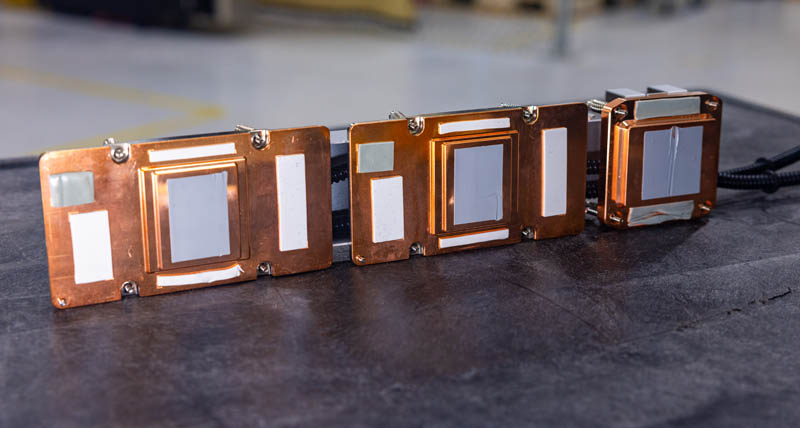

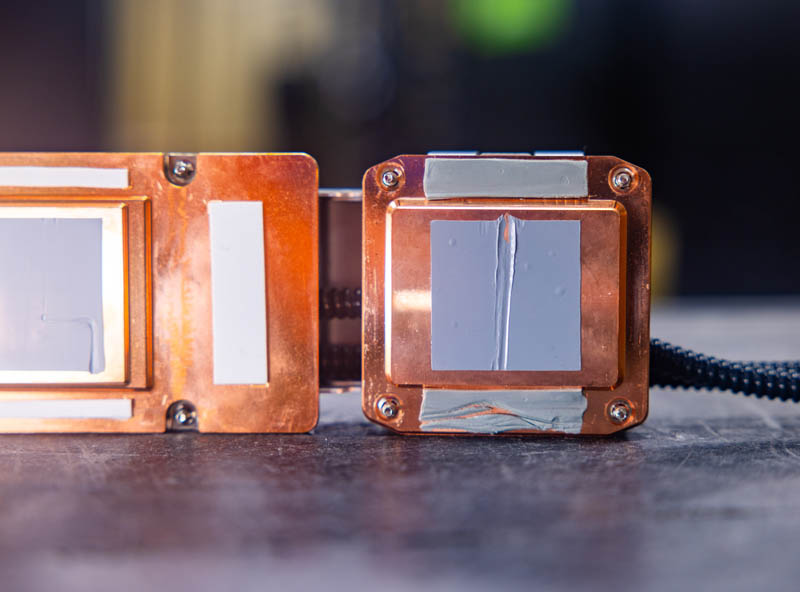

Here is a look at the liquid cooling block from the GPU side.

Here is the other side.

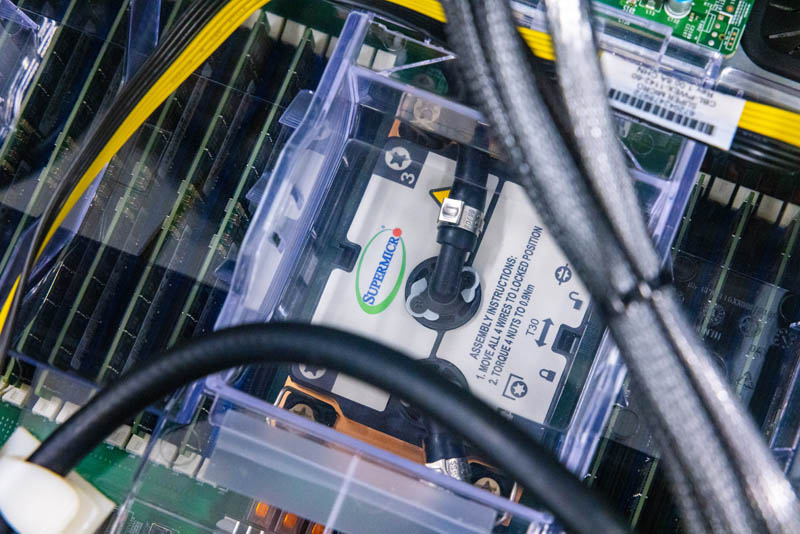

These even have little logos since they are Supermicro-designed.

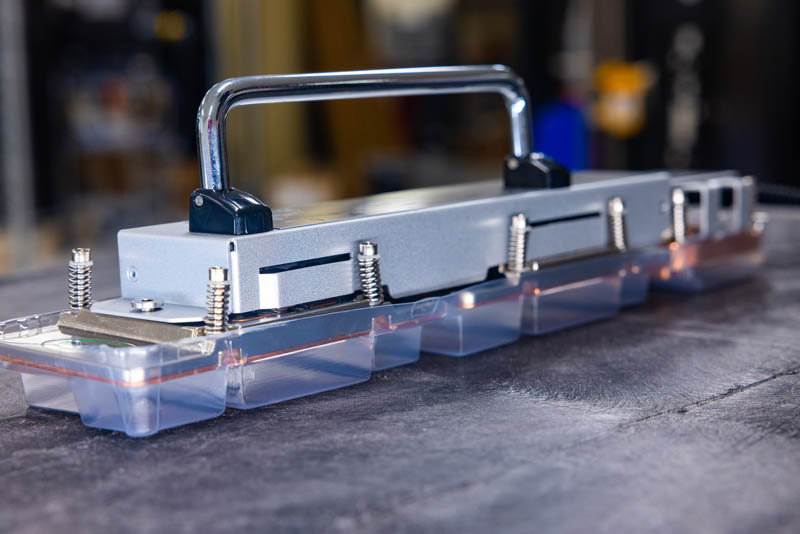

Here is the smaller block for the NVSwitch.

Here is the bottom with the two GPU cold plates and the NVSwitch cold plate.

Taking a look at another angle, we can see paste and pads for all of the key H100 components.

Here is the NVSwitch block that we may or may not have messed up the paste on when moving it.

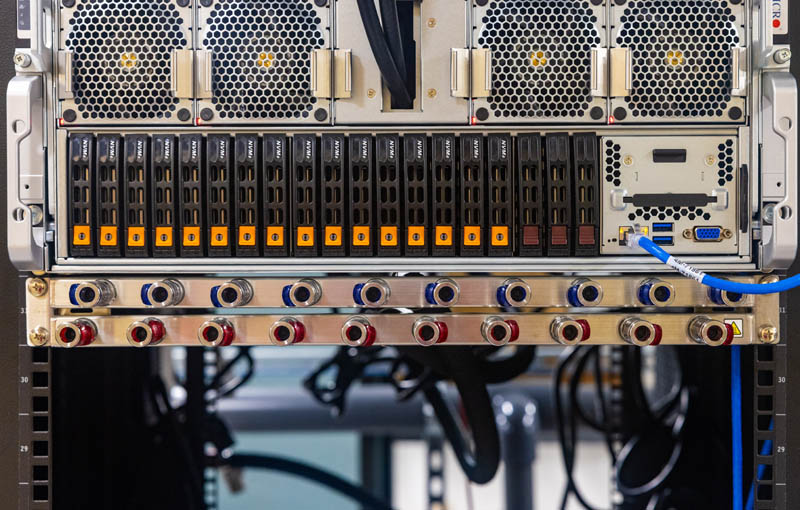

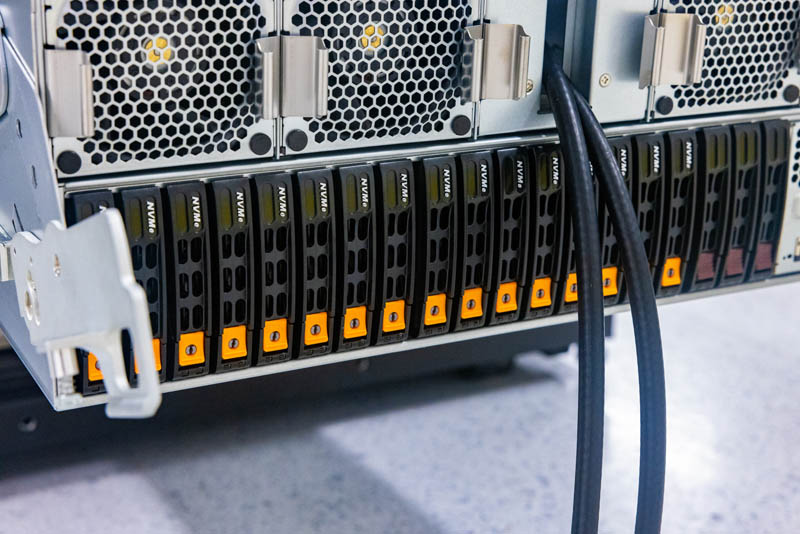

Below the GPU tray is the CPU and storage tray.

Here, we can see an array of storage for the system and the two hoses (inlet and outlet) for the CPU cooling loop.

On the right, we get the chassis management module for features like IPMI and local management.

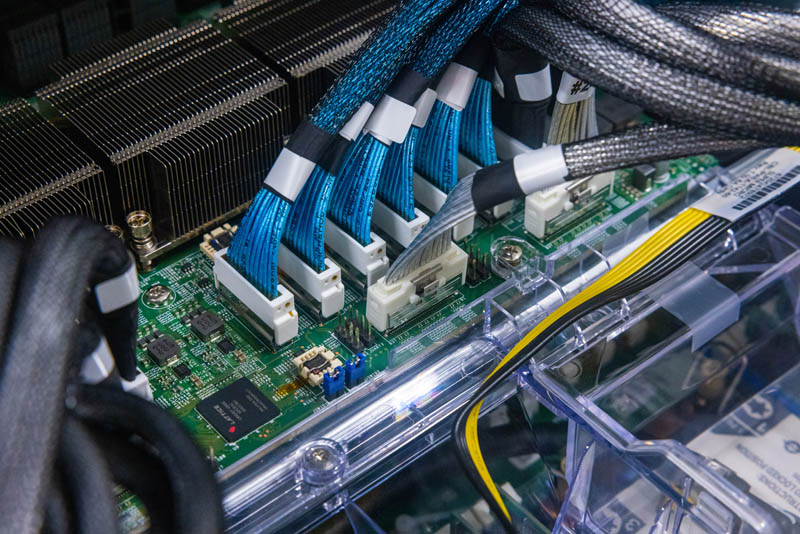

Here is a better look at the CPU cooling loop and fans. The fans are still needed to cool all of the lower-power components like DDR5 modules, PCIe switches, and so forth.

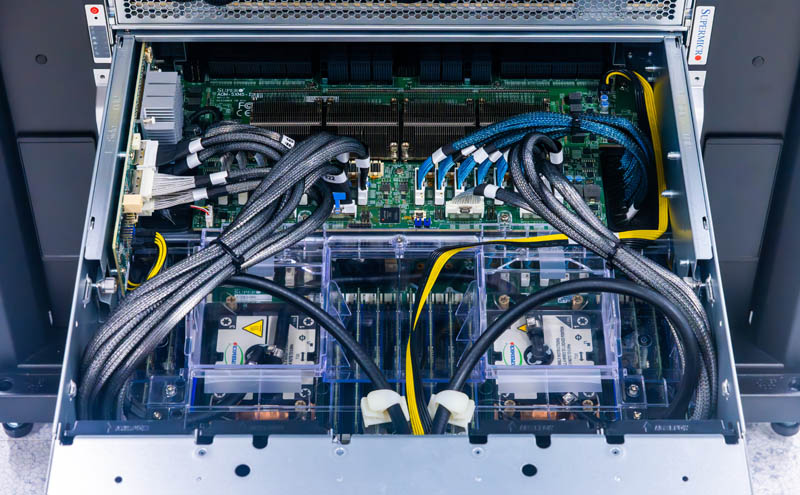

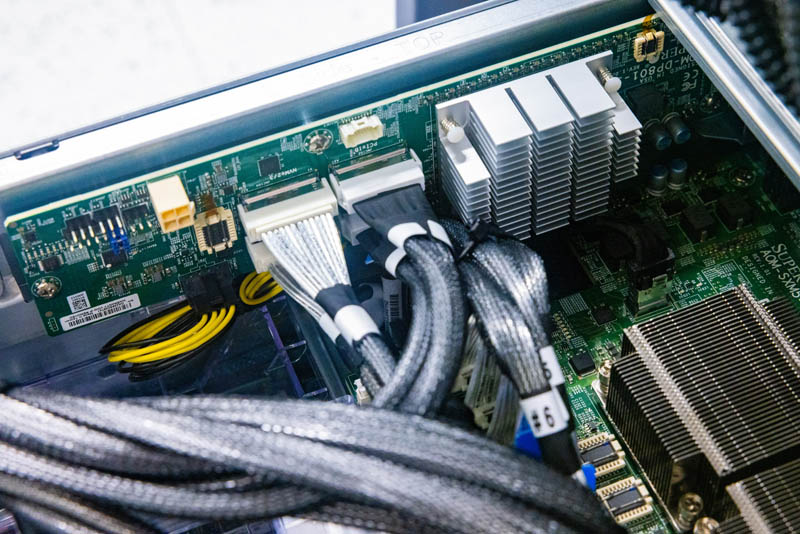

Pulling the CPU and storage tray out, we can see a lot going on.

There is an airflow guide to route air over the 32 DDR5 DIMM slots.

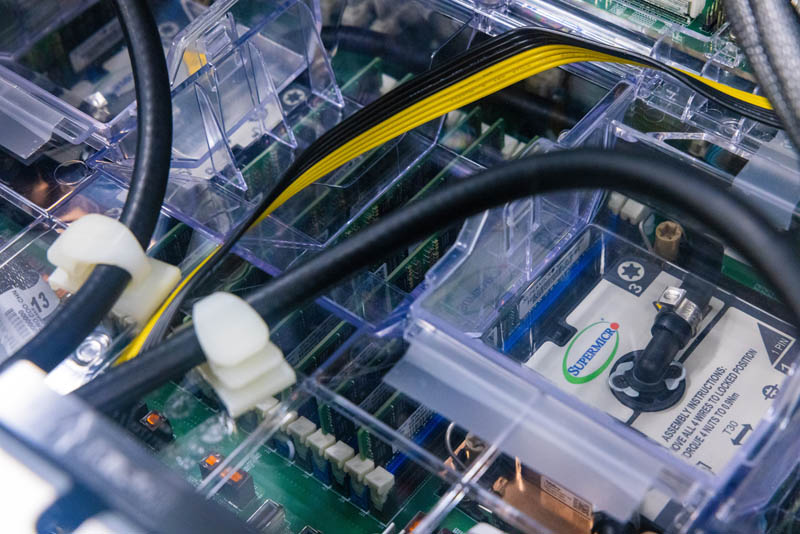

Here we can see the CPU liquid cooling block. Supermicro offers both Intel Xeon and AMD EPYC CPU trays for this server, so if one wanted to use something interesting like Intel’s onboard accelerators or 128-core AMD EPYC Bergamo parts, that is fairly easy to customize.

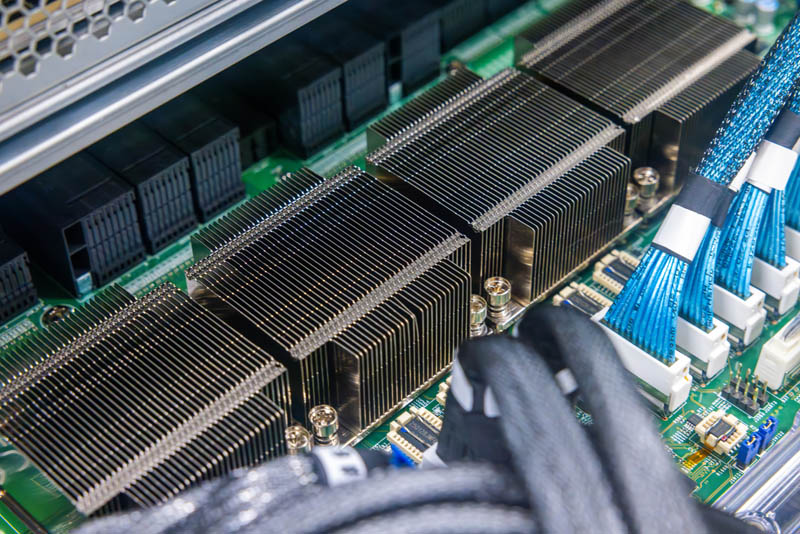

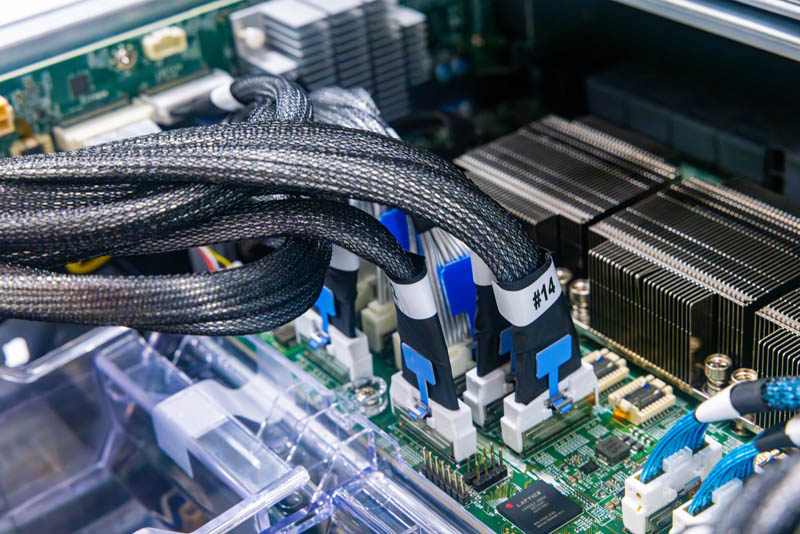

PCIe switches are a big part of AI servers these days. Here we can see the PCIe cables, since cabled connections are needed between the CPUs, the PCIe switch board, and other components.

Under these heatsinks, we have PCIe switches.

Here is another set of PCIe cables.

Here is a top view of these.

Here we can see the DP801 expansion module. There are NIC DP801s, PCIe expander DP801s, and more.

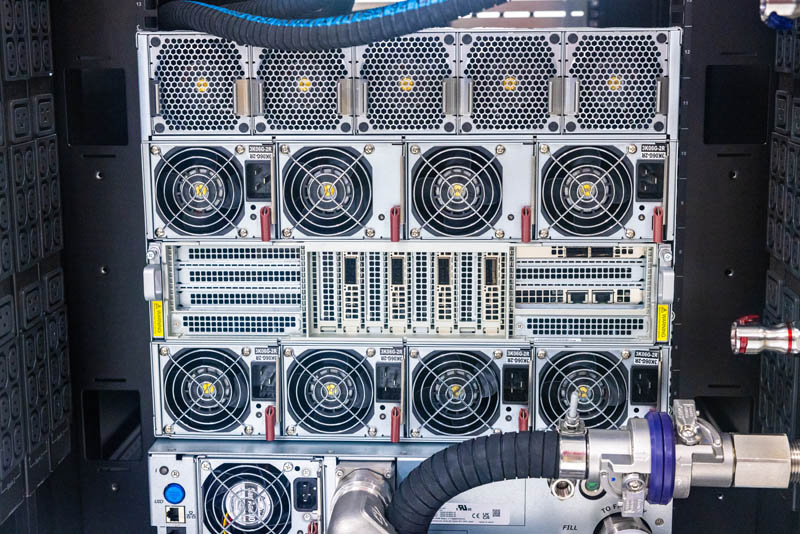

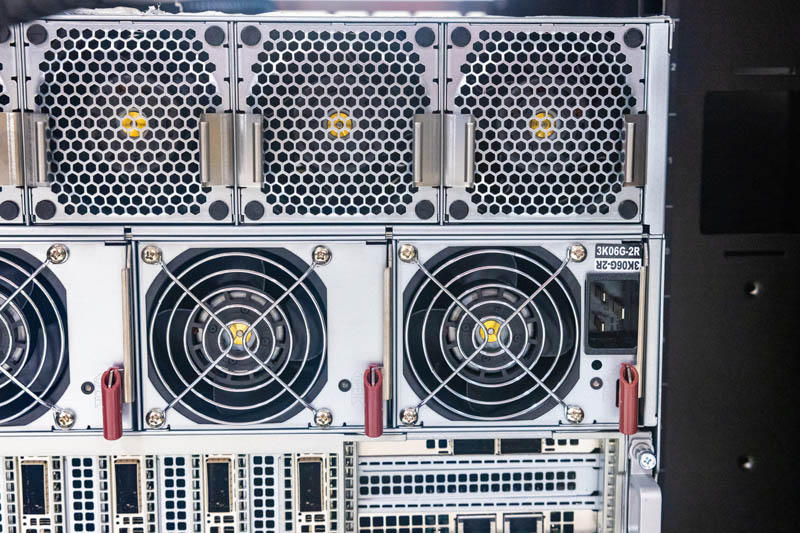

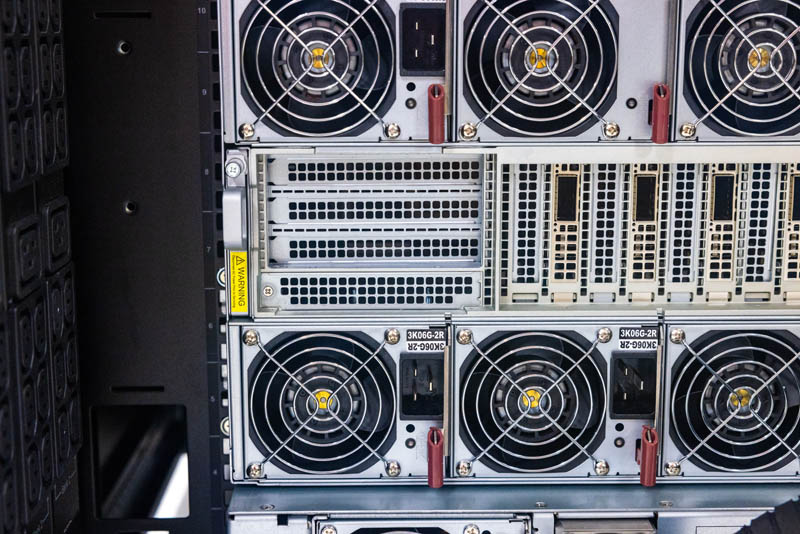

Moving to the back of the system, we see a very standard feature set from the major AI systems players. The rear holds the power supplies, networking, and fans.

The fans are hot-swappable and the power supplies are big.

Each power supply is a 3kW unit. As one might imagine, Supermicro built this system not just for current-generation GPUs but also for future ones. The system is also offered in an air-cooled configuration, which is where we would expect higher chassis power draw.
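As a rough illustration of why 3kW supplies leave room to grow, here is a back-of-the-envelope power budget in Python. The 3kW rating comes from this system and 700W is NVIDIA's maximum TDP for the H100 SXM module; the PSU count and the 3+3 redundancy scheme are assumptions for illustration, so check the actual configuration before relying on the numbers.

```python
PSU_RATING_W = 3000   # each power supply is 3kW
H100_SXM_TDP_W = 700  # NVIDIA's maximum TDP for the H100 SXM module
GPU_COUNT = 8


def redundant_capacity_w(psu_count: int, psu_rating_w: float, spares: int) -> float:
    """Power still available after losing `spares` supplies (N+M redundancy)."""
    if not 0 <= spares < psu_count:
        raise ValueError("spares must be non-negative and fewer than installed PSUs")
    return (psu_count - spares) * psu_rating_w


def gpu_budget_w(gpu_count: int, tdp_w: float) -> float:
    """Worst-case GPU draw if every GPU runs at its maximum TDP."""
    return gpu_count * tdp_w


if __name__ == "__main__":
    # Assumption: six PSUs configured 3+3, so three can fail without losing power.
    capacity = redundant_capacity_w(psu_count=6, psu_rating_w=PSU_RATING_W, spares=3)
    gpus = gpu_budget_w(GPU_COUNT, H100_SXM_TDP_W)
    # The remainder must cover CPUs, memory, NICs, storage, and fans,
    # plus any higher-power GPUs in the future.
    print(f"capacity={capacity} W, gpus={gpus} W, headroom={capacity - gpus} W")
    # capacity=9000 W, gpus=5600 W, headroom=3400 W
```

Under those assumptions, eight GPUs at full TDP take 5,600W of a 9,000W redundant budget, and the rest of the platform has to fit in what is left.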

Here is a look at the networking tray. The networking tray can have InfiniBand, 100GbE, 200GbE, 400GbE adapters, and more. We even see two copper ports here.

As one can see, the networking tray can be removed for easy servicing and customization.

As you may have noticed, we looked at more than one of these servers. When we compared the performance of the NVIDIA H100 80GB GPUs with air cooling versus liquid cooling, the results were effectively the same. One would choose the liquid cooling option for its lower power consumption, which leads to lower operating costs and potentially higher rack density.
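The operating-cost argument comes down to simple arithmetic: a lower steady-state draw, multiplied by hours of operation and the electricity price, gives the savings. The Python sketch below assumes a constant power delta and optionally scales it by a facility PUE to approximate cooling overhead. We did not publish power figures here, so every input in the example is a hypothetical placeholder, not a measurement.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def annual_savings(delta_kw: float, price_per_kwh: float, pue: float = 1.0,
                   hours: float = HOURS_PER_YEAR) -> float:
    """Yearly cost difference for a server that draws `delta_kw` less power.

    Multiplying by `pue` approximates the facility-level saving, assuming the
    PUE stays roughly constant as the IT load drops.
    """
    if delta_kw < 0 or price_per_kwh < 0 or pue < 1.0:
        raise ValueError("expected delta_kw >= 0, price_per_kwh >= 0, pue >= 1")
    return delta_kw * pue * hours * price_per_kwh


def rack_savings(servers: int, **kwargs) -> float:
    """Scale the per-server figure to a rack of identical servers."""
    return servers * annual_savings(**kwargs)


if __name__ == "__main__":
    # Hypothetical inputs only: 0.5 kW saved per server, $0.12/kWh, PUE 1.4.
    per_server = annual_savings(delta_kw=0.5, price_per_kwh=0.12, pue=1.4)
    per_rack = rack_savings(4, delta_kw=0.5, price_per_kwh=0.12, pue=1.4)
    print(f"per server: ${per_server:,.0f}/yr, per rack of 4: ${per_rack:,.0f}/yr")
```

Keeping the per-server function separate from the rack scaling makes it easy to plug in a measured power delta and the actual number of servers a rack can hold.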

Final Words

Often folks assume that all NVIDIA H100 8-way Delta Next systems are the same. Now that we have had hands-on time with almost all of the options out there, it is evident they are not. Air cooling and Intel Xeon Sapphire Rapids (and soon Emerald Rapids) are table stakes. Pricing, availability, serviceability, and the ability to customize with things like liquid cooling, AMD options, different NICs, and more are now the big differentiators.

Hopefully, this was a cool look at the Supermicro SYS-821GE-TNHR system. This is one of the massive systems that are extremely popular for AI. We have been looking at Supermicro AI GPU training systems since 2016/2017, and it is cool to see how we have come from 8x and 10x PCIe GPU training servers to today’s very flexible liquid-cooled options.
