Supermicro ARS-210M-NR Internal Hardware Overview

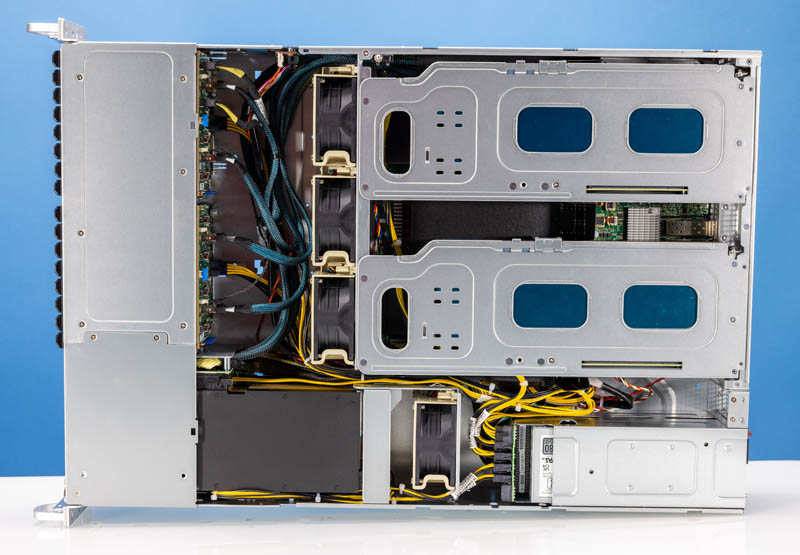

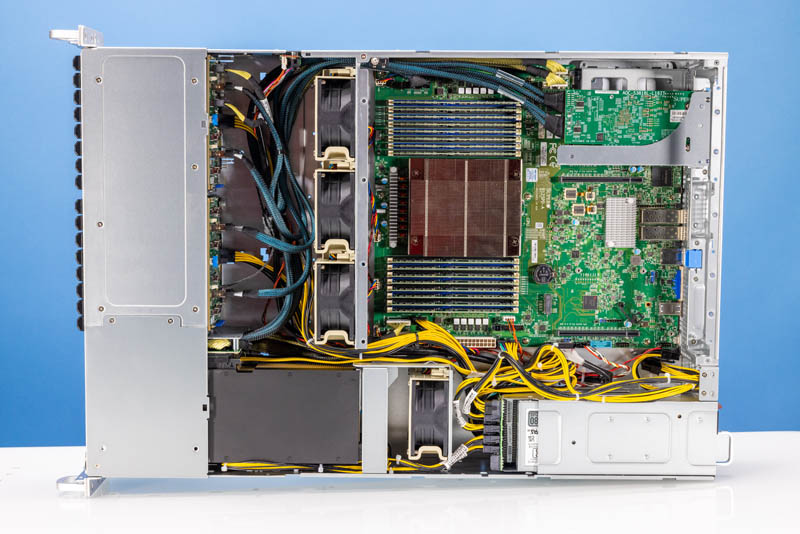

To help orient our readers, here is the system with everything configured. One note we will make is that this is a pre-production system, so some features like stickers, as well as the CPU airflow guide, are not in their final form.

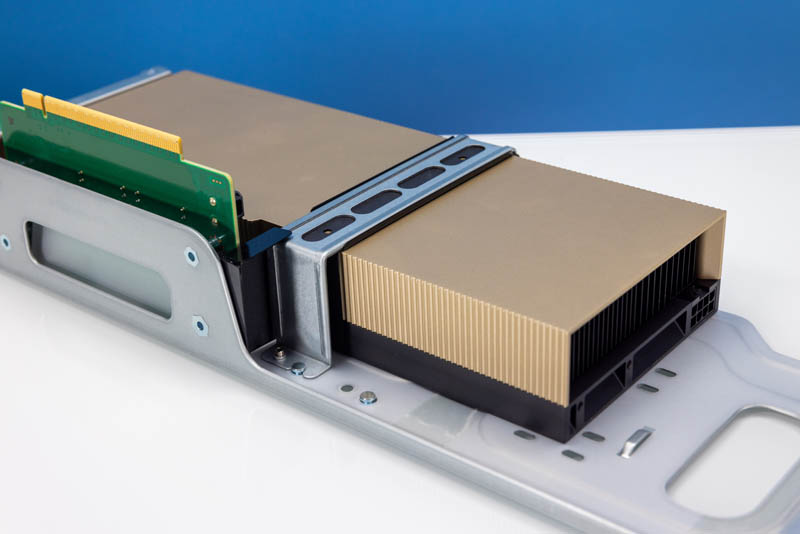

On the subject of those GPU risers in the rear, they are very interesting. Here is one out of the system with an NVIDIA A16 installed. There is a strap that goes around the GPU to hold it in place. This strap was not too difficult to install and required only one screw. That was one of the only screws one needs in order to configure this system.

Here is a look at another small feature of this generation of servers. There are two retention clips for PCIe cards, so one does not have to use screws to lock cards in place.

Here is a look at these locking mechanisms in the unlocked position. Some vendors do a single lock across all slots on risers. That means one lock to access all cards. This is a unique solution because it allows, for example, a single-width card to stay locked in place while accessing a second one in the riser. While it may be a small feature, this is STH so we are going into that level of detail.

Achieving the option of having either two single or one dual-width card in the riser is simple. Supermicro’s risers have slots for two cards. There is the PCIe x16 connector on the bottom of the riser, but if one wants to use a second card, PCIe lanes can be brought over via cables. That is a very flexible design.

Here is the NVIDIA A16 in the riser without the strap. These risers remove without screws.

With the risers and the pre-production airflow shrouds out, here is a look inside the server. Supermicro told us the thin airflow guides we had would be replaced by hard plastic versions later. They were just not ready when we got the servers. That is why we are not focusing on them.

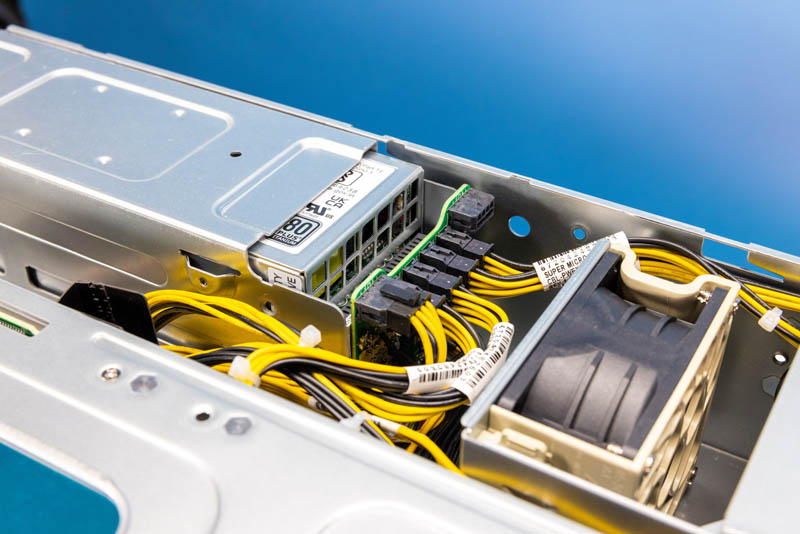

The power distribution in a GPU server is always interesting. Between the front GPU fan and the PSUs is the power distribution board that sits vertically in the chassis. This is where the power is distributed via cables to the motherboard as well as the GPUs.

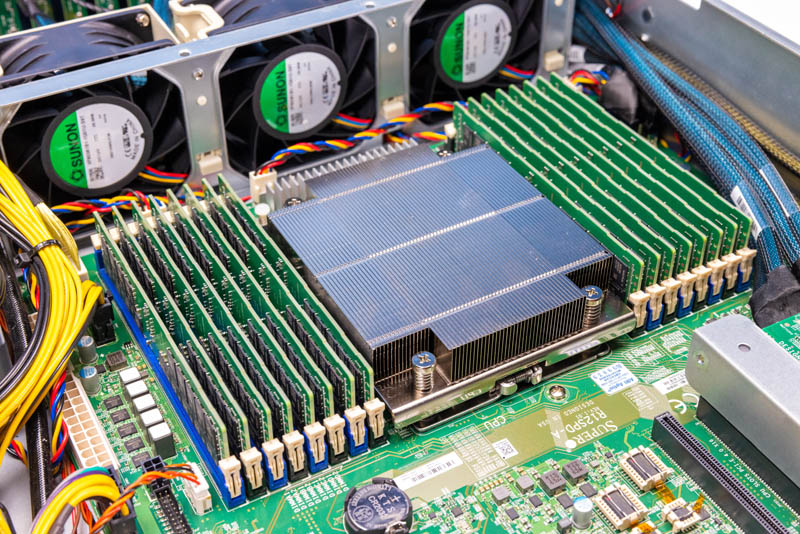

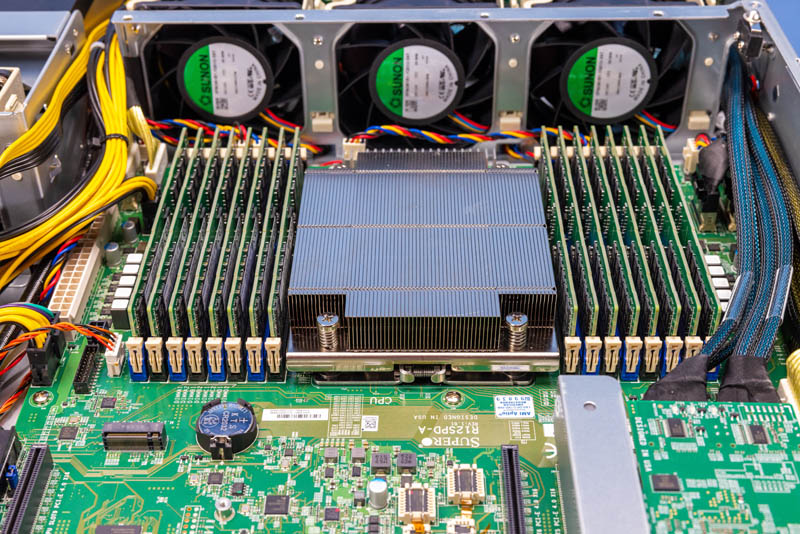

Cooling for the main part of the server utilizes three large hot-swap fans.

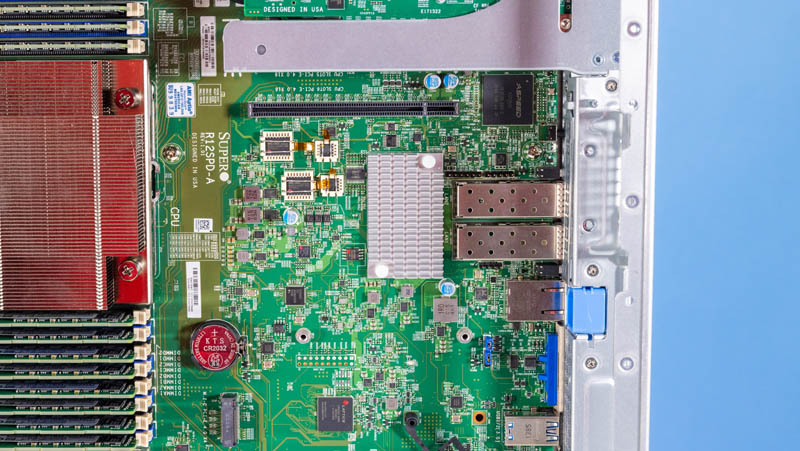

Behind the fans is the Supermicro R12SPD-A motherboard. This motherboard’s main feature is the Ampere Altra socket. It is capable of accepting both the Ampere Altra (up to 80 cores) and the Altra Max (up to 128 cores) CPUs.

The CPU socket is flanked by 16x DDR4-3200 DIMMs in 8 memory channels.

Given this is 2023, 128 cores and this DIMM configuration may seem top-end, but for context, this is about half the memory bandwidth and CPU performance of a top-end AMD EPYC 9654 “Genoa” part. On the other hand, it is also much more cost-optimized using DDR4 memory, PCIe Gen4 motherboard and cabling, and lower-power CPUs. The real benefit here is that many applications are designed to run on mobile devices like smartphones that utilize Arm instruction sets, and this is a server that utilizes the same instruction sets.
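As a rough sanity check on the "about half the memory bandwidth" figure, here is a minimal sketch of the theoretical peak calculation. It assumes a 64-bit data path per memory channel and 12 channels of DDR5-4800 for the EPYC 9654. The function name is ours for illustration, and real-world bandwidth will be lower than these peak numbers.

```python
# Theoretical peak memory bandwidth = channels x transfer rate x bytes per transfer.
# Assumption: 64-bit (8-byte) data path per channel, ECC bits excluded.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Return theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    return channels * mega_transfers_per_s * BYTES_PER_TRANSFER / 1000


# Ampere Altra / Altra Max in this server: 8 channels of DDR4-3200
altra = peak_bandwidth_gbs(channels=8, mega_transfers_per_s=3200)

# AMD EPYC 9654 "Genoa": assumed 12 channels of DDR5-4800
genoa = peak_bandwidth_gbs(channels=12, mega_transfers_per_s=4800)

ratio = altra / genoa
print(f"Altra: {altra:.1f} GB/s, Genoa: {genoa:.1f} GB/s, ratio: {ratio:.2f}")
# prints "Altra: 204.8 GB/s, Genoa: 460.8 GB/s, ratio: 0.44"
```

Under these assumptions the Altra platform lands at roughly 44% of the Genoa part's theoretical peak, which lines up with the "about half" description.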

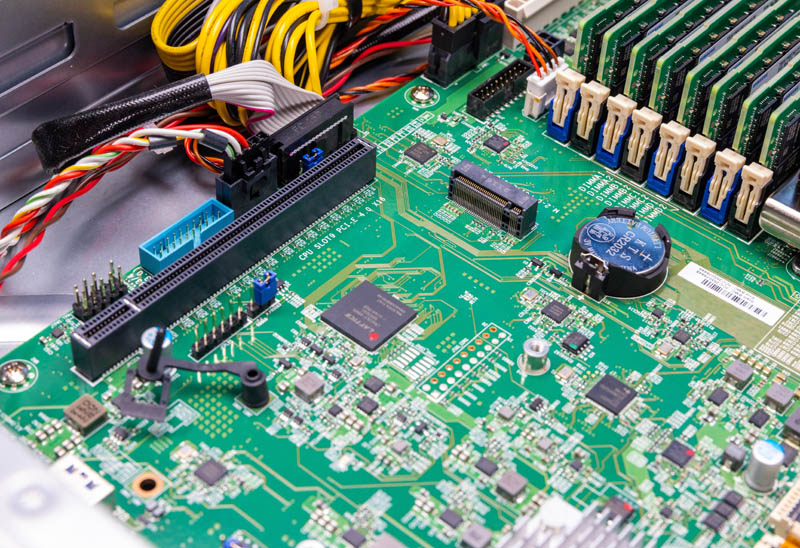

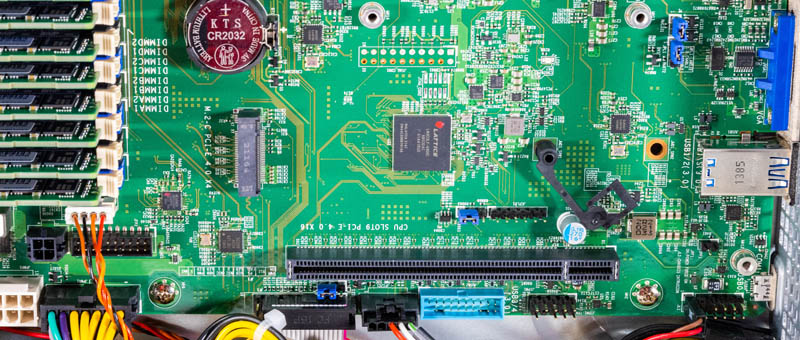

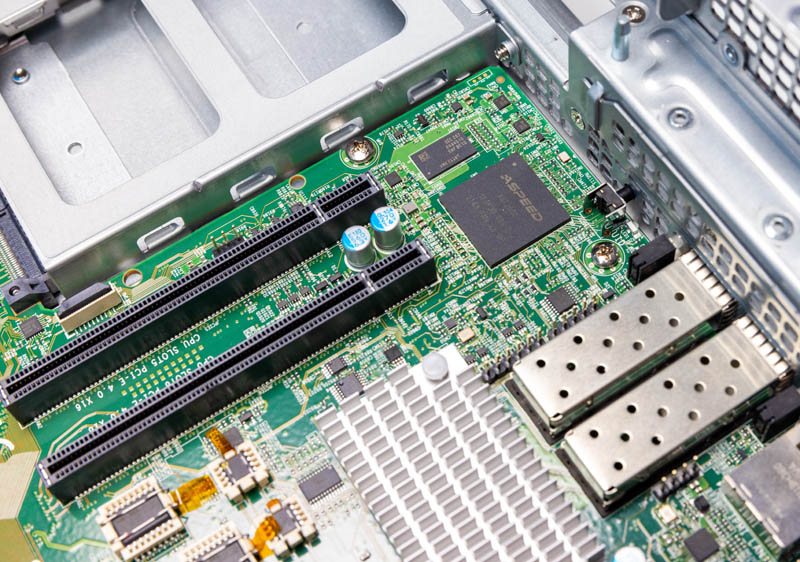

Behind the CPU and memory, we have the rest of the motherboard. Here is one of the PCIe Gen4 x16 slots and an M.2 boot SSD slot. We did not utilize this M.2 slot. Specs say it should be an x4 slot. It is also toolless, so there are no small screws to retain the drive.

Here is another look at this area. One small item to note is that Supermicro’s Mt. Hamilton motherboard is not using a standard ATX power input here, although it is available on the board. This is different from many of the servers we see these days with the proprietary motherboards that have PSU receptacles directly on the board. This is a more general way to make a motherboard, and is why we also see it in other platforms like the Supermicro ARS-210ME-FNR we reviewed.

The BMC in this server is the ASPEED AST2600. As we will show in the management section, it is running OpenBMC.

The big feature behind the CPU is a heatsink with two SFP28 cages. Unlike on an Intel Xeon server where a heatsink behind the CPU is often a PCH, this is something different. It is an NVIDIA (Mellanox) ConnectX-4 Lx dual-port base networking solution.

The final portion of the motherboard we wanted to look at is this section with the cabled PCIe lanes as well as two more expansion slots.

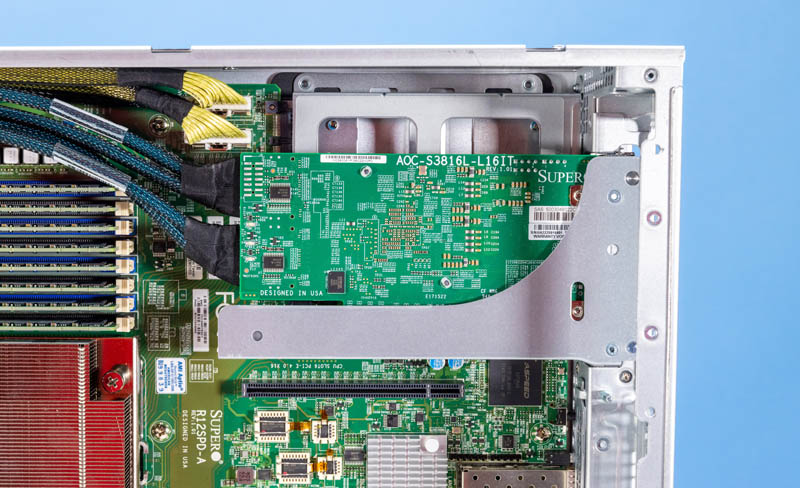

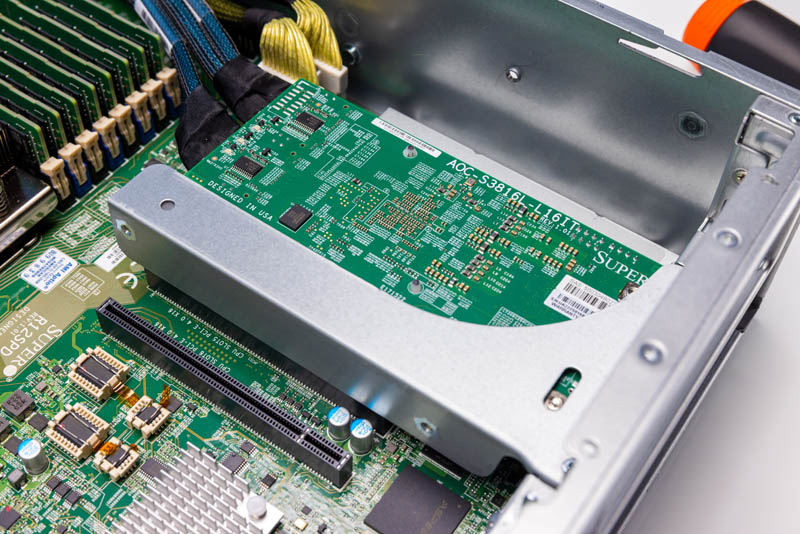

There is a low-profile PCIe Gen4 x16 slot. In that slot we have a Supermicro AOC-S3816L-L16IT, which is an IT mode HBA based on the Broadcom SAS3816 tri-mode controller.

If one were not using more than a few drives in the front, one could instead use this for additional networking or another PCIe accelerator. This riser is also toolless.

Below that riser, we have an OCP NIC 3.0 slot.

Next, we are going to get to the topology section of this review because it is very different than others that you may have seen.

STH review of NVIDIA A16 when? I loved your old GRID M40’s https://www.servethehome.com/nvidia-grid-m40-4x-maxwell-gpus-16gb-ram-cards/

But… can it run 16 copies of Crysis at 1080?

Title is kind of confusing as there are not 16x GPUs in the system reviewed.

The A16 is a card that contains 4xA2s each with 16GB of dedicated ram. With 4xA16 you would have 16x A2s.

We ran the Bombsquad stress test on this system, which handled up to 128 instances (concurrent users) at 1080P@60fps.

When running Genshin Impact at high quality, this system can support 48 instances, all running at 1080P@60fps.

If this has 16x GPUs, then the First Gen EPYC dual-socket configuration had 8-CPUs.

It’s how Nvidia wants to market it but it’s not how we’ve discussed these sorts of products in the past. If one of the GPUs goes bad, how many do you need to replace? 1? Nope, you need to replace 4.

DDR5 almost had this problem but we’ve all settled on calling the current systems by the number of DIMMs instead of sub-channels.