Some days we get to review machines that are simply fun, and this is one of those. The Supermicro ARS-210M-NR is the company’s 2U MegaDC server designed for scale-out deployments. It uses Supermicro’s Mt. Hamilton motherboard and Ampere Altra / Altra Max series processors. We previously reviewed the Supermicro ARS-210ME-FNR 2U Edge Ampere Altra Max Arm Server, which had more of an edge computing focus. This unit is a 2U server configured for dense cloud gaming with 16x GPUs via 4x NVIDIA A16 cards. With that, let us get to the system.

Supermicro ARS-210M-NR External Hardware Overview

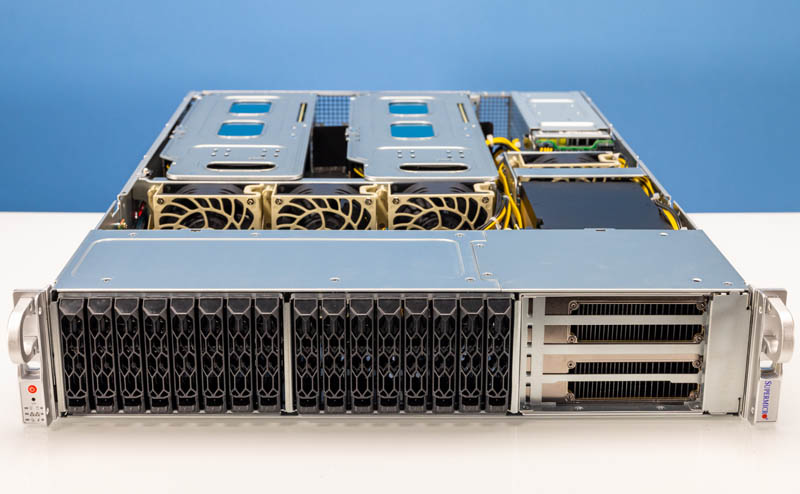

The front of the system tells us right away that this is something different: it has both storage and GPUs present.

On the left side, we have a mix of NVMe as well as SATA/ SAS storage, but this is configurable. The 2.5″ drive trays are Supermicro’s toolless design. Also, we will note that Supermicro has versions of this server without the front GPUs and more storage bays instead.

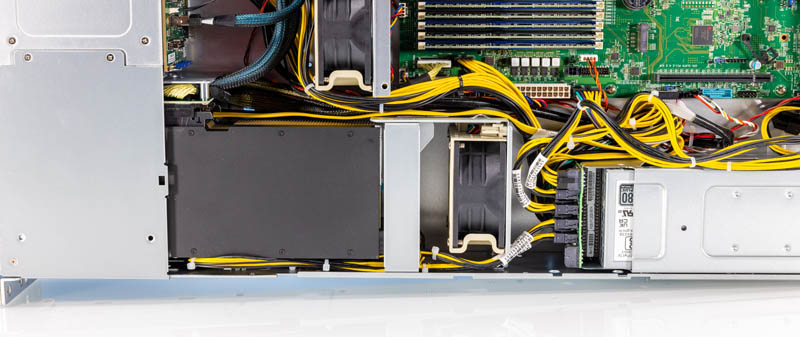

Since the internal overview is extensive, we are going to show a bit of the inside, such as the backplanes, in our external overview. Here we can see that four of the bays are wired for NVMe, while the rest lead to a Broadcom SAS/SATA controller that we will see later in this review.

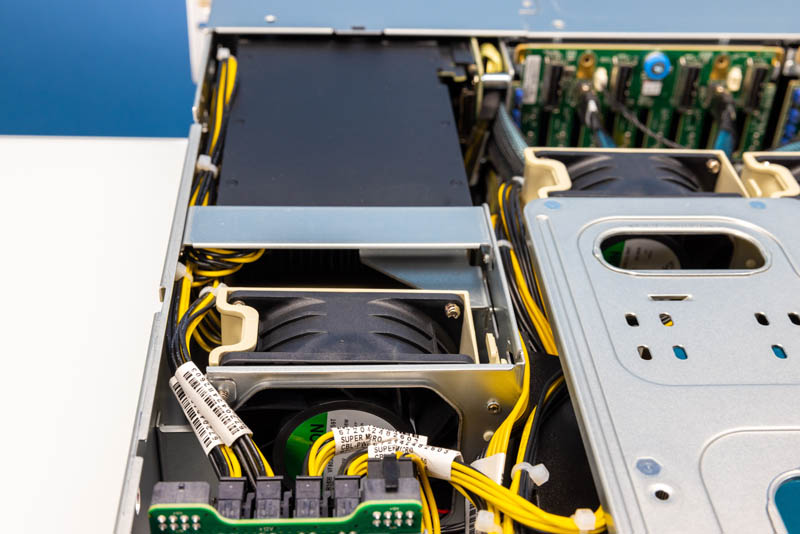

The right side of the chassis has two double-width GPU slots. There is also an option, which we will show, to run all four of the system's double-width slots as single-width slots instead, but this server has a unique configuration.

The two front GPUs are cooled by a giant fan.

Here is another look at the GPUs and the fan behind them from a different angle.

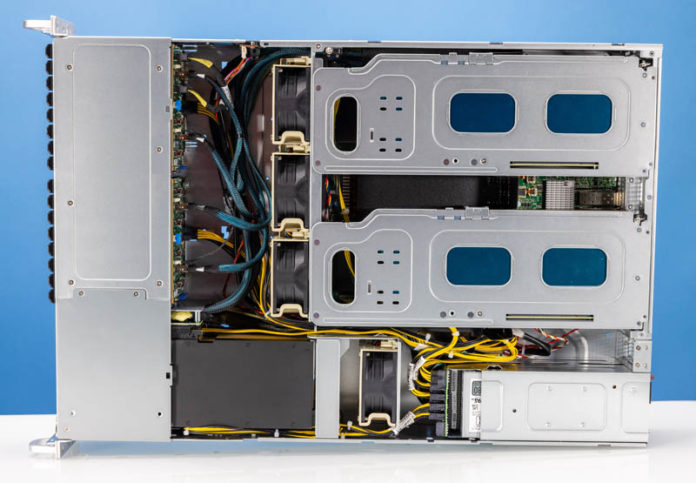

The rear of the system is also very busy with power supplies, GPU risers, and rear I/O.

The power supplies are 2kW 80Plus Titanium redundant units. In this server, these are needed.

The rear I/O includes two USB 3.0 ports, a VGA port, an out-of-band management port, and two SFP28 ports for 25GbE networking.

Above the rear I/O and also to the right side are two GPU risers. Again, we have double-width NVIDIA A16 GPUs installed, but we will show how these work with single-width cards in our internal overview.

Below the right GPU riser, we have a PCIe Gen4 x16 low-profile riser slot and an OCP NIC 3.0 slot that also gets x16 lanes.

Next, let us get inside the system to see how it is configured.

STH review of NVIDIA A16 when? I loved your old GRID M40’s https://www.servethehome.com/nvidia-grid-m40-4x-maxwell-gpus-16gb-ram-cards/

But… can it run 16 copies of Crysis at 1080?

Title is kind of confusing as there are not 16x GPUs in the system reviewed.

The A16 is a card that contains 4x A2s, each with 16GB of dedicated RAM. With 4x A16 you would have 16x A2s.

We ran Bombsquad-stress on this system, which supports up to 128 instances (concurrent users) at 1080p@60fps.

When running Genshin Impact at high quality, this system can support 48 instances, all running at 1080p@60fps.
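To put the instance counts quoted above in per-GPU terms, here is a rough back-of-the-envelope sketch. It uses only the figures from the comments (4x A16 cards, 4 GPUs per card, 16GB per GPU, 128 and 48 instances) and assumes, purely for illustration, that instances are spread evenly across all 16 GPUs. The names `per_gpu_density` and `mem_per_instance_gb` are hypothetical helpers, not part of any NVIDIA or Supermicro tool.

```python
# Figures quoted in the comments above; nothing here is measured by this script.
CARDS = 4              # NVIDIA A16 cards in the system
GPUS_PER_CARD = 4      # GPUs on each A16 card
MEM_PER_GPU_GB = 16    # dedicated memory per GPU, as stated in the comments

REPORTED_INSTANCES = {
    "Bombsquad-stress": 128,
    "Genshin Impact (high quality)": 48,
}

TOTAL_GPUS = CARDS * GPUS_PER_CARD


def per_gpu_density(instances: int, total_gpus: int = TOTAL_GPUS) -> float:
    """Instances per GPU, ASSUMING an even spread across all GPUs."""
    return instances / total_gpus


def mem_per_instance_gb(instances: int, total_gpus: int = TOTAL_GPUS) -> float:
    """Upper bound on GPU memory per instance under the same even-spread assumption."""
    return MEM_PER_GPU_GB / per_gpu_density(instances, total_gpus)


if __name__ == "__main__":
    print(f"Total GPUs: {TOTAL_GPUS}")
    for name, count in REPORTED_INSTANCES.items():
        print(
            f"{name}: {per_gpu_density(count):.1f} instances per GPU, "
            f"at most {mem_per_instance_gb(count):.2f} GB of GPU memory each"
        )
```

Under that even-spread assumption, the reported numbers work out to 8 instances per GPU for Bombsquad-stress and 3 per GPU for Genshin Impact. Real schedulers may place instances unevenly, so treat these as averages rather than guarantees.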

If this has 16x GPUs, then the First Gen EPYC dual-socket configuration had 8-CPUs.

It’s how Nvidia wants to market it but it’s not how we’ve discussed these sorts of products in the past. If one of the GPUs goes bad, how many do you need to replace? 1? Nope, you need to replace 4.

DDR5 almost had this problem, but we’ve all settled on calling the current systems by the number of DIMMs instead of sub-channels.