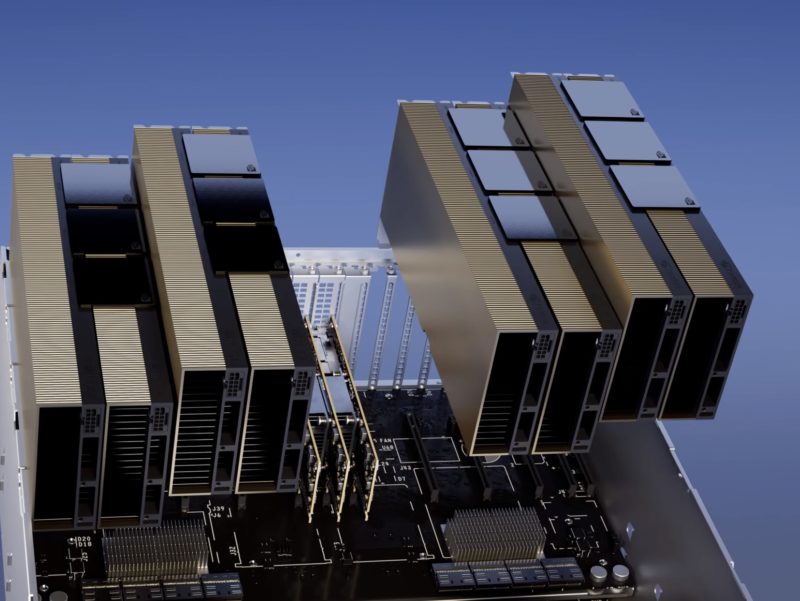

When the NVIDIA H100 NVL 188GB solution first launched, it was shown as a two-card solution with 188GB of HBM3 memory in total. At the time, we noted this was essentially two PCIe cards linked over an NVLink bridge. Then, the NVIDIA H200 NVL 4-Way solution was shown at OCP Summit 2024. Our coverage has always shown the NVL cards installed with NVLink bridges. A reasonable question is whether the cards can function alone, so we tested it.

Can You Run the 94GB NVIDIA H100 NVL PCIe as a Single GPU?

The original NVIDIA H100 PCIe option was the 80GB HBM2e card. This card has the NVLink bridge connectors on it.

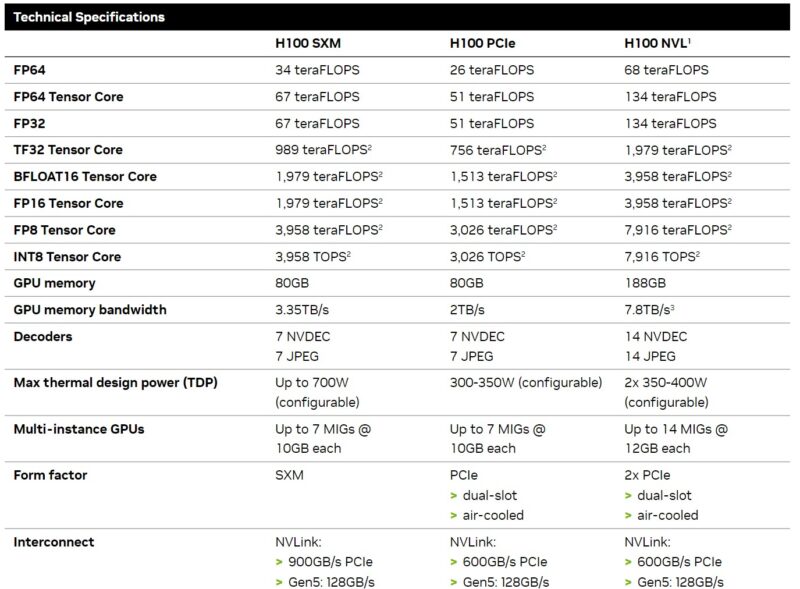

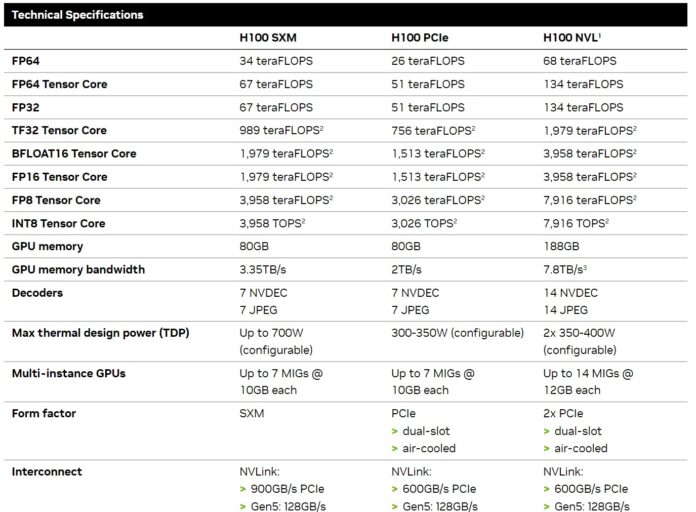

As NVIDIA rolled out the H200, the PCIe card was revised to become the NVIDIA H100 NVL with 94GB of HBM3 memory. That is significant because the SXM version of the H100 is still at only 80GB of memory. Still, that H100 NVL solution was almost always marketed with at least two GPUs. Here is the H100 spec table from when we did the L40S and H100 piece.

You will notice that the H100 NVL is presented as two cards here, and it is almost always shown as two cards with the NVLink bridges.

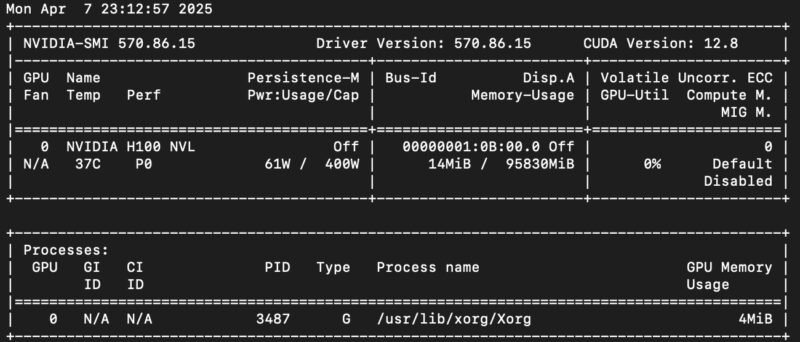

Still, it felt like a single card should work if you wanted just one GPU, perhaps for a 1U/2U server that can fit one dual-slot PCIe card but not two with bridges. Recently we tried it out, and indeed, here is the nvidia-smi output:

That is exactly what we would expect. For folks buying servers with a GPU, the H100 NVL version is a significant upgrade on the memory side from the H100 non-NVL. In some cases, such as raw memory bandwidth or MIG instances, the NVL version can actually be better than the SXM version as well, and at a lower power level.
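For readers who want to run the same check on their own system, here is a minimal Python sketch that calls `nvidia-smi` with its `--query-gpu` CSV options and lists each GPU's name and total memory. This is not how we produced the output above. The function names `parse_gpu_csv`, `query_gpus`, and `print_gpu_summary` are our own illustrative choices, and the sketch assumes `nvidia-smi` is on the PATH and reports numeric values for every field.

```python
import csv
import io
import subprocess

# Fields requested from nvidia-smi; memory.total is reported in MiB.
QUERY_FIELDS = ["index", "name", "memory.total"]


def parse_gpu_csv(text):
    """Parse output of nvidia-smi --query-gpu=... --format=csv,noheader,nounits."""
    gpus = []
    for row in csv.reader(io.StringIO(text), skipinitialspace=True):
        if len(row) != len(QUERY_FIELDS):
            continue  # skip blank or unexpected lines
        index, name, mem_mib = (field.strip() for field in row)
        gpus.append({"index": int(index), "name": name, "memory_mib": int(mem_mib)})
    return gpus


def query_gpus():
    """Run nvidia-smi and return one dict per GPU (assumes nvidia-smi is installed)."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)


def print_gpu_summary():
    """Print one line per GPU found in the system."""
    for gpu in query_gpus():
        print(f"GPU {gpu['index']}: {gpu['name']}, {gpu['memory_mib']} MiB")
```

Calling `print_gpu_summary()` on a system with one card installed should print a single line. The exact name string and MiB figure depend on the driver, so we do not show an expected output here.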

Final Words

At the NVIDIA GTC 2025 Keynote (Ryan Smith covered for STH), Jensen Huang, NVIDIA’s CEO, said that the Hopper generation was not worth buying anymore. What that might mean is the H100 generation of GPUs will become more available on the secondary market. This is especially so given that the H200 NVL refresh came out with 141GB of memory, making this an older generation part. For those looking for a single PCIe GPU to use in a system, this might actually be a neat option either today or in the future as Blackwell continues to roll out.

This feels almost silly to publish, but there are so many online resources showing these only being installed in pairs that we thought we should quickly set the record straight.

I have a single 94GB H100 running, no issues. For FEM workloads it really needs PCIe 5; for AI work, PCIe 3 is fine.