The ASUS ESC8000A-E13P brings a thoughtful 8-GPU AI server design into the modern generation. With two AMD EPYC 9004/9005 processors and plenty of room for high-speed networking, this is a neat server. Of course, we need to get into it, as the theme this year at the high end is multi-GPU systems.

Regular STH readers may remember that we reviewed the ASUS ESC8000A-E11 two generations ago. When we had a few folks visit Taipei earlier this year, we managed to grab a system and get it online to use. Here is the quick 1-minute overview:

Now, it is time to get to the hardware.

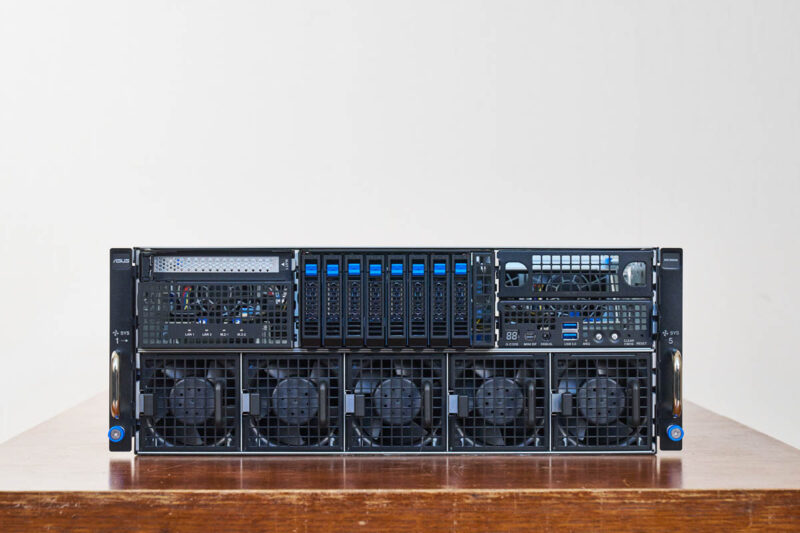

ASUS ESC8000A-E13P External Hardware Overview (Front)

The ESC8000A-E13P is a 4U NVIDIA MGX design. It is 800mm or 31.5″ deep, so it will fit in most racks, especially those with the power capacity for a system like this.

On the front of the system, we can see one of the biggest design elements. The fans on the bottom provide airflow to the bottom section with the CPU, NICs, and memory, while the fans at the top are there to cool the GPUs and storage.

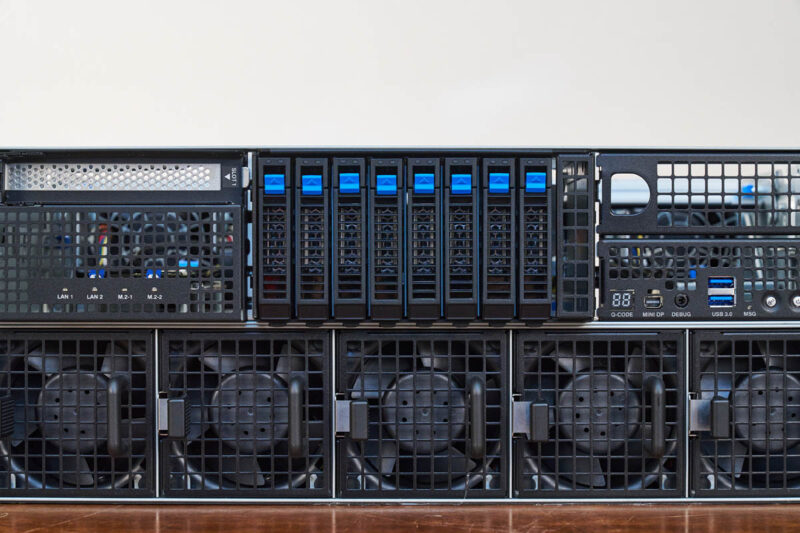

On the front right, we can see the front I/O. Here, that means a mini DisplayPort and two USB ports. There is also a Q CODE display for checking the POST codes. This is for cold aisle service, which is generally preferred with hot GPU systems.

In the center, we get 8x 2.5″ bays for NVMe storage.

These are hot-swap designs as one might expect.

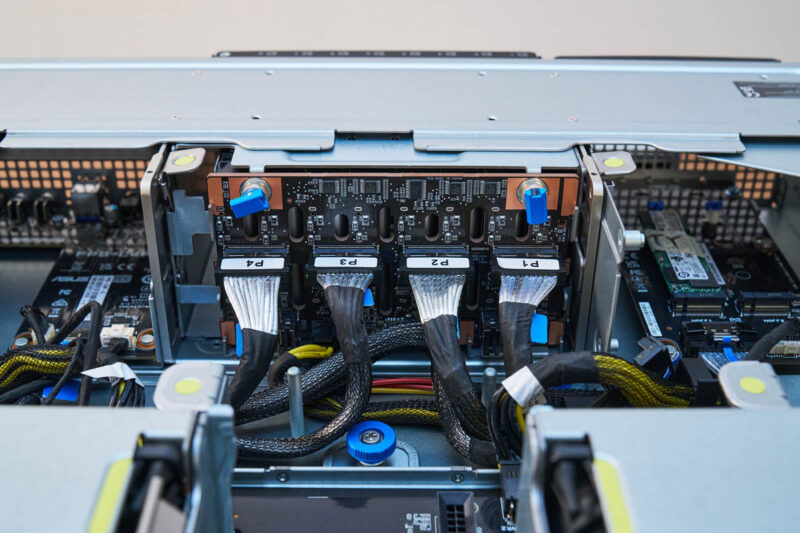

The NVMe backplane is fed by four MCIO x8 connectors. Something else to notice is how the backplane is held in place by tool-less locks with blue tabs.
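As a quick sanity check on the backplane lane math, here is a minimal Python sketch. It takes the four MCIO x8 connectors and eight bays from above, and it assumes the drive links run at PCIe Gen5 (32 GT/s per lane with 128b/130b encoding), which is an assumption for illustration rather than something confirmed for this backplane. The variable and function names are hypothetical.

```python
# Back-of-the-envelope PCIe lane math for the front NVMe backplane.
# From the article: four MCIO x8 connectors feed eight 2.5" NVMe bays.
# Assumption (not confirmed by the article): links run at PCIe Gen5,
# i.e. 32 GT/s per lane with 128b/130b encoding.

MCIO_CONNECTORS = 4
LANES_PER_CONNECTOR = 8
DRIVE_BAYS = 8

GEN5_GT_PER_S = 32.0
ENCODING_EFFICIENCY = 128 / 130


def lane_bandwidth_gb_per_s(gt_per_s=GEN5_GT_PER_S, efficiency=ENCODING_EFFICIENCY):
    """Raw per-lane bandwidth in GB/s, one direction, before protocol overhead."""
    return gt_per_s * efficiency / 8  # 8 bits per byte


total_lanes = MCIO_CONNECTORS * LANES_PER_CONNECTOR
lanes_per_drive = total_lanes // DRIVE_BAYS
per_drive_gb_per_s = lanes_per_drive * lane_bandwidth_gb_per_s()

print(f"Total lanes to backplane: {total_lanes}")
print(f"Lanes per drive bay: {lanes_per_drive}")
print(f"Approx. raw bandwidth per drive: {per_drive_gb_per_s:.2f} GB/s per direction")
```

Under those assumptions, the 32 lanes work out to a x4 link per bay, which is the usual width for a 2.5″ NVMe SSD. Real-world throughput will be lower than the raw figure once protocol overhead and the drives themselves are factored in.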

On the left side, we get status LEDs hiding something neat behind them, as well as an expansion slot.

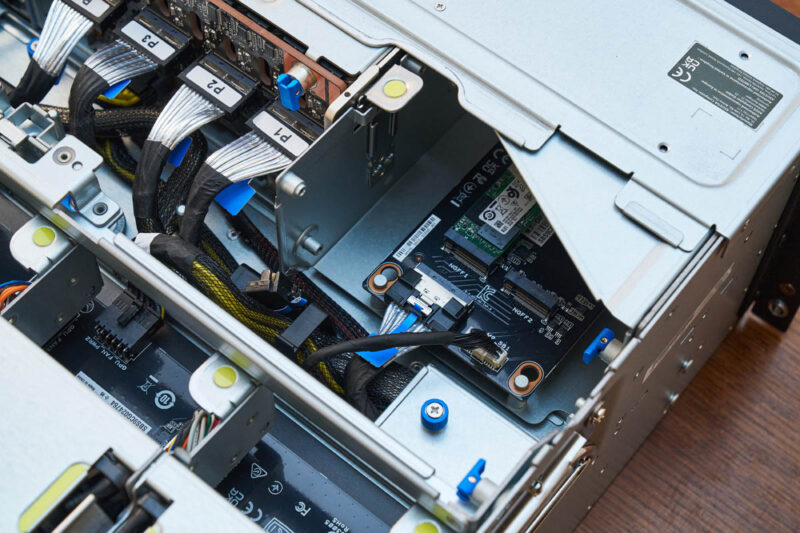

The expansion slot is a PCIe Gen5 x8 design that you can remove with a few tabs. Here I am removing the slot for service.

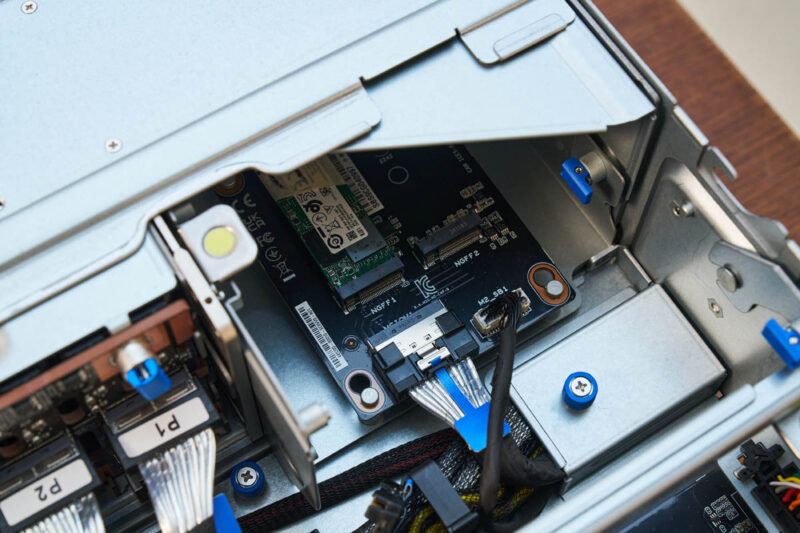

Under the slot, and behind the status LEDs, is a neat little board. This board has two M.2 slots that can accommodate up to M.2 22110 (110mm) NVMe SSDs for boot. This makes the slots easier to service than if they were on the motherboard.

We only have one drive installed, but you can see how this works.

On the bottom of the front, we get the fans that keep the motherboard section cool.

These fans are all hot swappable at the front of the chassis.

Next, let us get to the rear of the system.

I like that this one’s got the PCIe switches better because it’s got more slots for NICs. I’m not totally behind just having 32 Gen 5.0 lanes back to the CPU.

There is a flaw in the block diagram: the DIMMs run from the PCIe switches instead of the CPUs.

I assume this is not a CXL solution (12 DIMMs through 32 lanes PCIe Gen5) ;-)