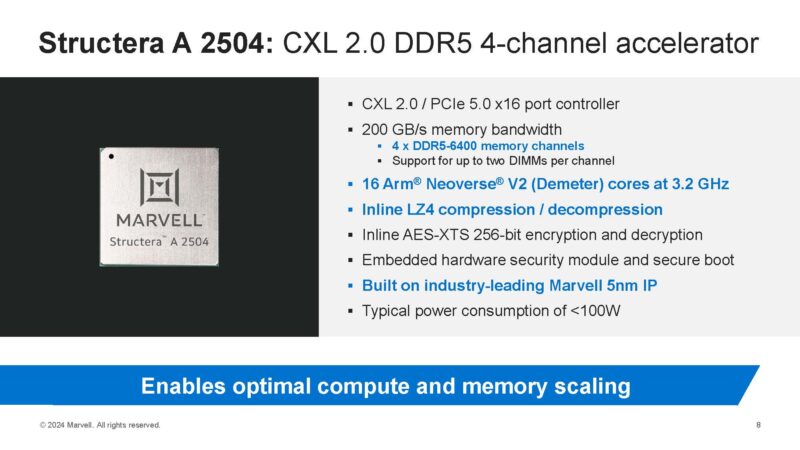

At Marvell Analyst Day 2024, we saw the new Marvell Structera A memory expansion device with 16 Arm compute cores. CXL Type-3 memory expansion is (finally) happening. For hyper-scalers, one major use case is re-purposing DDR4 DIMMs from decommissioned systems and adding them to new systems as memory. Aside from the cost benefits, from a carbon emissions standpoint, the upcycled DDR4 DIMMs do not need to be manufactured again. While Marvell has Structera X for both DDR4 and DDR5 memory expansion, Structera A adds something different. It adds 16 Arm Neoverse V2 cores along with the DDR5 memory to scale compute and memory capacity in a system.

This is Marvell Structera A: The CXL Memory Expander with 16 Arm Cores

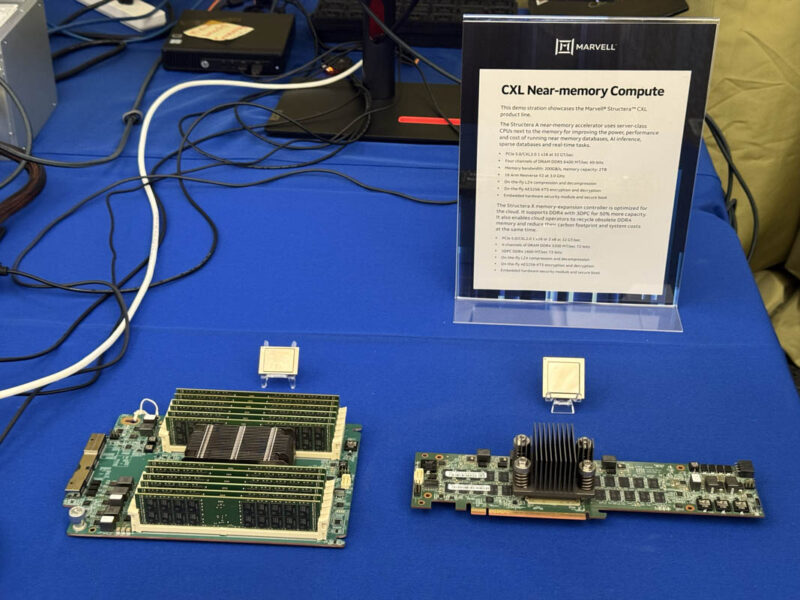

At this table, we saw an Intel Xeon development platform running. Specifically, this appears to be the Intel Xeon 6900P open bench development platform. That system is running a PCIe connection between the main server and a Marvell Structera CXL Type-3 memory expansion device. This demo showed many things we normally see, like latency and the additional CXL memory attached to the system.

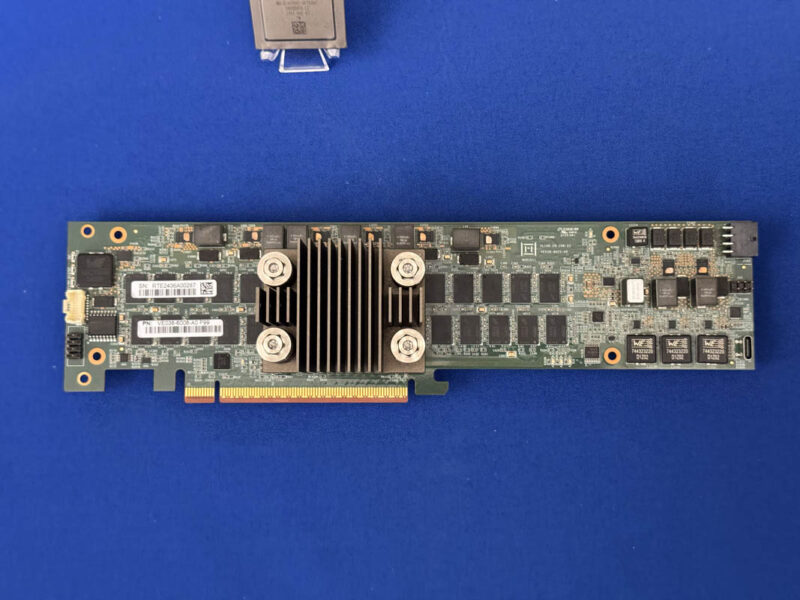

Sitting on the table next to the demo was a “CXL Near-memory compute” display with two chips and two cards.

The left card was the DDR4 variant of the Marvell Structera X. We covered this board previously in Marvell Structera X CXL Expansion Displayed at FMS 2024.

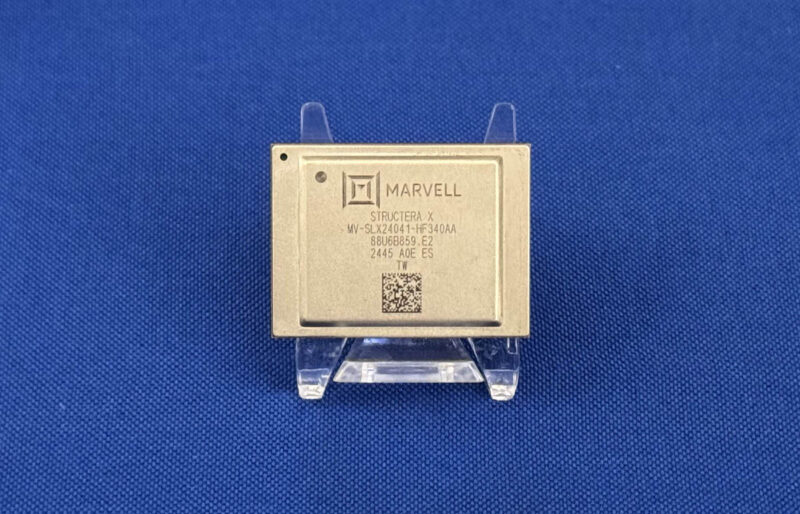

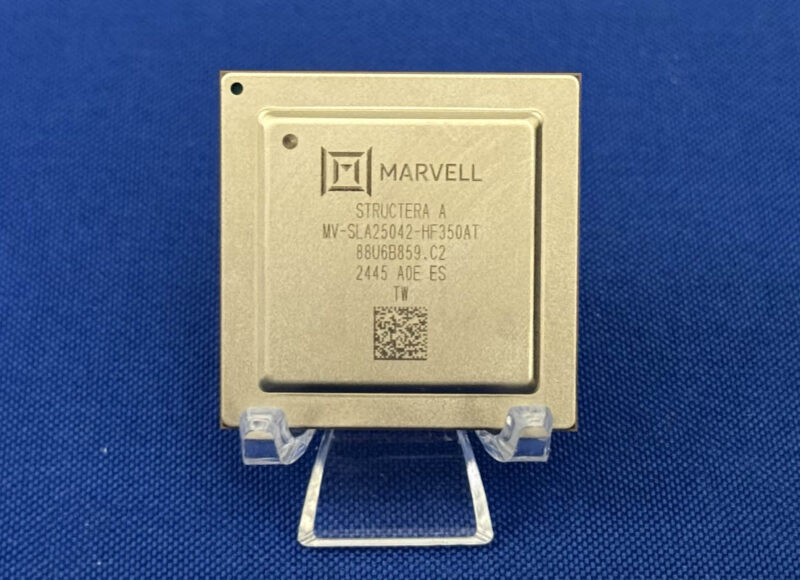

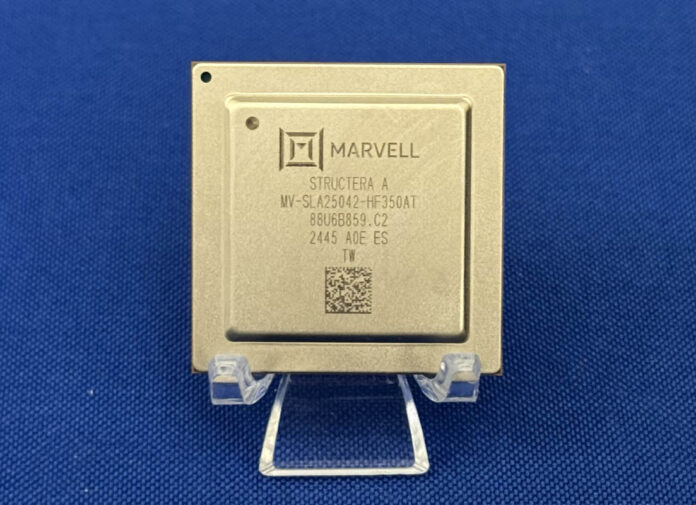

Sitting next to it was something perhaps more exciting. The more square chip is the Marvell Structera A, and this is the first time we have seen this chip.

The Structera A is a CXL controller with 16 Arm Neoverse V2 Demeter cores with features like LZ4 compression/decompression and encryption/decryption. This is a 5nm chip that also has four DDR5-6400 memory channels to fill a x16 link back to the main CPU or a switch.
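To put the four DDR5-6400 channels and the x16 link in perspective, here is a rough back-of-the-envelope sketch of the theoretical peak numbers. It assumes a 64-bit data path per DDR5 channel and a PCIe Gen5 signaling rate of 32 GT/s per lane with 128b/130b encoding. The function names are ours for illustration, and CXL protocol overhead is ignored, so real-world figures will be lower.

```python
# Back-of-the-envelope peak bandwidth. These are theoretical maxima
# under the stated assumptions, not measured results.

def ddr5_channel_gbps(mega_transfers_per_s: int, bus_bytes: int = 8) -> float:
    """Peak GB/s for one DDR5 channel (64-bit data width assumed)."""
    return mega_transfers_per_s * bus_bytes / 1000


def pcie_link_gbps(gt_per_s: float, lanes: int, encoding: float = 128 / 130) -> float:
    """Peak GB/s per direction for a PCIe link, before protocol overhead."""
    return gt_per_s * lanes * encoding / 8


# Four DDR5-6400 channels behind the controller.
dram = 4 * ddr5_channel_gbps(6400)

# x16 link, assumed to run at PCIe Gen5 rates (32 GT/s per lane).
link = pcie_link_gbps(32, 16)

print(f"DRAM: {dram:.1f} GB/s, link: {link:.1f} GB/s per direction")
```

Under these assumptions the DRAM side has considerably more peak bandwidth than the link in either direction, which is consistent with the idea that four channels are enough to keep a x16 link full and that on-card compute can use bandwidth the host link cannot.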

Marvell had both the chip, as well as a board with the Structera A chip and memory attached. Here we can see the chip under a heatsink, then ten memory packages on each side of the chip.

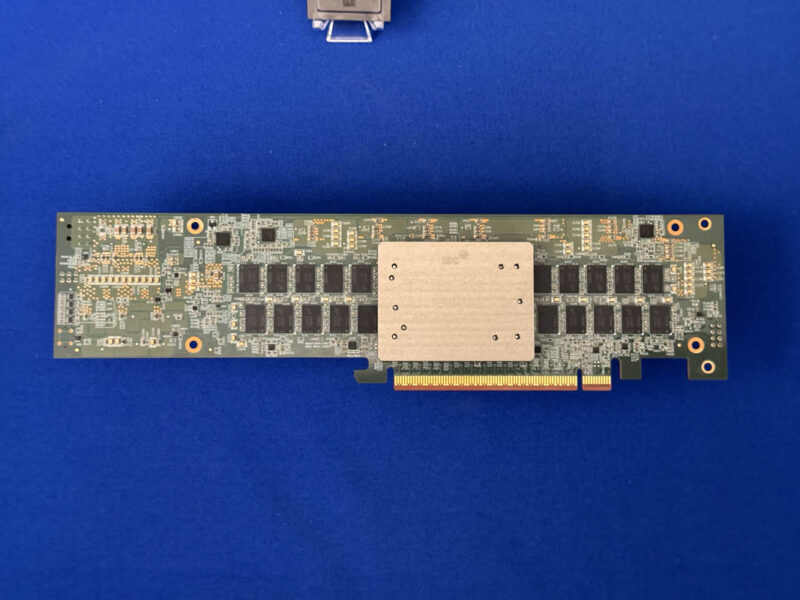

Flipping the card around, we see another ten DRAM packages.

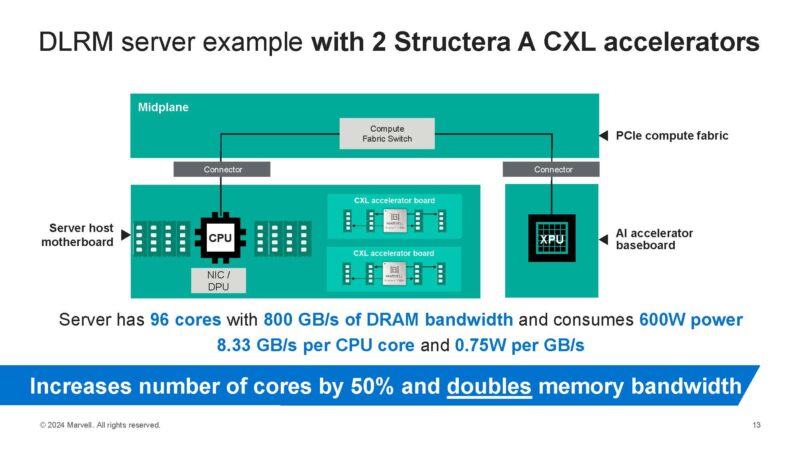

This is fascinating because it allows a server to scale memory along with compute. If you had a 64-core Arm Neoverse V2-based CPU design, for example, the server should see an additional set of memory in a NUMA node with 16 Arm cores. This is adding both cores and memory at the same time in a server.
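For readers who want to check how extra memory shows up on a Linux host, here is a minimal sketch that lists NUMA nodes from sysfs and reports how many CPUs and how much memory each one has. CXL Type-3 memory expanders typically appear as CPU-less NUMA nodes on Linux. How the Structera A cores are presented to the host is not something Marvell detailed at the event, so this only shows the generic way to inspect nodes. The helper names are ours.

```python
from pathlib import Path
import re


def parse_cpulist(text: str) -> list[int]:
    """Expand a sysfs cpulist string such as '0-3,8' into [0, 1, 2, 3, 8]."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty cpulist means a CPU-less node
        lo, _, hi = part.partition("-")
        cpus.extend(range(int(lo), int(hi or lo) + 1))
    return cpus


def parse_mem_total_kb(meminfo: str) -> int:
    """Pull MemTotal (in kB) out of a per-node meminfo file."""
    match = re.search(r"MemTotal:\s+(\d+)\s+kB", meminfo)
    return int(match.group(1)) if match else 0


def read_text_or_empty(path: Path) -> str:
    """Read a sysfs file, returning '' if it is missing or unreadable."""
    try:
        return path.read_text()
    except OSError:
        return ""


def numa_nodes(root: str = "/sys/devices/system/node") -> dict[int, dict]:
    """Map NUMA node id to its CPU list and total memory in kB."""
    nodes: dict[int, dict] = {}
    for node_dir in sorted(Path(root).glob("node[0-9]*")):
        nodes[int(node_dir.name[4:])] = {
            "cpus": parse_cpulist(read_text_or_empty(node_dir / "cpulist")),
            "mem_kb": parse_mem_total_kb(read_text_or_empty(node_dir / "meminfo")),
        }
    return nodes


if __name__ == "__main__":
    for node_id, info in numa_nodes().items():
        kind = f"{len(info['cpus'])} CPUs" if info["cpus"] else "CPU-less (memory only)"
        print(f"node{node_id}: {kind}, {info['mem_kb'] / 1024**2:.1f} GiB")
```

On a system without a NUMA sysfs tree (for example, a non-Linux machine), `numa_nodes()` simply returns an empty dictionary.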

Or let us make this even more exciting. This is adding memory and CPU cores into a system through a PCIe Gen5 x16 connector (running CXL) on a single card. Imagine being able to deploy a 64-core general purpose CPU, then adding more cores, memory, and total memory bandwidth to a system through a card.

Final Words

It feels a lot like this is a design for a hyper-scaler since it allows the hyper-scaler to deploy a base CPU design, then add additional compute and memory as needed. Also, the 16 Arm Neoverse V2 cores are Arm’s performance cores. For example, the Arm Neoverse V2 cores launched with NVIDIA Grace. This is not just meant for some low-performance scale-out CPU core application. Arm Neoverse V2 is a P-core design used in some high-performance CPUs.

I already voiced my desire to get ahold of these plus Structera X to show STH. Hopefully in 2025 that will happen. CXL servers and devices, as a whole (speaking beyond Marvell here), still need some time to mature from a software/firmware side. I keep telling companies that CXL must be plug-and-play and must work beyond just qualified system firmware and device firmware combinations. I think this will happen in 2025.

“It feels a lot like this is a design for a hyper-scaler since it allows the hyper-scaler to deploy a base CPU design, then add additional compute and memory as needed.”

Presumably you wouldn’t even need to do this; you could start with a self-hosted NIC like the BlueField-3 looked at a few weeks ago, and then add the compute / memory via CXL.

Oarman – That would be true, but NVIDIA does not support CXL even on its Grace Superchip at this point.

The big thing is the inline compression and encryption of the memory. Say you put 512 GB of DDR4 on it and you could leverage it as 1 TB on a new system supporting CXL. Not a bad use-case for the older DDR4 if you invested heavily into higher capacity modules. Similarly this would be good if Optane DIMMs could be leveraged with these CXL carriers.

While I get the desire to leverage standard PCIe x16 slots for these cards, I think it is time to explore MCIO connectors more for these types of devices. Being able to put 8 slots on a single card does not fit cleanly into any normal form factor.

CXL memory will go the same way as Optane: a lot of hype and hope, only to realize the real-world gain is not there, no one wants to deal with two-tier RAM, and failing DDR4 impacts stability. All at a time when CPUs are mostly bottlenecked by memory throughput, let’s use low throughput memory with worse reliability, what could go wrong.

Looks great in tests and PowerPoint, will be a sunk investment.

@Name

Optane never got to flex its full potential due to the fundamental software changes necessary. It had the opportunity to merge the ideas of separate working execution memory and long-term storage memory. Today, to do any work on a system, data first has to be copied from storage into memory. Optane would permit the removal of that step. Loading files for a program would simply be remapping address spaces from a file system to application memory. Instead, we just got long-term storage on the memory bus or slower/higher capacity working memory space out of Optane, which is fine for an initial launch but far short of its potential as a technology.

I do not think CXL is going to go away, and it wouldn’t surprise me if some company chooses to leverage only CXL memory as its main source of bulk memory (i.e., to supplement on-package HBM etc.) as a means of simplifying socket layouts. While not CXL, IBM is currently doing something similar with their POWER10 and soon POWER11 chips. 12 channels of DDR5 per socket is difficult and expensive to pull off; CXL would reduce the pin count per socket and provide more flexible IO simultaneously. Yes, there is a performance hit vs. a native wider solution, but this is a fair trade-off when looking at memory capacity and system scalability.