At OCP Summit 2024, we wanted to take a look at a few ASUS servers. While there were many on the show floor, we wanted to cover both what was there and what was not. Let me explain. We heard that a server or two did not make it to the AMD Advancing AI event for the AMD EPYC 9005 “Turin” launch. So we took a field trip from OCP Summit 2024 to ASUS headquarters to see three machines, including one with a unique CXL memory subsystem.

ASUS EPYC CXL Memory Enabled Server and More OCP Summit 2024

We found one system that left us awestruck, and ASUS also asked us to cover a few more AMD systems for the EPYC 9005 “Turin” launch.

If you just want the quick 1-minute overview, we have a short.

ASUS RS520QA-E13-RS8U Awestruck

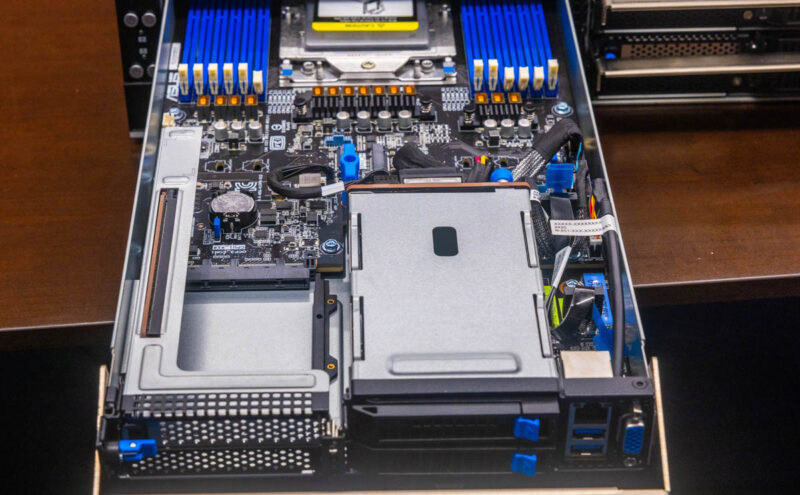

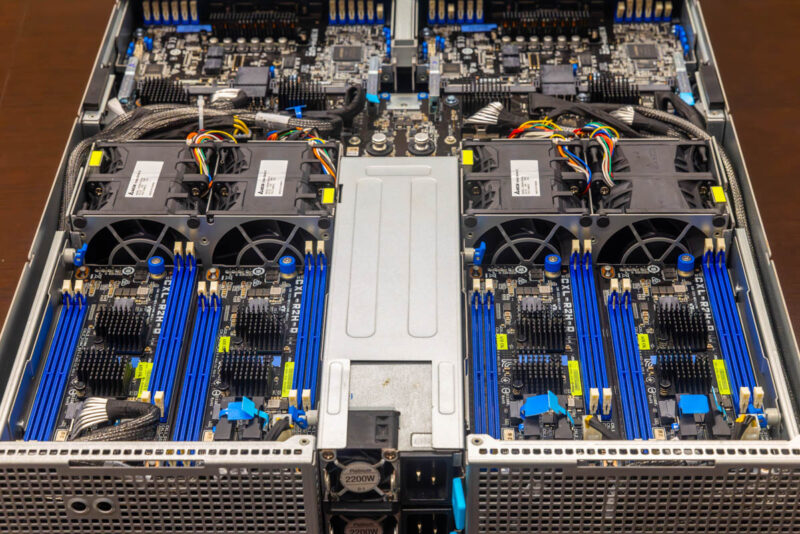

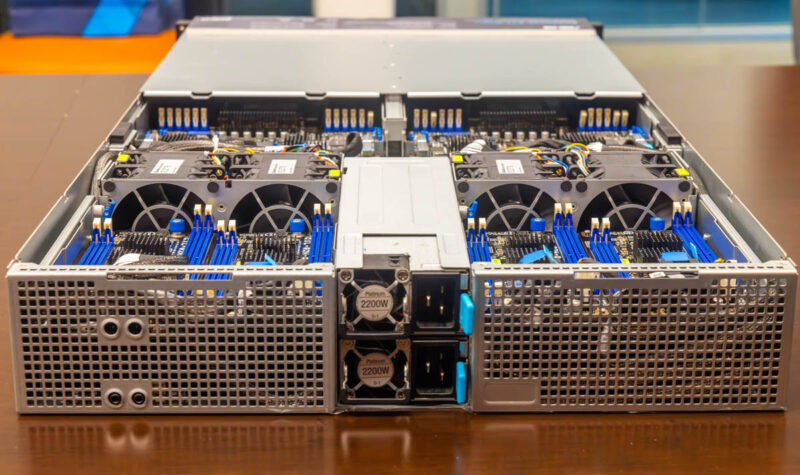

This is a 2U 4-node server, but with a twist.

Each node has front storage and I/O, a trend we have seen on many recent 2U 4-node designs because the hot aisle behind these systems is usually very warm.

The 2.5″ bays take up a lot of the front panel, but there is still a low-profile PCIe Gen5 expansion slot and an OCP NIC 3.0 slot.

Behind that, we have two M.2 slots.

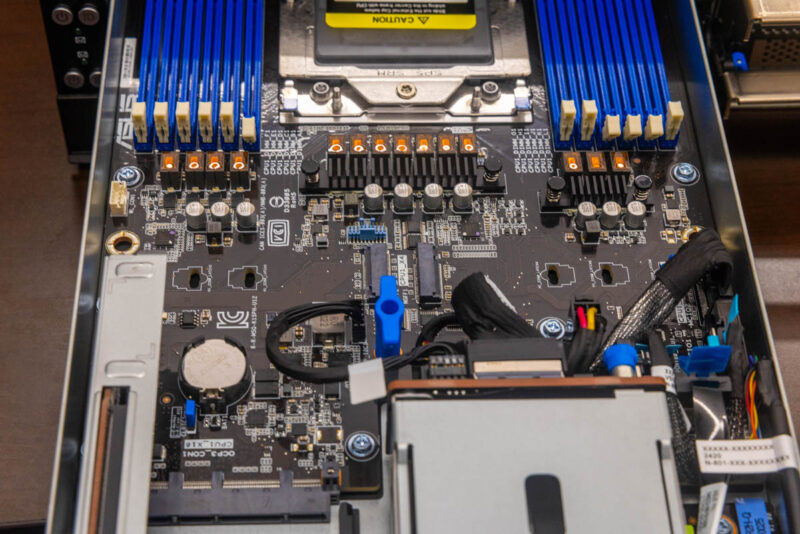

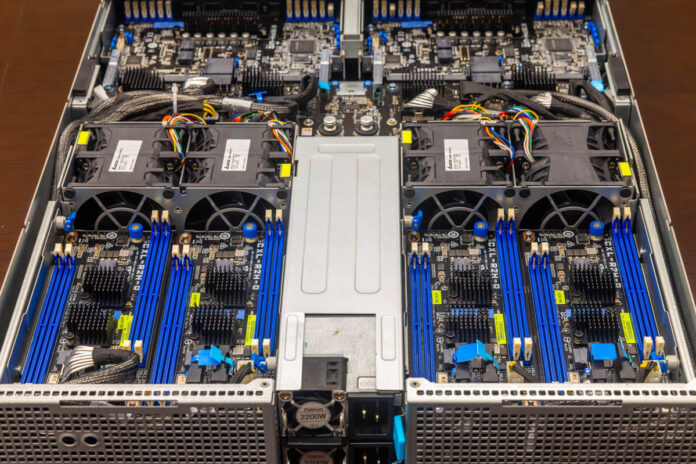

The first thing that makes this platform unique is that each node is a single-socket design with 12 DDR5 DIMM slots. Each node takes a single AMD EPYC 9005 “Turin” processor with up to a 400W TDP.
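To put the single-socket, 4-node layout in chassis terms, here is a small back-of-the-envelope sketch. It uses only the figures above (4 nodes, 1 socket per node, up to 400W TDP per CPU); the function name is hypothetical, and the result covers CPU TDP only, not DIMMs, CXL modules, drives, NICs, or fans.

```python
# Back-of-the-envelope CPU totals for a 2U 4-node chassis.
# Inputs come from the text: 4 nodes, 1 socket per node, up to 400 W TDP per CPU.
NODES_PER_CHASSIS = 4
SOCKETS_PER_NODE = 1
MAX_CPU_TDP_W = 400


def chassis_cpu_budget(nodes: int, sockets_per_node: int, tdp_w: int) -> tuple[int, int]:
    """Return (total sockets, aggregate CPU TDP ceiling in watts).

    Hypothetical helper: counts CPU TDP only, ignoring memory, CXL, storage, and fans.
    """
    sockets = nodes * sockets_per_node
    return sockets, sockets * tdp_w


sockets, cpu_tdp_w = chassis_cpu_budget(NODES_PER_CHASSIS, SOCKETS_PER_NODE, MAX_CPU_TDP_W)
print(f"{sockets} sockets, up to {cpu_tdp_w} W of CPU TDP in 2U")
# prints "4 sockets, up to 1600 W of CPU TDP in 2U"
```

In other words, even before counting memory and CXL expansion, the CPUs alone can account for up to 1.6kW in 2U.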

We have seen 2U 4-node single-socket systems before, but behind the nodes, something different is going on with the rear connectivity.

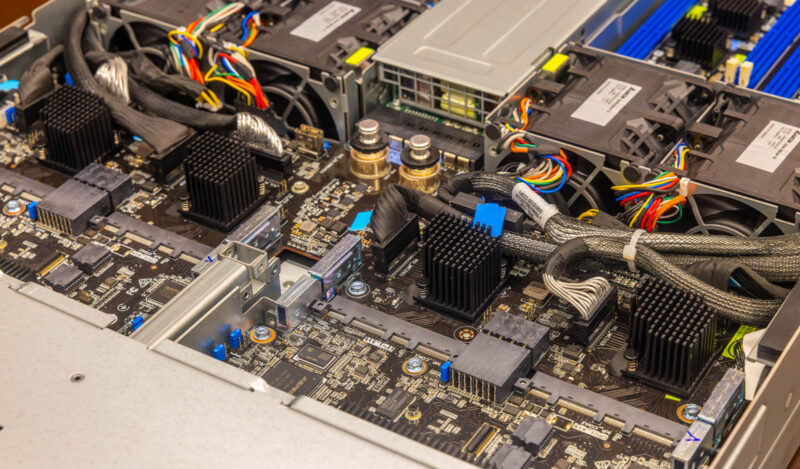

Behind the fan partition, there are extra CXL memory expansion modules. These look like Montage CXL Type-3 controllers, and each CXL controller feeds two DIMM slots.

That gives us up to 20 (12 CPU + 8 CXL) DDR5 DIMM slots per node.
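The slot math can be sketched as a tiny model. One assumption is inferred rather than stated: 8 CXL DIMM slots at two per controller implies four CXL controllers per node. The `NodeMemory` class is a hypothetical illustration that only counts slots; it makes no claim about how the CXL memory is connected, shared, or allocated between nodes, which the text does not cover.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeMemory:
    """Hypothetical per-node DIMM slot model (slot counts only, no topology)."""
    cpu_dimm_slots: int            # DDR5 DIMMs attached directly to the EPYC CPU
    cxl_controllers: int           # CXL Type-3 expansion controllers (inferred count)
    dimms_per_cxl_controller: int  # DIMM slots behind each CXL controller

    @property
    def cxl_dimm_slots(self) -> int:
        return self.cxl_controllers * self.dimms_per_cxl_controller

    @property
    def total_dimm_slots(self) -> int:
        return self.cpu_dimm_slots + self.cxl_dimm_slots


# Assumption: 8 CXL slots / 2 per controller = 4 controllers per node.
node = NodeMemory(cpu_dimm_slots=12, cxl_controllers=4, dimms_per_cxl_controller=2)
NODES_PER_CHASSIS = 4

print(node.cxl_dimm_slots)                        # 8
print(node.total_dimm_slots)                      # 20
print(node.total_dimm_slots * NODES_PER_CHASSIS)  # 80
print(f"{node.cxl_dimm_slots / node.total_dimm_slots:.0%} of slots sit behind CXL")  # 40%
```

Across the four nodes, that works out to 80 DDR5 DIMM slots in a 2U chassis, with 40% of them reached through CXL rather than directly from the CPU.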

The rear has two power supplies. Simply put, this is an awesome design, and it is one of the big reasons we made the trip to ASUS in California during OCP Summit 2024.

Next, let us get to some GPU servers.

On the CXL system, is there any sort of inter-node connection to allow flexible allocation of the additional RAM, or is it purely a measure to allow more DIMMs than the width limits of a 2U4N and the trace-length limits of the primary memory bus would ordinarily permit?

I’d assume that the latter is vastly simpler and more widely compatible, but a lot of the CXL announcements one sees emphasize the potential of some degree of disaggregation or flexible allocation of RAM to nodes. It is presumably easier to get four of your own motherboards talking to one another than it is to be ready for some sort of SAN-for-RAM style setup with mature multi-vendor interoperability and established standards for administration.

It is not obvious where the other four GPUs fit into the ASUS ESC8000A E13P. Is it a two level system with another four down below, or is ASUS shining us a bit with an eight-slot single slot spacing board and calling that eight GPUs?

Oh, never mind. Trick of perspective: the big black heatsink looks like it would block any more slots.

Page 1 “The 2.5″ bays take up a lot of the rear panel”

I think you mean “The 2.5″ bays take up a lot of the **front** panel”

Hard to imagine telling us any less about the CXL! What’s the topology? Can the CXL RAM be used by any of the 4 nodes, and how do they arbitrate? What’s the bandwidth and latency?