Delta showed off a next-generation switch. While we have seen many 2024-era 51.2T switches, including in the recent 100K GPU xAI Colossus Cluster and the Marvell Teralynx 10 51.2T Switch, in 2025, we will have switches that are twice as fast. This is a 102.4T switch for the 1.6TbE generation.

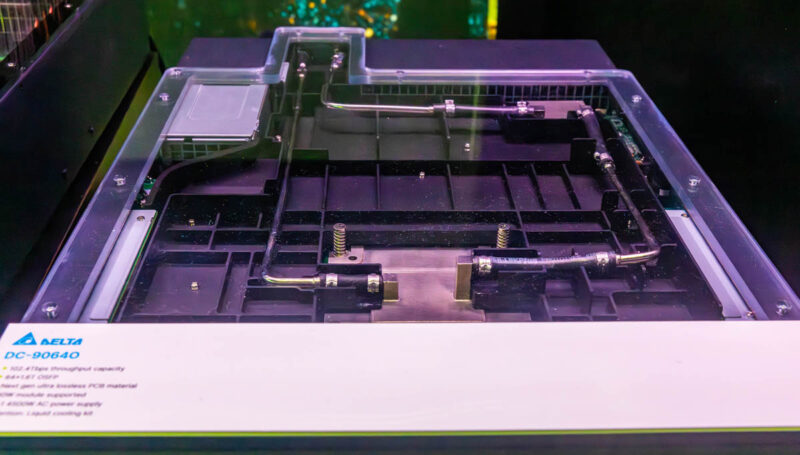

Delta DC-90640 A Next-Gen 2025 102.4T Switch

The Delta DC-90640 had a clear cover at OCP 2024, but underneath it was a massive internal air baffle. We can see this is a liquid-cooled design, but it does not appear to use the ORv3 blind-mate solutions we have seen, so it is likely a standard server version instead of an OCP solution (the power supplies also add to this thought).

On the front, we have 64 ports via 1.6T OSFP cages for 1.6Tbps networking.

Delta also says that this is powered by a pair of 4.5kW power supplies and supports 30W modules (30W x 64 ports = 1920W, if you were wondering). It also says that it is using a “Next-gen ultra lossless PCB material.”

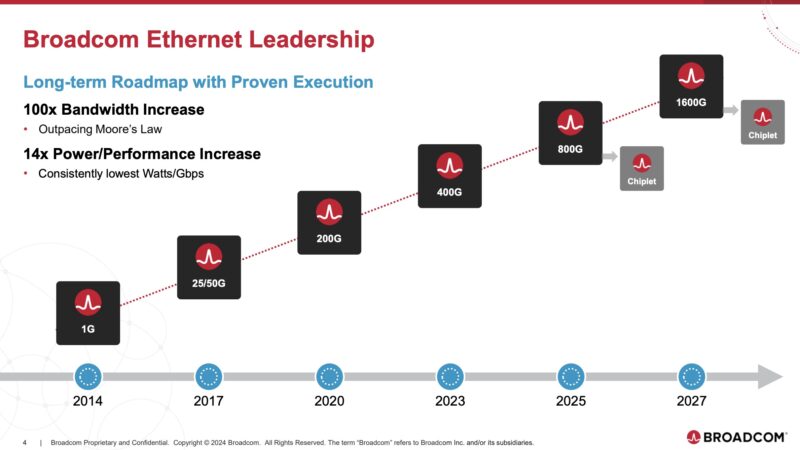

Although Delta did not say which vendor makes the switch chip, Broadcom has previously said that Tomahawk 6 is slated for 2025. Broadcom’s 1.6T adapters are slated for 2027. One key feature of these higher-end switches is the ability to split into 128 ports of half speed and sometimes 256 ports of one-quarter the speed. So even when we get PCIe Gen6 NICs for 800GbE, a 102.4T switch enables 128x 800G connections per switch.
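The breakout arithmetic above can be sketched in a few lines. This is only an illustration of the division involved; the constant and function names are made up for the example, and whether a given switch chip actually supports each breakout mode depends on its SerDes configuration, which Delta did not detail.

```python
# Total switching capacity of a 102.4T switch, expressed in Gbps.
SWITCH_CAPACITY_GBPS = 102_400

# Port speeds discussed in the article: full, half, and quarter speed.
PORT_SPEEDS_GBPS = (1600, 800, 400)


def breakout_ports(capacity_gbps: int, port_speed_gbps: int) -> int:
    """Number of ports of a given speed that fit into the switch capacity."""
    return capacity_gbps // port_speed_gbps


# Map each port speed to the number of logical ports it would yield.
breakouts = {
    speed: breakout_ports(SWITCH_CAPACITY_GBPS, speed)
    for speed in PORT_SPEEDS_GBPS
}

for speed, ports in breakouts.items():
    print(f"{ports} x {speed}G")
```

The same 64 physical cages give 64, 128, or 256 logical ports depending on how each cage is split.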

NVIDIA for its part has its Spectrum X1600 slated for the Rubin era after Blackwell along with its ConnectX-9 SuperNICs.

We are going to have to wait until the PCIe Gen7 era for 1.6Tbps NICs. Putting that into perspective, an early 2021 Intel Cascade Lake Refresh had 48x PCIe Gen3 lanes, which could only push around three ports of 100GbE in total. It would take two and a half of these servers, with all of their PCIe lanes dedicated to 100GbE NICs, to saturate even a single port on the next generation of 2025 switches like this one from Delta.
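For readers who want to check the comparison, here is a rough back-of-the-envelope sketch. The PCIe Gen3 signaling rate (8 GT/s per lane with 128b/130b encoding) is standard, but the rest is assumption: that the 48 lanes are per socket in a dual-socket server, and that each 100GbE port sits on its own x16 slot. It also ignores protocol overhead beyond line encoding.

```python
# PCIe Gen3 signals at 8 GT/s per lane with 128b/130b line encoding.
GEN3_GT_PER_S = 8.0
ENCODING_EFFICIENCY = 128 / 130

LANES_PER_SOCKET = 48     # figure from the article
SOCKETS_PER_SERVER = 2    # assumption: dual-socket server
LANES_PER_NIC = 16        # assumption: one 100GbE port per x16 slot
NIC_PORT_GBPS = 100
SWITCH_PORT_GBPS = 1600   # one 1.6T port on the switch

# Raw usable bandwidth per lane and per socket, in Gbps.
lane_gbps = GEN3_GT_PER_S * ENCODING_EFFICIENCY
socket_pcie_gbps = lane_gbps * LANES_PER_SOCKET

# How many 100GbE ports the lanes can host, and what a server can push.
ports_per_socket = LANES_PER_SOCKET // LANES_PER_NIC
server_nic_gbps = ports_per_socket * SOCKETS_PER_SERVER * NIC_PORT_GBPS

# Servers needed to fill a single 1.6T switch port at NIC line rate.
servers_needed = SWITCH_PORT_GBPS / server_nic_gbps

print(f"Per-lane bandwidth:   {lane_gbps:.2f} Gbps")
print(f"Per-socket PCIe:      {socket_pcie_gbps:.1f} Gbps")
print(f"100GbE ports/socket:  {ports_per_socket}")
print(f"Servers per 1.6T port: {servers_needed:.2f}")
```

Under these assumptions, each socket hosts three 100GbE ports and it takes a bit under three servers to fill one 1.6T port, which is in the same ballpark as the figure quoted above.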

Final Words

Networking, or more specifically the NICs, switches, and software stacks, has come into focus as we have moved into this era of AI buildouts. Creating massive clusters requires many switches, and higher-bandwidth switches can reduce the number of switches and network tiers needed. The other aspect is that the entire network is optimized to minimize tail latencies, as time spent waiting for networking is often time that GPUs and AI accelerators are idle.

With the server CPU market fully adopting chiplets for its designs, it won’t be long before high-speed NICs with photonics are integrated on package for networking. That’d bypass the need for PCIe Gen 7 to reach 1.6 Tbit data rates, as it’ll come directly off the socket. This will also have benefits for things like Ultra Ethernet/RDMA.

Similarly, I’d expect various AI/ML accelerators to follow suit, as they’re also moving to chiplets and the current trend is to have a NIC per accelerator to move data around.

And that’s exactly what Intel did with the integrated Omni-Path fabric controllers on their Xeon Phi lineup, back in 2016. It’s interesting to see that this may have been the right direction after all, just a decade too early.

@Robert, Omni-Path seems to offer two distinct morals of the story. As an implementation, making high-speed networking that leaned a bit closer to InfiniBand than to Ethernet, with an HPC focus, seems fairly forward-looking.

As an “Intel takes proprietary pet technology, sees tepid uptake in discrete adapters; gives tying it to Xeons a try” incident, it seems to have gone relatively poorly. It’s not technically dead, since they spun it off to some quiet little outfit that is still plugging away; but it essentially didn’t even live long enough to see InfiniBand get acquired into being basically Nvidia’s pet technology, closely tied to their compute products. Not that that necessarily would have helped it, given Intel and Nvidia’s relative fortunes at the time.

The contrast is especially striking vs. the (relative to the size of the intended system) fairly fat chunk of embedded Ethernet that some of the Xeon-D and datacenter Atom-based parts get. That seems to be quite popular, but it is just Intel taking advantage of an integration opportunity and letting you use QAT within the product software if you want lower CPU utilization, rather than trying to use Xeons as leverage to promote the Optane of networking.