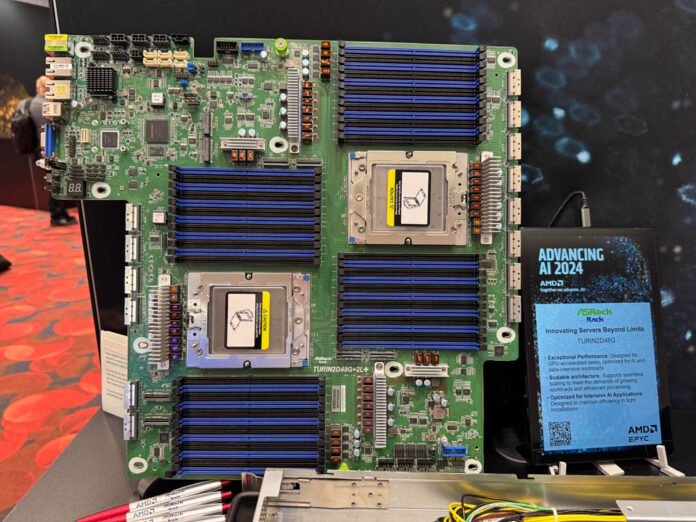

Yesterday Patrick saw a new and very cool motherboard. The ASRock Rack TURIN2D48G is a huge board capable of handling two 500W TDP Turin-generation CPUs, each with a full set of 24 DIMMs. That means it has 48 DIMM slots in total across the two sockets.

ASRock Rack TURIN2D48G 48 DIMM Motherboard Launched

Walking around AMD’s AI event after the AMD EPYC 9005 Turin launch, we spotted this motherboard. We see many motherboards with 24 total DDR5 DIMM slots, but very few with 48. The reason is simple: 24 DIMMs and two CPU sockets barely fit across the width of a server chassis. To fit this many DIMMs, the CPU sockets need to be offset, which creates more challenges for PCB designers. Even with that, some folks just want a lot of memory, and that is what they get here.

We found TURIN2D48G-2L+ specs on the ASRock Rack website:

- TDP up to 500W

- Proprietary (18″ x 16.9″)

- Dual Socket SP5 (LGA 6096), supports AMD EPYC™ 9005/9004 (with AMD 3D V-Cache™ Technology) and 97x4 series processors

- 24+24 DIMM slots (2DPC), supports DDR5 RDIMM, RDIMM-3DS

- 12 MCIO (PCIe5.0 / CXL2.0 x8), 4 MCIO (PCIe5.0 / CXL2.0 x8 or 8 SATA 6Gb/s)

- 2 MCIO (PCIe5.0 or 8 SATA 6Gb/s), 2 MCIO (PCIe5.0 x8)

- 1 SlimSAS (PCIe3.0 x2)

- Supports 2 M.2 (PCIe3.0 x4 or SATA 6Gb/s)

- Up to 34 SATA 6Gb/s

- 2 RJ45 (1GbE) by Intel® i350

- Remote Management (IPMI) (Source: ASRock Rack)

Supporting 500W TDP CPUs is important because it means the board can run the top-end 192-core AMD EPYC 9965 we featured.

Final Words

Something neat about this motherboard is that, beyond supporting a huge number of cores and DIMMs, it also offers something Intel does not have. The Intel Xeon 6900P also has 12-channel memory, but it can only support 1 DIMM per channel. As a result, the 12-channel 2DPC design gives AMD EPYC Turin platforms an advantage over Intel Xeon platforms that goes beyond the maximum raw core count. It is also cool to see such a large motherboard!
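For readers who want to sanity-check the numbers, the slot count is just sockets times memory channels times DIMMs per channel. Here is a minimal Python sketch of that arithmetic. The function names and the module sizes in the loop are our own illustrative assumptions, not values from the ASRock Rack spec sheet, and the sketch says nothing about which module capacities the board actually supports.

```python
# Slot-count and capacity arithmetic for a dual-socket, 12-channel, 2DPC board.
# The constants below come from the article; everything else is illustrative.
SOCKETS = 2               # dual Socket SP5
CHANNELS_PER_SOCKET = 12  # 12-channel DDR5 per CPU
DIMMS_PER_CHANNEL = 2     # 2DPC


def dimm_slots(sockets=SOCKETS, channels=CHANNELS_PER_SOCKET, dpc=DIMMS_PER_CHANNEL):
    """Return the total number of DIMM slots on the board."""
    return sockets * channels * dpc


def total_capacity_gb(module_gb, slots=None):
    """Return total memory in GB if every slot holds a module of module_gb."""
    if slots is None:
        slots = dimm_slots()
    return slots * module_gb


if __name__ == "__main__":
    print(f"DIMM slots: {dimm_slots()}")
    # Hypothetical module sizes, chosen only to show the scaling.
    for module_gb in (64, 128, 256):
        print(f"{module_gb} GB modules -> {total_capacity_gb(module_gb)} GB total")
```

Calling `dimm_slots(dpc=1)` gives 24, which matches the more common 24-slot dual-socket boards and the 1DPC limit mentioned above.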

Me: I’d like the double Turin combo please.

ASRock: Would you like to super size the RAM?

Would SAP HANA and other in-memory databases be the main application where a good trade is twice the memory at 26 percent less bandwidth?

Someone should ask Mr Turin what happened to MR DIMM.

@Eric Olson

MR DIMM as in the JEDEC standard is not ready yet. Intel is using their own proprietary MCR DIMM rebranded as “MR DIMM” which will not be compatible with the industry JEDEC standard.

Wait… so it could have 48 slots of DDR5 directly… and another dozen CXL2.0 devices stuffed full of DDR4?

And the maximum supported memory capacity in this configuration is exactly what?

Well, this is one way to address the layout problem regarding having 48+ DIMMs on a motherboard. I’m a little surprised they don’t just put the two processors one in front of the other with the memory sets neatly lined up vertically along the long dimension of the board. That way they’d have extra room for more DIMMs on either side of both processors, possibly enough for a good 6 or 8 more DIMMs per CPU. Anyway, very cool server board.

@Stephen Beets

Yabbut, 6 or 8 more would be weird when you have 12 memory channels. Also you need to consider the strain on the memory controller.

And in 2024 we’re still putting GigE ports on systems like this. Sigh.

@snek

Yes, that’s true. But the layout I’m suggesting would allow for future processors that support 16 or possibly even 20 channels of memory. They’d still be able to do only 12 channels per CPU with my revised layout. They’d just have a good bit of extra space along the left and right edges for all those VRMs and glue logic chips. And when they get to 16-channel memory I/O, those VRMs and glue logics can be re-arranged to the top and bottom edges of the CPU and DIMM blocks, with room left over for all the MCIO and NVMe connectors for everything else. They’re already using an 18-inch wide proprietary board anyway, might as well use that space. Either way, the internal volume of server frames is going to have to increase unless the engineers make the decision to use multi-channel RAM modules with local memory controllers on them as has been done before in some of IBM’s mainframes and POWER architecture servers.

@snek

Plus, it looks nicer and more symmetrical and I like a symmetrical looking board layout. :-)

With this much power, 10/25Gbit ports should really be standard. But up to 24TB of RAM is quite a statement (if you use 512GB modules).