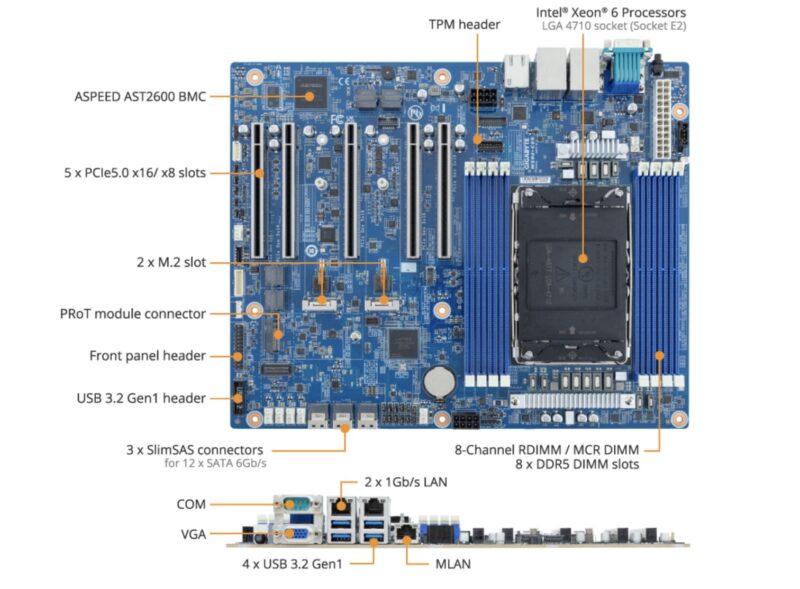

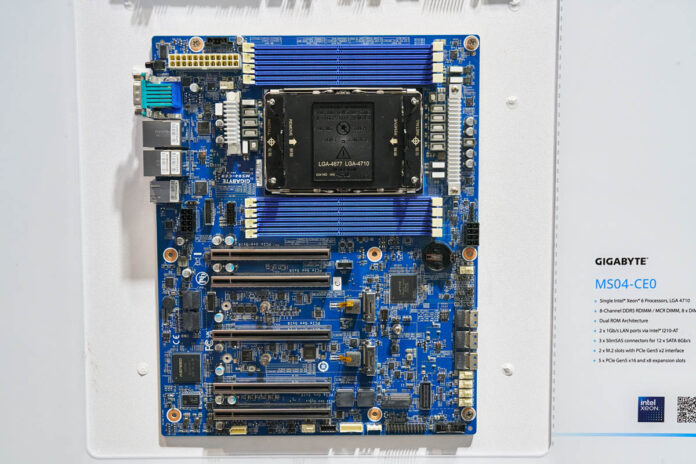

Earlier this month at Computex 2024, Gigabyte showed off the MS04-CE0, a new motherboard for the Intel Xeon 6 processor line. More specifically, it is for the LGA4710 SP parts, not the larger LGA7529 Xeon 6 AP socket. As a result, Gigabyte fits a lot of features onto a compact motherboard.

Gigabyte MS04-CE0 Intel Xeon 6 Motherboard Shown

The MS04-CE0 is single-socket only and supports only one DIMM per channel (1DPC). Still, those limits allow the board to fit in a standard ATX form factor, which is much smaller than many of the single-socket EATX motherboards we see these days.

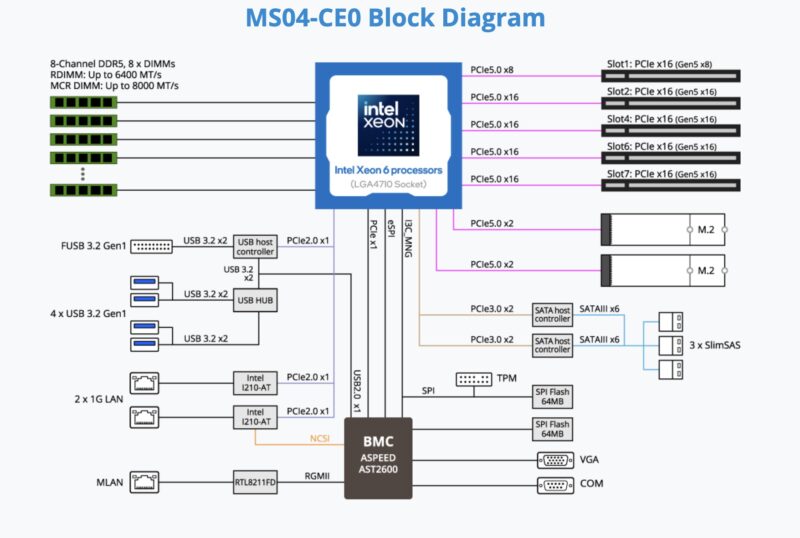

The motherboard has four PCIe Gen5 x16 slots and one x16 slot that is wired x8 electrically. There are also two PCIe Gen5 x2 M.2 slots onboard.
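As a rough sanity check, the slot list above can be tallied into the number of CPU PCIe Gen5 lanes it consumes. This is a minimal sketch that counts only the slots and M.2 sockets named in the article; it deliberately ignores the NICs, BMC, and SATA controllers, and it does not assert a per-SKU lane limit, which should be checked against Intel's specifications for the specific processor.

```python
# Tally of CPU PCIe Gen5 lanes used by the expansion slots listed in the article.
# Each entry: (description, number of devices, electrical lanes per device).
EXPANSION = [
    ("PCIe Gen5 x16 slot", 4, 16),
    ("PCIe Gen5 x16 slot wired x8", 1, 8),
    ("M.2 PCIe Gen5 x2 slot", 2, 2),
]

def total_lanes(devices):
    """Sum electrical lanes across (name, count, width) entries."""
    return sum(count * width for _, count, width in devices)

if __name__ == "__main__":
    for name, count, width in EXPANSION:
        print(f"{count} x {name}: {count * width} lanes")
    print("Total:", total_lanes(EXPANSION))
```

With these numbers the slots and M.2 sockets alone account for 76 lanes, which helps explain why the remaining I/O is kept narrow.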

Gigabyte is also using lower-speed Intel i210-AT 1GbE networking here.

Looking at the block diagram, one of the more interesting details is that there are two PCIe Gen3 x2 SATA host controllers, each providing six SATA III ports. Those twelve SATA ports are routed to three SlimSAS x4 connectors. That SATA configuration is very different from previous generations, where a PCH typically provided the SATA connectivity and served as the attach point for components such as the ASPEED AST2600 BMC and its management LAN. Since Xeon 6 does not use a PCH, the motherboard has a different layout.
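The port counts in the block diagram work out neatly: two controllers with six ports each give twelve SATA ports, and a SlimSAS x4 connector carries four SATA links, so three connectors are needed. The sketch below is hypothetical: it assumes ports are assigned to connectors in order, which means one connector would be shared by both controllers. The actual MS04-CE0 wiring is not described in the article and may differ.

```python
# Hypothetical SATA-port-to-SlimSAS mapping; assumes in-order assignment,
# which is NOT confirmed for the real board.
CONTROLLERS = 2            # PCIe Gen3 x2 SATA host controllers (from the article)
PORTS_PER_CONTROLLER = 6   # SATA III ports per controller (from the article)
PORTS_PER_SLIMSAS = 4      # a SlimSAS x4 connector carries four SATA links
UPLINK_LANES = 2           # each controller sits behind a PCIe Gen3 x2 link

def map_ports():
    """Return {connector_index: [(controller, port), ...]} assigned in order."""
    mapping = {}
    flat = [(c, p) for c in range(CONTROLLERS) for p in range(PORTS_PER_CONTROLLER)]
    for i, port in enumerate(flat):
        mapping.setdefault(i // PORTS_PER_SLIMSAS, []).append(port)
    return mapping

if __name__ == "__main__":
    m = map_ports()
    for conn, ports in m.items():
        print(f"SlimSAS {conn}: {ports}")
    print("Connectors needed:", len(m))
    print("Gen3 lanes used by SATA uplinks:", CONTROLLERS * UPLINK_LANES)
```

Under this assumed ordering, the middle connector would carry the last two ports of the first controller and the first two ports of the second.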

Final Words

Overall, this is a great motherboard to see. While we focused on the 144-core Sierra Forest parts for our initial Xeon 6700E review, there are lower-core-count SKUs that still offer solid core counts at lower cost, and those might be a great fit for this motherboard. Also, if you still have a fleet of Xeon E5 v4 and earlier servers, you can consolidate them into far fewer single-socket nodes like this one while using less power.


A board of compromises.

PCI-Express 3.0 x2 is ~2 GB/s, and each of those links feeds a 6-port SATA III controller. Six SSDs at ~550 MB/s each add up to ~3.3 GB/s. For HDDs it doesn't matter, but for SSDs it is definitely a bottleneck.
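The arithmetic in that comment can be checked quickly. This sketch uses the PCIe 3.0 signaling rate (8 GT/s per lane) and 128b/130b encoding, and takes the commenter's ~550 MB/s per SATA SSD as an assumed typical figure; it ignores packet and protocol overhead, so real-world uplink throughput would be somewhat lower.

```python
# Back-of-envelope check of the SATA uplink bottleneck.
PCIE3_GT_PER_LANE = 8.0      # PCIe 3.0 signaling rate, GT/s per lane
PCIE3_ENCODING = 128 / 130   # 128b/130b line encoding
SATA_SSD_MB_S = 550          # assumed typical SATA III SSD sequential throughput

def pcie3_gb_s(lanes):
    """Raw PCIe 3.0 bandwidth per direction in GB/s, ignoring packet overhead."""
    return lanes * PCIE3_GT_PER_LANE * PCIE3_ENCODING / 8

def sata_demand_gb_s(drives, mb_s=SATA_SSD_MB_S):
    """Aggregate sequential demand of `drives` SATA SSDs in GB/s."""
    return drives * mb_s / 1000

if __name__ == "__main__":
    uplink = pcie3_gb_s(2)
    demand = sata_demand_gb_s(6)
    print(f"Gen3 x2 uplink: {uplink:.2f} GB/s")
    print(f"6 SSD demand:   {demand:.2f} GB/s")
    print(f"Per-drive share with all six busy: {uplink / 6 * 1000:.0f} MB/s")
```

Six SSDs streaming at once would each see roughly 330 MB/s, well below their ~550 MB/s ceiling, while a handful of HDDs would not come close to saturating the link.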

The M.2 slots are also x2 instead of x4. There aren't many PCIe 5.0 enterprise M.2 drives yet, so the x2 links are going to limit the popular 4.0/3.0 ones.
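The limitation comes from how PCIe links train: the link runs at the lowest generation and narrowest width both ends support. This sketch applies that rule to a Gen5 x2 slot and an x4 drive of each generation, using nominal per-lane rates and 128b/130b encoding (protocol overhead ignored).

```python
# Negotiated link for an M.2 drive in a Gen5 x2 slot.
# A PCIe link trains to the lower generation and narrower width of the two ends.
GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}  # GT/s per lane by PCIe generation
ENCODING = 128 / 130                      # 128b/130b for Gen3 through Gen5

def link_gb_s(slot_gen, slot_width, drive_gen, drive_width):
    """Return (gen, width, GB/s per direction) of the negotiated link."""
    gen = min(slot_gen, drive_gen)
    width = min(slot_width, drive_width)
    return gen, width, width * GT_PER_LANE[gen] * ENCODING / 8

if __name__ == "__main__":
    for drive_gen in (3, 4, 5):
        gen, width, bw = link_gb_s(5, 2, drive_gen, 4)
        full = link_gb_s(drive_gen, 4, drive_gen, 4)[2]
        print(f"Gen{drive_gen} x4 drive -> Gen{gen} x{width}: "
              f"{bw:.2f} GB/s (vs {full:.2f} GB/s in an x4 slot)")
```

A Gen4 x4 drive ends up at Gen4 x2, roughly half its potential bandwidth, which is the commenter's point; only a Gen5 drive recovers Gen4 x4-class throughput from the x2 link.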

Depending on the use case it might matter or it might not.

Weird that they went with 1Gb NICs instead of an Intel 10Gb controller, along with all the other compromises noted. Nice to see the PCIe slots, though.

@Dr_b_

This board screams SOHO/low-power/management node to me… and in those roles 10GbE may not be needed, yet it would draw more power even when running at 1Gb.

Besides, it has enough slots to add a NIC of one's choosing, i.e. one certified for one's infrastructure. If it were a 1U-focused board, it would be a different discussion.

I would rather have the PCIe lanes exposed as slots than spent on an embedded controller that may go unused because of its driver stack.