This is probably the worst title for an article, but let us call it what it is: MLPerf Training V4.0 is boring. That is not being said in a good way. While NVIDIA showed that the H200 is faster than the H100, which is no surprise, there was not a lot of comparable data. Still, there is a single interesting data point that we can compare. MLPerf Training V4.0 had an NVIDIA GeForce RTX 4090 pitted against an AMD Radeon RX 7900 XTX.

NVIDIA MLPerf Training V4.0 is Out and Very Boring

One of the big challenges with the current MLPerf Training is that it has effectively become an NVIDIA benchmark exercise. We know the AMD Instinct MI300 is a multi-billion dollar product. We know Cerebras is at over a billion-dollar run rate. In the cloud segment, companies like AWS have their own training processors, but they are absent. Google submits TPU results but does not sell the TPU outside of its cloud, so it is not competing with NVIDIA.

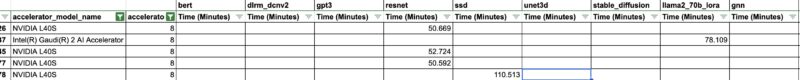

There were two Intel results, using Gaudi 2, not Gaudi 3. Those results are not useful for comparison either. Just for some perspective on this, eight Gaudi 2 accelerators with the scale-out networking onboard the UBB cost $65,000. That puts the pricing closer to the NVIDIA L40S that we reviewed. One might then look at the results to see if the Gaudi 2 can compare to the L40S.

Both of these are more inferencing solutions, but they are in the training benchmark. The L40S has resnet and one SSD result. The Gaudi 2 has a llama2 70b result. There is nothing to compare here since they are running different benchmarks. This is probably the best on-prem comparison, and there is nothing to compare. The problem is not limited to these two results. Across the 89 submitted configurations, the average configuration has results for only around 2 of the 9 benchmarks in the suite.

There is, however, one easily comparable result, and it is a fun one. There is a comparison between a 6-way NVIDIA GeForce RTX 4090 system and a 6-way AMD Radeon RX 7900 XTX system. On the resnet test, NVIDIA is around 37% faster. Given that the GPU price difference is larger than that performance gap, AMD comes out as the better value.
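For those who want to plug in their own pricing, here is a rough sketch of the value math. The only number taken from the results is the roughly 37% resnet advantage. The GPU prices are illustrative placeholders, not figures from the submission or quoted street prices, and the function names are made up for this example. The rule of thumb is simple: the slower card is the better value whenever the faster card costs more than 1.37x as much.

```python
# Rough performance-per-dollar comparison for the resnet result.
# NVIDIA_SPEEDUP comes from the article (around 37% faster).
# The prices below are ASSUMED placeholders; substitute real quotes.

NVIDIA_SPEEDUP = 1.37  # RTX 4090 throughput relative to RX 7900 XTX (= 1.0)


def perf_per_dollar(relative_throughput: float, price_usd: float) -> float:
    """Relative throughput delivered per dollar of GPU spend."""
    return relative_throughput / price_usd


def slower_gpu_is_better_value(
    speedup: float, fast_price_usd: float, slow_price_usd: float
) -> bool:
    """True when the slower GPU delivers more throughput per dollar.

    Equivalent to asking whether the price ratio exceeds the speedup.
    """
    fast_value = perf_per_dollar(speedup, fast_price_usd)
    slow_value = perf_per_dollar(1.0, slow_price_usd)
    return slow_value > fast_value


if __name__ == "__main__":
    # Hypothetical per-GPU prices, for illustration only.
    rtx_4090_price = 1600.0
    rx_7900_xtx_price = 1000.0

    price_ratio = rtx_4090_price / rx_7900_xtx_price
    amd_wins = slower_gpu_is_better_value(
        NVIDIA_SPEEDUP, rtx_4090_price, rx_7900_xtx_price
    )
    print(f"Price ratio: {price_ratio:.2f}x, speedup: {NVIDIA_SPEEDUP:.2f}x")
    print("Better value:", "AMD" if amd_wins else "NVIDIA")
```

This only looks at GPU purchase price on a single benchmark. It ignores the rest of the system, power, and software effort, so treat it as a back-of-the-envelope check rather than a buying guide.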

Final Words

The moral of the story is that AI training is H100 or H200. NVIDIA sells tens of billions of dollars of these, so that makes sense. Google is submitting TPU results for some reason, but the TPU does not really have competitors on the list since, outside of Google's own internal choices, it is not competing with anything else there.

Without any real competition, MLPerf Training is, more than ever, an NVIDIA benchmark. We know there are other solutions out there selling in lower volumes, but they are selling without submitting to MLPerf. The good news is that MLPerf Inference is usually much more interesting, but MLPerf Training might as well be tied to NVIDIA's new product introductions.

Have you asked the other vendors why they’re not submitting more complete results (or results at all)?

You’re in the position to do so but it seems like you mostly want to complain that the results don’t exist rather than ask someone that can actually give you the answer

John, I have, many times. The answer is that they do not need to submit to sell, so if an organization has limited engineering resources, they prioritize sales and customer support efforts over MLPerf. It has been happening for years and we have that tidbit in a number of articles. That covers why hardware is not being submitted, period (e.g. MI300A/MI300X).

On why not submit more benchmarks, the reason I have gotten is that they are using MLPerf just to show specific applications. MLPerf was designed to allow for single benchmark submissions, instead of requiring the whole suite. So if you are going through the work to optimize for a public benchmark, then you are only going to pick the ones you think are going to either show great performance or help you sell.

It all comes down to effort versus impact.

Patrik, no disrespect, but you look very naive.

1. The obvious reason AMD doesn’t participate is because they lose. It will hurt their message and marketing. And bigger theoretical numbers are the only argument they have…

2. When a benchmark suite has dozens of workloads, it becomes relevant and more difficult to optimize for. And if you do so, it’s just good practice that will translate into better performance in the real world.