Intel needs a Xeon shake-up, and that is finally happening. For over two years, ever since we published Intel Sierra Forest the E-Core Xeon Intel Needs, Sierra Forest has been on our radar as the most important part we have anticipated in Intel’s stable. Now dubbed an Intel Xeon 6, this is truly the first part of a massive Xeon transition at Intel. As I am writing this, I am also fairly convinced that the next-generation Clearwater Forest will be a much bigger commercial success, but I think it also would be wrong to sleep on Sierra Forest.

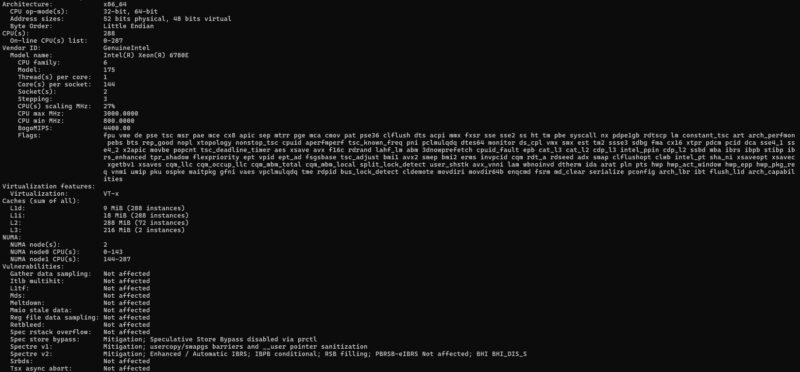

Let us just put it out there: 144 Xeon cores with DDR5 and more PCIe Gen5 in 250W TDP are here. These are E-cores without Hyper-Threading, an important trait when hyper-scaler’s own Arm designs shun SMT implementations. What is better is that these are x86 cores that are ready to transition legacy workloads without the fuss of changing architectures. As we will get into, Sierra Forest is not perfect, but it sets Intel Xeon down a new and fascinating path.

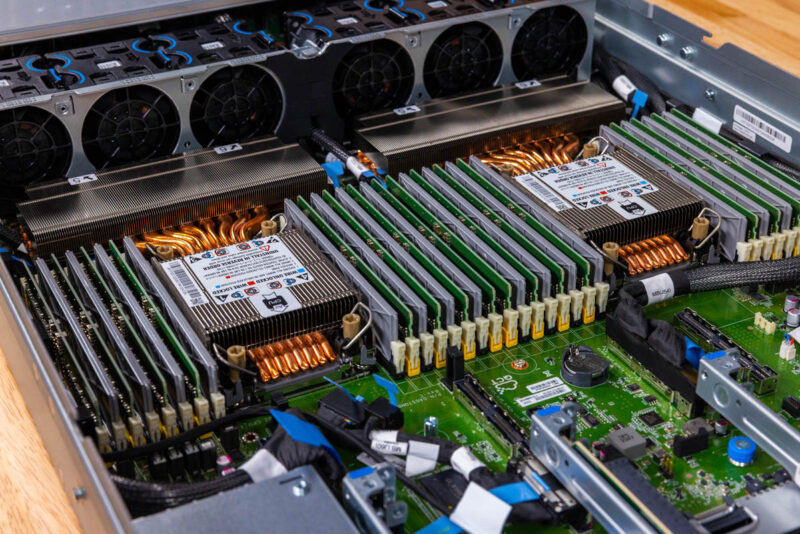

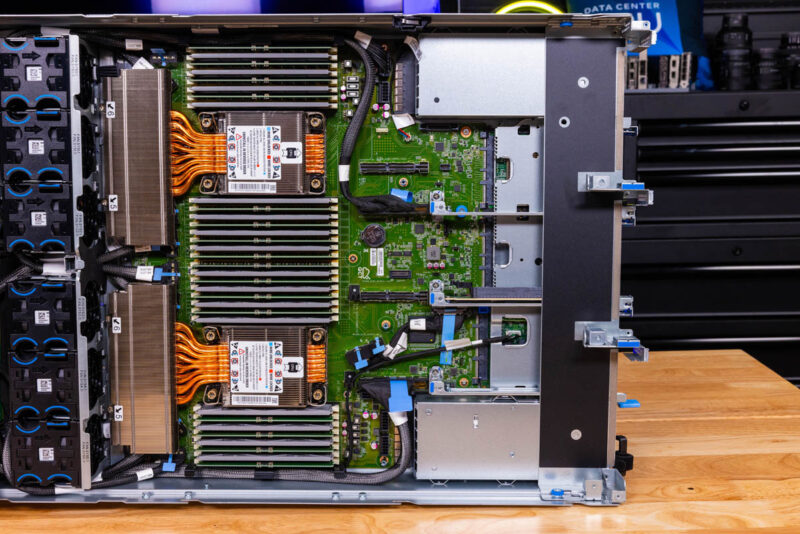

As a quick disclosure, we are going to say that Intel sponsored this, as one might imagine since we had access to the chips before the launch. Intel sent a QCT development platform to test on. Given the timing of getting the system only a few days before the launch, we had an SOS out to Supermicro for a second system to use, which ended up arriving the morning after the Intel-QCT machine. A quick thank you to all those involved in making this happen.

Update: Since this was a night launch, we waited until the morning to upload the video. Here it is for those who would prefer to watch or listen.

As always we suggest watching this in a dedicated tab, window, or app for the best viewing experience.

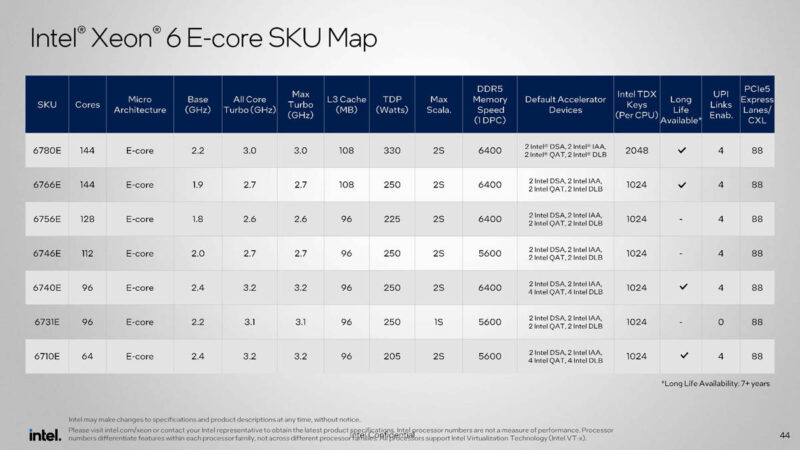

P-cores and E-cores. New platform features. Different platforms. There is a ton here, but for those who just want to know what the parts they can buy are, we are going to get to the Intel Xeon 6700E SKUs first. If you want to learn more about the P-core and E-cores, platform features, release schedule, and so forth, we will get to those next.

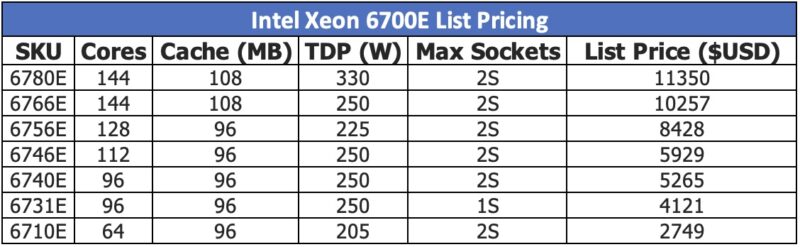

Intel Xeon 6700E SKU List

This is one of those launches where I think folks will focus on the top-bin parts, but there are some fascinating SKUs on the list. For the record, I think Intel has enough complexity with sockets, core types, MCR DIMM support, CXL Type-3 memory support, and so forth, that the whole differentiation using DDR5 memory speeds needs to just stop. Intel is in dire need of less complexity in decoding its products, not more.

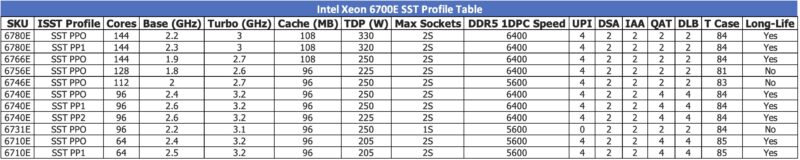

On the plus side, there are no more precious metal names and no more “Scalable,” which is a huge win on the branding side. These SKUs also have SST profiles for use in embedded spaces. Here is the table we found for that.

The “E” in Intel Xeon 6700E parts is to denote efficient cores versus the “P” parts for performance cores. At the top-end, there are 144 core Intel Xeon 6780E and 6766E SKUs each with 108MB of L3 cache.

Those SKUs are the ones we received to use for this piece.

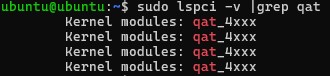

It is awesome that we can see two DSA, IAA, QAT, and DLB accelerators active in the parts but there are up to four available.

One part that you should not sleep on is that even with these accelerators and 144 cores, these are 330W and 250W TDP parts. A theme of this piece is going to be the concept of moving legacy VMs and microservices to E-cores from older-generation hardware. An Intel Xeon Gold 5218 was a mid-stack 16-core CPU from the 2019 era “Cascade Lake” 2nd Gen Intel Xeon Scalable with a 125W TDP. We are going to be talking about 4.5:1 to 9:1 consolidation ratios over something like that. When you see 250W, think that, in the worst case, you are saving 2.5x the power at the server level and likely a lot more than that.

This is going to be a rough one conceptually for folks. Still, anything 2nd Generation Xeon Scalable and older that is not heavily using AVX-512 is going to be hard to justify going forward. When we say E-core if you want to translate to P-core 5th Gen Xeons currently available for non-AVX-512 or AMX workloads, divide the E-core count by 1.8 to 2.0 or so, and you will be close to the same level of performance. More on that later.

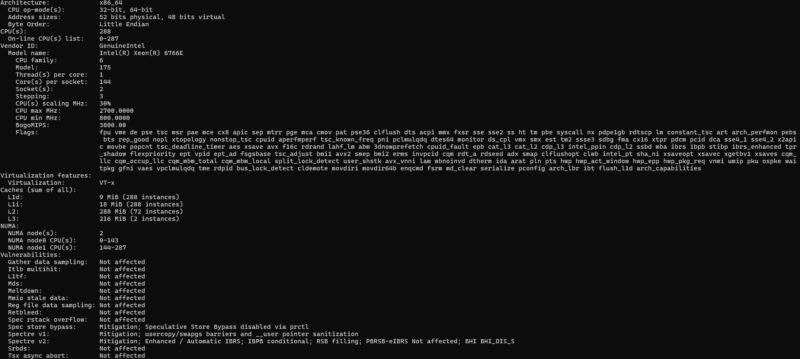

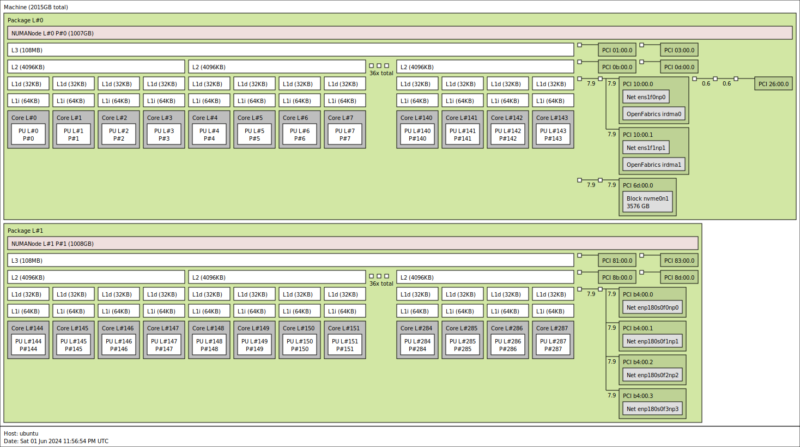

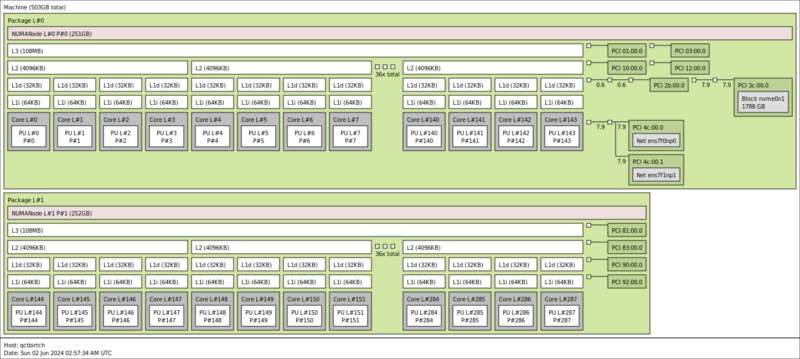

Here is the topology map for the 144-core Intel Xeon 6780E in a dual-socket configuration.

We can see the 4MB L2 cache blocks shared among four cores. That is the same as we can see looking at the Intel Xeon 6766E system, which makes sense since it should mostly be just a lower TDP and clock speed part.

Notably absent here is the I/O sitting off of a PCH because these are now PCH-less CPUs. Finally! AMD EPYC has been PCH-less since the original EPYC 7001 series in 2017. Intel has used its PCHs to provide lower-speed I/O, SATA, and so forth. There were even Lewisburg PCH versions with Intel QAT acceleration (and those PCHs also were on cards for PCIe QAT accelerators.)

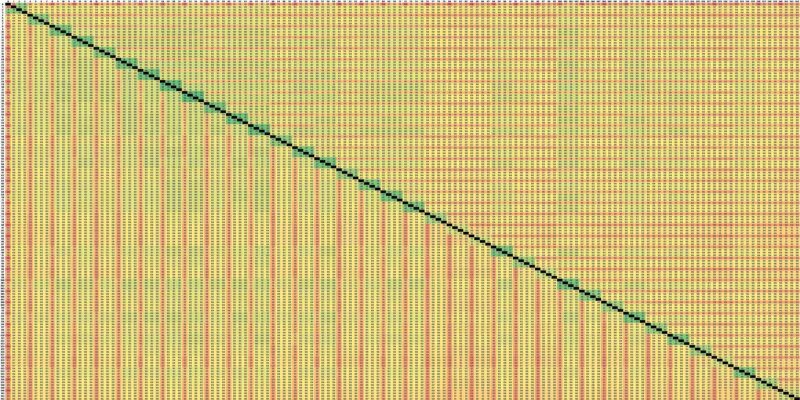

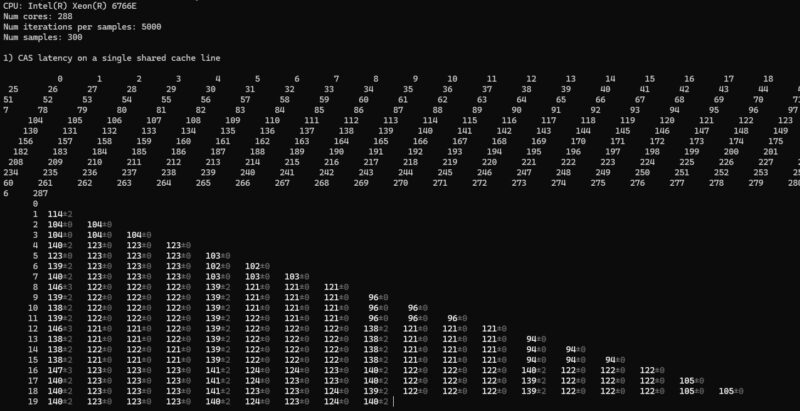

Doing a “quick” core-to-core-latency run, we can easily identify the clusters of four cores. The immediate clusters seem to be in the 86ns range. Most of the cores tend to fall in the 99-105ns distance. Then, for every four cores, we hit a different latency pattern in the 112-124ns range. It is really cool to hear an architectural feature of 4MB L2 cache per core and then see patterns repeating for clusters of four cores.

By “quick” this takes hours to run, so when you have only a few days with a system, we can only run it once. At the same time, you can easily see the four core L2 cache clusters.

The Intel Xeon 6766E was very similar to the Xeon 6780E, which appears to be a trait of the Sierra Forest design.

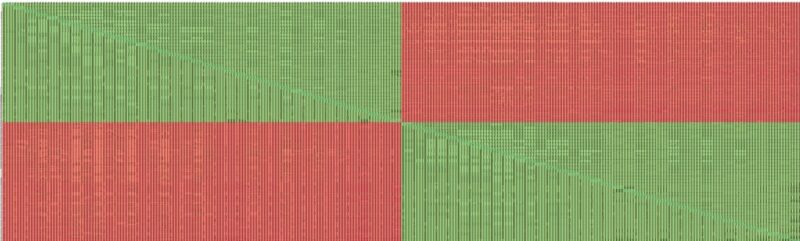

Socket-to-socket, the numbers go way up.

This is common among dual-socket systems. At the same time, the latency figures are not what we would expect to see from a P-core-only CPU these days. This is fair enough since we are trading maximum performance for density.

On the subject of pricing, Intel had the list pricing go live just after we published this piece so we have a ninja update. Here is the table.

Intel is in a bit of a pickle since the Intel Xeon 6780E provides more integer performance than its top-end Emerald Rapids by >20% and has more cores. As such, it needs a high list price. Still, the platform is going to be less expensive than larger socket designs, so keep that in mind at a system level. The 205W TDP 64 core Xeon 6710E part is also fascinating. If you have older 16 core and smaller servers, that is going to get a lot of the consolidation benefit at a low price.

Next, let us put this into the context of P-cores versus E-cores and the different platforms.

The 2S core-to-core latency image is too low-res to read unfortunately.

The discussion about needing more instances of the workload for a good kernel compile benchmark is exactly what the concepts of weak scaling vs. strong scaling in the HPC world are. It is a property is the application. If when using N cores, the calculation finishes in 1/Nth the time of a single threaded instance, it is said to have a strong scaling property. If when using N cores, the problem size is also N times bigger and the calculation finishes in the same time as the reference problem on 1 core, it is said to have a weak scaling property. In both cases perfect scaling means that using N cores is N times faster, but for weak scaling that only holds if you also increase the problem size.

I am curious about the PCH. If the PCH goes away will the SATA controller also move into the CPU or will the motherboard manufacturers have to add a SATA controller, or is SATA simply dead?

I see on the diagram the network cards, the M.2 and the other PCIe lanes, but I don’t see the SATA controller that usually lived in the PCH.

Or maybe there will be a simpler PCH?

I hope you’d do more of that linux kernel compile benchmark partitioning. That’s what’s needed for chips like these. If you’re consolidating from old servers to new big CC CPUs you don’t need an app on all cores. You need to take an 8 vCPU VM on old and run it on new. My critique is instead of 36 vCPU I’d want to see 8 vCPU

“Ask a cloud provider, and they will tell you that 8 vCPUs and smaller VMs make up the majority of the VMs that their customers deploy.”

As someone who runs a small cloud hosting data center I can confirm this. We have 9 out of 150VMs that have more than 8 vCPUs. Of those 9 I can tell you that they could easily be cut in half and not affect performance of the VMs at all. However, the customers wanted and are paying for the extra vCPUs so they get them.

OK, auto-refresh of page wiped my comment … a ahem great way to prevent any bigger posts. So will keep the rant and skip analytical part of the comment I wanted to post for others ..

——–

The conclusion is, well, Intel-sponsored I guess.

On one hand you give one to Intel, stating Sierra Forest is really positioned a step below Bergamo – which is correct.

Then, one paragraph later, you criticise Siena for the same fact – that it is positioned (even more so) below Sierra Forrest.

A lost opportunity.

For the Bergamo comparison -“but again, remember, a large double-digit percentage of all infrastructure is determined by the ability to place a number of VMs and their vCPUs onto hardware. 256 threads is more than 144, but without SMT that becomes a 128 v. 144 discussion.” That is such a contrived conclusion. I doubt how many service providers actually think like this/

Divyjot I work at a big cloud provider so my thoughts are my own. You might notice all the cloud providers aren’t bringing SMT to their custom silicon designs. SMT with the side channel attacks is a nightmare. You don’t see scheduling across different physical cores in large public clouds for this reason.

That conclusion that Bergamo’s Zen 4c is too much perf per core is also on target.

I’d say they did a great job, but I’d also say the 288 core is going to be a step up. I’d rather have 288 physical cores than 384 threads using SMT.

AMD needs a 256 core Turin Dense. What they’ve missed is that Intel offers more than twice the E cores than the P. We’re buying Genoa not Bergamo top end even with STH saying Bergamo is great because we didn’t want to hit that low cache case in our infrastructure. 96 to 128 is only 33% more. You’re needing to show a bigger jump in core counts. 128 to 192 is only 50% more. AMD needs 256.

I think this is just an appetizer for Clearwater Forest next year with Darkmont cores on Intel 18A. That would be a serious product for most workloads except ones requiring AVX512.

Oh wow, a truly rare unicorn here, a Patrick/STH article right out of the funny pages, which is great, everybody likes to laugh once in a while!

Hurray, cloud providers are getting more efficient. Meanwhile, I’m not seeing the costs for these low end minimalist servers going down. It’s impressive how many more cores and how much more RAM and how many more gigabits of networking you can buy per $ only for the price from year to year to stay the same…

It would be great if your benchmark suite reflected some more use cases to reflect the weird CPUs, especially for the embedded parts.

Things like QAT for nginx or an opensense router or Tailscale exit node or SMB server. I know they aren’t traditional compute tasks but they do need CPUs and it’s what most STH readers probably actually use the devices for.

@Patrick: Please stop with this ridiculous pro-Intel framing

You say that Bergamo is “above” Sierra Forest but they basically have the same list prices. The 9754S with 1T per core is even cheaper and I would have loved to see a comparison of that 1T1C for both AMD and Intel.

“What Intel has here is something like a Siena”: No, you really need to change your conclusion after Intel published their price list.

BTW

Bergamo 9754 is going for 5400 Euros (including 19% VAT) at regular retailers in Europe and 9734 for 3600 Euro. I really don’t think Bergamo will be “above” Sierra Forest even at “real world” prices for larger customers.

Forget AMD. I think this article is sponsored by Ampere or Arm. Ampere or Arm must have paid to not have its chips in these charts. Intel’s 1G E Core Xeon is more than 30% faster per core than the Altra Max M128 even with more cores in the same power. You’re also not being fair since Sierra’s using DDR5 so that’s gap for memory. PCIe Generation 5 is higher power and faster. So Intel’s 250W is being used some for that. 144 cores at 250W is amazing. We’ve got so much older gear and even still low utilization so BOTE math makes this a big winner. We’ve got renewal at the colo coming. I can’t wait to watch how they’ll take reducing 40 cabs to 4.

I think AMD’s faster on AVX512 but web servers will get much more benefit from QAT than they do AVX512. I don’t think that’s being taken into account enough. You’re handicapping Intel versus AMD by not using that.

If you do the math on the 9754S loss of threads that’s about 14% below the 9754. Intel’s got integer performance 25% above the 8594+ so you’d end up at 19% lower perf for the 6780E than the 9754S, not taking into account QAT which you should but it won’t work for integer workloads.

With that 19% lower performance you’ve got 12.5% more cores on Intel, so that’ll have a larger impact on how many vCPUs you can provision. You’re at a lower perf per core with Intel, but more vCPU capacity.

When we look at power though, that 6780E screenshot is 302W so it’s 58W less than the 360W TDP 9754S since AMD typically uses its entire TDP. That’s just over 16% less power. I’d assume that extra 28W is for accelerators and other chip parts.

So Intel’s 19% less perf than Bergamo without SMT at 16% less power. Yet Intel’s delivering 12.5% more vCPUs and that QAT if you’re enabling it for OpenSSL offload or IPsec will more than outweigh the 3% perf/power difference. I don’t think QAT’s as important on super computer chips, but in this market, it’s aimed directly in target workloads.

If you’re just going vCPU / power and don’t care about 20% performance, then the 6766E is the clear winner in all of this. We’ve got over 70,000 VM’s where I work and I can tell you that we are 97% 8 vCPU and fewer. Less than 15% of those VMs have hit 100% CPU in the last quarter.

What this article fails to cover is the 1 vCPU VM. If you’re a poor cloud provider like a tier 5 one maybe you’re putting two different VMs on a SMT core’s 2 threads. For any serious tier 1 or tier 2 cloud provider, and any respectable enterprise cloud, they aren’t putting 2 different VMs on the same physical core’s 2 threads.

I’d say this is a great article and very fair. I don’t think SF is beating AMD in perf. It’s targeting what we’ve all been seeing in the industry where there’s so many small VMs that aren’t using entire vCPU performance. It’s the GPU moment for Linux virtualization.

@ModelAirplanesInSeattleGuy

“more vCPUs”: Don’t know where you’re working but no company I’ve been at cares about just more VMs. It’s about cost(including power) and performance. We never consolidate to a server where the VMs don’t offer significant performance upgrades. It’s about future proofing.

“since AMD typically uses its entire TDP” : Like all CPUs it depends on the workload. Your calculation is worthless

Regarding QAT: What is the performance of these 2 QAT (at least Xeon 4th/5th gen platinum has 4 units) units when you use 144 VMs(like your example, or just 32) accessing QAT through SR-IOV? The fact that it’s hard to find any information on it shows that very few are using QAT despite all this talk. Anyone looking for such an extreme use case would use DPUs.

xeon 6 is new socket or it can be used in Sapphire rappids motherboards?

Xeon 6 processor is based on a new socket and is not compatible with the Sapphire Rapids motherboard/socket.

I wonder if it wouldn’t have made more sense to pursue higher dimension p-cores, instead of the me-too e-cores

Imagine a 32x P-core with 4 or 8 HTs each.