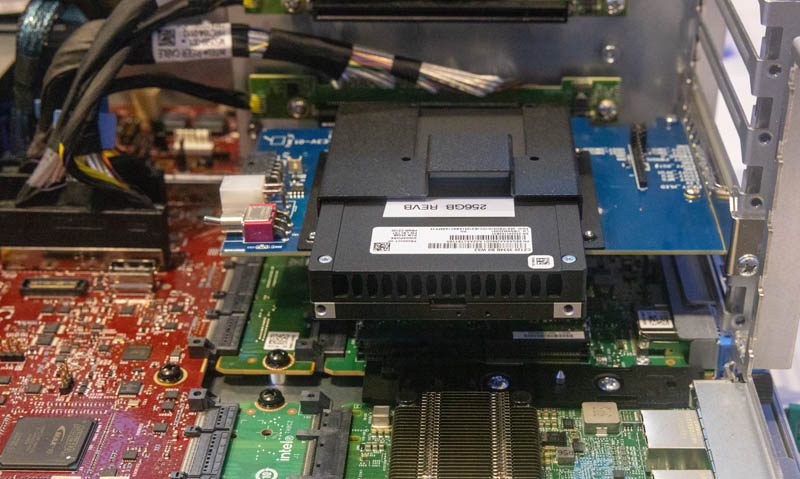

At Intel Vision 2024, we saw the new CXL memory expansion modules running. The Micron CZ120 modules are PCIe Gen5 x8 devices that come in 128GB and 256GB capacities. We saw these modules previously at FMS 2023, but now they are running on the Intel Xeon 6 platform.

Micron CZ120 CXL Memory Module Spotted at Intel Vision 2024

Months ago at FMS 2023, we saw a 256GB module working on a Sapphire Rapids/Emerald Rapids (SPR/EMR) platform.

At Intel Vision 2024, we saw more production-ready modules in 128GB and 256GB capacities, and we learned a number of really interesting things. First, Micron has been doing work to get these running in a striped mode. One use case for CXL memory is to expand memory capacity, but the other is to provide more bandwidth. To think about this logically, CXL 2.0 memory uses PCIe Gen5 lanes, so the aggregate memory bandwidth can be the DDR5 memory channels at full bandwidth plus the PCIe Gen5/CXL lane bandwidth. To do this, matching the capacity of the CXL 2.0 memory modules to the capacity of the RAM becomes important. Think of this like doing RAID 0 across SSDs, where you would want the SSDs to be the same capacity.
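To put rough numbers on that aggregate bandwidth idea, here is a minimal back-of-the-envelope sketch. It uses theoretical peak rates only: a 64-bit DDR5 channel moving 8 bytes per transfer, and PCIe Gen5 at 32 GT/s per lane with 128b/130b encoding, counted per direction. The example configuration borrows the RDIMM and CZ120 counts from the Micron footnote quoted in the comments below. The one-DIMM-per-channel layout is our assumption, the function names are purely illustrative, and real results will be lower once CXL protocol overhead and controller efficiency are included.

```python
# Back-of-the-envelope peak bandwidth math; theoretical numbers only.

def ddr5_channel_gbps(mt_per_s: float) -> float:
    """Peak GB/s of one 64-bit DDR5 channel (8 bytes per transfer)."""
    return mt_per_s * 1e6 * 8 / 1e9


def pcie5_link_gbps(lanes: int) -> float:
    """Peak GB/s of a PCIe Gen5 link, per direction.

    32 GT/s per lane with 128b/130b line encoding. CXL protocol
    overhead is ignored here, so real throughput will be lower.
    """
    raw_bits_per_s = 32e9 * lanes
    return raw_bits_per_s * (128 / 130) / 8 / 1e9


def aggregate(channels: int, mt_per_s: float, modules: int, lanes: int = 8):
    """Return (DDR5 GB/s, CXL GB/s, fractional uplift from adding CXL)."""
    ddr = channels * ddr5_channel_gbps(mt_per_s)
    cxl = modules * pcie5_link_gbps(lanes)
    return ddr, cxl, cxl / ddr


if __name__ == "__main__":
    # Assumption: 12 RDIMMs at 4800MT/s populated one per channel,
    # plus 4 x8 CXL modules, as in Micron's footnoted test setup.
    ddr, cxl, uplift = aggregate(channels=12, mt_per_s=4800, modules=4)
    print(f"DDR5 peak: {ddr:.1f} GB/s")
    print(f"CXL peak:  {cxl:.1f} GB/s per direction")
    print(f"Theoretical uplift: {uplift:.1%}")
```

Under those assumptions, the four x8 modules add about 27 percent on top of the DDR5 channels on paper. That is a ceiling rather than a measurement, but it shows why striping across DRAM and CXL is attractive for bandwidth and not just capacity.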

The other thing we learned is that the PCIe Gen5 x8 modules or E3.S 2T x8 modules offer a lot of flexibility over the Astera Labs Leo CXL Memory Expansion solution. As a lower-speed x8 interface, in theory, Micron could use DDR5 or even lower-cost DDR4 on the CXL device.

At the show, Micron and Intel had these running MLC on a future Intel Xeon 6 platform using engineering sample CPUs.

Final Words

CXL memory is one of the technologies we have been excited about for many years. We just got the updated Astera Labs Leo CXL memory expansion model, so expect to see more content on CXL coming soon. The current state of DDR5 memory is that there is physically only so much space on CPU packages for the pins that more memory channels need, and only so much motherboard space for more memory. As a result, those who want more memory and more memory bandwidth will have the option to use CXL soon.

Can CXL be the next HBM?

I’m really confused about this block in the article. (Separated by a picture)

“As a lower-speed x8 interface, in theory, Micron could use DDR5 or even lower-cost DDR4 on the CXL device.

At the show, Micron and Intel had these running MLC on a future Intel Xeon 6 platform using engineering sample CPUs.”

So it wasn’t even using RAM in the CXL modules, but MLC NAND FLASH?

I’d believe MLC in this context to be Memory Latency Checker, not MLC NAND.

Why is there a PSID? I feel Micron is not revealing all their cards yet.

P.S. STH reports “incorrect email address” for a valid address.

@Benito:

Quote from Micron’s site:

“Micron’s high-capacity, CXL-based memory expansion modules allow the flexibility to compose servers with more memory capacity and low latency to meet application workload demands, with up to 96% more database queries per day and 24% greater memory read/write bandwidth per CPU than servers using RDIMM memory alone.[2]”.

[2] MLC bandwidth using 12 x 64GB Micron 4800MT/s RDIMMs + 4 x 256GB CZ120 memory expansion modules vs. RDIMM only.