A few weeks ago, we looked at the cheap NICGIGA 10Gbase-T adapter. Within a few hours, Amazon sold out of the adapters (they are back in stock as we publish this piece). In the meantime, we saw another option in the $70-$75 range: the QFly 10Gbase-T adapter. It is a Marvell AQC113 10GbE card that is nearly identical to the one we saw previously.

QFly 10Gbase-T NIC Overview

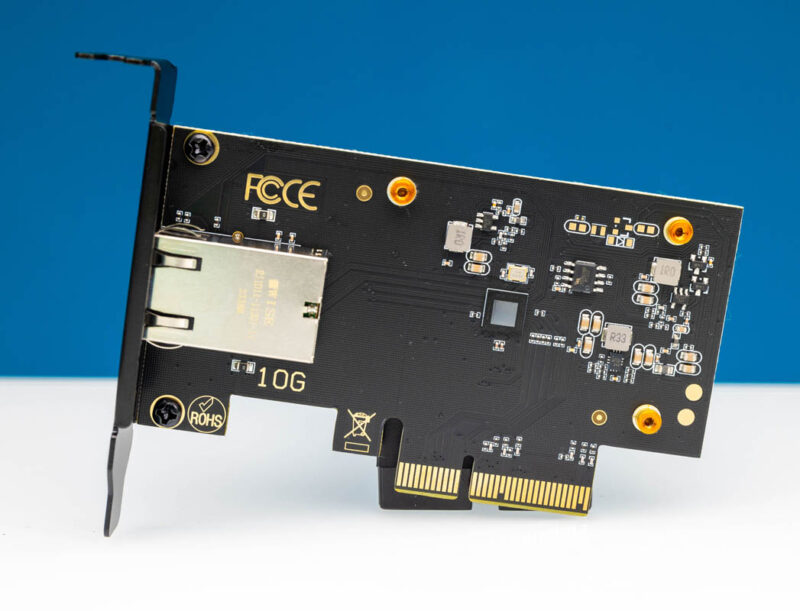

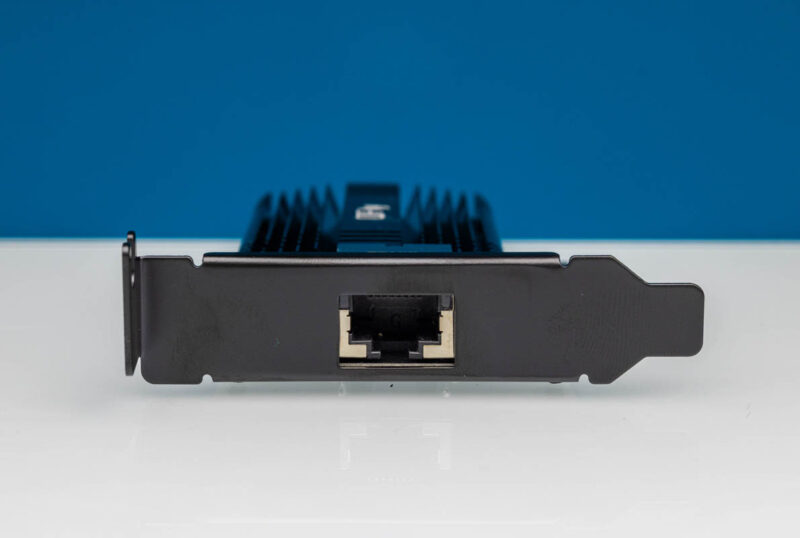

The card itself is a single-slot PCIe x4 card.

A modest heatsink is all that is needed to cool the Marvell AQC113C, since it is generally a part that draws under roughly 4-5W.

We also get both full-height and low-profile brackets.

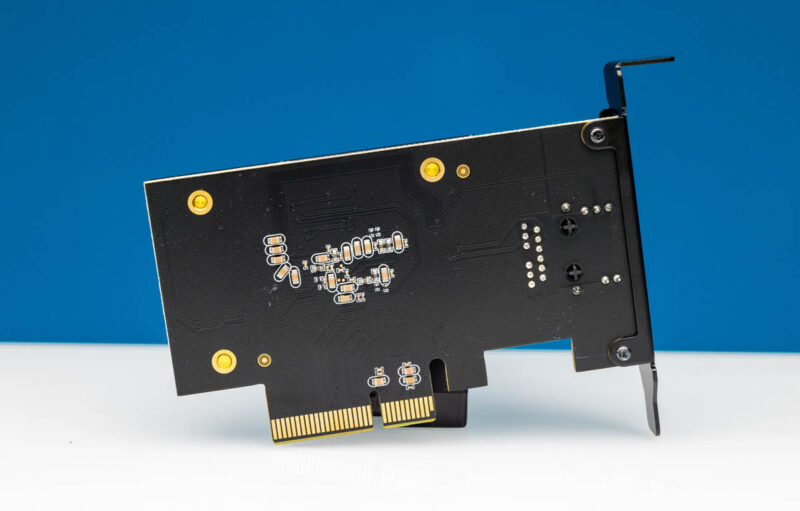

Here is the back of the PCB. There is not much going on here.

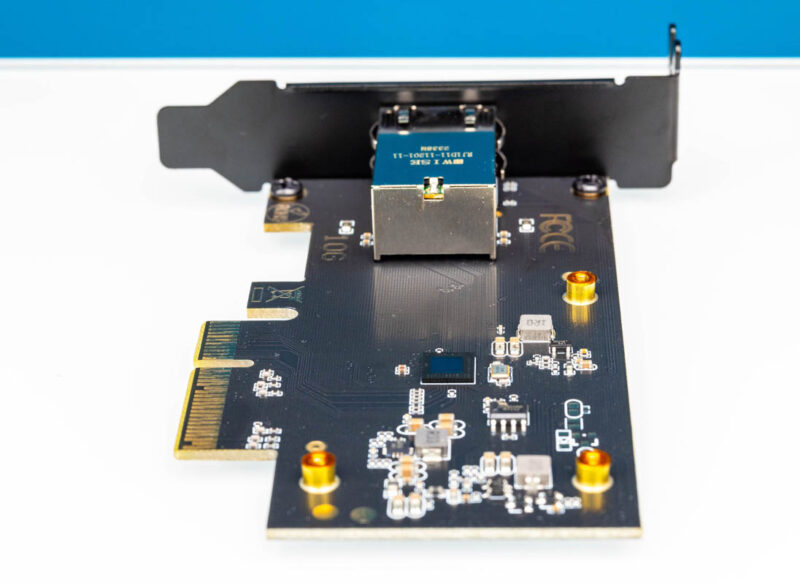

After we removed the three screws, the heatsink popped off, revealing a small thermal pad where it makes contact with the chip.

Again, there is not much going on here. This is a very simple design, which is part of what makes Marvell's Aquantia line popular with motherboard and system designers.

While the heatsinks differ, the boards themselves appear to be the same on both cards. We put the low-profile bracket on both and looked at them side by side.

They are so similar that they clearly come from the same factory.

QFly 10Gbase-T NIC Performance

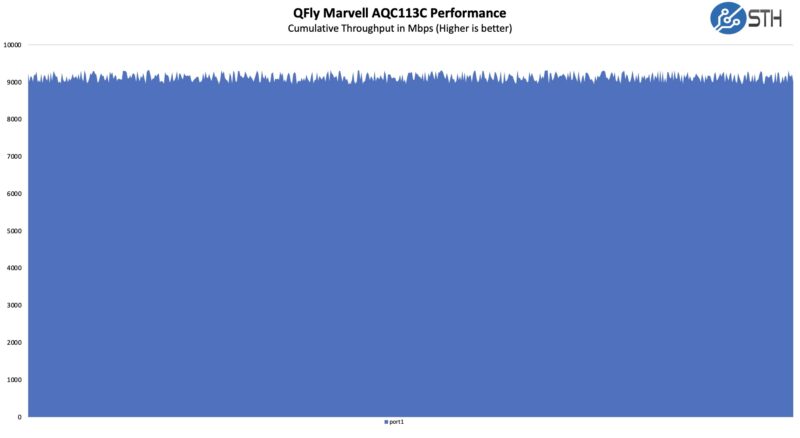

Overall, using iperf3, we got the 10Gbase-T speeds we would expect.
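If you want to run this kind of check yourself, iperf3 can emit its results as JSON with the `-J` flag. The sketch below pulls the summary throughput out of such a report. It assumes a TCP test, where the totals sit under `end.sum_sent` and `end.sum_received` as `bits_per_second`; the helper name `iperf3_gbps` is our own, and the embedded sample is hand-written to show the shape of the data, not our measured results.

```python
import json


def iperf3_gbps(report_json: str) -> tuple[float, float]:
    """Return (sent_gbps, received_gbps) from an `iperf3 -J` TCP report.

    Assumes the TCP report layout, where the run totals live under
    end.sum_sent and end.sum_received as bits_per_second.
    """
    report = json.loads(report_json)
    end = report["end"]
    sent = end["sum_sent"]["bits_per_second"] / 1e9
    received = end["sum_received"]["bits_per_second"] / 1e9
    return sent, received


if __name__ == "__main__":
    # Hand-written sample shaped like iperf3's JSON output.
    # Illustrative numbers only, not measurements from this review.
    sample = json.dumps({
        "end": {
            "sum_sent": {"bits_per_second": 9.0e9},
            "sum_received": {"bits_per_second": 8.9e9},
        }
    })
    sent, received = iperf3_gbps(sample)
    print(f"sent {sent:.2f} Gbps, received {received:.2f} Gbps")
```

In practice, running `iperf3 -s` on one machine and `iperf3 -c <server> -J > result.json` on the other would produce a report to feed into it.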

Again, this is what we would expect from the adapter, as it is similar to the other one we looked at.
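Why does "what we would expect" land a bit under 10Gbps? Ethernet framing and TCP/IP headers take a cut of every frame. Here is a back-of-the-envelope sketch, assuming IPv4, TCP timestamps enabled (52 bytes of IP plus TCP headers), no VLAN tag, and a 1500-byte MTU unless stated otherwise; `tcp_goodput_gbps` is an illustrative helper of our own.

```python
def tcp_goodput_gbps(line_rate_gbps: float = 10.0, mtu: int = 1500,
                     ip_tcp_headers: int = 52) -> float:
    """Upper bound on TCP payload throughput over Ethernet.

    Each frame costs the MTU plus 38 bytes on the wire: 14 B Ethernet
    header, 4 B FCS, 8 B preamble/SFD and a 12 B inter-frame gap.
    ip_tcp_headers defaults to 20 B IPv4 + 20 B TCP + 12 B timestamps.
    """
    wire_overhead = 14 + 4 + 8 + 12
    payload = mtu - ip_tcp_headers
    return line_rate_gbps * payload / (mtu + wire_overhead)


if __name__ == "__main__":
    print(f"MTU 1500: {tcp_goodput_gbps():.2f} Gbps")          # standard frames
    print(f"MTU 9000: {tcp_goodput_gbps(mtu=9000):.2f} Gbps")  # jumbo frames
```

With a standard 1500-byte MTU this works out to roughly 9.41 Gbps of TCP payload, and about 9.90 Gbps with 9000-byte jumbo frames, so an iperf3 result around 9.4 Gbps is about as good as 10Gbase-T gets without jumbo frames.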

Final Words

As a super low-power card, this is one we could add to a system with minimal impact on thermals. If you want to check compatibility with a specific OS, it is easy enough to look up the AQC113 that this card uses. That said, most modern OSes will support the NIC, since it started popping up in devices 2-3 years ago.
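On Linux, one quick check is to see which kernel driver has bound to the card. Below is a minimal sketch that walks sysfs. It assumes the standard `/sys/class/net/<iface>/device/driver` symlink layout and that the AQC113 is handled by the in-kernel `atlantic` driver, as other Aquantia/Marvell AQtion parts are; older kernels may predate AQC113 support. The function name `nics_by_driver` is hypothetical.

```python
import os


def nics_by_driver(net_root: str = "/sys/class/net") -> dict[str, str]:
    """Map each network interface to the kernel driver bound to it.

    Reads the <iface>/device/driver symlink in sysfs. Virtual interfaces
    such as `lo` have no device link and are skipped.
    """
    result = {}
    for iface in sorted(os.listdir(net_root)):
        driver_link = os.path.join(net_root, iface, "device", "driver")
        if os.path.islink(driver_link):
            # The link target ends in the driver name, e.g. .../drivers/atlantic
            result[iface] = os.path.basename(os.readlink(driver_link))
    return result


if __name__ == "__main__":
    for iface, driver in nics_by_driver().items():
        note = "  <- Aquantia/Marvell AQtion driver" if driver == "atlantic" else ""
        print(f"{iface}: {driver}{note}")
```

If the card shows up with no driver bound at all, that usually points to a kernel too old for the chip rather than a hardware fault.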

Again, nothing about this card feels very premium. At the same time, for $70-75, this might be an easy way to add 10GbE for folks who want faster networking without having to resort to ancient data center NICs that can use 8-10W more per card and do not support speeds like 2.5GbE.
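To put the 8-10W difference in perspective, here is a simple sketch of the yearly energy it adds, assuming the machine runs 24/7. The $0.15/kWh price is a hypothetical placeholder, not a figure from this review; substitute your local rate.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_kwh(extra_watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy consumed by a constant extra load over the given hours, in kWh."""
    return extra_watts * hours / 1000


def annual_cost(extra_watts: float, price_per_kwh: float) -> float:
    """Yearly cost of that extra load at a flat electricity price."""
    return annual_energy_kwh(extra_watts) * price_per_kwh


if __name__ == "__main__":
    PRICE = 0.15  # hypothetical $/kWh, an assumption for illustration
    for watts in (8, 10):
        print(f"{watts} W extra: {annual_energy_kwh(watts):.1f} kWh/yr, "
              f"about ${annual_cost(watts, PRICE):.2f}/yr at ${PRICE}/kWh")
```

At that assumed rate, 8-10W works out to roughly 70-88 kWh, or about $10-13, per card per year, before counting any extra cooling.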

Our best advice between the two that we have reviewed is simple: get whichever is cheaper and in stock.

If you want to see a quick video of the other version that shares this platform (just with a slightly different heatsink), you can find it on the new STH Labs YouTube channel.

We did not want to make a duplicate for this one.

Where to Buy

We purchased our unit from Amazon. Here is an affiliate link.

Note we participate in a number of affiliate programs, so we may earn a small commission if you buy one through this link. That helps us purchase more cards to review.

Note that the AQC113C has a design bug. Marvell issued a May 2022 notice that the 113C may fail to link up over PCIe during power-on, reboot, or sleep/wake in many PCs. Marvell has consequently discontinued this part, and QFly must have gotten them at a serious discount, not particularly caring about their users. If the card works for you, then you are one of the lucky ones.

Thomas, does that cover all the Apple Mac minis that use the same part?

I just found a dual-port 100Gb IB ConnectX-6 on eBay for $200, and I still have some 3km single-mode transceivers for it bookmarked at $33 each, which should be short enough range not to need attenuators between cards in the same building. Runs of fiber in the 30m range are cheap. ConnectX-6 cards offload practically everything from the CPU; they’ve even got onboard ASICs that can do line-rate Reed-Solomon code calculations to effectively turn them into RAID 6 controllers. They’re PCIe 3.0 x16, so even many of the low-lane-count consumer boards being sold these days should have a slot for them that won’t interfere with the GPU (assuming it doesn’t overlap every other usable slot) or the other… what is it, 4 lanes of Gen 4 or 5? Dual-port 200Gb cards are sub-$500 as well, but you won’t find cheap transceivers for those, and they have a daughtercard that lets them occupy 32 lanes, because that workaround was needed while Intel took their sweet time making anything with Gen 4 on it.

Going back further in time, dual-port PCIe 3.0 x8 40GbE cards are $22 a pop, and dual-port 40GbE / 56Gb FDR InfiniBand converged cards are $33. They’re Mellanox, so they’ll do a proprietary 56Gb Ethernet if you don’t want to mess with IB. That’s right: minus the transceivers and fiber, which just keep getting cheaper, you can have point-to-point 112Gb/s connections, or about enough for full bandwidth between two Gen 4 NVMe drives or four Gen 3 ones, for less than these pieces of trash, and I’d bet anything they won’t be hammering the CPU with interrupts as badly. Or 3 of the 40s and 3 linked servers. 56Gb Mellanox switches are selling for $120. This chip is integrated on my Threadripper board and doesn’t even support controllable DMA coalescing for r/w like the onboard gigabit Intel NIC… not to mention half the driver settings will just kill the connection, as if they were meant for a different chip.
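The “about enough” math for two Gen 4 or four Gen 3 NVMe drives can be checked by converting drive throughput from gigabytes to gigabits per second. This sketch assumes roughly 7 GB/s and 3.5 GB/s sequential reads for typical Gen 4 and Gen 3 x4 SSDs; those per-drive figures are our assumptions, not numbers from the comment.

```python
def gbytes_to_gbits(gbytes_per_s: float) -> float:
    """Convert a throughput in gigabytes per second to gigabits per second."""
    return gbytes_per_s * 8


# Assumed sequential-read ceilings for typical x4 NVMe SSDs (illustrative only).
GEN4_NVME_GBYTES_S = 7.0
GEN3_NVME_GBYTES_S = 3.5

# Two 56Gb ports (FDR InfiniBand or Mellanox 56GbE) run point-to-point.
link_gbits = 2 * 56

two_gen4_gbits = gbytes_to_gbits(2 * GEN4_NVME_GBYTES_S)
four_gen3_gbits = gbytes_to_gbits(4 * GEN3_NVME_GBYTES_S)

if __name__ == "__main__":
    print(f"Link:          {link_gbits} Gb/s")
    print(f"2x Gen 4 NVMe: {two_gen4_gbits:.0f} Gb/s")
    print(f"4x Gen 3 NVMe: {four_gen3_gbits:.0f} Gb/s")
```

Under those assumptions, both drive configurations come out at the same 112 Gb/s as the dual 56Gb link, which is where the “about enough” comes from.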

There’s literally no reason to pay this kind of criminal pricing for what should have been recycled as e-waste a decade ago, if motherboard manufacturers weren’t spending more time putting pretty-looking heatsinks and RGB headers on consumer equipment. I dunno about everybody else, but Metronet still hasn’t rolled out 2-5Gb internet speeds to my area, and I’m not planning on upgrading when they do unless the price drops to what gigabit costs now. Most websites throttle things too badly anyway. That just leaves the very few machines in a given setup that actually benefit from fast links, and those would almost always do better with something faster than 10GbE. The ones that can properly handle it / actually need it are going to be first-gen EPYC or 2S E5 v4 Xeons or something else that can be had very cheap.

Converting and running HDMI 2.1 across a home might be another use case for 56Gb, depending on how overpriced active optical HDMI cabling is. I personally stopped trusting it the second I saw them advertising that it would speed up gaming… using… elf magic, I guess? The delay induced by transceivers gives them worse latency than copper over short ranges, IIRC.

The only thing that keeps stopping me is the lack of any kind of support for RDMA file transfer on non-server Windows. You might think that when Pro for Workstations lists SMB Direct as one of only 3 distinguishing features (one of which is NVDIMMs, which are already dead) and is a $100 upgrade, you’d be able to run an SMB server over RDMA from it, but nope. And Server 2022 node pricing isn’t cheap. Luckily, since I almost never use the machine that’s running the file server anymore, installing Linux on it wouldn’t be too bad, except for the 3 weeks of hunting down the proper configurations for everything and working driver versions on the net while reading through post after post of people who spell out “FREE AND OPEN SOURCE SOFTWARE” every time they mention anything.

I’ve been a very happy user of the AQC107 predecessor and have long been waiting for a NIC that takes advantage of the PCIe 4.0 x1 form factor for full-range NBASE-T support… There are tons of PCIe 4.0 x1 slots out there that never get anything plugged into them, and wasting 4 lanes of PCIe on the usual AQC107 NICs (or an M.2 slot) is just too painful!
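The appeal of a PCIe 4.0 x1 NIC comes down to per-lane bandwidth. A minimal sketch, assuming 8 GT/s per lane for Gen 3, 16 GT/s for Gen 4, and 128b/130b encoding, while ignoring packet (TLP/DLLP) overhead, which lowers real-world throughput further; `pcie_link_gbps` is an illustrative helper of our own.

```python
# Per-lane signalling rates in GT/s; Gen 3 and Gen 4 both use 128b/130b encoding.
GT_PER_LANE = {3: 8.0, 4: 16.0}


def pcie_link_gbps(gen: int, lanes: int) -> float:
    """Per-direction link bandwidth after 128b/130b encoding, before TLP/DLLP overhead."""
    return GT_PER_LANE[gen] * lanes * 128 / 130


if __name__ == "__main__":
    for gen in (3, 4):
        bw = pcie_link_gbps(gen, 1)
        # NBASE-T / 10GBASE-T line rates that fit within one lane
        fits = [speed for speed in (2.5, 5, 10) if speed <= bw]
        print(f"PCIe {gen}.0 x1: {bw:.2f} Gb/s after encoding, fits {fits} Gb/s")
```

By this estimate, a Gen 3 x1 link (about 7.88 Gb/s per direction) tops out below 10GbE, while Gen 4 x1 (about 15.75 Gb/s) leaves headroom for the full NBASE-T range, which is why the x1 form factor only makes sense with Gen 4.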

Yet for some unfathomable reason, nobody seems to make an x1 NIC based on the 113.

Actually, any hardware with the 113 has been very hard to find, considering it’s been out there for years now.

@ThomasLloyd: if this is a real hardware bug, I’d be surprised if Marvell didn’t do a newer revision. They are still very much alone in that market segment, but like Broadcom, they may just not be interested in small fry anymore…